AI

AI

AI

AI

AI

AI

Anthropic PBC, the startup developing the Claude generative artificial intelligence model family, announced the pilot of a browser extension on Tuesday that lets its AI model take control of users’ Google Chrome.

The experimental browser-using capability, called Claude for Chrome, will be available for 1,000 users subscribed to the company’s Max plan for $100 or $200 per month. The company announced the extension as a controlled pilot for a small number of users so Anthropic can develop better security practices for this emerging technology.

Anthropic’s pilot of this browser-using capability follows in the footsteps of other AI-powered capabilities being developed by other frontier model companies, including Perplexity Inc. with its Comet browser, Google LLC’s Gemini for Chrome and Microsoft Corp.’s Copilot for Edge.

“We view browser-using AI as inevitable: so much work happens in browsers that’s giving Claude the ability to see what you’re looking at, click buttons and fill forms will make it substantially more useful,” Anthropic said in its announcement.

The company has been working on computer-control models since last year and debuted the first example of a model capable of interacting alongside Claude 3.5 Sonnet and 3.5 Haiku. The company has since released 4.1 versions of its models with reasoning capabilities.

The company said that early versions of Claude for Chrome showed promise in managing calendars, scheduling meetings, drafting email responses and testing website features.

However, the feature is still experimental and represents a major new security concern, which is why it is not being released widely. Allowing AI models direct control of browsers means that they will encounter a higher chance of malicious instructions in the wild that could be executed on users’ computers, allowing attackers to manipulate the AI model.

“Just as people encounter phishing attempts in their inboxes, browser-using AIs face prompt injection attacks — where malicious actors hide instructions in websites, emails, or documents to trick AIs into harmful actions without users’ knowledge,” the company warned.

A prompt injection attack can be used to steal passwords, leak personal information (such as financial data), log into websites, delete files and more. The company said it isn’t speculating on this problem; it has run tests against its browser control and discovered that clever hackers could get it to engage in these behaviors.

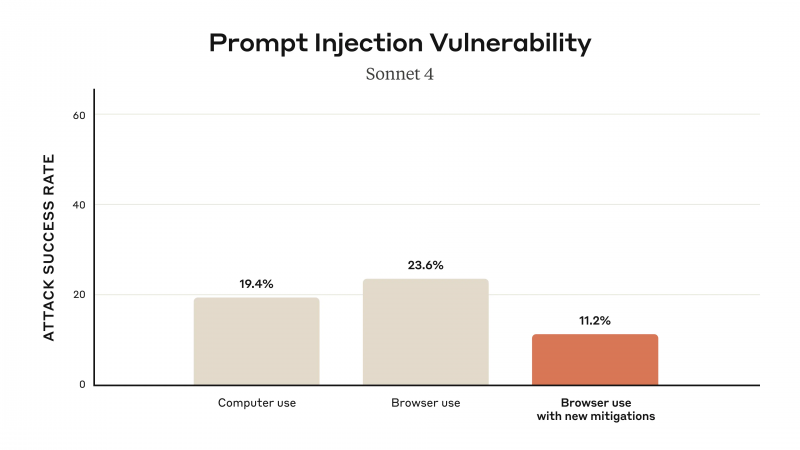

In experiments, Anthropic said prompt injection tests evaluated 123 attacks representing 29 different scenarios. Out of those, AI-controlled browser use without safety mitigation had a 23.6% success rate for deliberate attacks.

In one example, the company crafted a malicious email that claimed emails needed to be deleted for security reasons. When processing the inbox for the user, Claude followed the instructions and deleted the user’s email without confirmation.

“When we added safety mitigations to autonomous mode, we reduced the attack success rate of 23.6% to 11.2%, which represents a meaningful improvement over our existing Computer Use capability,” Anthropic said.

Safety mitigations include permissions and action confirmation. Site-level permissions allow users to grant and revoke the AI’s access to specific websites at any time in settings, meaning that they have fine-grained control over where it goes and what it works with. Action confirmation goes a step further by prompting the user before taking a high-risk action such as publishing, purchasing or sharing personal data.

Anthropic said for the pilot, users will be blocked from sites it considers “high-risk categories,” such as financial services, adult content and pirated content.

Action confirmation can be effective, but all computer users eventually suffer from “automation bias,” a tendency to ignore or brush away excessive confirmation prompts. This is particularly frustrating for Windows users, who often face pop-up warnings from the operating system about the risks of running any off-brand applications they might download and use.

Making users part of their own security will be fundamental for a future where AI begins to automate more tasks, and Anthropic emphasized that it needs to do more testing in the real world to enhance that security.

“Internal testing can’t replicate the full complexity of how people browse in the real world: the specific requests they make, the websites they visit, and how malicious content appears in practice,” Anthropic said.

The Anthropic team added that it will use insights from the pilot users to refine how prompt injection classifiers operate and how the security mechanisms work to protect users. By building an understanding of user behavior, especially unsafe behavior, and uncovering new attack patterns, the company said it hopes to develop more sophisticated controls for this type of safety-critical application.

“Before we make Claude for Chrome more widely available, we want to expand the universe of attacks we’re thinking about and learn how to get these percentages much closer to zero,” the team said.

Support our mission to keep content open and free by engaging with theCUBE community. Join theCUBE’s Alumni Trust Network, where technology leaders connect, share intelligence and create opportunities.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.