INFRA

Nvidia Corp. took to the stage at the 2025 OCP Global Summit in San Jose today to talk about how it’s collaborating with more than 70 partners on the design of more efficient “gigawatt AI factories” to support the next generation of artificial intelligence models.

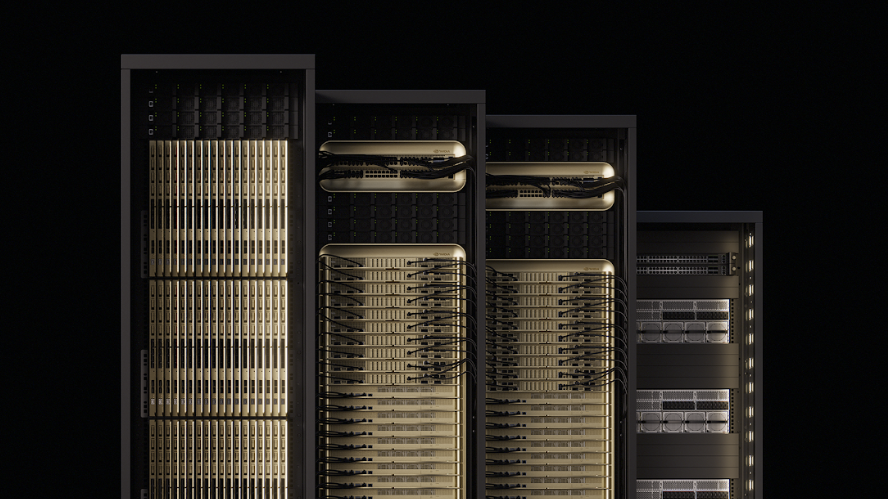

The gigawatt AI factories envisioned by Nvidia will utilize Vera Rubin NVL144, an open-architecture rack server based on a 100% liquid-cooled design. It’s designed to support the company’s next-generation Vera Rubin graphics processing units, which are expected to launch in 2027. Nvidia says the architecture will let companies scale their data centers rapidly, with a central printed circuit board midplane that enables faster assembly and modular expansion bays that allow networking and inference capacity to be added as needed.

Nvidia said it’s donating the Vera Rubin NVL144 architecture to the Open Compute Project as an open standard, so that any company will be able to implement it in its own data centers. It also talked about how its ecosystem partners are ramping up support for the Nvidia Kyber server rack design, which will ultimately be able to connect 576 Rubin Ultra GPUs when they become available.

Nvidia also got a boost from news that both Meta Platforms Inc. and Oracle Corp. plan to standardize their data centers on its Spectrum-X Ethernet networking switches.

The Vera Rubin NVL144 architecture is designed to support the roll-out of 800-volt direct current data centers for the gigawatt era, and Nvidia hopes it will become the foundation of new “AI factories,” or data centers that are optimized for AI workloads.

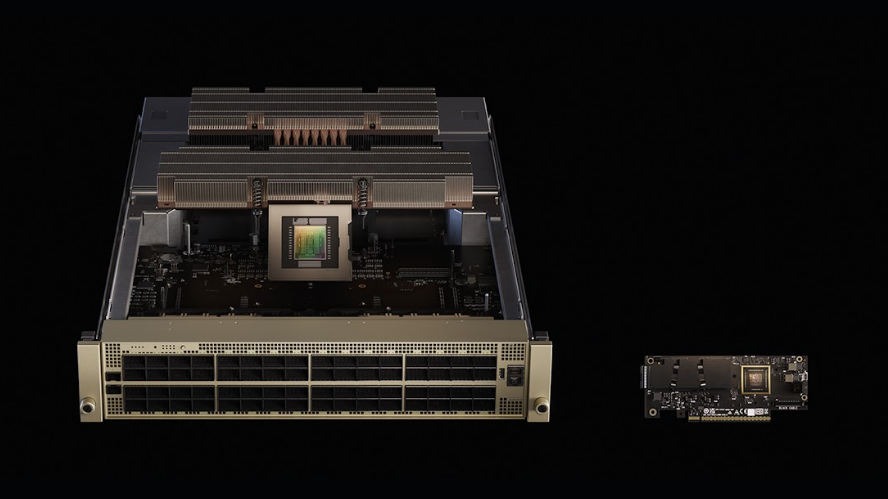

One of the main design innovations is the central printed circuit board midplane, which replaces traditional cable-based connections to enable more rapid assembly while making the systems easier to service and upgrade. The modular expansion bays help to future-proof the architecture, allowing data center operators to add Nvidia ConnectX-9 800GB/s networking and Vera Rubin GPUs to scale up their AI factories as demand for computational power and bandwidth grows. In addition, Vera Rubin NVL144 features an advanced 45°C liquid-cooled busbar to enable higher performance, along with 20 times more energy storage to keep the power supply steady.

Nvidia explained that Vera Rubin NVL144 is all about preparing for the future, with the flexible architecture designed to scale up over time to support advanced reasoning engines and the demands of autonomous AI agents. It’s based on the existing Nvidia MGX modular architecture, which means it’s compatible with numerous third-party components and systems from more than 50 ecosystem partners. With the new architecture, data center operators will be able to mix and match different components in a modular fashion in order to customize their AI factories.

At the Summit, more than 50 ecosystem partners announced their support for the Vera Rubin NVL144 architecture.

Nvidia also revealed the growing support for its Nvidia Kyber rack server architecture, which is designed to support the infrastructure that will power clusters of 576 Rubin Ultra GPUs. Like Vera Rubin NVL144, Nvidia Kyber features several innovations in 800 VDC power delivery, liquid cooling and mechanical design.

The company explained that the increased power demands of the upcoming Vera Rubin GPUs necessitate a revamped energy distribution system. It said the most effective way to deliver more power without ever-thicker copper cabling is to increase the voltage, which is why it’s moving away from traditional 415-volt and 480-volt three-phase alternating current systems in favor of a new 800 VDC architecture. With this system, Nvidia said, it will be possible to transmit 150% more power through the same copper wires.
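The voltage argument comes down to basic circuit physics. For a fixed power P, the current a conductor must carry is I = P / V, and the heat lost in that conductor is I²R, so raising the voltage cuts the current proportionally and the resistive losses by the square of the voltage ratio. The Python sketch below assumes a simplified single DC circuit with a hypothetical 1 MW load and a hypothetical 1 milliohm conductor; it ignores three-phase AC, power factor and conversion stages, so it shows the direction of the effect rather than reproducing Nvidia’s 150% figure.

```python
# Simplified single-circuit DC model (an assumption for illustration only):
# it ignores three-phase AC, power factor and power-conversion stages.

RACK_POWER_W = 1_000_000  # hypothetical 1 MW load
CONDUCTOR_OHMS = 0.001    # hypothetical 1 milliohm of copper conductor


def current_amps(power_watts: float, volts: float) -> float:
    """Current required to deliver a given power at a given voltage (I = P / V)."""
    return power_watts / volts


def resistive_loss_watts(power_watts: float, volts: float, resistance_ohms: float) -> float:
    """Heat dissipated in the conductor while delivering that power (P_loss = I^2 * R)."""
    current = current_amps(power_watts, volts)
    return current * current * resistance_ohms


def max_power_watts(volts: float, max_current_amps: float) -> float:
    """Power a conductor can carry at its current limit (P = V * I)."""
    return volts * max_current_amps


if __name__ == "__main__":
    for volts in (480, 800):
        amps = current_amps(RACK_POWER_W, volts)
        loss = resistive_loss_watts(RACK_POWER_W, volts, CONDUCTOR_OHMS)
        print(f"{volts} V: {amps:,.0f} A needed, {loss:,.0f} W lost as heat")

    # At the same current limit, usable power scales linearly with voltage.
    ratio = max_power_watts(800, 1000) / max_power_watts(480, 1000)
    print(f"Capacity ratio 800 V / 480 V: {ratio:.2f}")
```

Both effects point the same way: for the same copper, a higher voltage either carries more power at the same current or the same power with far less waste heat.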

Nvidia Kyber also supports increased rack GPU density to maximize the performance of AI infrastructure. It introduces a new design that rotates compute blades vertically, like books on a shelf, in order to fit 18 compute blades in a single chassis. At the same time, purpose-built Nvidia NVLink switch blades are integrated at the back through a cableless midplane to scale up connectivity between the GPUs.

Nvidia said Kyber will become a “foundational element” of future hyperscale data centers, with superior performance, greater reliability and enhanced energy efficiency, able to support the anticipated advances in AI over the coming years.

The impact of Vera Rubin NVL144 and Kyber probably won’t be felt for a couple of years yet, but Nvidia says Meta and Oracle will see more immediate gains following their decision to utilize its Spectrum-X Ethernet switches (pictured) in their existing and future data centers.

Spectrum-X is an Ethernet networking platform designed specifically for AI, and Nvidia says it can deliver immediate performance gains for AI workloads through higher-speed connectivity and greater data throughput. Its switches use adaptive routing to steer data around congested paths in the network, which Nvidia said makes them better able to handle the unique traffic patterns of AI applications. Nvidia has previously said that the world’s largest AI supercomputer was able to sustain 95% data throughput using Spectrum-X.
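Adaptive routing is easiest to see against the static alternative. Classic equal-cost multipath (ECMP) routing pins each flow to one path using a fixed hash, so a few large AI flows can land on the same link while others sit idle; adaptive routing instead chooses paths based on current load. The toy Python model below is a hypothetical illustration of that general idea, not Nvidia’s Spectrum-X algorithm: the flow IDs, sizes, the modulo “hash” and the chunk size are all made up, and it ignores the out-of-order delivery that real per-packet load balancing has to handle.

```python
from typing import List, Tuple

# Hypothetical toy model: each flow is (flow_id, gigabytes_to_send).
Flow = Tuple[int, int]


def static_hash_routing(flows: List[Flow], num_paths: int) -> List[int]:
    """ECMP-style: pin every flow to one path chosen by a fixed hash, ignoring load."""
    loads = [0] * num_paths
    for flow_id, size in flows:
        path = flow_id % num_paths  # stand-in for a real 5-tuple hash
        loads[path] += size
    return loads


def adaptive_routing(flows: List[Flow], num_paths: int, chunk: int) -> List[int]:
    """Split flows into chunks and send each chunk on the currently least-loaded path."""
    loads = [0] * num_paths
    for _flow_id, size in flows:
        remaining = size
        while remaining > 0:
            sent = min(chunk, remaining)
            least_loaded = min(range(num_paths), key=lambda p: loads[p])
            loads[least_loaded] += sent
            remaining -= sent
    return loads


if __name__ == "__main__":
    # Four equal-sized flows whose IDs happen to collide under the static hash.
    flows = [(0, 100), (4, 100), (8, 100), (1, 100)]
    print("static:  ", static_hash_routing(flows, num_paths=4))
    print("adaptive:", adaptive_routing(flows, num_paths=4, chunk=10))
```

In this toy run, static hashing stacks three flows on one path (the busiest link carries 300 GB while two links sit idle), whereas adaptive placement spreads the same 400 GB evenly across all four paths.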

Meta plans to integrate Spectrum-X Ethernet switches within the Facebook Open Switching System (FBOSS), the software platform it uses to manage and control network switches at massive scale. Meta Vice President of Network Engineering Gaya Nagarajan said the company expects to unlock immediate gains in AI training efficiency.

“Meta’s next-generation AI infrastructure requires open and efficient networking at a scale the industry has never seen before,” he said. “By integrating Nvidia Spectrum-X into the Minipack3N switch and FBOSS, we can extend our open networking approach while unlocking the efficiency and predictability needed to train ever-larger models and bring generative AI applications to billions of people.”

Meanwhile, Oracle is looking further ahead. It plans to deploy Spectrum-X Ethernet not only in its existing data centers but also in its future gigawatt-scale AI factories powered by Vera Rubin GPUs.

“By adopting Spectrum-X Ethernet, we can interconnect millions of GPUs with breakthrough efficiency so our customers can more quickly train, deploy and benefit from the next wave of generative and reasoning AI,” said Oracle Cloud Infrastructure Executive Vice President Mahesh Thiagarajan.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.