AI

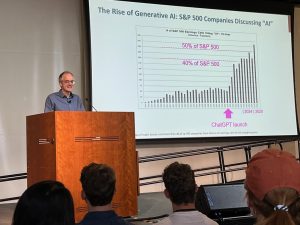

Before OpenAI’s release of ChatGPT in 2022 and its explosion into the public’s consciousness, artificial intelligence was quietly being developed in research labs and discussed at scientific conferences. While much of the enterprise world’s attention is now focused on AI agents and massive expectations for reshaping enterprise production, a group of engineers and scientists has been examining what’s next.

Hints of what’s to come were provided by presenters at the Bay Area Machine Learning Symposium or BayLearn, an annual gathering of high-level scientists and engineers from throughout Silicon Valley. This year’s event, hosted by the School of Engineering at Santa Clara University on Thursday, offered a glimpse into how some of AI’s leading voices envision the technology’s future impact as companies and research labs refine their approach to AI.

“We’re not just building systems, we’re trying to think about the underlying problem that systems are trying to solve,” Bryan Catanzaro (pictured), vice president of applied deep learning research at Nvidia Corp., said during his presentation at the conference.

A significant part of Nvidia’s approach to solving those problems involves Nemotron, the chipmaker’s collection of open-source AI technologies designed to make AI development more efficient at every stage. These include multimodal models and datasets, pre- and post-training tools, precision algorithms and software for scaling up AI on GPU clusters.

Nemotron, a portmanteau of “neural modules” and the character Megatron in the Transformers toy franchise, is central to Nvidia’s vision for accelerated computing.

“Nemotron is a really fundamental part of how Nvidia thinks about accelerated computing going forward,” Catanzaro said. “Accelerated computing is really about specialization… and doing things you couldn’t do with a standard computer. Accelerated computing is so much more than a chip.”

Nvidia also believes that future progress in AI will be fueled by contributions from the open-source community. In an interview with SiliconANGLE following his presentation, Catanzaro noted that Meta Platforms Inc., as well as China’s Alibaba Group Holding Ltd. and DeepSeek, had all participated in Nemotron.

“There’s been a lot of great contributions,” Catanzaro said. “The Nemotron datasets are being used by everybody.”

Catanzaro has made his own unique contribution to the advancement of AI. As documented in Stephen Witt’s book on Nvidia’s rise, “The Thinking Machine,” founder and Chief Executive Jensen Huang’s fateful decision to pivot his company toward artificial intelligence could be traced to his interaction with Catanzaro, who believed that deep learning was key to the future of AI.

In conversation with SiliconANGLE, Catanzaro described how his work in field-programmable gate arrays or FPGAs gave him an appreciation for the speed of Nvidia’s GPU-based CUDA compute architecture. He was intrigued by how the technology could be applied to artificial intelligence and discussed its application for machine learning with Huang in 2013.

“I looked at that and thought there’s something special about the programming that Nvidia is bringing to CUDA,” Catanzaro said. “Back then, CUDA was not so much focused on machine learning. It was focused on high-performance computing. That journey was pretty exciting… and the rest is history.”

AI’s development and rise also owe much to the influence of computer scientists such as Professor Christopher Manning. A noted expert in the field of natural language processing, or NLP, Manning reminded BayLearn attendees that large language models weren’t even on the radar of many scientists more than 20 years ago, when 33 papers on AI were presented at the Association for Computational Linguistics Conference.

Stanford’s Professor Christopher Manning talked about his NLP research and AI at the BayLearn conference.

“How many LLM papers were there in 1993?” Manning asked. “There were zero. Without 20/20 hindsight, it’s really surprising no one was talking about language models. We clearly could have been and should have been pushing LLMs much earlier. There was this disbelief that LLMs were going to be useful.”

What proved to be useful, however, was natural language capabilities for AI-based applications. Manning’s research at Stanford University paved the way for the application of deep learning to NLP, which has since become a foundation of AI’s growth and use in a wide range of applications today.

Manning, who is founder and associate director of the Stanford Institute for Human-Centered Artificial Intelligence, expressed frustration that the current focus on using AI to capture immediate results has ignored the technology’s potential to become better through interaction with the world around it.

“LLMs don’t work interactively at all,” Manning said. “Human beings can learn with orders of magnitude less data than our current models. We’ve got better human learning than we have machine learning.”

The solution, according to Manning, is systematic generalization, an ability for AI models to move beyond current industry solutions that jam them with data and into a world where agents can learn through interaction. The goal is to create AI models that combine known elements into novel meaning. This will involve building a system that will learn by “poking around websites,” according to Manning, becoming better through exploration.

“To a reasonable extent, brute-forcing [data] works, but that’s not how human beings work,” Manning noted. “We need to get to more efficient models that can get to systematic generalization.”

The quest for systematic generalization will take new AI frameworks built to run more efficiently on computing networks. Apple Inc. is working on such a solution with enhancements for MLX, machine learning software for Apple silicon.

The open-source machine learning framework was developed by Apple for Mac computers. Released nearly two years ago, MLX can transform high-level Python code into optimized machine code. Reports have indicated that Apple is also working with Nvidia to add CUDA back-end support to MLX as part of its effort to reduce the cost of building machine learning frameworks.

“We thought it was an opportunity to build machine learning software tailored for hardware,” Ronan Collobert, a research scientist at Apple, told the BayLearn gathering. “We have to think from a systems standpoint how to get AI reliably deployed.”

For the average consumer, engineers’ enthusiasm for machine learning frameworks and coding support may not move the needle. Yet advancements in AI are also transforming the robotics world in ways that may soon become much more visible in the world around us.

Google LLC’s DeepMind research unit has been developing models designed to make robots more intelligent. Last month, the company released its Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 models, which embody reasoning capabilities to help robots actually think.

DeepMind’s previous approach was to equip robots with the ability to perform single tasks, such as folding a piece of paper. Now they are capable of more advanced functions, such as choosing clothes suitable for predicted weather conditions.

AI is driving progress in general robotics, a field in which a machine can pick up an item and throw it away based on a simple natural language prompt, according to Ed Chi, vice president of research at Google DeepMind. That progress has forced engineers to rethink grandiose visions of a world in which artificial general intelligence, or AGI, enables robots to understand, learn and apply knowledge across an infinite range of human tasks.

“I’m sick of all this talk about AGI when I don’t have a robot that can clean my house,” Chi said during a conference panel session. “The huge advancement that we’re making in robotics right now is in the area of general robotics. It’s good enough.”

Being “good enough” may indeed become the mantra for developers in the AI field, as advancements move at light speed and enterprises continue to clamor for immediate results. AI is driving societal and economic change at a pace that has left even the most experienced practitioners stunned. Yet there is also a belief that as AI’s capabilities continue to improve, the impact will be enormous.

“We live in an absolutely extraordinary time at the moment,” said Stanford’s Manning. “We’re on a path where there’s going to be continual progress. We are going to be into a wild ride in how this technology develops.”
