AI

Artificial intelligence startup Gimlet Labs Inc. launched today with $12 million in funding from a group of prominent investors.

Factory led the consortium. It was joined by Intel Corp. Chief Executive Lip-Bu Tan, Figma Inc. CEO Dylan Field, former VMware Inc. CEO Raghu Raghuram and other angel investors.

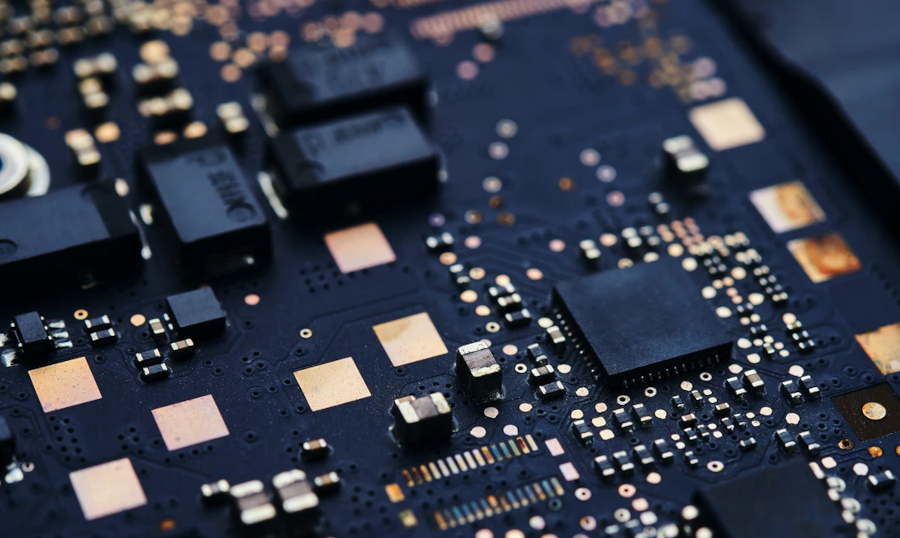

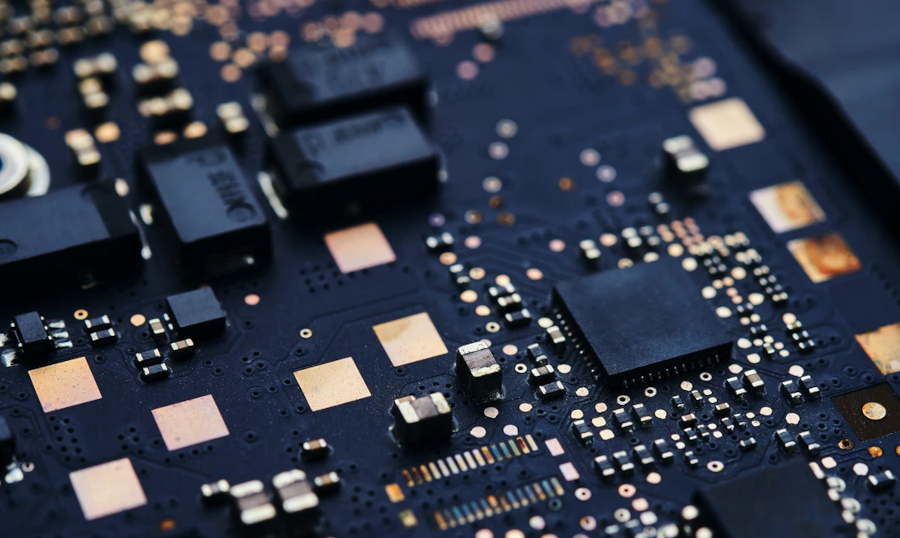

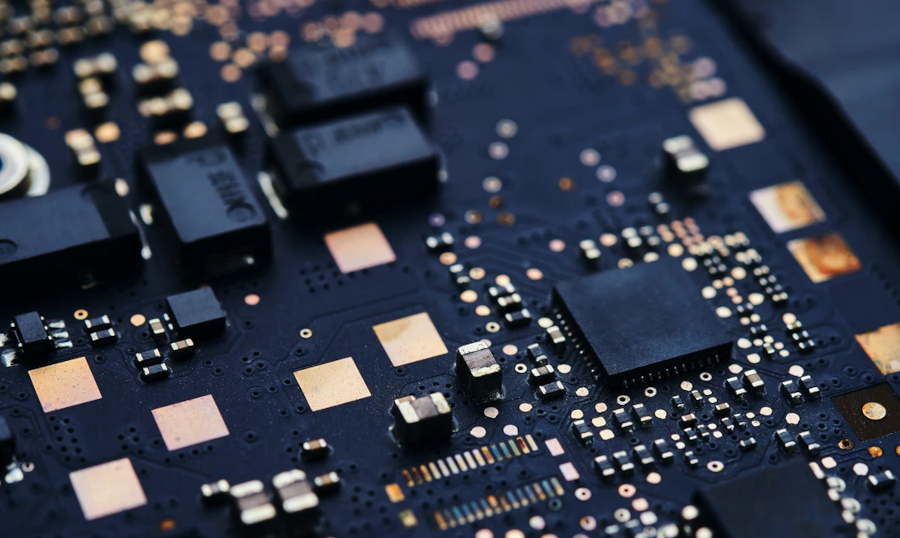

By default, many AI models can only run efficiently on the specific type of graphics card that was used to train them. It’s possible to port neural networks to other chips, but the task requires a significant amount of time and effort. Gimlet Labs has built a software platform that enables developers to port their AI models across chips without writing any code.

There are situations where moving a neural network to a new processor can cut costs. For example, the graphics card that was used to train an AI model may be less adept at inference than a newly launched competing chip. In such a scenario, porting the model to the newer chip can boost efficiency.

Gimlet Labs says that its platform not only makes it easier to move AI models across processors but also takes the concept a step further. It breaks down an AI agent into multiple components and deploys each component on the chip that can run it most efficiently.

The hardware requirements of an AI agent’s components vary significantly. Usually, the module that performs the initial calculations involved in processing user prompts is limited mainly by graphics card core performance. In contrast, the component that generates prompt responses depends more on memory bandwidth than on core performance. Running each component on the chip that best meets its requirements reduces inference costs.

“Our platform automatically disaggregates each workload into its component stages, and maps each stage to the most suitable accelerator,” Gimlet Labs’ four founders wrote in a blog post today. “Compute-bound tasks go to high-throughput GPUs, memory-bound tasks to higher-bandwidth accelerators, and network-bound tasks to nodes with fast interconnect.”
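The mapping the founders describe can be illustrated with a minimal sketch. It is not Gimlet Labs' implementation: the stage names, the workload figures, the reference hardware numbers and the `place` helper are all hypothetical, chosen only to show how a stage could be classified by its dominant bottleneck and routed to one of the three accelerator categories named in the quote.

```python
from dataclasses import dataclass

# Hypothetical routing table: bottleneck type -> accelerator category from the quote.
ACCELERATOR_FOR_BOTTLENECK = {
    "compute": "high-throughput GPU",
    "memory": "higher-bandwidth accelerator",
    "network": "node with fast interconnect",
}


@dataclass
class Stage:
    name: str
    flops: float        # arithmetic operations the stage performs
    bytes_moved: float  # bytes read from and written to device memory
    bytes_sent: float   # bytes exchanged over the network


def bottleneck(stage, peak_flops, mem_bw, net_bw):
    """Return the resource on which the stage would spend the most time."""
    times = {
        "compute": stage.flops / peak_flops,
        "memory": stage.bytes_moved / mem_bw,
        "network": stage.bytes_sent / net_bw,
    }
    return max(times, key=times.get)


def place(stages, peak_flops=1e14, mem_bw=1e12, net_bw=1e10):
    """Map each stage name to an accelerator category (illustrative defaults)."""
    return {
        s.name: ACCELERATOR_FOR_BOTTLENECK[bottleneck(s, peak_flops, mem_bw, net_bw)]
        for s in stages
    }


# Made-up workload figures, for illustration only.
stages = [
    Stage("dense_math", flops=1e13, bytes_moved=1e9, bytes_sent=0.0),
    Stage("cache_read", flops=1e9, bytes_moved=1e11, bytes_sent=0.0),
    Stage("tensor_exchange", flops=1e9, bytes_moved=1e8, bytes_sent=1e10),
]
placement = place(stages)
```

A production scheduler would presumably also weigh chip availability, cost and data-transfer overhead between stages. This sketch considers only the single dominant bottleneck.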

Gimlet Labs optimizes AI models with a custom compiler. In many cases, the methods that developers use to improve a neural network’s efficiency vary from chip to chip. Gimlet Labs says that its compiler can automatically apply chip-specific optimizations to save time for users.

One of the most common approaches to optimizing AI models’ performance is called operator fusion. When an AI carries out a calculation, the underlying graphics card must fetch the necessary data from memory and then send back the results. Operator fusion combines multiple calculations into a single operation, which reduces the number of times data has to travel to and from memory.
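A small sketch can make the memory-traffic argument concrete. It uses plain Python lists as a stand-in for chip memory, and the function names and the scale, shift and clamp operations are assumptions chosen for illustration rather than anything Gimlet Labs has described. The unfused version writes two intermediate results and reads them back, while the fused version touches each input and output element once.

```python
def unfused(xs, scale, bias):
    """Three separate operators, each making a full pass over memory."""
    scaled = [x * scale for x in xs]       # pass 1: read xs, write scaled
    shifted = [s + bias for s in scaled]   # pass 2: read scaled, write shifted
    return [max(v, 0.0) for v in shifted]  # pass 3: read shifted, write output


def fused(xs, scale, bias):
    """One fused operator: each element is read once and written once."""
    return [max(x * scale + bias, 0.0) for x in xs]


def memory_accesses(n_elements, n_ops, is_fused):
    """Count element reads plus writes for a chain of elementwise operators."""
    passes = 1 if is_fused else n_ops
    return 2 * n_elements * passes  # one read and one write per element per pass


data = [-2.0, 0.5, 3.0]
same_result = unfused(data, 2.0, 1.0) == fused(data, 2.0, 1.0)
traffic_unfused = memory_accesses(len(data), 3, is_fused=False)
traffic_fused = memory_accesses(len(data), 3, is_fused=True)
```

Both versions return the same values, but in this simplified count the fused version performs one-third of the memory accesses. On real hardware the saving depends on cache behavior and on which operators a compiler is able to fuse.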

Gimlet Labs’ platform doesn’t translate AI models directly into machine code, the low-level representation of software that chips use to perform processing. It first turns models into a so-called intermediate representation using an open-source technology called MLIR. The technology makes it easier to apply certain types of optimizations.

After optimizing an AI workload, the platform turns it into kernels. Those are snippets of code that can be efficiently parallelized across multiple graphics card cores. Carrying out multiple calculations in parallel is faster than completing them one after another.

Gimlet Labs disclosed on the occasion of today’s funding round that its platform is already generating “eight-figure revenues.” The company sells the software in an on-premises edition and a cloud version that removes the need for users to maintain infrastructure. Gimlet Labs says its customers include Fortune 500 enterprises and AI providers.
