AI

Shares of Nvidia Corp. closed 2.6% lower today following a report that Meta may buy Google LLC’s TPU artificial intelligence chips.

Sources told The Information that the deal for the tensor processing units could be worth billions of dollars. It’s believed Meta may kick off the partnership next year by leasing TPUs hosted by Google. According to the report, the Facebook parent may deploy the chips in its own data centers from 2027 onwards.

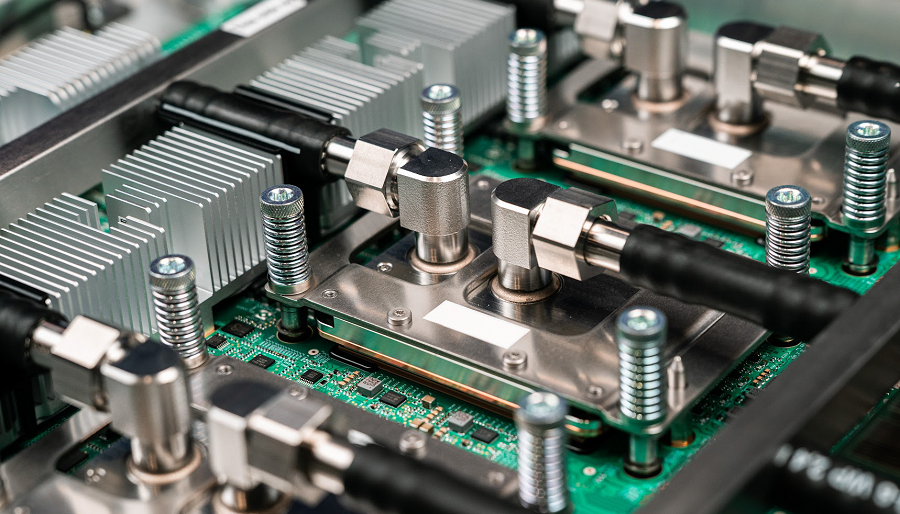

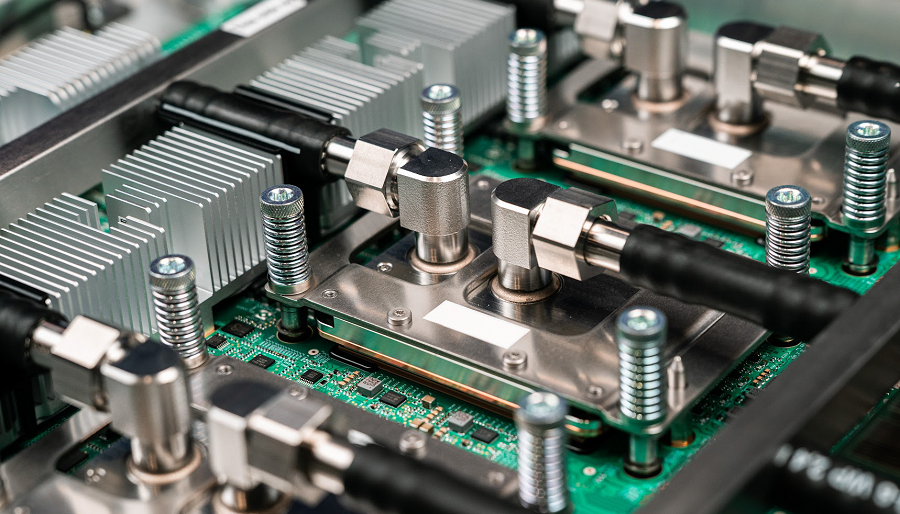

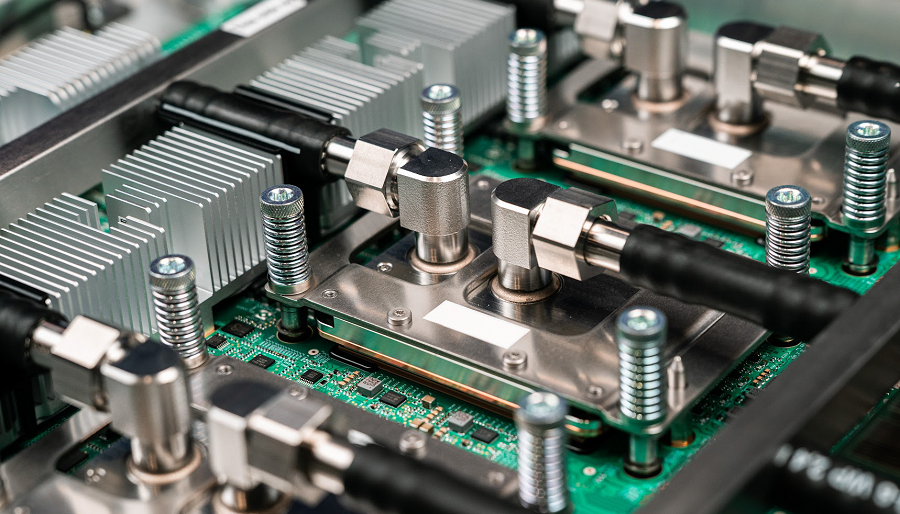

Google debuted its newest TPU, Ironwood, in April. The chip (pictured) comprises two dies that host 192 gigabytes of high-speed HBM memory and six custom AI processing modules. Those accelerators are based on two different designs.

Each Ironwood chip includes four SparseCores, accelerators optimized to process large embeddings. An embedding is a mathematical structure that stores information for AI models. There are also two TensorCores designed to speed up matrix multiplications, the calculations AI models use to process data.
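The two kinds of work described above can be illustrated with a toy sketch in plain Python. It is only meant to show what an embedding lookup and a matrix multiplication are; it says nothing about how SparseCores or TensorCores are actually built, and the table, the weights and the function names are made up for the example.

```python
# Toy embedding table (hypothetical values): row i is the vector stored for item i.
embedding_table = [
    [1, 2],
    [3, 4],
    [5, 6],
]

def lookup(table, ids):
    """Embedding lookup: fetch the stored vector for each requested ID."""
    return [table[i] for i in ids]

def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][c] for k in range(inner)) for c in range(cols)]
        for row in a
    ]

# Hypothetical 2x2 weight matrix applied to the looked-up vectors.
weights = [
    [1, 0],
    [0, 2],
]

vectors = lookup(embedding_table, [2, 0])  # [[5, 6], [1, 2]]
print(matmul(vectors, weights))            # [[5, 12], [1, 4]]
```

In a production model the table can hold millions of rows and the matrices are far larger, which is why dedicated hardware for each step can pay off.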

Google deploys Ironwood in liquid-cooled clusters that contain up to 9,216 chips. The company says that a single cluster can provide 42.5 exaflops of performance. One exaflop corresponds to a billion billion calculations per second.
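For a rough sense of scale, the cluster figure can be divided by the chip count. The sketch below uses only the two numbers quoted above and assumes, purely for illustration, that the 42.5 exaflops is a peak figure spread evenly across all 9,216 chips; the function and constant names are hypothetical.

```python
# Figures quoted in the article.
CHIPS_PER_CLUSTER = 9_216
CLUSTER_EXAFLOPS = 42.5

FLOPS_PER_EXAFLOP = 10**18   # a billion billion calculations per second
FLOPS_PER_PETAFLOP = 10**15  # a million billion calculations per second

def per_chip_petaflops(cluster_exaflops, chips):
    """Average per-chip throughput, assuming an even split across chips."""
    total_flops = cluster_exaflops * FLOPS_PER_EXAFLOP
    return total_flops / chips / FLOPS_PER_PETAFLOP

print(round(per_chip_petaflops(CLUSTER_EXAFLOPS, CHIPS_PER_CLUSTER), 2))  # 4.61
```

That works out to roughly 4.6 petaflops per chip under the even-split assumption.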

It’s unclear whether Meta’s potential chip deal with Google would see it deploy Ironwood or a different TPU. Given that the Facebook parent is expected to start installing the chips in 2027, it may seek to buy the successor to Ironwood that will likely debut next year.

Google could theoretically provide Meta with complete TPU clusters of the kind it uses to power its cloud platform. However, it’s possible the social media giant will instead opt to buy only chips and install them in its own systems. Meta uses custom server and rack designs in its data centers.

A few years ago, the Facebook parent developed a custom inference chip called MTIA. In February, Reuters reported that the company was planning to deploy a new iteration of the processor by the end of 2025. The potential TPU contract with Google suggests the company might be scaling back its plans for MTIA. Alternatively, Meta may be planning to use MTIA for inference and run training workloads on TPUs.

Google’s shares closed 1.6% higher on the report. Broadcom Inc., which helps the search giant design its TPUs, gained 1.9%.

The potential chip deal drew a response not only from Wall Street but also from Nvidia. “We’re delighted by Google’s success — they’ve made great advances in AI and we continue to supply to Google,” the chipmaker wrote in a statement published on X. “NVIDIA is a generation ahead of the industry — it’s the only platform that runs every AI model and does it everywhere computing is done.”

Nvidia’s flagship AI accelerator, the Blackwell Ultra, provides 10 petaflops of performance when processing four-bit floating point numbers. One petaflop equals a million billion calculations per second. The upcoming Rubin chip series that Nvidia plans to launch next year is expected to provide higher performance.
