INFRA

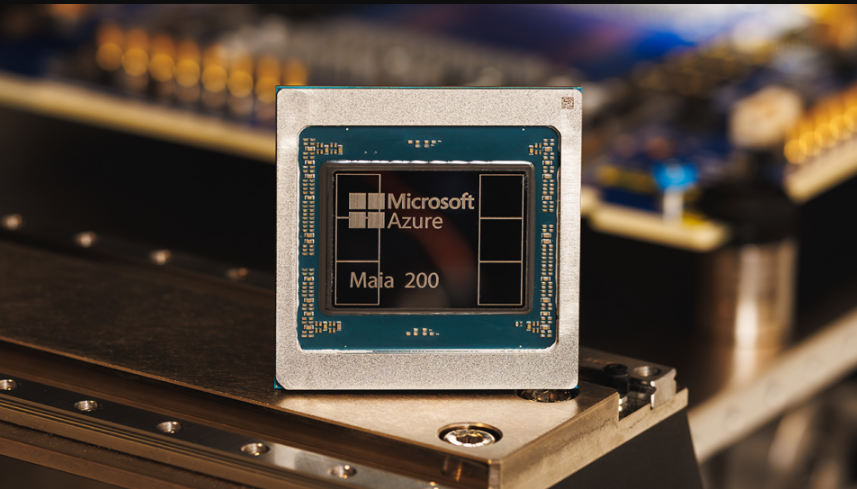

Microsoft Corp. today unveiled Maia 200, the second generation of its custom artificial intelligence processor, boldly claiming that it's the most powerful chip offered by any public cloud infrastructure provider so far.

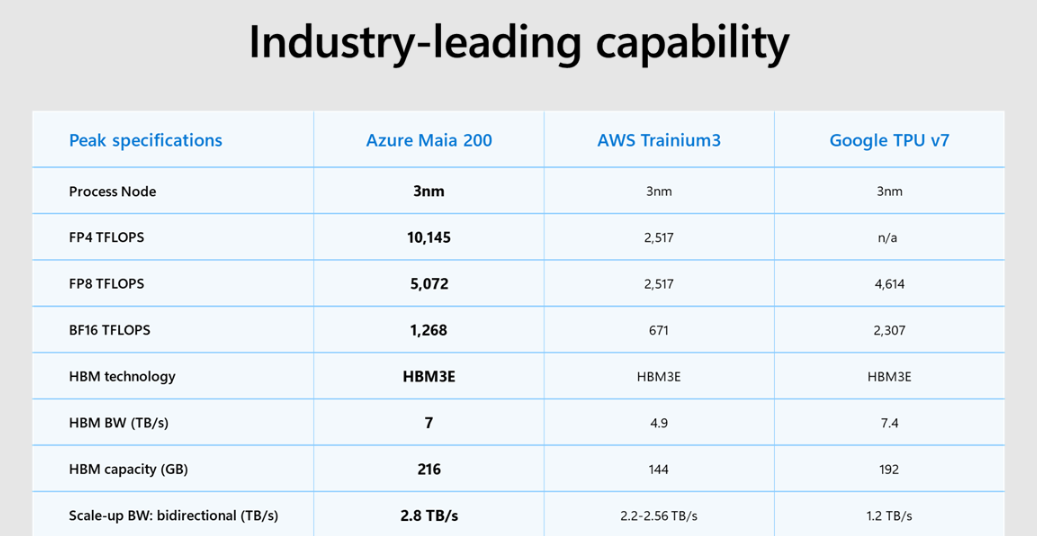

The company said Maia 200 offers three times the compute performance of Amazon Web Services Inc.’s most advanced Trainium processor on certain popular AI benchmarks, while exceeding Google LLC’s newest tensor processing unit on some others.

Maia 200 is the successor to the Maia 100 chip that launched in 2023. It has been designed to run the most powerful large language models faster and with greater energy efficiency. It packs more than 100 billion transistors, which together deliver more than 10 petaflops at four-bit precision and approximately 5 petaflops at eight-bit precision. That makes it a significant improvement over Microsoft's original custom silicon.

Microsoft said the chip is designed primarily for AI inference workloads. Inference is the process of running trained AI models, and it has become an increasingly important element of AI operating costs as the technology matures.

According to Microsoft, Maia 200 is already running workloads at the company's data center in Des Moines, Iowa, including Microsoft 365 Copilot, OpenAI Group PBC's GPT-5.2 models and various internal projects within its AI Superintelligence team. The company plans to deploy additional Maia 200 chips at its Phoenix data center facility in the coming weeks.

The launch of Maia 200 accelerates a trend among the major cloud providers to develop their own custom AI processors as an alternative to Nvidia Corp.’s graphics processing units, which still process the vast majority of the world’s AI workloads but are pricey and in extremely high demand. Google kicked off the trend with its TPUs almost a decade ago, and Amazon’s Trainium chips are currently in their third generation, with a fourth coming up.

Microsoft was later to the party, with the first Maia chips arriving only in 2023 during that year's Ignite conference. But although it's playing catch-up, Microsoft says Maia 200's tight integration with AI models and applications such as Copilot gives it an advantage on many workloads. The latest generation is more specifically focused on inference than on training, which helps it achieve 30% better performance per dollar than the original Maia chip.

Cost has become an important differentiator for public cloud providers due to the massive amounts of cash they're burning through to fuel their AI projects. Though training a model is usually a one-time or periodic expense, inference costs are continuous, so Microsoft, Google and Amazon are all trying to undercut the price of Nvidia's GPUs.

Holger Mueller of Constellation Research said three years is a long time to wait for an updated Maia chip, but Microsoft appears to have made up for the delay, based on the specifications of the new generation. “Microsoft offers a direct comparison between Maia 200 and the latest alternatives from Google and Amazon, and it looks to provide a real performance boost over those platforms, as it should,” he said. “But the key to success is not specs but customer adoption, and enterprises will be looking to see real gains in terms of energy efficiency.”

The analyst said the next challenge for Microsoft will be to show it can match the yearly release cadence of Nvidia and Google, introducing annual updates to Maia instead of making customers wait another three years. "If it can do this, it's a good thing for enterprises, because it will mean more choice for AI platforms," he added.

There are signs that Microsoft is taking custom silicon more seriously: it said it's also going to open the door to third-party developers. To do that, it's offering access to a new software development kit that will help developers optimize their AI models for Maia 200. The SDK is available in early preview starting today.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.