INFRA

Meta Platforms Inc. has agreed a new deal with Nvidia Corp. to buy millions of its next-generation Vera Rubin graphics processing units and a similar number of Grace central processing units to fuel its artificial intelligence ambitions.

The deal, announced today, is likely to be worth billions of dollars. In a statement, Meta Chief Executive Mark Zuckerberg said the expanded partnership is crucial to enabling his company’s previously announced vision of “delivering personal superintelligence to everyone in the world.”

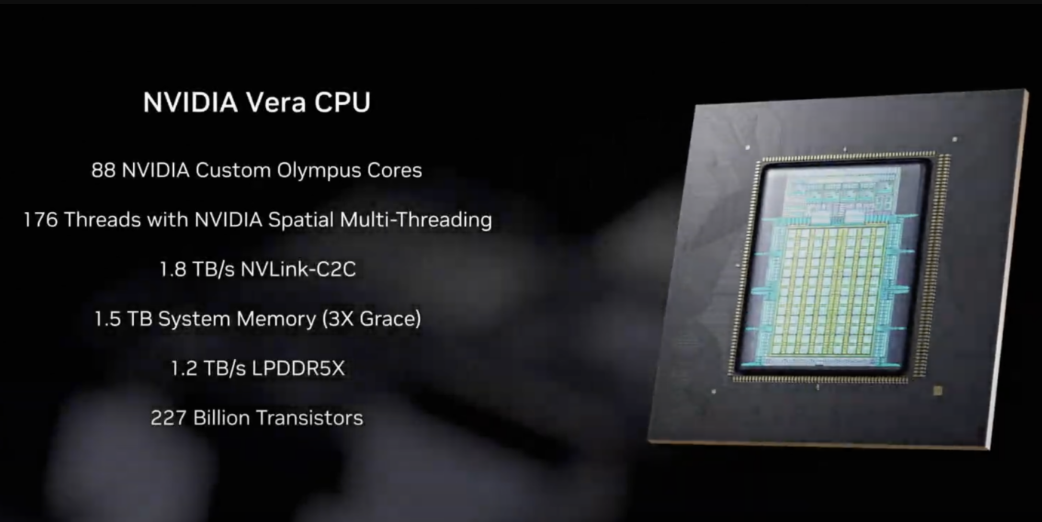

Meta is already one of Nvidia’s biggest customers and is believed to have been using its GPUs for years, but the deal suggests a much deeper partnership between the two companies. It’s notable because Meta has also committed to buying substantial volumes of Nvidia’s Grace CPUs and deploying them as standalone chips, rather than using them in tandem with its GPUs.

Typically, CPUs are combined with GPUs in a server to improve efficiency: The CPUs handle complex tasks and manage system operations, while the GPUs focus purely on AI processing. By offloading the management to CPUs, GPUs can run much faster.

Nvidia said Meta will be the first company to deploy Grace CPUs on their own, and starting next year, it will deploy the next-generation Vera CPUs too. Ben Bajarin, a chip analyst at Creative Strategies, said this is significant. “Meta doing this at scale is affirmation of the soup-to-nuts strategy that Nvidia’s putting across both sets of infrastructure: CPU and GPU,” he told the Financial Times.

Neither company disclosed the terms of the deal, but it’s likely to be worth tens of billions of dollars, analysts say. Last month, Meta told investors that it’s planning to invest $135 billion in its continued AI infrastructure buildout this year, and the money spent on chips will account for a significant portion of that amount.

Meta has committed to spending $600 billion on AI infrastructure by 2028. Its plans include building 26 data centers in the U.S. and four internationally. The two largest facilities are the 1-gigawatt Prometheus site in New Albany, Ohio, and the 5-gigawatt Hyperion site in Richland Parish, Louisiana, both of which are currently under construction. Many of Nvidia’s chips are destined to end up in those locations.

Meta won’t be buying only chips. The deal also includes Nvidia’s Spectrum-X Ethernet switches and InfiniBand interconnect technologies, which are used to link massive clusters of GPUs so they can work together. In addition, it will use Nvidia’s security products to secure the AI features on platforms such as Facebook, Instagram and WhatsApp.

While Meta is doubling down on Nvidia, it has been careful to diversify its chip strategy too. It’s a major customer of Nvidia’s rival Advanced Micro Devices Inc., which also makes GPUs, and in November, a report in The Information revealed that the company is also considering using Google LLC’s tensor processing units for some of its AI workloads. That report made shareholders nervous at the time, with Nvidia’s stock falling more than 4%, although no deal with Google has been announced so far.

Perhaps the most telling aspect of today’s deal is what it means for Meta’s attempts to develop its own, in-house processors for AI. The company first deployed the Meta Training and Inference Accelerator or MTIA chip in 2023 and updated it in 2024, as part of an effort to reduce its reliance on external suppliers. It’s primarily used for AI inference, running AI models in production to power applications such as content recommendations on its social media feeds.

Last year, Meta revealed it’s also working on a new version of MTIA that’s specifically designed for model training, which is an area where its first chips have struggled. At the time, it said it was aiming for a broad rollout of its new chips in 2026. It’s reportedly working with Taiwan Semiconductor Manufacturing Co. to manufacture the chips, and late last year it was said to have completed the “tape-out” phase, meaning it has apparently finalized the design ahead of testing.

Zuckerberg has previously said the new MTIA training chips are designed to be more energy-efficient and optimized for the company’s “unique workloads,” which should allow it to reduce its AI operating costs. But the deal with Nvidia suggests that the planned rollout of those chips this year might be delayed. The Financial Times reported that Meta has experienced “technical challenges” with the new chips, citing anonymous sources.

While its in-house chip ambitions may have been frustrated, Meta has at least secured a plentiful supply of GPUs to make up for those delays. It said it’s planning to work closely with Nvidia to optimize the processors for its AI models, which are thought to include its upcoming frontier model Avocado, the successor to its flagship LLM Llama 4. The company needs to make a good impression with Avocado, because Llama 4 was largely viewed as a disappointment, failing to match the capabilities of its rivals’ most advanced models.
