INFRA

Nvidia Corp. wants to play a bigger role in enabling artificial intelligence at the network edge with the launch of Jetson Xavier NX, which it says is the world’s smallest and most powerful AI supercomputer for robotic and embedded devices.

The word “supercomputer” might be a bit strong, but the Jetson Xavier NX module is nonetheless very powerful and extremely energy-efficient, providing a level of performance comparable to most standard data center servers. It delivers up to 21 tera operations per second (TOPS), a standard measure of performance, when running modern AI workloads at 15 watts of power, or up to 14 TOPS when drawing as little as 10 watts.
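
As a rough illustration of that efficiency claim, the quoted figures of 21 TOPS at 15 watts work out to about 1.4 TOPS per watt. The Python sketch below simply does that arithmetic; the helper name `tops_per_watt` is hypothetical and is not part of any Nvidia software.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return throughput per watt, in tera operations per second per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


# Figures quoted in the article: up to 21 TOPS at 15 watts.
efficiency = tops_per_watt(21, 15)
print(f"{efficiency:.1f} TOPS per watt")  # prints "1.4 TOPS per watt"
```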

Best of all, it fits into a tiny chassis that’s even smaller than a credit card, which means it can be installed in almost any kind of device at any location.

Nvidia said Jetson Xavier NX is ideal for edge computing devices such as small commercial robots and drones, intelligent high-resolution sensors used in manufacturing operations and portable medical devices, among others. These kinds of devices have always been difficult to cater to as they demand a high level of computing performance to be able to process data on site. That technology simply wasn’t available before because of size, weight and power constraints.

But Jetson Xavier NX changes that since it’s powerful enough to run multiple neural networks in parallel while processing data from numerous sources in real time.

James Kobielus, an analyst with SiliconANGLE Media’s sister market research firm Wikibon, said Jetson Xavier NX’s blend of a small footprint, low power, high performance and flexible deployment options would be critical to its widespread adoption.

“These commercial imperatives are especially important for edge computing in mobile, embedded, robotic, and ‘internet of things’ devices,” Kobielus said. “Just as important is its ecosystem-readiness, being both pin-compatible with the existing Jetson Nano hardware platform and supporting machine learning models built in all major AI frameworks, including TensorFlow, PyTorch, MXNet, Caffe and others.”

The hardware runs on Nvidia’s CUDA-X AI architecture and supports Nvidia’s JetPack software development kit, which includes accelerated libraries for deep learning, computer vision, computer graphics and other AI-based workloads.

“In a world where AI chips are announced on what seems like a daily basis, I believe Nvidia has raised the bar with Jetson Xavier NX, showing that exceptional performance at small size and low power, together with a consistent and powerful software architecture, is what matters in embedded edge computing,” said Patrick Moorhead, president and principal analyst of Moor Insights & Strategy. “It’s ideal for devices with the need for many sensors, even a battery-powered, highest-performance drone. The biggest benefit is a massive amount of ML inference performance with a mature toolchain.”

Nvidia said the Jetson Xavier NX module will be available from March 2020, priced at $399.

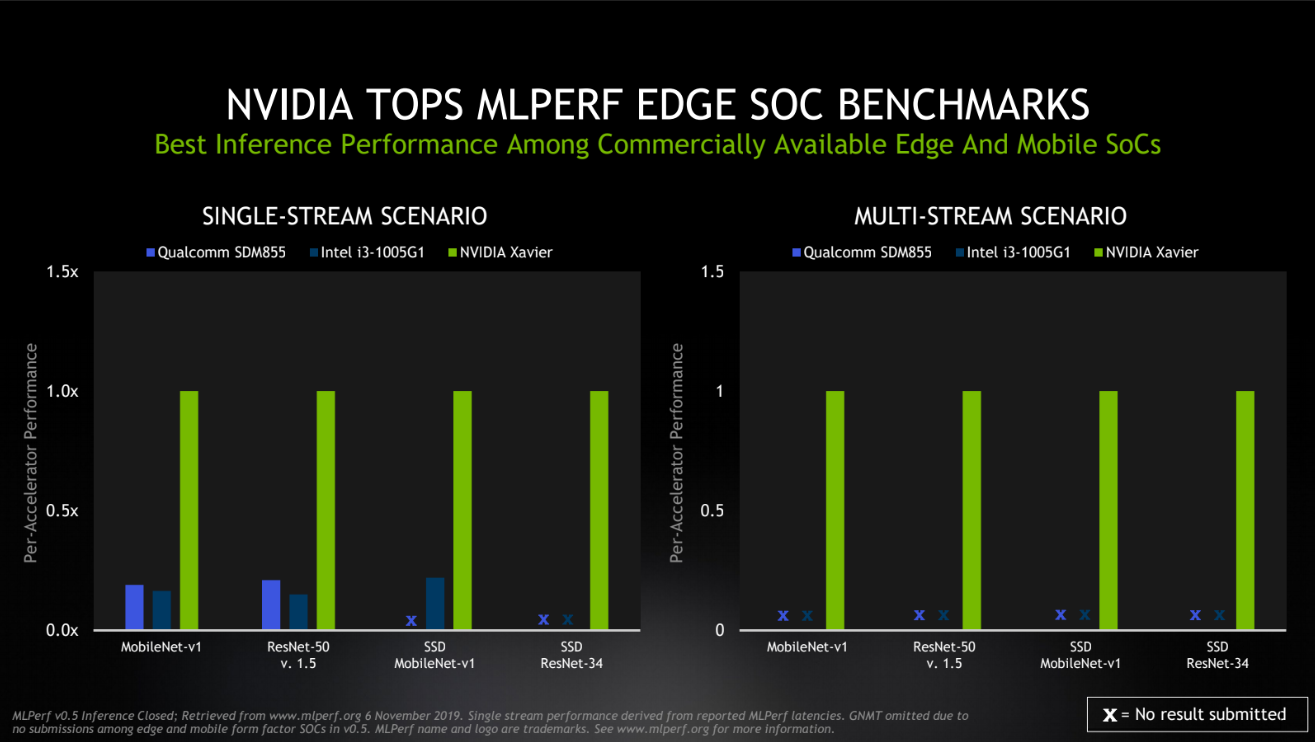

The launch of Jetson Xavier NX comes as Nvidia released a new set of benchmark test results, showing it came out top in all five benchmarks measuring the performance of AI inference workloads in data centers and at the network edge.

The MLPerf Inference 0.5 benchmark measured how Nvidia’s Turing graphics processing units for data centers and the Xavier NX module performed in a variety of traditional AI use cases, including image classification, object detection and translation.

Nvidia’s hardware came out top in all five benchmarks for both data center scenarios and edge use cases, performing faster than comparable chips from rivals such as Intel Corp., Google LLC and Qualcomm Inc.

Kobielus said that’s impressive because MLPerf has become the de facto standard benchmark for AI training, and now for inferencing from cloud to edge.

“NVIDIA’s recent achievement of the fastest results on a wide range of MLPerf inferencing benchmarks is no mean feat, and coming on its equally dominant results on MLPerf training benchmarks, no big surprise either,” Kobielus said. “As attested by avid customer adoption and testimonials, the vendor’s entire AI hardware/software stack has been engineered for the highest performance in all deep learning and machine learning workloads in all deployment modes.”
