INFRA

Nvidia Corp. late today detailed a new liquid-cooled version of its A100 graphics processing unit that can help increase data centers’ energy efficiency and reduce their carbon footprint.

The GPU made its debut at Computex 2022 early Tuesday in Taipei, Taiwan. During the event, Nvidia also shared details about a number of upcoming partner-developed hardware products that will incorporate its Grace CPU Superchip, Grace Hopper Superchip and Jetson AGX Orin products.

The A100 was until recently Nvidia’s flagship data center graphics card. Introduced in 2020, the chip can be used both to train artificial intelligence models and to perform inference, or the task of running a neural network after training is complete. The A100 includes 54 billion transistors that enable it to provide 20 times the performance of its predecessor.

The original version of the A100 is designed for data centers that rely on a method known as air cooling to dissipate the heat generated by servers. This method has been widely used in the data center industry for decades. According to Nvidia, an A100 operating in a facility that uses air cooling can provide 20 times higher energy efficiency than a central processing unit when running AI software.

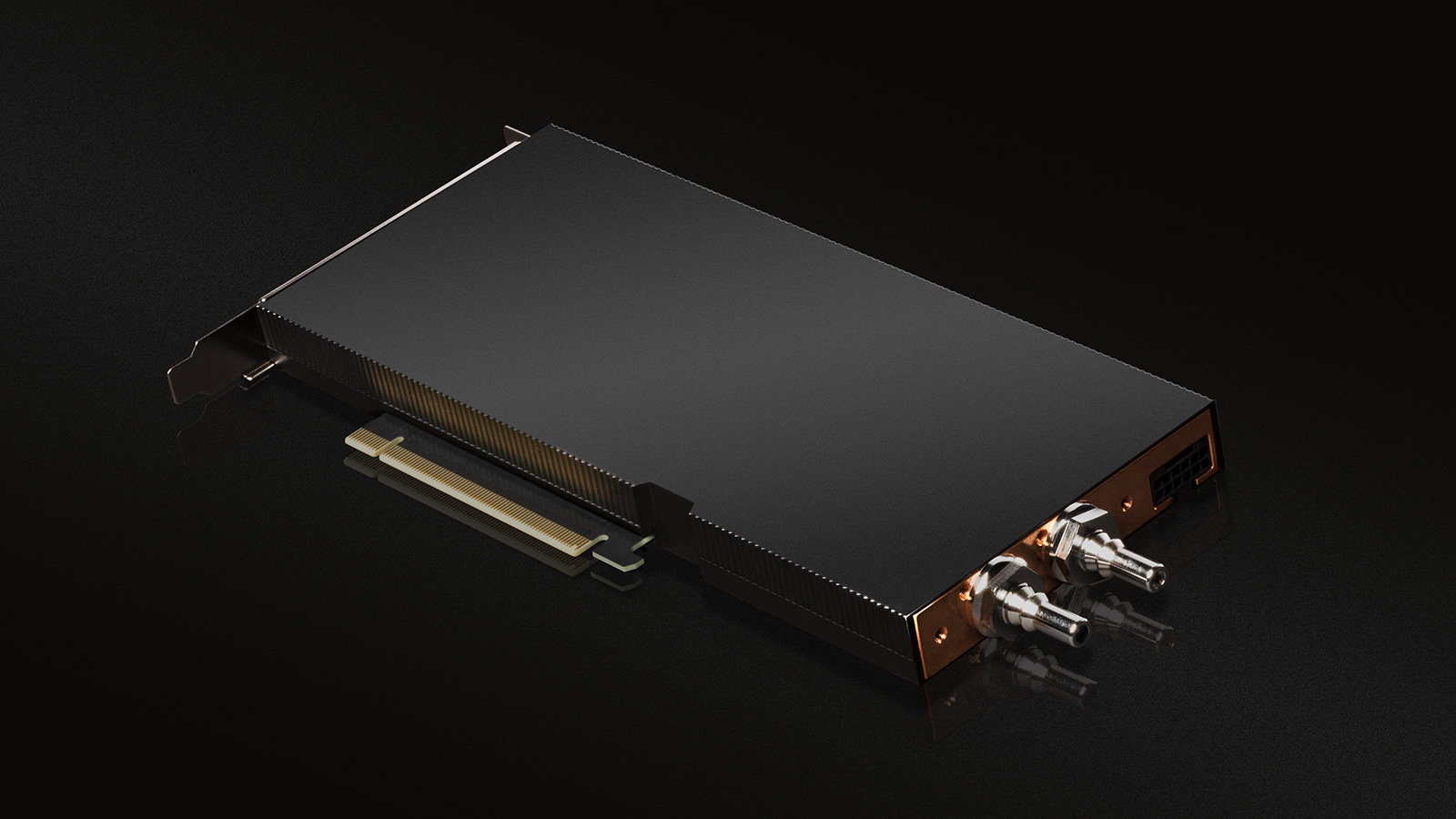

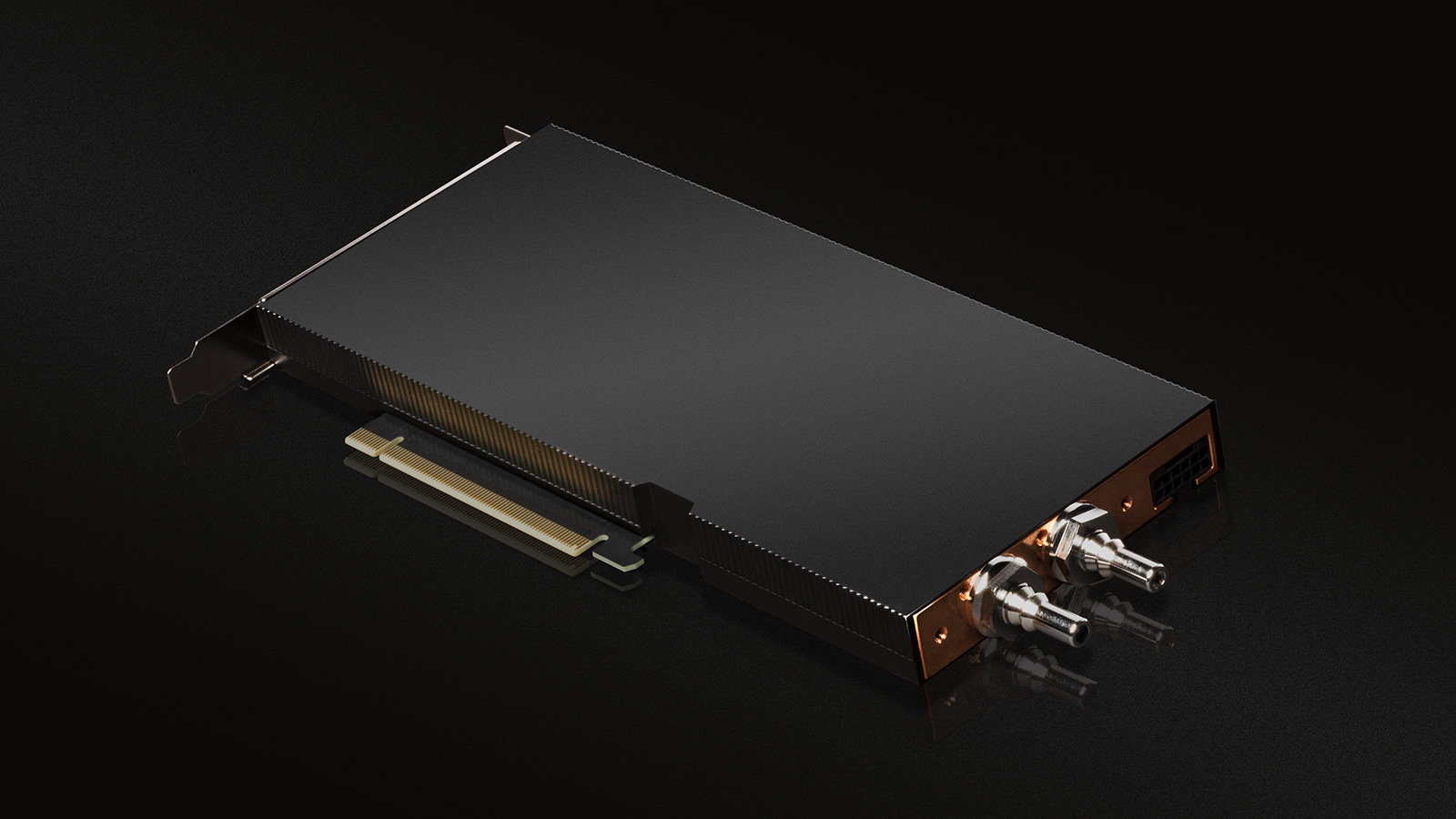

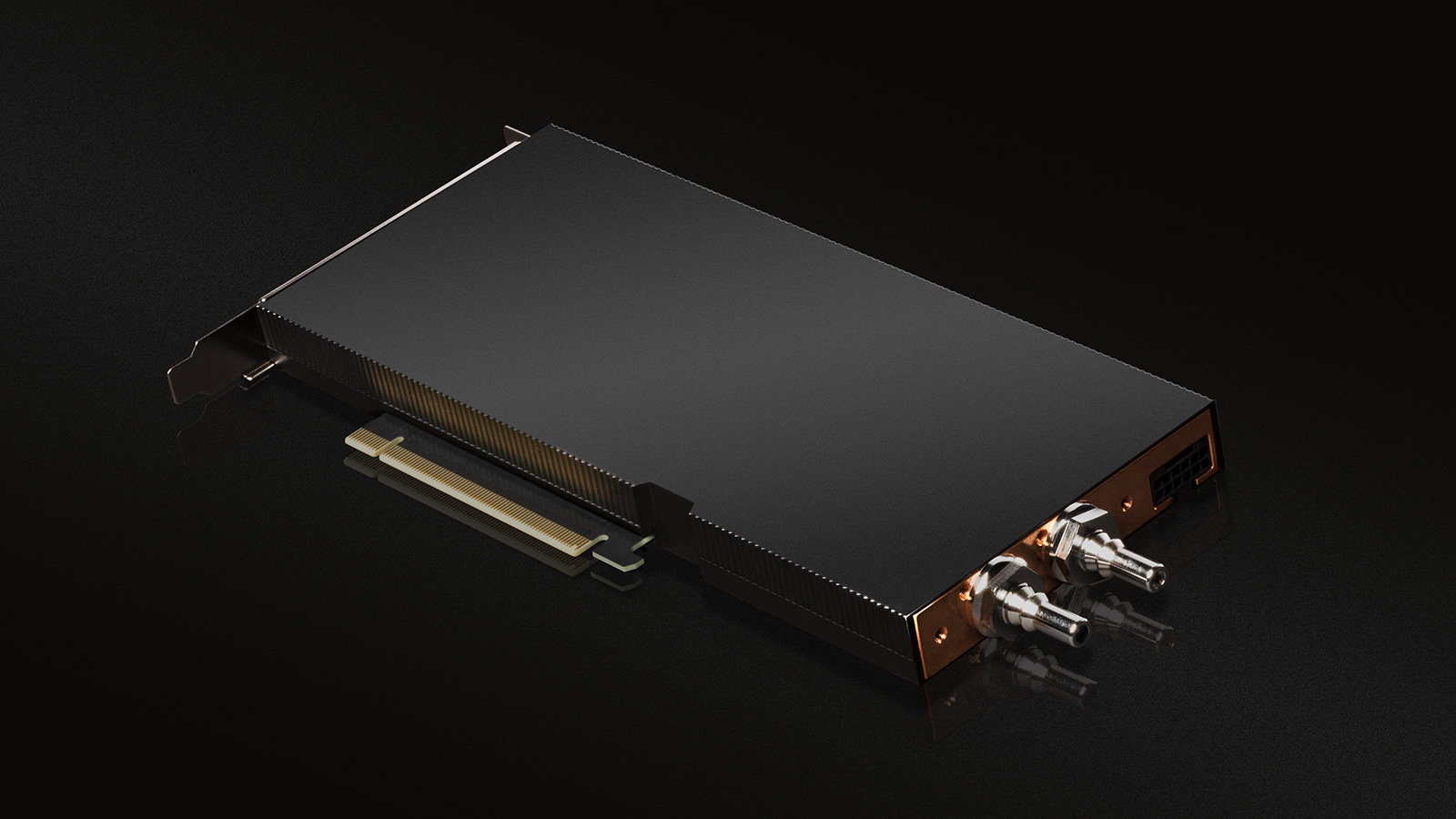

The new liquid-cooled version of the A100 that Nvidia debuted today promises even better efficiency. According to Nvidia, the liquid-cooled version can provide the same performance as its air-cooled counterpart using about 30% less electricity. The electricity savings were measured in separate tests conducted by the chipmaker and Equinix Inc., a major data center operator.

Liquid cooling also has other advantages. Many air cooling systems dissipate heat from servers through a process that involves evaporating large amounts of water. Liquid cooling systems, in contrast, require a significantly lower quantity of water and can recycle most or all of it. This enables data center operators to significantly reduce their water usage.

Yet another benefit of liquid cooling systems is that they take up less space in a data center. The result, according to Nvidia, is that more graphics cards can be added to servers. Attaching the new liquid-cooled version of the A100 to a server requires using one PCIe slot, while the original air-cooled version requires using two.

Nvidia is currently sampling the liquid-cooled A100 to early customers and plans to make it generally available this summer. At least a dozen hardware partners intend to incorporate the chip into their products.

Further down the line, Nvidia plans to release a liquid-cooled version of the H100, the new flagship data center GPU that it debuted earlier this year. The H100 can run Transformer natural language processing models up to six times faster than the A100. In the future, Nvidia also expects to introduce liquid-cooled versions of its GPUs that will use the increased power efficiency to provide more performance than the air-cooled editions.

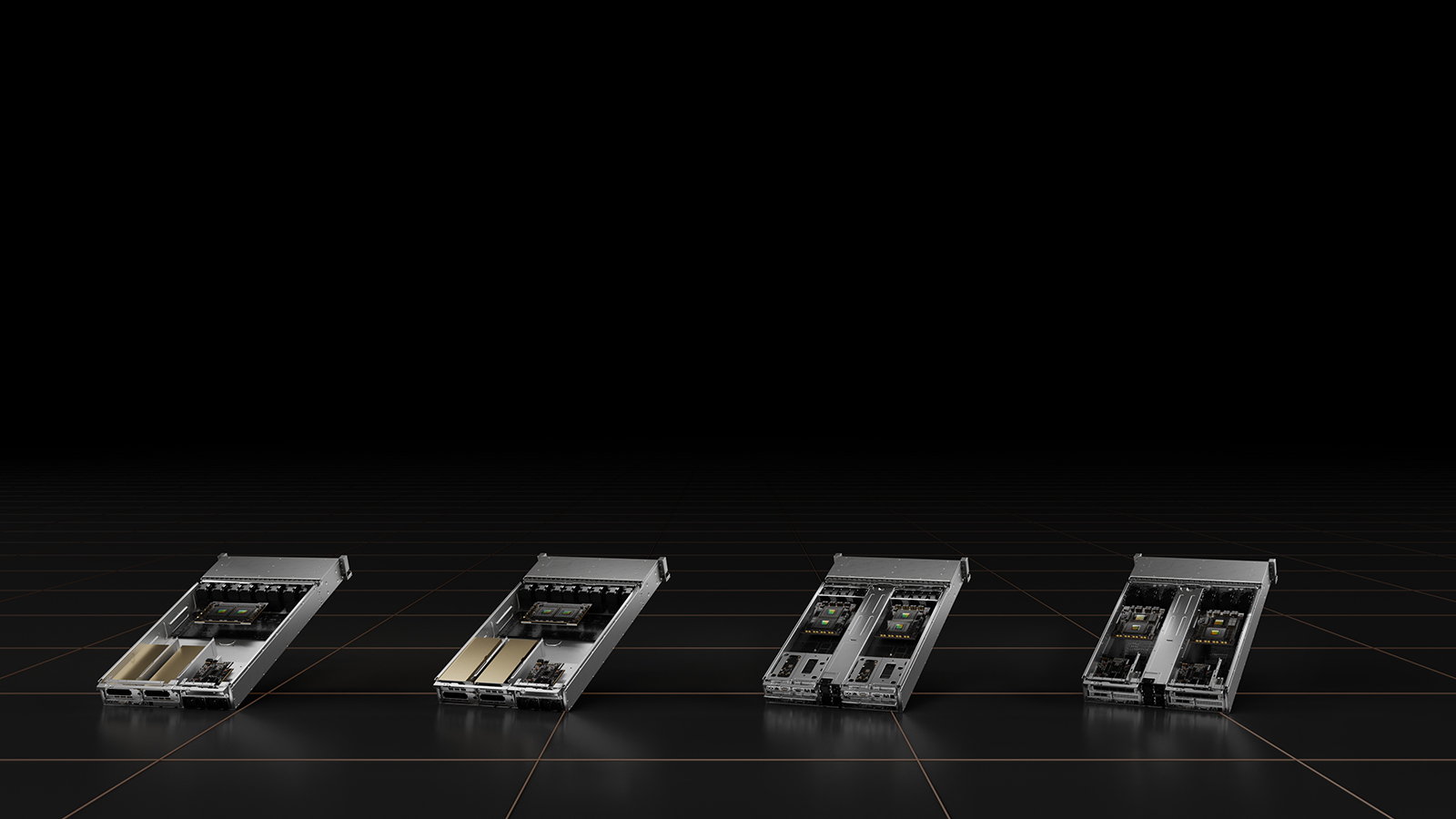

Alongside the liquid-cooled A100, Nvidia today announced that hardware partners based in Taiwan are launching dozens of servers based on its Grace CPU Superchip and Grace Hopper Superchip. The Grace CPU Superchip is a computing module that combines two of Nvidia’s internally developed Grace central processing units in a single package using a high-speed interconnect. The Grace Hopper Superchip, in turn, is a computing module that combines a Grace CPU with a graphics card.

According to Nvidia, the new partner-developed servers that incorporate the modules are based on four specialized reference designs. The reference designs focus on different sets of enterprise use cases.

Two of the reference designs use the Grace Hopper Superchip. They enable hardware makers to build servers optimized for AI training, inference and high-performance computing workloads. The other two reference designs, which incorporate the Grace CPU Superchip, focus on digital twin, collaboration, graphics processing and videogame streaming use cases.

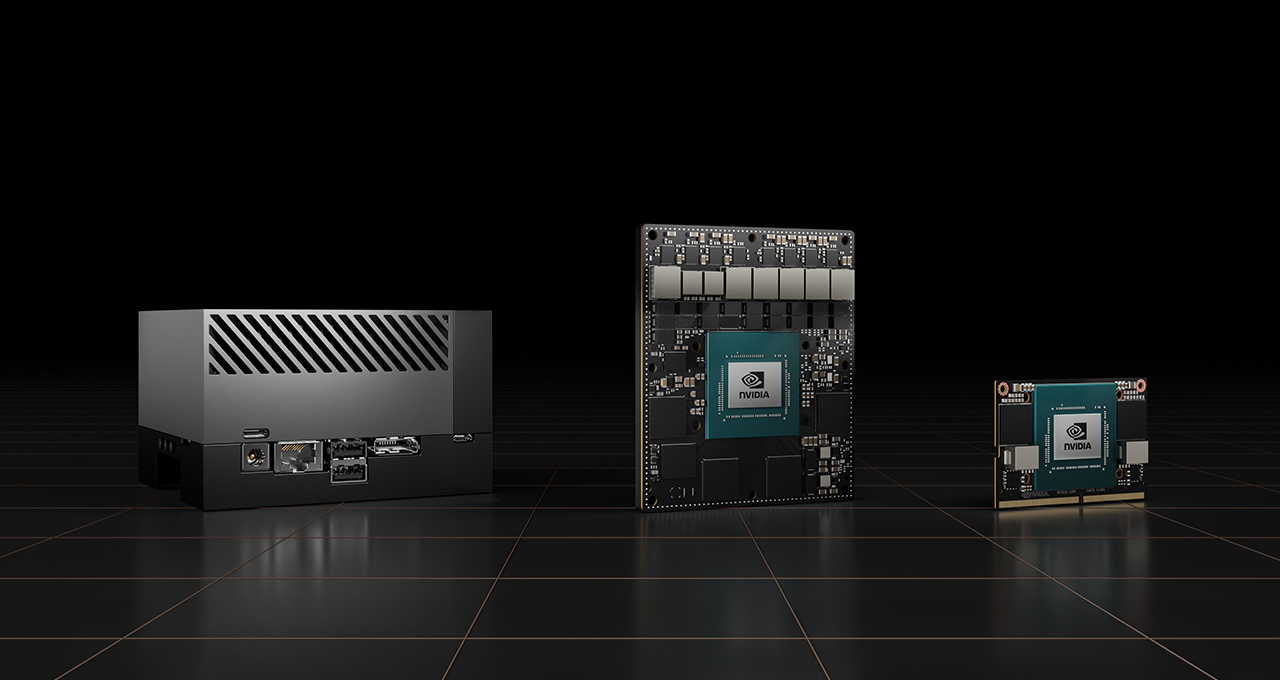

Nvidia today also provided an update about the hardware ecosystem around its Jetson AGX Orin offering, a compact computing module designed for running AI models at the edge of the network. Jetson AGX Orin combines a GPU with multiple CPUs based on Arm Holdings plc designs, as well as other processing components. It can carry out 275 trillion computing operations per second.

Nvidia today detailed that more than 30 hardware partners plan to launch products based on the Jetson AGX Orin. The initial lineup of partner-developed systems will include servers, edge computing appliances, industrial personal computers and carrier boards, which are circuit boards that engineers use to develop new hardware products.
