Howso, formerly known as Diveplane, the provider of accurate and explainable machine learning models, today announced that it is releasing the Howso Engine as open source, positioning it as an alternative to what it calls the black-box AI libraries in use today.

Co-founder and Chief Executive Mike Capps and co-founder and Chief Technology Officer Chris Hazard told SiliconANGLE in an interview that they founded the company to change how AI is used in people's lives, because opaque black boxes can be problematic when the decisions they make have profound effects.

“Chris and I started this company entirely like how do we kill black box, which is kind of a negative statement,” said Capps. “But you know, that approach of ‘It’s being used improperly,’ and decisions are where people’s lives are in the balance, whether it’s parole, or college or cars, you name it.”

The Howso Engine is built on instance-based learning (IBL). Capps said that, unlike neural networks, AI built on IBL is fully explainable. Popular frameworks for building neural networks include PyTorch, developed by Meta Platforms Inc.'s Facebook AI Research, and Google's JAX.

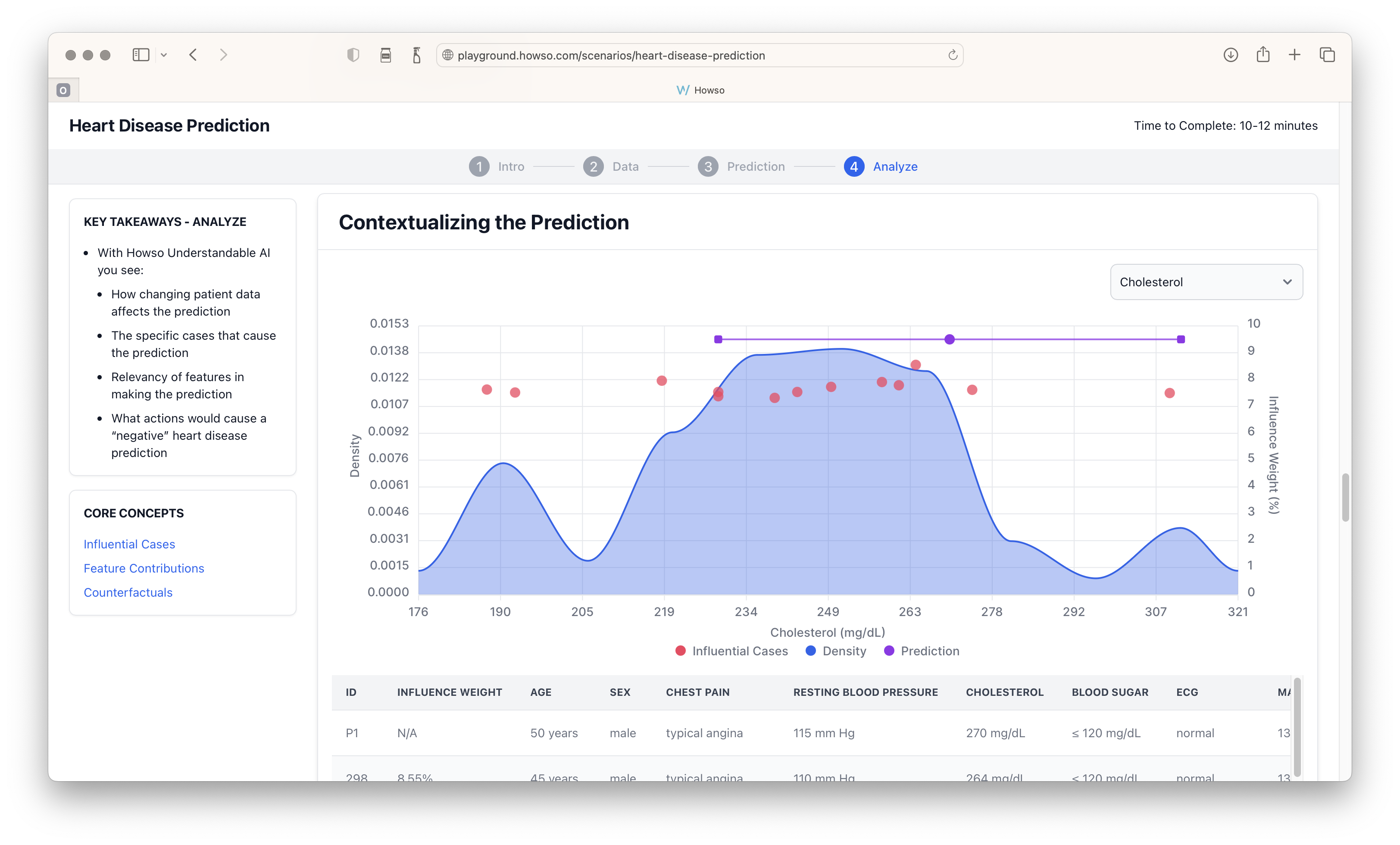

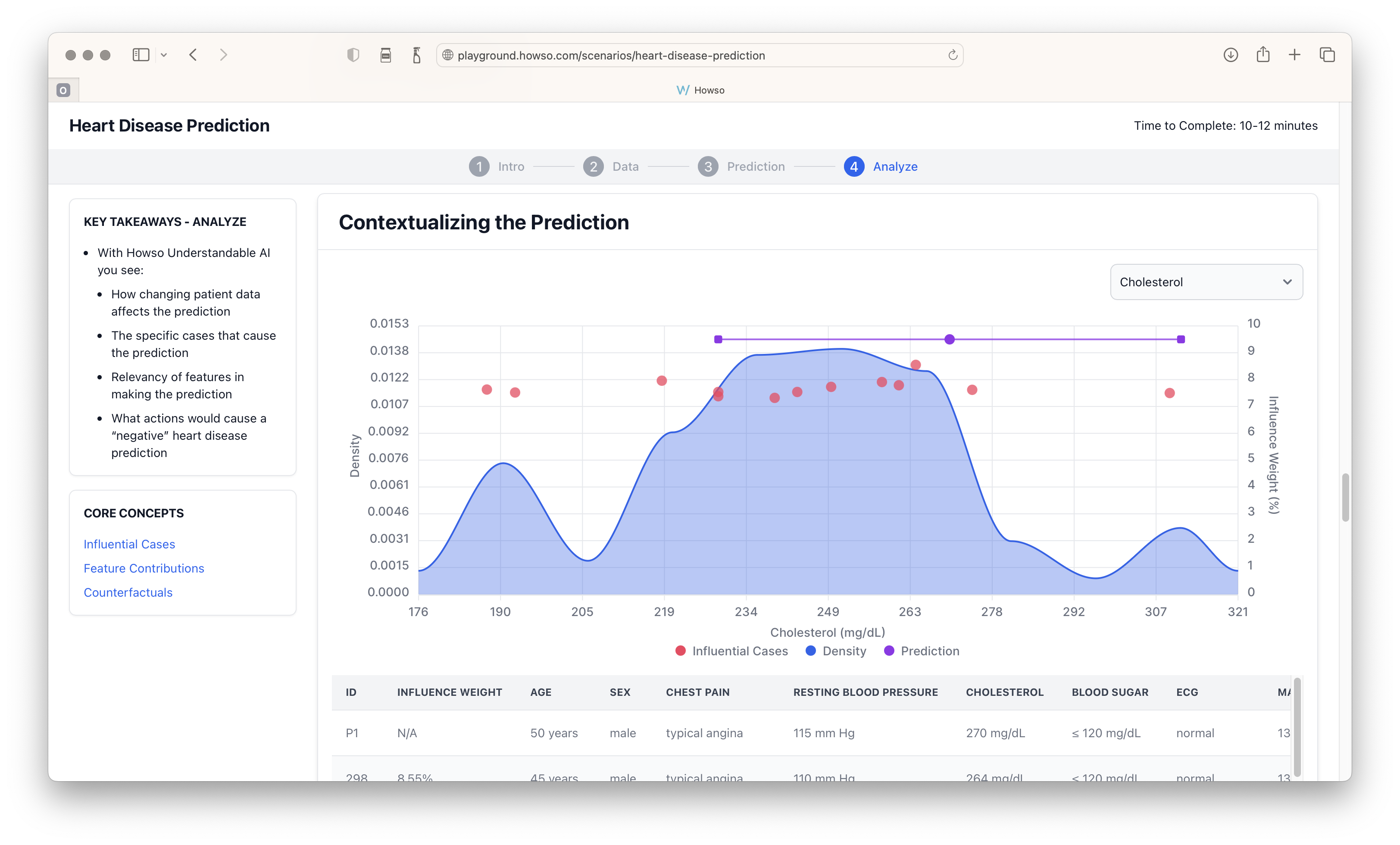

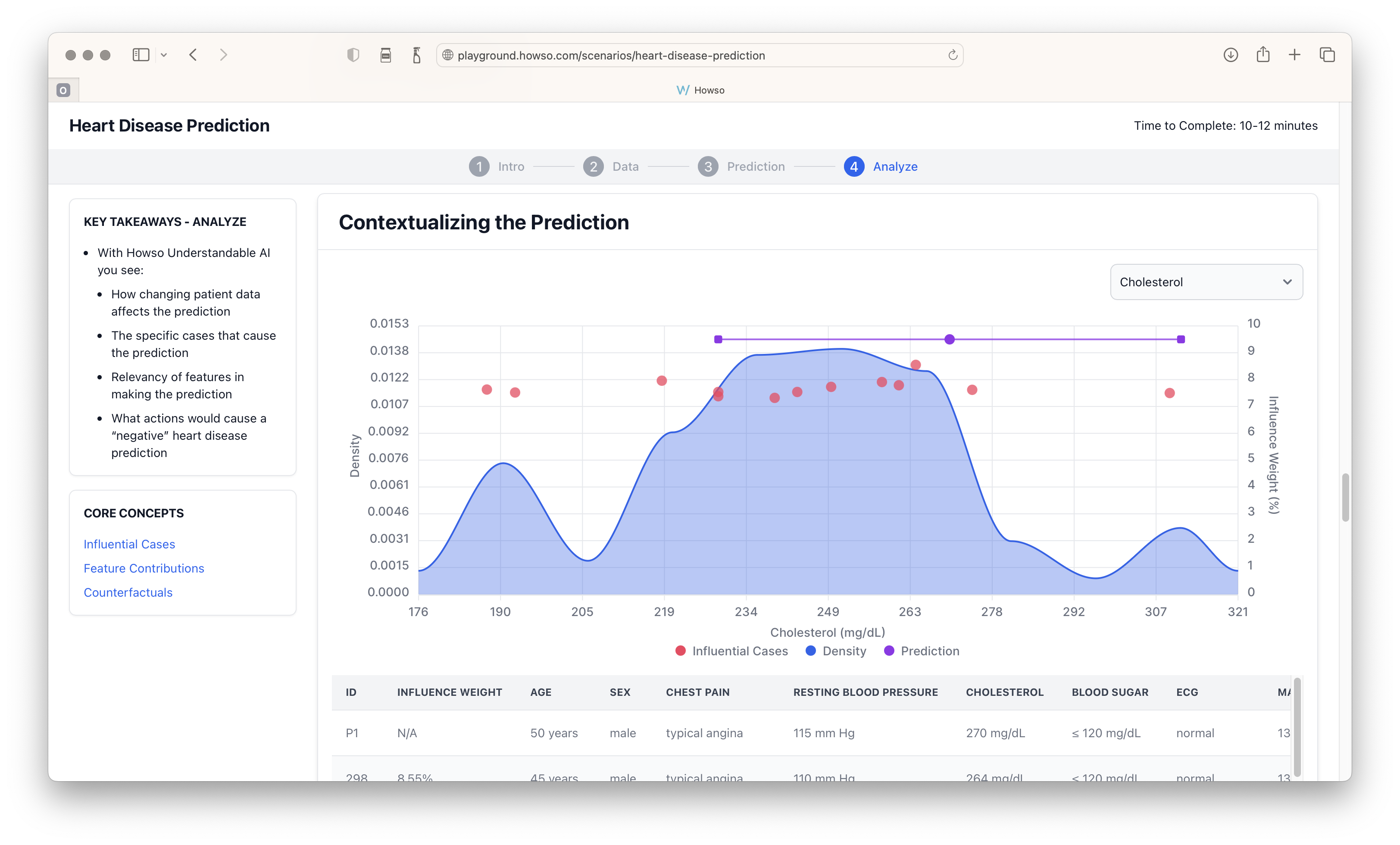

Instance-based learning is a type of machine learning that makes predictions about new data, but instead of generalizing the training data into a fixed model, it stores the training data and, when a new query arrives, searches for the most comparable stored points to make a prediction. As a result, it makes fewer assumptions about the data, which makes its outcomes easier to understand and its decisions easier to trace. Unlike neural networks, IBL's predictions, outcomes and labels can be fully audited and interrogated back to their source data, allowing users to identify and correct mistakes or biases.
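To make the "store the data, then search it" idea concrete, here is a minimal k-nearest-neighbors sketch, the textbook form of instance-based learning. It is not Howso's implementation or API; the `Case` structure, the sample data and the choice of `k` are hypothetical. The point it illustrates is that no model is fitted ahead of time, and every prediction comes back with the IDs of the stored cases that produced it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One stored training instance (hypothetical structure for illustration)."""
    case_id: str
    features: tuple[float, ...]
    label: float


def predict(cases: list[Case], query: tuple[float, ...], k: int = 3) -> tuple[float, list[str]]:
    """k-nearest-neighbors regression: nothing is fitted; stored cases are searched at query time."""
    # Rank every stored case by Euclidean distance to the query point.
    ranked = sorted(cases, key=lambda c: math.dist(c.features, query))
    neighbors = ranked[:k]
    # The prediction is the plain average of the k closest labels.
    prediction = sum(c.label for c in neighbors) / len(neighbors)
    # Return the IDs too, so each prediction can be traced back to its source data.
    return prediction, [c.case_id for c in neighbors]


# Hypothetical training data: five stored cases with two numeric features each.
CASES = [
    Case("a", (1.0, 1.0), 10.0),
    Case("b", (1.5, 1.2), 12.0),
    Case("c", (5.0, 5.0), 50.0),
    Case("d", (1.1, 0.9), 11.0),
    Case("e", (6.0, 5.5), 55.0),
]

if __name__ == "__main__":
    value, sources = predict(CASES, (1.2, 1.0), k=3)
    print(value, sources)  # prints: 11.0 ['d', 'a', 'b']
```

Because the answer is literally an average of three named records, a reviewer who disagrees with it can open those records and see why. Production systems typically add feature scaling and distance weighting, which this sketch leaves out for brevity.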

Hazard likened the lack of explainability in AI to a failure to communicate how a decision was reached, because in the end it's the source data and the choices made by academics and data scientists that lead to the outcomes.

“The thing to me that resonates the most is not so much the books and stories about robots, it’s about decisions where there’s some soothsayer or some process where the ‘why’ wasn’t communicated,” said Hazard. “Like ‘Macbeth who shall not be killed by anyone of woman born,’ or whatever. And well, there’s a piece missing, there was the explanation.”

In Shakespeare's play "Macbeth," the title character believed he couldn't be killed because a prophecy said that no one "of woman born" could harm him. That held until he faced Macduff, who had been delivered by cesarean section and so was not "born" in the traditional sense.

Hazard pointed out that a lot of prophecies in books operate this way: They’re riddles without explanations where the work isn’t shown, and the result is often a downfall or confusion. This is how it feels when working with a black-box AI, he said.

As more and more business and real-world operations come to rely heavily on machine learning and AI models, the safety and trustworthiness of those models continue to be called into question, as do their internal observability and reliability. Predictive models can help drive numerous beneficial outcomes in industries such as healthcare, where they can help predict the onset of heart disease or high blood pressure in different populations. However, AI is also used in banking and insurance, where models could bake in racial bias when setting interest rates or deciding whether to offer a loan.

The explainability natively built into the models themselves would make it possible to audit the decision-making to see where the bias came from and correct it before it begins to hurt users.
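One way such an audit can work with an instance-based model is a leave-one-out influence check. The sketch below is a generic illustration of that technique, assuming a simple nearest-neighbor rate predictor; it is not necessarily how Howso performs audits, and the `LoanCase` fields, rates and group labels are all hypothetical. For a given prediction, it removes each contributing record in turn and measures how far the predicted rate moves, so an outsized contributor stands out.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LoanCase:
    """A historical loan decision (all fields hypothetical, for illustration only)."""
    case_id: str
    features: tuple[float, ...]  # e.g. (income in $1k, debt-to-income %), unscaled for simplicity
    rate: float                  # interest rate that was offered, in percent
    group: str                   # sensitive attribute: reported for auditing, never used in the distance


def predict(cases, query, k=3):
    """Instance-based prediction: average the rates of the k most similar past cases."""
    neighbors = sorted(cases, key=lambda c: math.dist(c.features, query))[:k]
    return sum(c.rate for c in neighbors) / len(neighbors), neighbors


def case_influence(cases, query, k=3):
    """Leave-one-out audit: how far does each contributing record move the predicted rate?"""
    base, neighbors = predict(cases, query, k)
    report = []
    for n in neighbors:
        # Re-run the prediction as if this one record had never been stored.
        remaining = [c for c in cases if c.case_id != n.case_id]
        without, _ = predict(remaining, query, k)
        report.append((n.case_id, n.group, base - without))
    # Records that pushed the rate up the most come first.
    report.sort(key=lambda row: row[2], reverse=True)
    return base, report


# Hypothetical loan history; r3 carries an unusually high rate for its profile.
HISTORY = [
    LoanCase("r1", (50.0, 30.0), 6.0, "A"),
    LoanCase("r2", (52.0, 28.0), 6.2, "A"),
    LoanCase("r3", (51.0, 31.0), 9.0, "B"),
    LoanCase("r4", (90.0, 10.0), 4.0, "A"),
    LoanCase("r5", (48.0, 29.0), 6.1, "B"),
]

if __name__ == "__main__":
    rate, report = case_influence(HISTORY, (50.0, 30.0), k=3)
    print(f"predicted rate: {rate:.2f}%")
    for case_id, group, delta in report:
        print(f"{case_id} (group {group}): {delta:+.2f} points")
```

On this toy data, record r3 raises the predicted rate by roughly 0.93 percentage points, far more than any other neighbor, which points an auditor at one specific historical decision to review or correct. The group label is only reported, so a reviewer can check whether outsized influence clusters in one group.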

Right now, the Howso Engine works primarily with statistical models and structured data, meaning anything that can be put into a database, including time series and graph data. According to Hazard, the company is working toward supporting text as well.

Howso already works with some big names, such as Mastercard Inc. and Duke University Health System, on the transparent use of AI. But Capps said approaching developers by open-sourcing the engine is a totally different thing.

“This is me, it’s a big step. For us, it’s scary to put everything out there,” said Capps. “But the only way we’re ever going to change the world is if we democratize not just explainable AI, but really, really good explainable AI.”

Capps explained that this means Howso will be competing with big companies such as OpenAI LP and Google LLC, which make generative AI chatbots such as ChatGPT and Bard, as well as with other machine learning models. Howso can't get the industry to adopt its AI paradigm, which Capps said is natively explainable and safer, on its own. That will require going to the community.

“We can’t do that by ourselves,” Capps said. “That’s the play, to get everybody to jump on board and help.”

The open-source offering will initially be aimed at data scientists, developers, academics, researchers, students and anyone else interested in building apps based on the Howso Engine's explainable AI model. Starting today, it is available for download from GitHub.