INFRA

INFRA

INFRA

INFRA

INFRA

INFRA

Nvidia Corp.’s upcoming new Blackwell graphics processing units, which have already been delayed to market, are now reportedly encountering overheating issues that prevent their deployment in data center racks.

The report today by The Information says customers have raised serious concerns about the issue, worrying that it will affect their plans for building out their new data center infrastructure for artificial intelligence.

The problem is that the Blackwell GPUs seem to overheat when connected together in data center server racks designed to hold up to 72 chips at once. The Information cited sources familiar with the issue as saying that when the chips are integrated into Nvidia’s customized server racks, they produce excessive heat that can result in operational inefficiencies or even hardware damage.

Nvidia has reportedly told its suppliers to alter the design of their racks several times to try and resolve the overheating problems, but without success. The Information did not name the suppliers involved.

In response to the report, Nvidia downplayed the issue. “Nvidia is working with leading cloud service providers as an integral part of our engineering team and process,” a spokesperson for the company told Reuters today. “The engineering iterations are normal and expected.”

Nvidia first announced Blackwell in March, as the successor to the hugely successful H100 GPUs that are used to power the majority of the world’s AI applications today. They’re said to deliver a 30-times performance boost versus the H100 chips while reducing energy consumption by up to 25% on some workloads.

The company had initially planned to ship the Blackwell chips in the second half of this year, but its plans came unstuck when a design flaw was revealed, causing the launch date to be pushed back to early 2025.

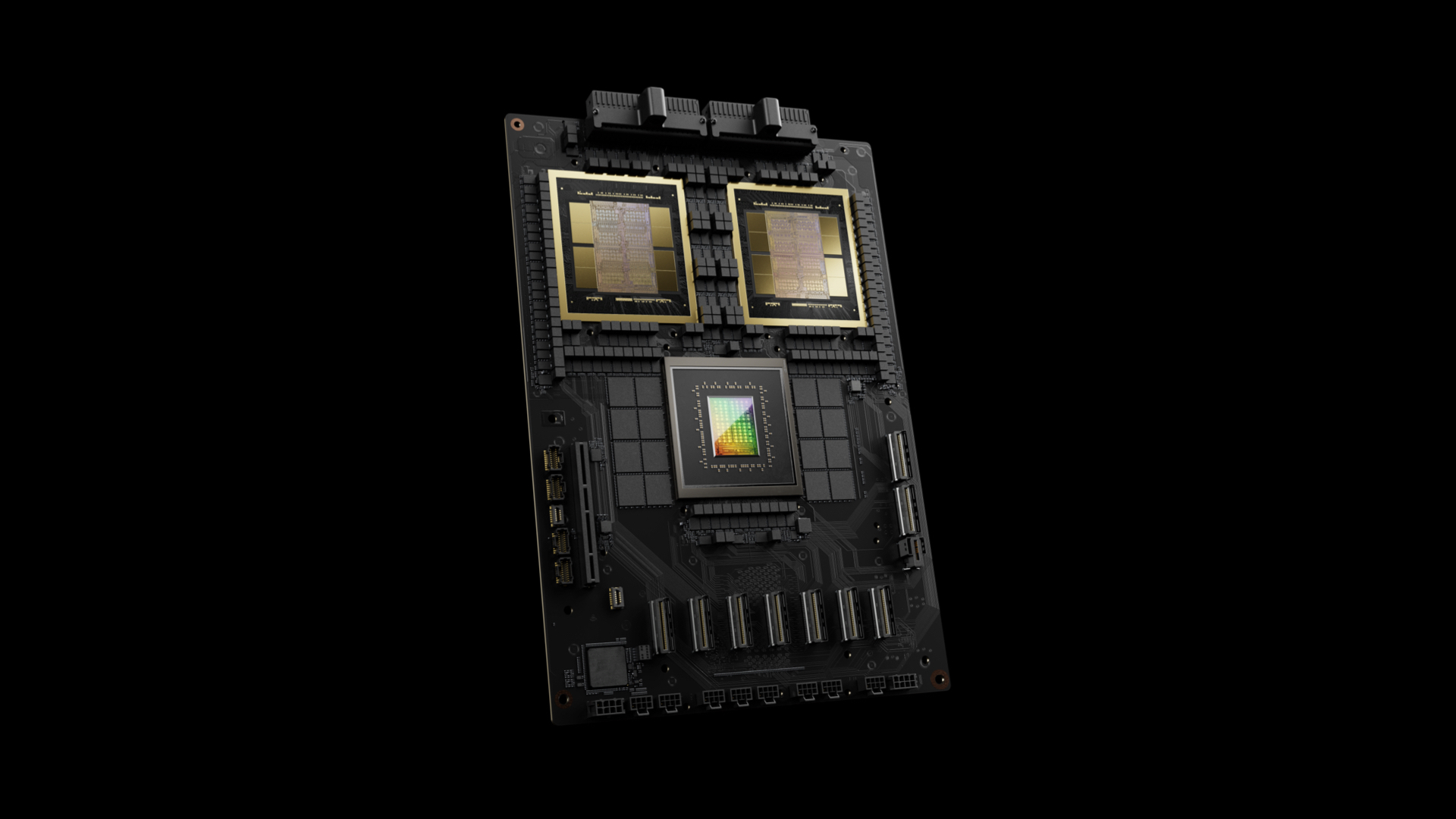

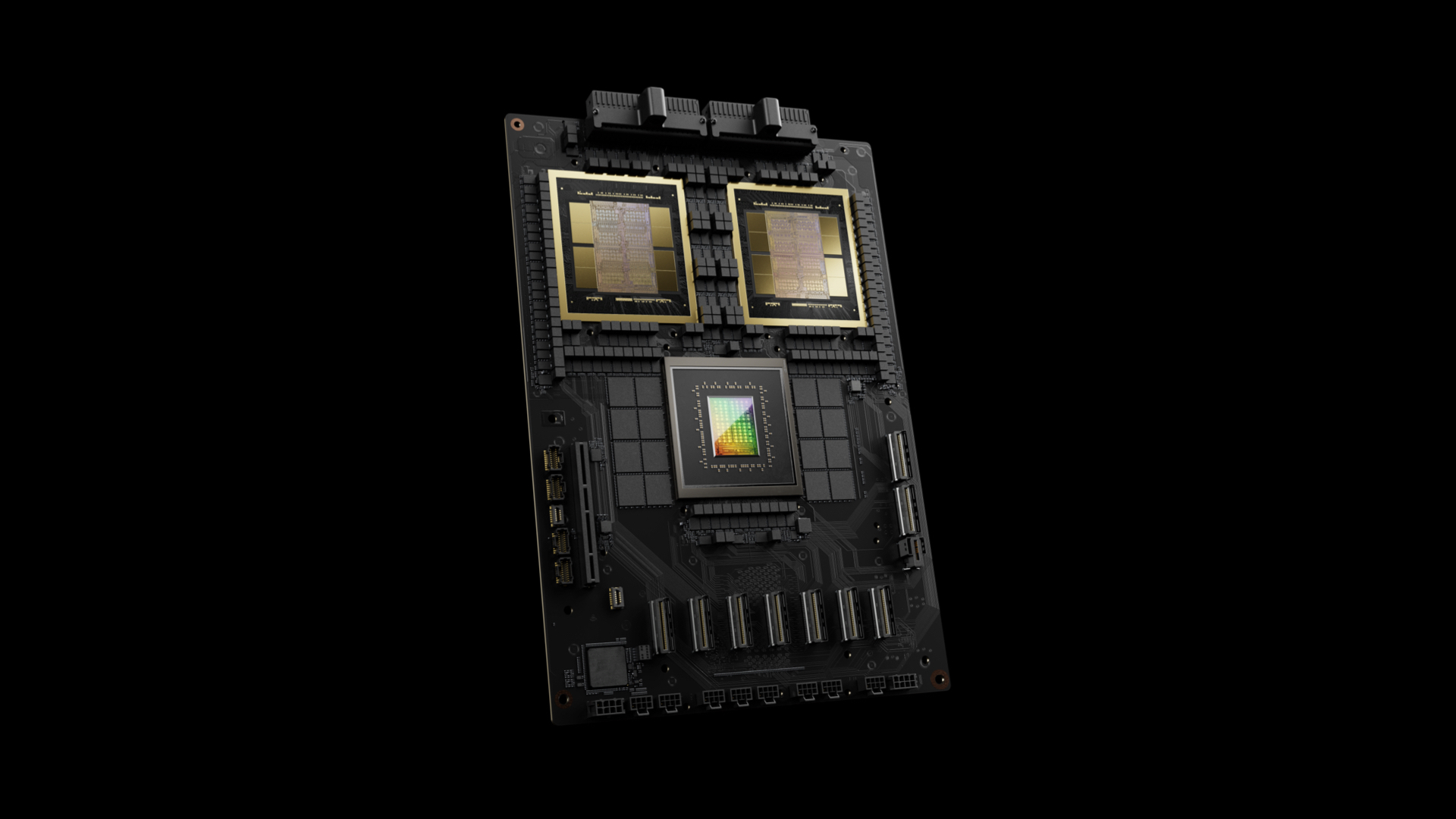

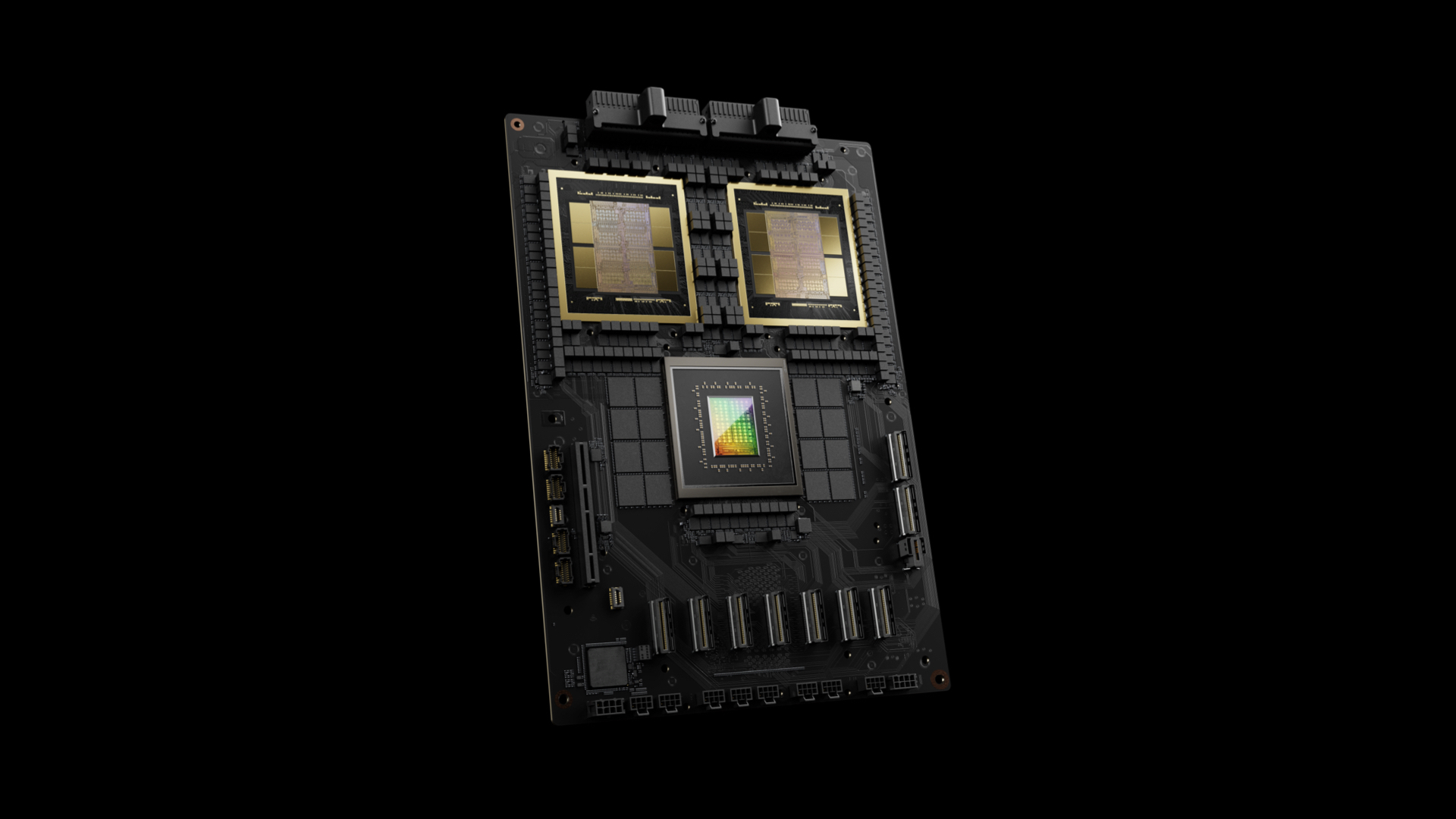

One of the key innovations in Blackwell is that it merges two silicon squares, each the size of the company’s H100 chip, into a single component. This is the crucial advancement that enables the chip to process AI workloads much faster, enabling more rapid data processing.

The original problem reportedly had something to do with the processor die that connects those two silicon squares, but Nvidia Chief Executive Jensen Huang said during a visit to Denmark last month that the issue had been resolved with assistance from its manufacturing partner, Taiwan Semiconductor Manufacturing Co.

It’s not immediately clear if the new overheating issues will affect Blackwell’s new launch date, slated for early next year, but Nvidia has every incentive to ensure that it gets the product just right. The GB200 Grace Blackwell superchips are set to cost up to $70,000 a piece, while a complete server rack is priced at more than $3 million.

Nvidia has previously said it hopes to sell around 60,000 to 70,000 complete servers, so any more delays could be extremely expensive for the company, which has become one of the most valuable publicly traded entities in the world due to its dominance of the AI industry. It’s set to report quarterly earnings results Wednesday.

Analysts had mixed opinions on the report, with Rob Enderle of the Enderle Group telling SiliconANGLE that Nvidia is likely well aware of the issues and has an idea how to fix them.

“We knew Blackwell was going to run hot and that it would likely need water cooling, particularly in dense configurations, because air cooling just doesn’t remove heat effectively enough,” the analyst said. “So far, only Lenovo has really pushed hard on water cooling with its Neptune configurations, which are rolling out to market now, but I expect that every Blackwell server with high densities of chips will need to move to a water-based cooling system in order to prevent overheating.”

Holger Mueller of Constellation Research Inc. said cooling systems are critical for AI platforms as the most powerful accelerator chips run at higher-than-optimal temperatures and will quickly break down if they can’t be cooled, so this could potentially be a significant problem. According to him, Nvidia seems to have admitted there is an issue, but it hasn’t said how much of a problem it really is.

“The questions are how expensive will this be to fix, and how long will it take?” Mueller said. “Blackwell is by far the most compelling platform for generative AI, so customers really have no choice but to sit tight and wait for a fix. The duration of that wait will determine if there’s any impact on Nvidia’s sky-high stock price.”

The analyst said we might get some clues as to the scale of the problem later this week, as Nvidia is due to announce its latest quarterly financial results in the coming days. No doubt, Huang will be asked about the issue, but we can only wait to see how forthcoming he will be.

“The first indicator of the size of the problem might be the impact on Nvidia’s guidance for the next quarter, so analysts, customers and investors will be watching that metric carefully,” Mueller said.

For customers, the main fear is that any delay would affect their data center infrastructure deployment plans and potentially hurt their ability to develop more advanced AI models and applications.

Support our mission to keep content open and free by engaging with theCUBE community. Join theCUBE’s Alumni Trust Network, where technology leaders connect, share intelligence and create opportunities.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.