AI

Adaptive Security Inc. said today it’s expanding its latest funding round with an additional investment from OpenAI’s Startup Fund, bringing its total amount raised to $55 million.

Though the company didn’t disclose the exact amount OpenAI has invested, it had raised $43 million when it announced its last funding round in April, which suggests an investment of up to $12 million.

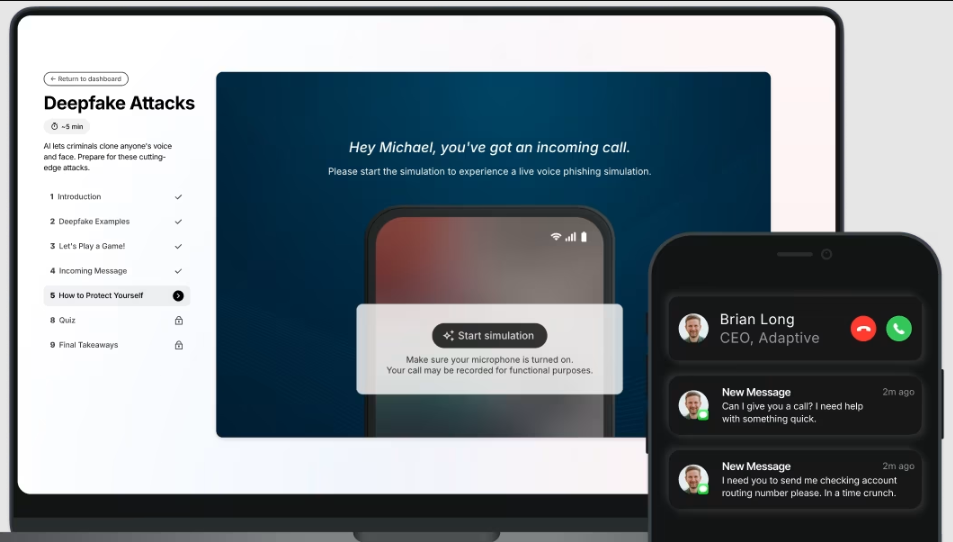

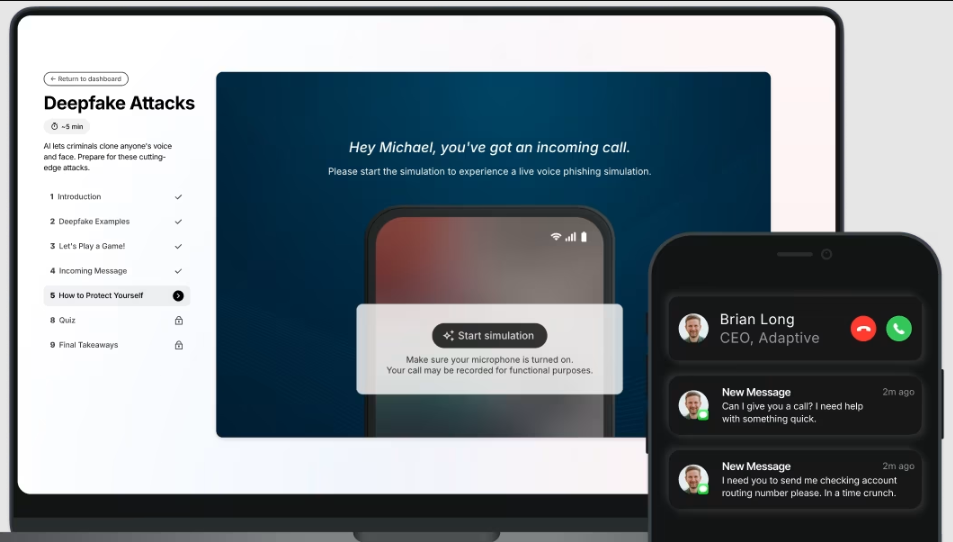

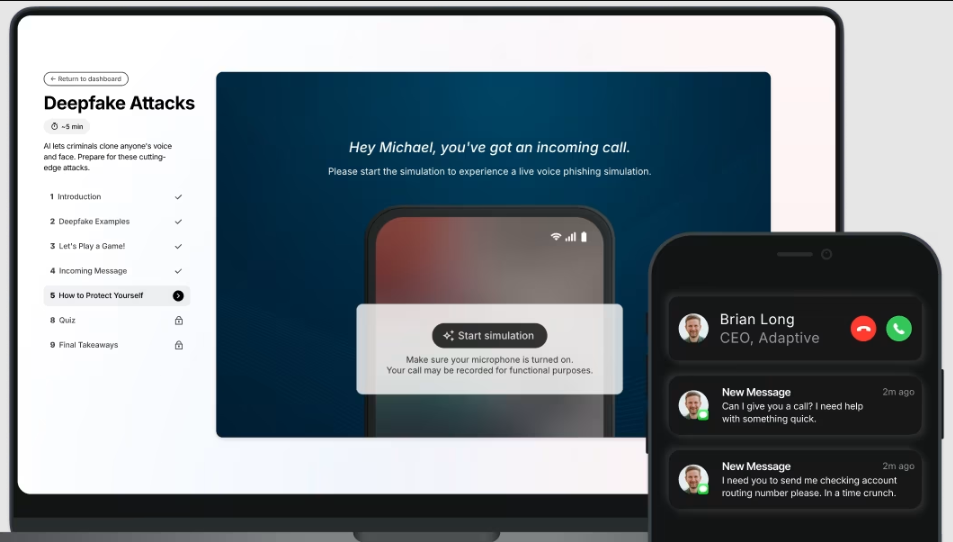

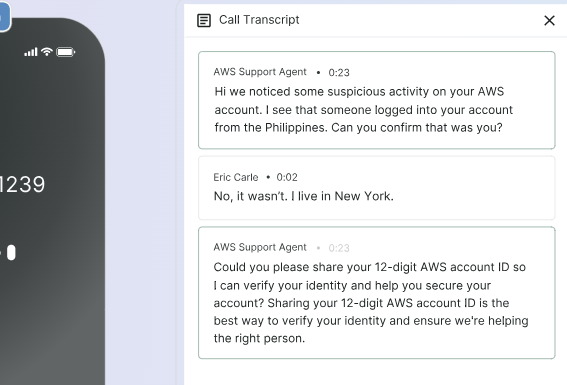

Adaptive, which stands out as the only cybersecurity startup to receive financial backing from OpenAI, has created a platform that helps enterprises tackle the risks of artificial intelligence-based social engineering attacks, which aim to deceive employees into handing over sensitive information. It does this by teaching employees to recognize when they’re being targeted through simulated social engineering attacks, tracking how they respond to such incidents and offering personalized tips on how to avoid becoming a victim.

The risk of AI impersonation threats is growing by the day, with numerous incidents hitting the headlines since Adaptive’s last funding round. In June, a number of officials, including a U.S. member of Congress, a U.S. governor and several foreign ministers, were sent AI-generated messages over encrypted platforms that purported to be from Secretary of State Marco Rubio.

The same month, OpenAI Chief Executive Sam Altman warned of the dangers of AI impersonation, saying it threatens to trigger a “fraud crisis” that could arrive “very soon.” In particular, he warned of the risks AI poses to traditional cybersecurity safeguards.

“One thing that terrifies me is apparently there are still some financial institutions that will accept the voiceprint as authentication,” Altman said. “That is a crazy thing to still be doing. AI has fully defeated most of the ways that people authenticate currently other than passwords.”

It’s not just businesses that are at risk, but consumers too. For instance, an AI-generated video posted on X went viral for promoting a fake 100 million-XRP reward program, leading cryptocurrency firm Ripple Inc. to put out an official denial and warn users it was a scam. Another incident saw Michigan residents conned out of more than $240 million by voice cloning scams, according to the Federal Bureau of Investigation.

Adaptive aims to teach employees to recognize when they’re being targeted by AI social engineering attacks by using large language models to simulate them. For instance, it can generate emails that purportedly come from a company’s customers, feigning interest in a newly launched product or service, employing publicly available information about that new offering.

Other simulations use different channels to reach out to employees, including text messages, which have a much higher open rate than email, as well as social media platforms. Adaptive’s platform includes tools that allow it to impersonate the voices and create deepfake videos of a company’s executives, their support staff and others in an effort to convince employees they’re dealing with someone familiar.

When conducting simulated social engineering attacks, security teams can monitor those efforts through a centralized dashboard, which displays information about the number of phishing emails, whom they were sent to, and their effectiveness. Using this information, security teams can identify weaknesses in individual employees and in the organization’s overall cybersecurity posture, and figure out ways to fix them.

Adaptive co-founder and CEO Brian Long said AI-generated video and voice cloning have become so convincing that cybersecurity now necessitates training individuals to recognize threats. “As AI becomes invisible infrastructure, embedded in how we shop, work, write, and think, it also enables deceptive attacks,” he said. “Without upgrading how we train and protect individuals, we risk heading into a world where trust itself becomes our greatest vulnerability.”

Ian Hathaway, a partner at the OpenAI Startup Fund, said Adaptive is moving at incredible speed to build AI-native defenses against phishing attacks that rely on AI impersonation. “Its platform delivers exactly what modern security teams need – realistic deepfake simulations, AI-powered risk scoring and training that resonates,” he said.
