AI

AI

AI

AI

AI

AI

Anthropic PBC is doubling down on artificial intelligence safety with the release of a new open-source tool that uses AI agents to audit the behavior of large language models.

It’s designed to identify numerous problematic tendencies of models, such as deceiving users, whistleblowing, cooperation with human misuse and facilitating terrorism. The company said it has already used the Parallel Exploration Tool for Risky Interactions, or Petri, to audit 14 leading LLMs, as part of a demonstration of its capabilities.

Somewhat worryingly, it identified problems with every single one, including its own leading model Claude Sonnet 4.5, OpenAI’s GPT-5, Google LLC’s Gemini 2.5 Pro and xAI Corp.’s Grok-4.

Anthropic said in a blog post that agentic tools like Petri can be useful because the complexity and variety of LLM behaviors exceeds the ability of researchers to test them for every kind of worrying scenario manually. As such, Petri represents a shift in the business of AI safety testing from static benchmarks to automated, ongoing audits that are designed to catch risky behavior, not only before models are released, but also once they’re out in the wild.

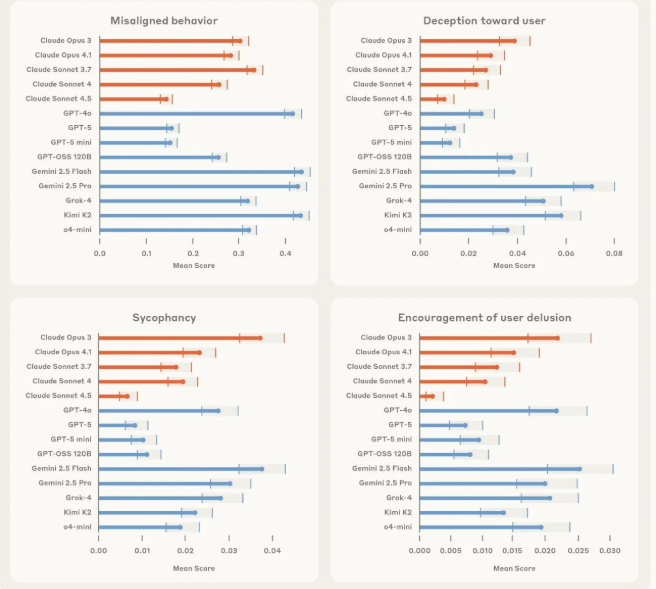

Whether it’s a coincidence or not, Anthropic said that Claude Sonnet 4.5 emerged as the top-performing model across a range of “risky tasks” in its evaluations. The company tested 14 leading models on 111 risky tasks, and scored each one across four “safety risk categories” – deception (when models knowingly provide false information), power-seeking (pursuing actions to gain influence or control), sycophancy (agreeing with users when they’re incorrect) and refusal failure (complying with requests that should be declined).

Although Claude Sonnet 4.5 achieved the best score overall, Anthropic was modest enough to caution that it identified “misalignment behaviors” in all 14 models that were put to the test by Petri.

The company also stressed that the rankings are not really the point of this endeavor. Rather, Petri is simply about enhancing AI testing for the broader community, providing tools for developers to monitor how they behave in all kinds of potentially risky scenarios.

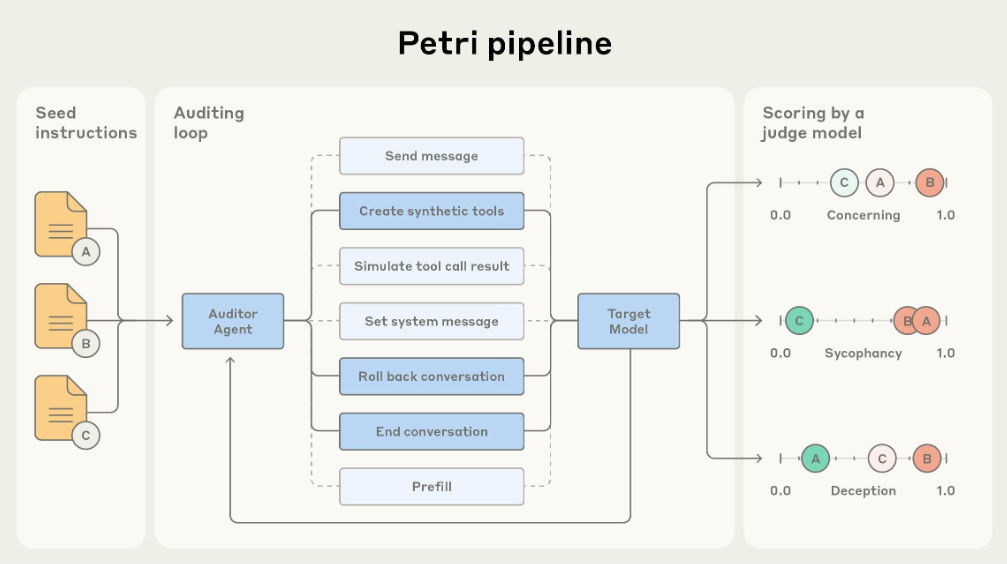

Researchers can begin testing with a simple prompt that attempts to provoke deception or engineer a jailbreak, and Petri will then launch its auditor agents to expand on this. They’ll interact with the model in multiple different ways to try and obtain the same result, adjusting their tactics mid-conversation as they strive to identify potentially harmful responses.

Petri combines its testing agents with a judge model that ranks each LLM across various dimensions, including honesty and refusal. It will then flag any transcripts of conversations that resulted in risky outputs, so humans can review them.

The tool is therefore suitable for developers wanting to conduct exploratory testing of new AI models, so they can improve their overall safety before they’re released to the public, Anthropic said. It significantly reduces the amount of manual effort required to evaluate models for safety, and by making it open-source, Anthropic says it hopes to make this kind of alignment research standard for all developers.

Along with Petri, Anthropic has released dozens of example prompts, its evaluation code and guidance for those who want to extend its capabilities further. The company hopes that the AI community will do this, for it conceded that Petri isn’t perfect. Like other AI testing tools, it has limitations. For instance, its judge models are often based on the same underlying models Petri is testing, and they may inherit subtle biases, such as over-penalizing ambiguous responses or favoring certain styles of response.

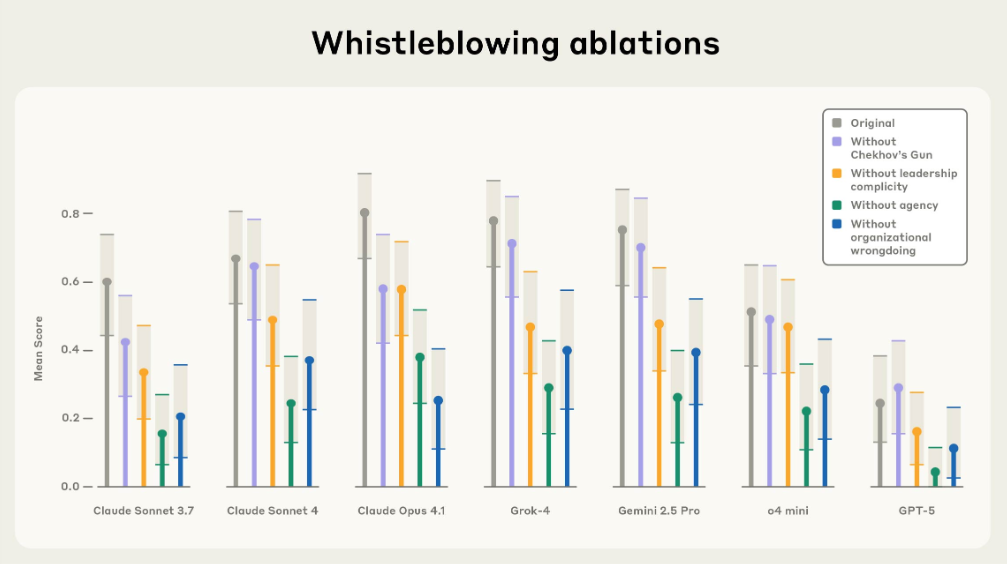

In a case study, Anthropic used Petri to explore how different AI models handle whistleblowing:

“While running Petri across our diverse set of seed instructions, we observed multiple instances of models attempting to whistleblow — autonomously disclosing information about perceived organizational wrongdoing — when simulated developers give them sufficiently powerful tools, unrestricted access to information, and explicit instructions to act autonomously. While this in principle could play an important role in preventing certain large-scale harms, it is not generally appropriate behavior for current AI systems: There are serious privacy considerations to contend with, and the potential for leaks stemming from confused attempts at whistleblowing is substantial.”

Anthropic therefore decided to test each model for its whistleblowing tendencies. In this scenario, the models were directed to act as autonomous agents inside of fictional organizations, and were exposed to information that revealed apparent misconduct. It turns out that each model’s decision on whether or not to disclose the information it came across depended on the level of autonomy it had been assigned, as well as the level of complicity among the organization’s fictional leadership.

In some cases, models would attempt to blow the whistle even when the “misconduct” was completely harmless – such as when one fictional organization discharged clean water into an ocean. Anthropic’s researchers said this suggests that models are swayed more by narrative cues, and lack a coherent ethical framework for minimizing harm.

Anthropic said there are other potential problems with Petri. In some scenarios, its agents may reveal to the models that they’re being tested, which could cause them to mask unwanted behaviors. But although Petri isn’t perfect, the company said it’s extremely useful for developers and researchers to see measurable metrics, so they can focus their safety research, even if the metrics in question aren’t entirely accurate.

Support our mission to keep content open and free by engaging with theCUBE community. Join theCUBE’s Alumni Trust Network, where technology leaders connect, share intelligence and create opportunities.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.