NEWS

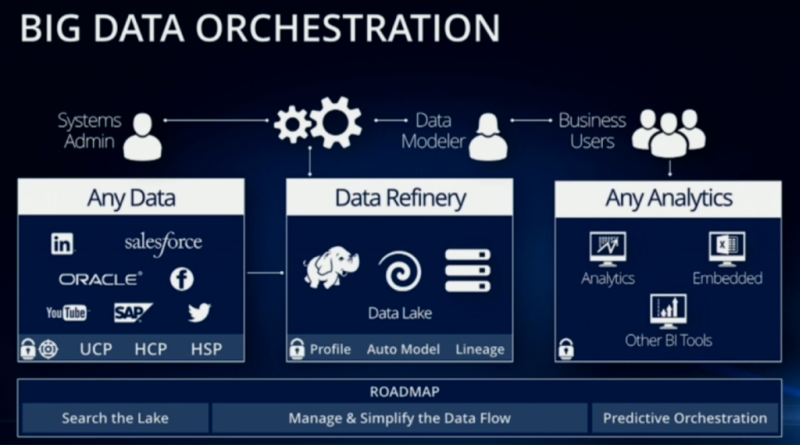

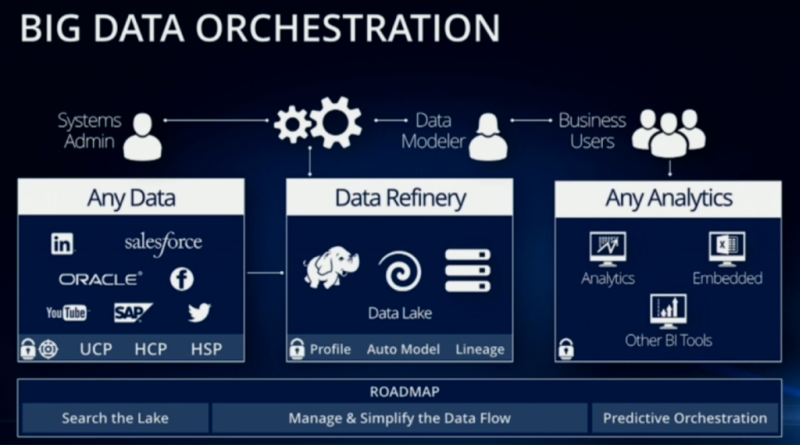

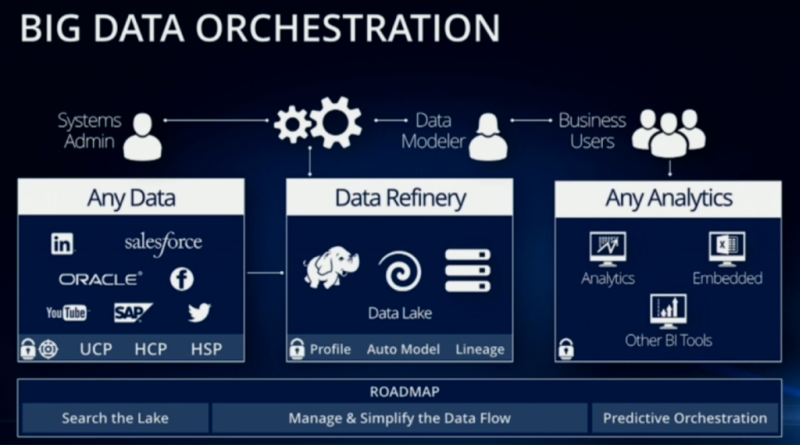

Pentaho Corp.’s ambition is to orchestrate the analytic data pipeline end to end, writes Wikibon Big Data & Analytics Analyst George Gilbert in “Systems of Intelligence Through Pentaho’s Lens.” Judging from PentahoWorld last month, the company is making steady progress toward that goal, which “fits nicely into Wikibon’s Systems of Intelligence theme,” Gilbert writes.

The company is disciplined about its message of “governed data delivery.” It is steadily building a platform that can blend, enrich and deliver data from multiple sources and support its analysis: initially the data warehouse, Hadoop and Storm and, looking forward, other streaming data sources. At the conference it announced version 6.0, which focuses on integrating the data lake and the data warehouse. The release creates and maintains a virtual table at each transformation step, which it can share with other applications as part of the pipeline orchestration. Version 6.0 also delivers enterprise-hardened data integration and analysis, including better cluster support, authentication and authorization. Pentaho has announced plans to integrate Apache Spark in a future release.

Systems of Record have a well-established pipeline architecture, with operational and analytic databases linked by traditional extract/transform/load (ETL) tools. Customers and vendors are still working out exactly what that architecture will look like for Systems of Intelligence built on Big and Fast Data, Gilbert writes. Organizations that want to combine data integration with analytic data feeds, and orchestrate the whole process, should follow Pentaho’s progress as it builds what may become an end-to-end platform.

Pentaho Chief Marketing Officer Rosanne Saccone joined Gilbert and Wikibon Co-Founder David Vellante on theCUBE at PentahoWorld 2015 in Orlando last month (17:20).

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.