NEWS

Amazon Inc. is apparently not the only web giant that designs its own processors. At its annual developer summit this week, Alphabet Inc. revealed a homegrown server chip that can supposedly run machine learning algorithms ten times faster than publicly available alternatives.

This performance advantage is the result of a secretive development effort that began about two years ago, shortly before Amazon’s own entry into the semiconductor world with the $350 million acquisition of Annapurna Labs Ltd. Alphabet tried to keep the project under wraps, but the world found out in October when the company was caught recruiting chip designers for a then-unspecified internal initiative. In a new blog post, the search giant reveals that it has since started mass-producing the chip and now has thousands of units deployed throughout its data centers.

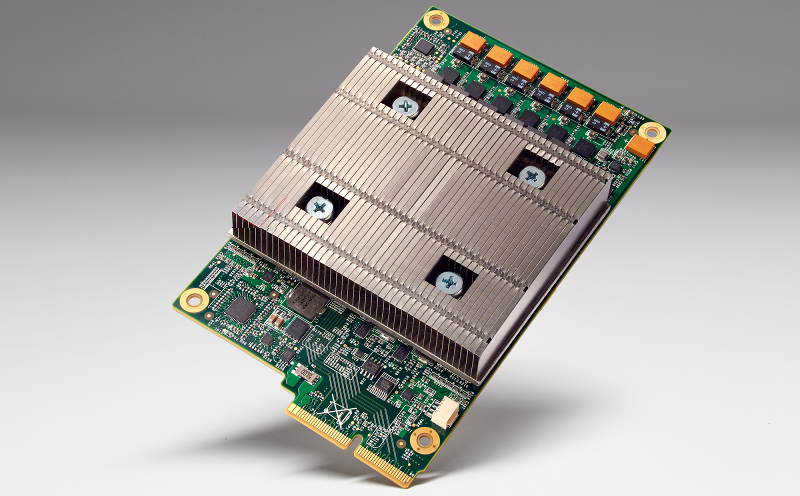

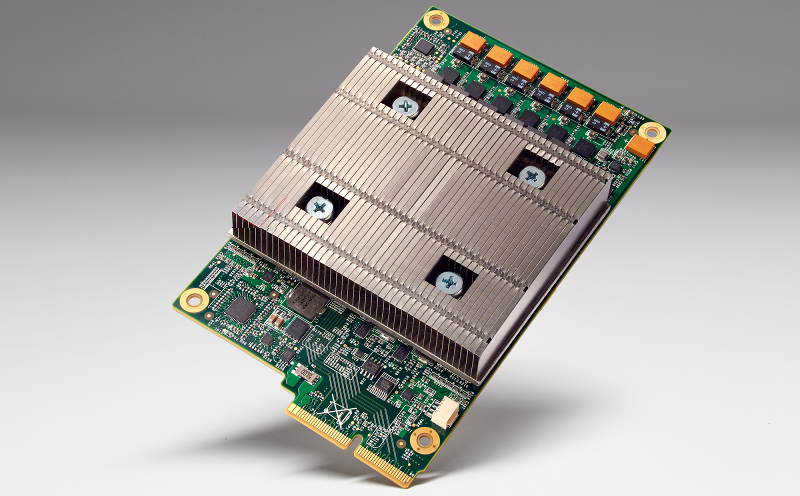

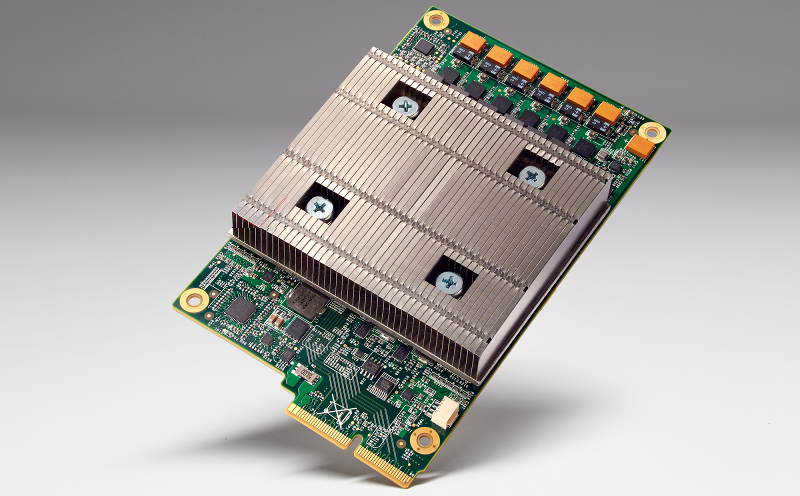

The Tensor Processing Unit, as the chip is called, is a custom ASIC built to fit inside the existing storage slots of Alphabet’s likewise internally designed server racks. It’s sourced from a third-party supplier and programmed with special instructions that boost its performance by sacrificing some precision in its calculations. As a result, the company claims the processor not only runs machine learning workloads faster but also consumes less power than a conventional server accelerator.
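Alphabet hasn’t said exactly which number formats the chip uses, but the general trade-off of giving up precision for speed can be illustrated with a common technique: quantizing floating-point values to 8-bit integers, doing the multiply-accumulate work in integer arithmetic, and rescaling the result. The sketch below is a hypothetical illustration of symmetric 8-bit quantization under that assumption, not a description of the TPU’s actual design; the function names and sample values are made up for the example.

```python
# Hypothetical illustration of reduced-precision arithmetic (symmetric 8-bit
# quantization). This is NOT the TPU's documented design, just a generic sketch.

def quantize(values, num_bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8-bit signed values
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / qmax
    # Round to the nearest integer and clamp to the representable range
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def int_dot(qa, qb):
    """Integer multiply-accumulate, far cheaper in silicon than float math."""
    return sum(x * y for x, y in zip(qa, qb))

def quantized_dot(a, b, num_bits=8):
    """Approximate a float dot product using low-precision integers."""
    qa, sa = quantize(a, num_bits)
    qb, sb = quantize(b, num_bits)
    # Rescale the integer result back into the original floating-point range
    return int_dot(qa, qb) * sa * sb

weights = [0.42, -1.37, 0.05, 0.91]   # made-up example values
inputs = [1.20, 0.33, -0.77, 2.05]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"full-precision result: {exact:.4f}")
print(f"8-bit approximation:   {approx:.4f}")
print(f"absolute error:        {abs(exact - approx):.4f}")
```

The 8-bit result lands within a fraction of a percent of the full-precision one, which is often tolerable for neural network inference, while each multiply operates on much narrower numbers.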

Alphabet estimates that the semiconductor industry would need to go through roughly three more generations of Moore’s law, or about seven years, to organically match the speed of the Tensor Processing Unit. But given how chip makers are finding it increasingly difficult to improve the density of their processors as they move down the nanometer scale, it will likely take much longer than that in practice. Fortunately, the search giant doesn’t plan on keeping the machine learning ecosystem waiting another decade.

Alphabet’s head of infrastructure, Urs Hölzle, revealed at the developer event that the company will publish a paper detailing the innovations in the Tensor Processing Unit this fall. The goal is presumably to let chip makers incorporate the technology into their designs and thus make it available to the broader machine learning community. Of course, the document may contain only partial specifications, given the importance of machine learning to the search giant’s business and the massive investment required to develop a custom processor. But the move should nonetheless be a major boon for artificial intelligence projects in the years to come.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.