NEWS

Alphabet Inc.’s artificial intelligence research has made headlines several times over the last few years, for developments such as AlphaGo’s surprise victory over a human Go grandmaster. Now, the parent company of Google says it has made major strides in the field of computer vision, with its image recognition program Inception V3 now capable of identifying the objects in a photo with 93.9 percent accuracy.

In a recent post on Google’s research blog, Chris Shallue, a software engineer on the Google Brain Team, outlined some of the improvements that have been made to Google’s image recognition program, including a new “fine-tuning” step that gives Inception V3 the ability to describe pictures in greater detail.

“This step addresses the problem that the image encoder is initialized by a model trained to classify objects in images, whereas the goal of the captioning system is to describe the objects in images using the encodings produced by the image model,” Shallue explained. “For example, an image classification model will tell you that a dog, grass and a frisbee are in the image, but a natural description should also tell you the color of the grass and how the dog relates to the frisbee.”

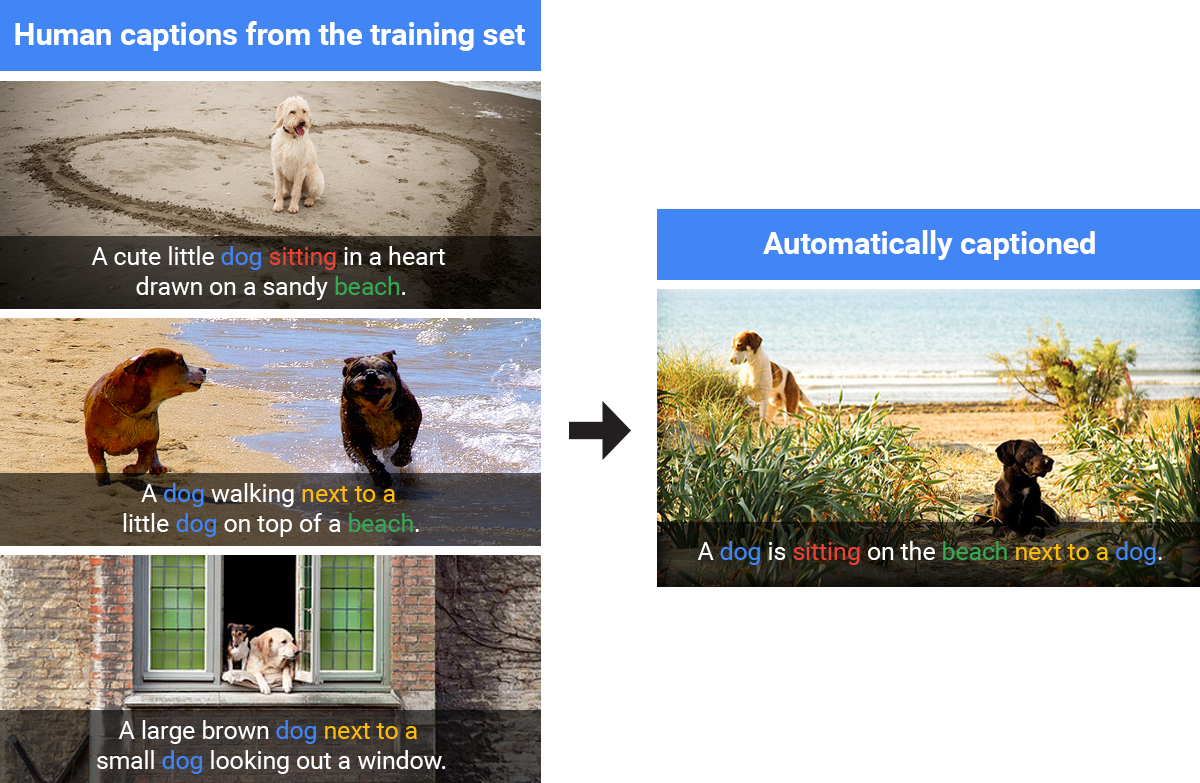

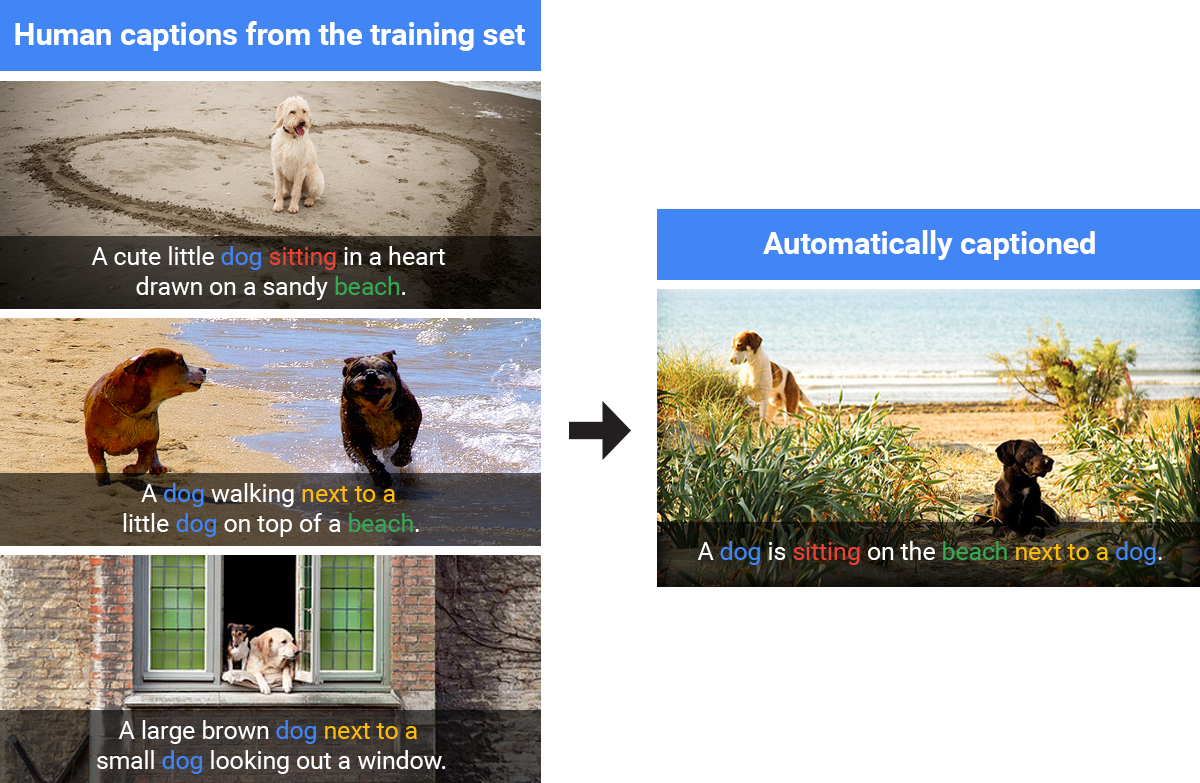

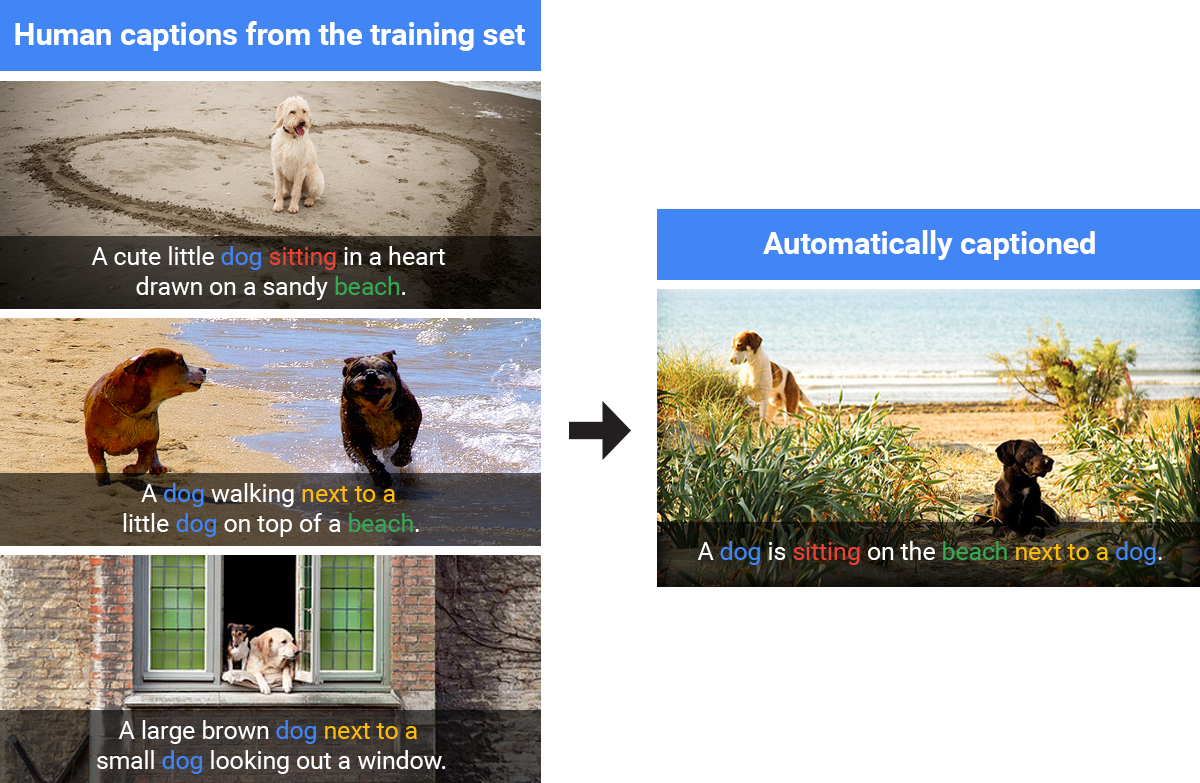

According to Shallue, the research team trained the new fine-tuning step by feeding the AI human-generated captions for images, which included descriptions like “a man riding a wave on top of a surfboard.” Through machine learning, the AI gradually learned these more intricate relationships, enabling it not only to recognize objects in an image, but also to describe their specific features and their relationship to one another in the photo.

Thanks to this training, Inception V3 is now able to generate fairly complex descriptions that go beyond simply listing what is in a picture and actually describe what is happening in it, an important distinction when it comes to complicated computer vision tasks.

Google’s image captioning technology is now available through its open-source machine learning library, TensorFlow, and Shallue said the company hopes this move will allow the AI community to push computer vision and image captioning research forward.

Computer vision has many more uses besides labeling photos for better image search or auto-tagging your friends on Facebook.

For example, one of the hottest subjects in computer vision research today is its use in self-driving vehicles. While autonomous vehicles have a wide range of sensors, including both radar and LIDAR, computer vision is what allows the car’s computer to distinguish between pedestrians, other cars, curbs and any number of other things you might see on the road.

Computer vision is also used in a number of more creative ways, such as measuring foot traffic for use in urban planning. In manufacturing, computer vision can be used for quality control, checking for product defects and other problems.
