CLOUD

As Google Inc. aims to catch up in cloud computing with leaders Amazon Web Services and Microsoft Azure, it’s doubling down on what is arguably its biggest technology advantage: the branch of artificial intelligence called machine learning.

Today the search giant announced a series of new machine learning services and features available through its cloud service. It also revealed new hardware capacity on Google Cloud built on graphics processing units from Nvidia Corp. and Advanced Micro Devices Inc. Those chips power much of the recent work in machine learning, which allows computers to learn without being explicitly programmed.

Google also announced the formation of a new machine learning unit inside its cloud operation that's intended to develop services for specific business use cases. The unit will be headed by two heavyweight machine learning researchers: Fei-Fei Li, director of Stanford University's Artificial Intelligence Lab and the Stanford Vision Lab, and Jia Li, head of research at Snap Inc. (formerly Snapchat Inc.).

All the announcements are aimed at large corporate enterprises that know they need the machine learning capabilities that have driven recent advances in consumer services such as language translation, speech recognition and image recognition, but want a much faster and simpler way to apply them to their businesses. To date, using machine learning has required deep expertise most companies don’t have.

Diane Greene, Google's senior vice president in charge of its cloud and enterprise businesses, said at the company's "machine learning day" in San Francisco that the company wants to democratize machine learning and AI, making them easy for any company to use and, not least, giving enterprises more reasons to use Google's cloud. "Any company in the world would be remiss not to have a footprint in the Google Cloud," Greene said.

Google's machine learning work dates back close to a decade, but Chief Executive Sundar Pichai has made it central to nearly every product and service at the company, from search to Google Photos to targeted advertising. Today, the company focused on a series of application programming interfaces that make it easier to apply machine learning through Google's cloud to specific tasks such as vision, natural language interfaces and language translation.

The vision API now runs on Google's own Tensor Processing Unit chips, which Google said put it about three or four years ahead of GPUs in price-performance. The API also got a price cut of up to 80 percent for large deployments.

The natural language API, previously in a limited release, is now available for general use with new features such as finer-grained sentiment analysis that scores individual sentences rather than only the entire document, and better recognition of company- or industry-specific words and phrases. And the language translation API now has a "premium" version that can better handle tasks such as translation of websites, video streams and articles.
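To see why sentence-level scoring matters, consider a review that praises one thing and pans another: averaged over the whole document it can look neutral. The Python sketch below illustrates that effect using simple lexicon-based scoring, a much cruder technique than whatever model Google's API uses. The word list, weights and the naive sentence splitter are all hypothetical, and none of this reflects the Cloud Natural Language API's actual interface.

```python
import re

# Hypothetical toy lexicon; a real service would use a trained model instead.
LEXICON = {"great": 1.0, "love": 1.0, "fast": 0.5, "slow": -0.5, "terrible": -1.0, "broken": -1.0}

def score(text: str) -> float:
    """Average lexicon score of words in `text` found in LEXICON (0.0 if none)."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[w] for w in words if w in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0

def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def sentiment_by_sentence(text: str) -> list[tuple[str, float]]:
    """Score each sentence separately instead of the document as a whole."""
    return [(s, score(s)) for s in split_sentences(text)]

review = "I love the new dashboard. Checkout is terrible."
print(f"document: {score(review):+.2f}")
for sentence, s in sentiment_by_sentence(review):
    print(f"{s:+.2f}  {sentence}")
```

Here the document as a whole scores 0.00, while the per-sentence view shows one strongly positive and one strongly negative sentence, which is the kind of detail a business would want to surface.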

Translation in particular uses a new "neural machine translation" model that reduces error rates by 55 percent to 85 percent compared with the existing API. "This launch brings us closer to our vision of a universal language translator," said Google Vice President and Engineering Fellow Fernando Pereira.

The company clearly aims to spread machine learning into customers’ operations as much as it has into its own. To that end, it provided a detailed demonstration of a cloud API for finding jobs and job candidates, a rather narrow task that Google suggested was just the start of a series of still-undefined APIs for many other specific business and industry uses.

The Cloud Jobs API is still in early alpha testing, but it's being used by FedEx with its software partner Jibe as well as by job boards CareerBuilder and Dice. Essentially, it uses machine learning to match job titles to the jobs people are looking for, as well as to their skills, location and seniority. "We were making it really hard for job applicants to find the job they're looking for," said Judy Edge, FedEx's corporate vice president of human resources. Using the jobs API helped FedEx process thousands more applications a day leading up to its holiday crunch time.
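Google didn't describe how the Cloud Jobs API works internally. As a rough illustration of the matching idea only, using the attributes named above (title, skills, location and seniority), here is a hand-weighted scoring sketch in Python. It is not machine learning and not the Cloud Jobs API: the synonym table, the weights and the 1-to-5 seniority scale are all hypothetical, whereas a learned system would infer title equivalences and weights from data.

```python
from dataclasses import dataclass

@dataclass
class Job:
    title: str
    skills: set[str]
    location: str
    seniority: int  # hypothetical scale: 1 = entry level ... 5 = executive

@dataclass
class Candidate:
    desired_title: str
    skills: set[str]
    location: str
    seniority: int

# Hypothetical synonym table mapping title variants to one canonical title.
# This is the part an ML system would learn rather than hard-code.
TITLE_SYNONYMS = {
    "package handler": "material handler",
    "warehouse associate": "material handler",
    "swe": "software engineer",
}

def normalize_title(title: str) -> str:
    t = title.strip().lower()
    return TITLE_SYNONYMS.get(t, t)

def match_score(c: Candidate, j: Job) -> float:
    """Weighted sum of four signals, each in [0, 1]; weights are hand-picked."""
    title = 1.0 if normalize_title(c.desired_title) == normalize_title(j.title) else 0.0
    skills = len(c.skills & j.skills) / len(j.skills) if j.skills else 0.0
    location = 1.0 if c.location == j.location else 0.0
    seniority = max(0.0, 1.0 - abs(c.seniority - j.seniority) / 4)
    return 0.4 * title + 0.3 * skills + 0.2 * location + 0.1 * seniority

def rank_jobs(c: Candidate, jobs: list[Job]) -> list[Job]:
    """Return jobs ordered from best to worst match for the candidate."""
    return sorted(jobs, key=lambda j: match_score(c, j), reverse=True)

applicant = Candidate("Package Handler", {"forklift", "scanning"}, "Memphis", 1)
openings = [
    Job("Software Engineer", {"python"}, "Austin", 3),
    Job("Warehouse Associate", {"forklift", "scanning"}, "Memphis", 1),
]
for job in rank_jobs(applicant, openings):
    print(f"{match_score(applicant, job):.2f}  {job.title}")
```

The synonym table captures the problem Edge described: an applicant searching for "package handler" never sees a posting titled "warehouse associate" unless something maps the two titles together.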

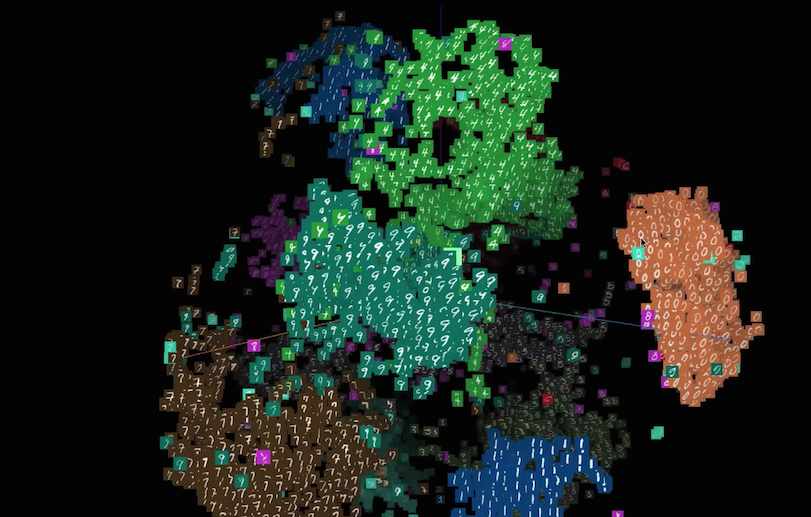

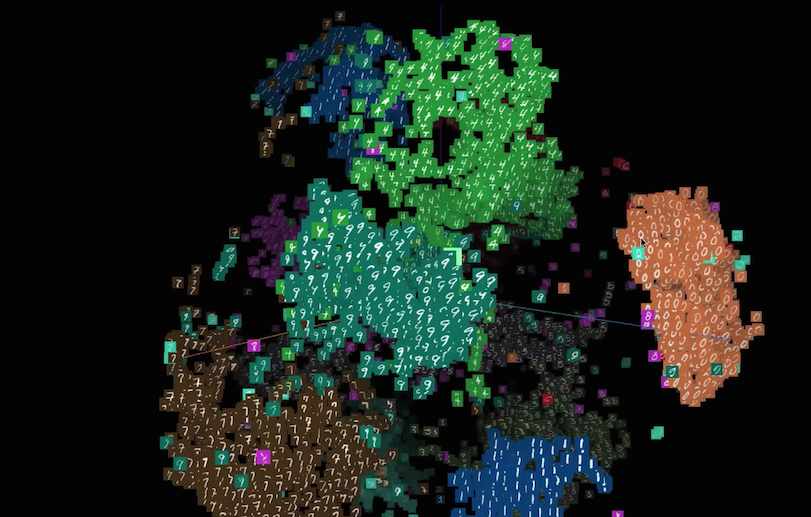

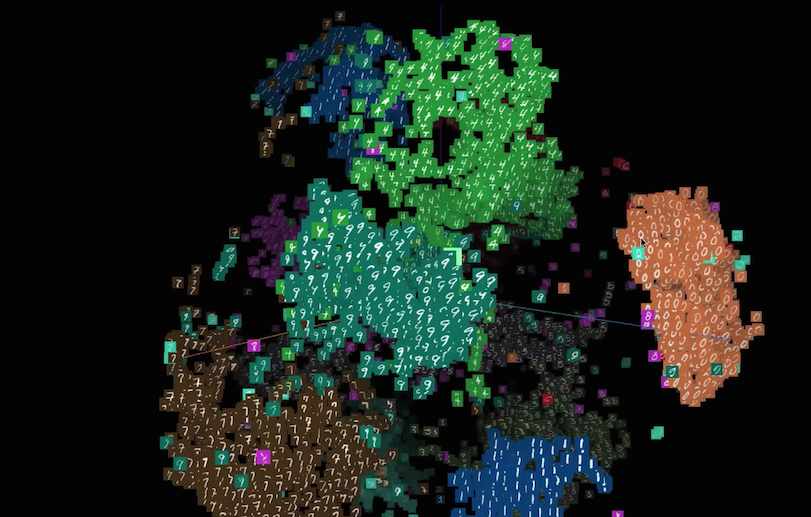

Google provided demos of other business applications of machine learning tailored for specific businesses as well, such as an auto accident loss prediction system for AXA Insurance and an image classification system for the Japanese used-car site Aucnet. And the company unveiled a set of machine learning demonstrations called AI Experiments that were built along with scientists, musicians and others to show how machine learning can be applied by mere mortals to a wide variety of uses. There’s one on bird sounds and another (shown above) on visualizing machine learning.

Despite the enterprise focus, Google also highlighted some new consumer applications of machine learning, such as an app released today, PhotoScan, that enables a smartphone to copy and even clean up paper photos, and new editing features for Google Photos.