APPS

Microsoft Corp. added three new Cognitive Services building blocks to the Azure Portal application management console Wednesday, hoping to spur more apps that can see, hear, and interpret human modes of communication.

The three services are the Computer Vision API, Content Moderator and the Face API. Microsoft has been building out its stock of Cognitive Services APIs (application programming interfaces) for some time and currently offers around two dozen of them. The services are designed for developers who want to add artificial intelligence features such as speech recognition, language understanding and sentiment detection to their apps.

The company has designed its Cognitive Services APIs to work with the Microsoft Bot Framework so developers can add the AI capabilities to their chatbots, though the APIs can also be used independently. To date, Microsoft reckons more than 420,000 developers in 60 countries have begun using its Cognitive Services.

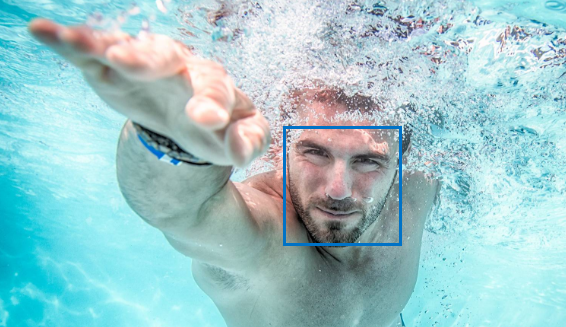

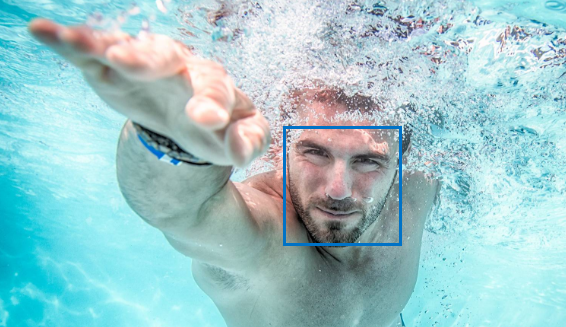

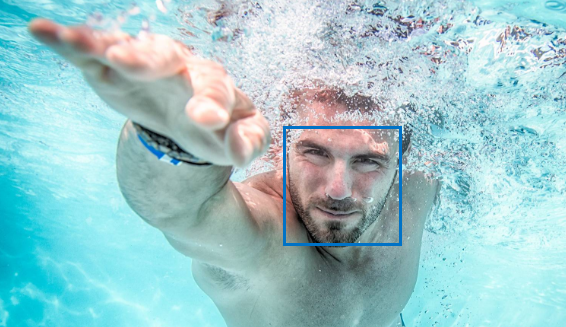

As for the new additions, the Face API does more or less what you would expect. It’s a tool for detecting and comparing human faces, and can organize them into groups based on visual similarities. It can also identify people who have been previously tagged in other images, Microsoft says.

The Computer Vision API (pictured) is another image analysis tool, one that aims to identify what appears in an image. According to Microsoft: “It creates tags that identify objects, beings like celebrities, or actions in an image, and crafts coherent sentences to describe it.” The service can also detect handwriting in images, as well as famous landmarks, Microsoft said.

Content Moderator, meanwhile, can be used to flag images, text or videos before they’re published, so that moderators can review the content and decide whether it’s fit for publication.

The new services are available now and can be tested for free, Microsoft said. The company also provided pricing information, available on its Cognitive Services home page.
