EMERGING TECH

One of the main stumbling blocks in using artificial intelligence to glean insights from large datasets is that most computer systems aren’t designed to handle these kinds of resource-intensive workloads.

IBM Corp. researchers are trying to overcome these deficiencies with a new hybrid concept that could, in theory, make it much easier to perform analytics and train artificial intelligence systems.

In a paper published Tuesday in the journal Nature Electronics, IBM's researchers noted that most of today's computers are based on what's called the "von Neumann architecture," which requires data to be shuttled between separate memory and processing units before it can be processed. IBM argues that this constant data movement is inefficient, costing both time and energy.

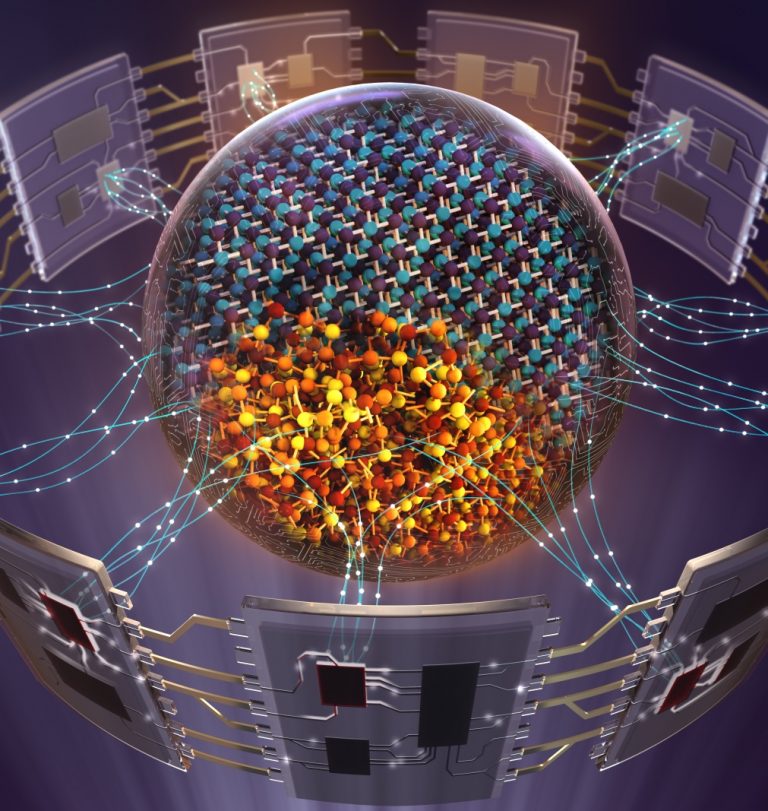

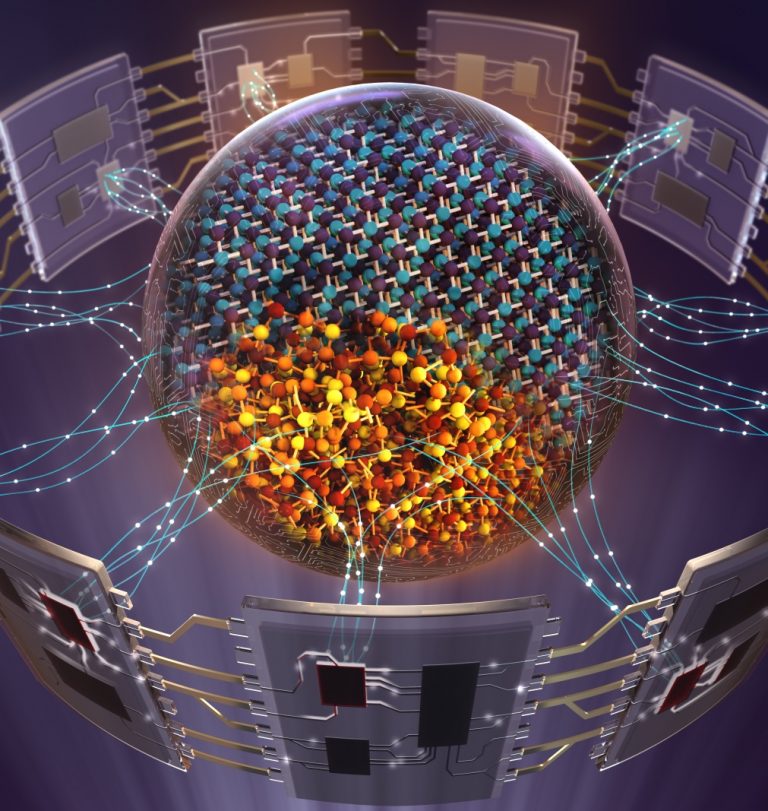

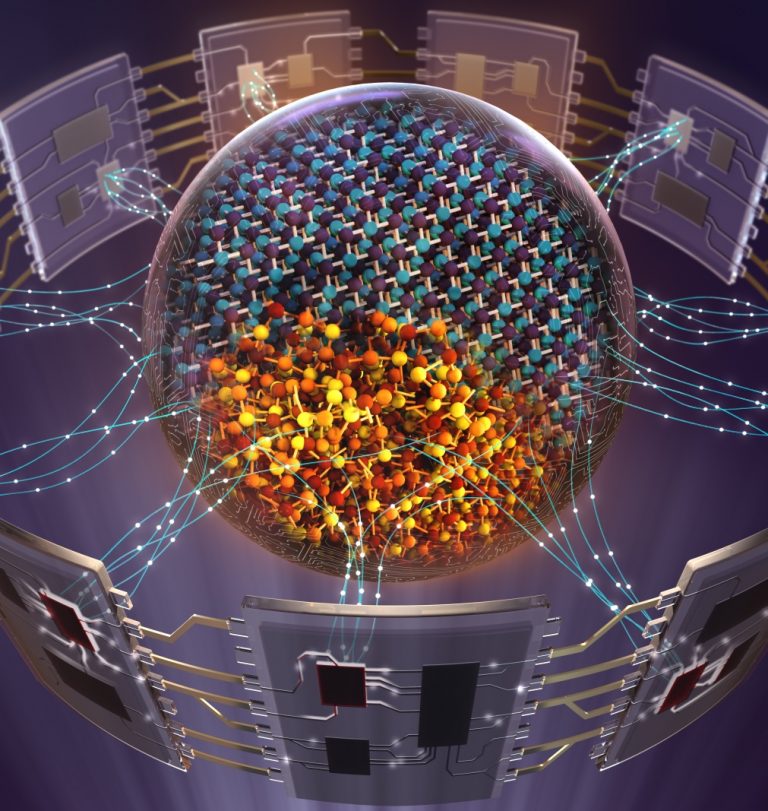

To remedy this problem, IBM has devised a concept called "mixed-precision in-memory computing," which pairs a computational memory unit with a von Neumann machine. The computational memory unit carries out the bulk of the data processing, while the von Neumann machine's resources are used to refine the results and improve their accuracy.
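
This division of labor resembles a classic numerical technique known as mixed-precision iterative refinement. The Python sketch below is a hypothetical illustration, not IBM's implementation: a deliberately noisy copy of a matrix stands in for the imprecise computational memory and does most of the work of solving a linear system, while exact residual calculations, standing in for the von Neumann machine, correct the accumulated error. The 5% noise level, the Jacobi inner solver and all function names are assumptions chosen for illustration.

```python
import random


def matvec(M, v):
    """Exact (high-precision) matrix-vector product."""
    return [sum(m * e for m, e in zip(row, v)) for row in M]


def noisy_copy(A, rel_noise, rng):
    """Hypothetical stand-in for imprecise analog storage: each entry is
    perturbed by relative Gaussian noise (zero entries stay zero)."""
    return [[a * (1.0 + rng.gauss(0.0, rel_noise)) for a in row] for row in A]


def inexact_solve(A_low, r, sweeps=20):
    """Approximately solve A_low z = r with a fixed number of Jacobi sweeps,
    using only the low-precision matrix."""
    n = len(r)
    z = [0.0] * n
    for _ in range(sweeps):
        Az = matvec(A_low, z)
        z = [z[i] + (r[i] - Az[i]) / A_low[i][i] for i in range(n)]
    return z


def mixed_precision_solve(A, b, rel_noise=0.05, tol=1e-10, max_outer=50, seed=0):
    """Iterative refinement: low-precision corrections, high-precision residuals."""
    rng = random.Random(seed)
    A_low = noisy_copy(A, rel_noise, rng)  # "programmed" once, reused every pass
    x = [0.0] * len(b)
    for k in range(max_outer):
        # High-precision step: exact residual of the current estimate.
        r = [bi - axi for bi, axi in zip(b, matvec(A, x))]
        if max(abs(ri) for ri in r) < tol:
            return x, k
        # Low-precision step: rough correction computed from the noisy matrix.
        z = inexact_solve(A_low, r)
        x = [xi + zi for xi, zi in zip(x, z)]
    return x, max_outer


# Usage: a small diagonally dominant system with a known solution.
n = 8
A = [[4.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)]
     for i in range(n)]
x_true = [float(i + 1) for i in range(n)]
b = matvec(A, x_true)
x, passes = mixed_precision_solve(A, b)
print("refinement passes:", passes)
print("max error:", max(abs(xi - ti) for xi, ti in zip(x, x_true)))
```

Each low-precision pass only has to shrink the error somewhat; because the residual is always computed exactly, the final answer can still be accurate to many digits, which is the trade-off the researchers describe.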

“The system therefore benefits from both the high precision of digital computing and the energy/areal efficiency of in-memory computing,” IBM’s researchers said in a blog post. IBM said its approach could be an alternative to the hardware accelerators used by Microsoft Corp. and Google LLC for their high-performance computing and machine learning applications.

Mixed-precision in-memory computing relies on "phase-change memory" (PCM) devices that can be programmed to specific levels of conductance. These PCM devices can handle most of the data processing without transferring the data to a conventional central processing unit or graphics processing unit, which means both faster processing and lower power consumption, IBM said.
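
In-memory computing schemes of this kind generally exploit basic circuit laws: if matrix values are stored as device conductances and inputs are applied as voltages, Ohm's law gives each device's current and Kirchhoff's current law sums those currents along a shared wire, yielding a matrix-vector product without moving the matrix. The sketch below simulates that idea under loudly hypothetical parameters (16 conductance levels, a 25-microsiemens maximum, a 0.2-volt read voltage, 2% programming noise, and a pair of devices per value to represent negative numbers). It is not a model of IBM's chips.

```python
import random

G_MAX = 25e-6        # hypothetical maximum device conductance, in siemens
LEVELS = 16          # hypothetical number of programmable conductance levels
READ_VOLTAGE = 0.2   # hypothetical peak input voltage, in volts


def program_conductance(magnitude, w_max, rng, noise=0.02):
    """Map a weight magnitude to the nearest conductance level, then add
    hypothetical programming noise."""
    level = round(magnitude / w_max * (LEVELS - 1))
    g = level / (LEVELS - 1) * G_MAX
    return max(g * (1.0 + rng.gauss(0.0, noise)), 0.0)


def crossbar_matvec(W, x, seed=0):
    """Simulate y = W x on an idealized crossbar. Each weight uses a pair of
    devices (g_pos - g_neg) so negative values can be represented."""
    rng = random.Random(seed)
    w_max = max(abs(w) for row in W for w in row) or 1.0
    x_max = max(abs(v) for v in x) or 1.0
    volts = [v / x_max * READ_VOLTAGE for v in x]    # inputs encoded as voltages
    currents = []
    for row in W:                                    # one output line per row
        i_line = 0.0
        for w, v in zip(row, volts):
            g_pos = program_conductance(w, w_max, rng) if w > 0 else 0.0
            g_neg = program_conductance(-w, w_max, rng) if w < 0 else 0.0
            i_line += (g_pos - g_neg) * v            # Ohm's law, summed per Kirchhoff
        currents.append(i_line)
    scale = (w_max / G_MAX) * (x_max / READ_VOLTAGE)  # convert currents back to values
    return [i * scale for i in currents]


W = [[0.5, -1.0, 0.25],
     [2.0, 0.0, -0.75]]
x = [1.0, 0.5, -2.0]
exact = [sum(w * v for w, v in zip(row, x)) for row in W]
approx = crossbar_matvec(W, x)
print("exact: ", exact)
print("analog:", [round(a, 3) for a in approx])
```

The simulated result lands close to, but not exactly on, the true product because of the limited conductance levels and programming noise, which is why the mixed-precision design leans on a conventional processor to restore accuracy.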

“The fact that such a computation can be performed partly with a computational memory without sacrificing the overall computational accuracy opens up exciting new avenues towards energy-efficient and fast large-scale data analytics,” IBM’s research team said. “This approach overcomes one of the key challenges in today’s von Neumann architectures in which the massive data transfers have become the most energy-hungry part.”

The research “is another good proof point of the depth of IBM’s research and development abilities,” said Holger Mueller, vice president and principal analyst at Constellation Research Inc. “It’s good to see alternatives in computing infrastructure to dedicated hardware such as GPUs and FPGA architectures that are prominent at the moment to power AI infrastructures.”

For now, mixed-precision in-memory computing is still in its infancy. The prototype PCM chips can store only 1 megabyte of data and would need to scale to gigabytes to be useful for data center applications. However, IBM says it may be able to overcome this limitation by having several PCM chips work together, or by building larger arrays of the devices.
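
One generic way to spread a computation across several smaller arrays is to tile the matrix: each fixed-size tile is held on its own array, each array computes a partial product, and the partial results are summed digitally. The following sketch shows only that bookkeeping, with a hypothetical tile size of 4 and exact integer arithmetic (device noise is ignored). It illustrates the general idea rather than IBM's stated plan.

```python
TILE = 4  # hypothetical number of rows and columns one array can hold


def tile_matrix(W, tile=TILE):
    """Split W into tile x tile blocks keyed by their top-left corner.
    Each block would be programmed onto a separate array."""
    tiles = {}
    for r0 in range(0, len(W), tile):
        for c0 in range(0, len(W[0]), tile):
            tiles[(r0, c0)] = [row[c0:c0 + tile] for row in W[r0:r0 + tile]]
    return tiles


def tiled_matvec(tiles, x, n_rows, tile=TILE):
    """Each array computes a partial product on its slice of x; the partial
    results are accumulated digitally into the output vector."""
    y = [0] * n_rows
    for (r0, c0), block in tiles.items():
        x_slice = x[c0:c0 + tile]
        for i, row in enumerate(block):
            y[r0 + i] += sum(w * v for w, v in zip(row, x_slice))
    return y


# A 10 x 7 matrix needs ceil(10/4) * ceil(7/4) = 6 tiles of size 4 x 4.
W = [[(7 * i + j) % 5 - 2 for j in range(7)] for i in range(10)]
x = [j - 3 for j in range(7)]
tiles = tile_matrix(W)
y_tiled = tiled_matvec(tiles, x, len(W))
y_direct = [sum(w * v for w, v in zip(row, x)) for row in W]
print(len(tiles), y_tiled == y_direct)  # prints: 6 True
```

Because the tiles partition the matrix, the summed partial products match the direct product exactly; on real hardware each tile would add its own analog error, which the digital side would then have to correct.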

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.