AI

Google LLC is augmenting its search engine with natural-language processing features that it said represent the most significant update of the past five years.

The company detailed the changes in a blog post published this morning. Google is adding new NLP models to its search engine that use a technique called Bidirectional Encoder Representations from Transformers, or BERT, to analyze user queries. The method allows artificial intelligence algorithms to interpret text more accurately by analyzing how the words in a sentence relate to one another.

The concept is not entirely new. NLP models that infer the meaning of words from the context around them have been around for a while, and the neural network architecture behind many of them even boasts its own term of art: the transformer. But whereas earlier language models typically read a sentence in only one direction, left to right or right to left, a BERT-based model takes in the words on both sides of each term at the same time, which gives it a much deeper understanding of what those words mean in context. The technique was hailed as a breakthrough when Google researchers first detailed it in an academic paper last year.
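To make the one-direction versus both-directions distinction concrete, here is a toy sketch of self-attention, the mechanism transformers use to relate each word to the others. It is an illustration only, not Google's implementation: the functions `softmax` and `self_attention` and the two-dimensional "word vectors" are hypothetical, and the sketch skips the learned projections, multiple attention heads, stacked layers and masked-word training that a real BERT model uses. With `causal=True`, each word may only look at itself and the words before it, as a left-to-right model does; with `causal=False`, every word looks at the whole sentence, as BERT's encoder does.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors, causal=False):
    """Toy single-head self-attention (hypothetical, for illustration).

    Queries, keys and values are the input vectors themselves (no learned
    projections). causal=True lets position i see only positions 0..i,
    mimicking a left-to-right model; causal=False lets every position see
    the whole sentence, mimicking a bidirectional encoder such as BERT.
    """
    dim = len(vectors[0])
    outputs = []
    for i, query in enumerate(vectors):
        # Positions this word is allowed to attend to.
        visible = range(i + 1) if causal else range(len(vectors))
        # Scaled dot-product score between this word and each visible word.
        scores = [sum(q * k for q, k in zip(query, vectors[j])) / math.sqrt(dim)
                  for j in visible]
        weights = softmax(scores)
        # New representation: attention-weighted sum of the visible vectors.
        out = [0.0] * dim
        for w, j in zip(weights, visible):
            for d in range(dim):
                out[d] += w * vectors[j][d]
        outputs.append(out)
    return outputs


# Two made-up three-word "sentences" that differ only in their last word.
sentence_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sentence_b = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]

for causal in (True, False):
    first_a = self_attention(sentence_a, causal)[0]
    first_b = self_attention(sentence_b, causal)[0]
    # Does the first word's representation ignore what comes later?
    print("left-to-right" if causal else "bidirectional", first_a == first_b)
```

Running it prints `left-to-right True` and then `bidirectional False`: in the one-directional setting, the first word's representation cannot be influenced by a word that appears later in the sentence, while in the bidirectional setting it can. That is the property the article credits with BERT's deeper reading of queries.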

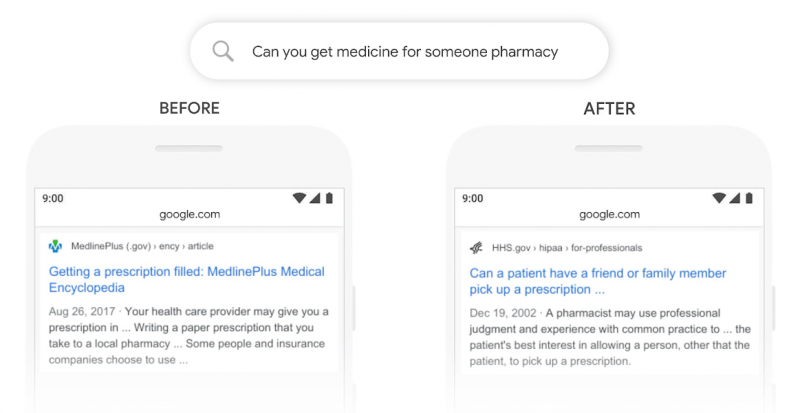

The company said the update will allow it to return more relevant results for about one in every 10 English-language requests made from the U.S. The biggest improvement will be for the queries that were previously most likely to trip up Google: those that contain prepositions such as “for” and “to” or are written in a conversational style.

Google is also using the technology to improve the featured snippets that show up above some search results. And over time, the company plans to expand availability to additional languages and regions, which should open the door to even more search improvements. That’s because on top of their ability to understand complex sentences, BERT-based AI models can transfer knowledge between languages.

“It’s not just advancements in software that can make this possible: we needed new hardware too,” Pandu Nayak, Google’s vice president of search, wrote in the post announcing the changes. “Some of the models we can build with BERT are so complex that they push the limits of what we can do using traditional hardware, so for the first time we’re using the latest Cloud TPUs to serve search results.” TPUs, or Tensor Processing Units, are the internally designed machine learning chips that Google offers through its cloud platform.

Search is just one of the areas where the company is working to apply BERT. Last month, Google researchers revealed VideoBERT, an experimental algorithm that harnesses the technique to predict events likely to happen a few seconds into the future.
