AI

Artificial intelligence research outfit OpenAI Inc. has published a new machine learning framework that can generate its own music after being trained on raw audio.

The new tool is called Jukebox, and the results are pretty impressive. Although its songs don’t quite sound like the real thing, they come remarkably close to the artists and styles they imitate.

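In a blog post, OpenAI said it chose to work on music because raw audio is an incredibly difficult subject for AI: a typical four-minute song at CD quality (44 kHz, 16-bit) contains more than 10 million timesteps, and a model has to keep track of structure across all of them.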
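The scale of the problem is easy to check with a little arithmetic. The sketch below assumes CD-quality audio sampled at 44,100 samples per second on a single (mono) channel; a stereo recording would double the count. The `timesteps` helper is purely illustrative and not part of Jukebox.

```python
# Number of raw-audio timesteps (samples) in a song, assuming CD-quality
# sampling at 44,100 samples per second and a single (mono) channel.
SAMPLE_RATE_HZ = 44_100


def timesteps(minutes: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Return how many audio samples a model would have to generate."""
    return round(minutes * 60 * sample_rate_hz)


four_minute_song = timesteps(4)
print(f"{four_minute_song:,} samples")  # 10,584,000 samples
```

Bit depth (16-bit) affects how precise each sample is, not how many there are, which is why it doesn't appear in the calculation.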

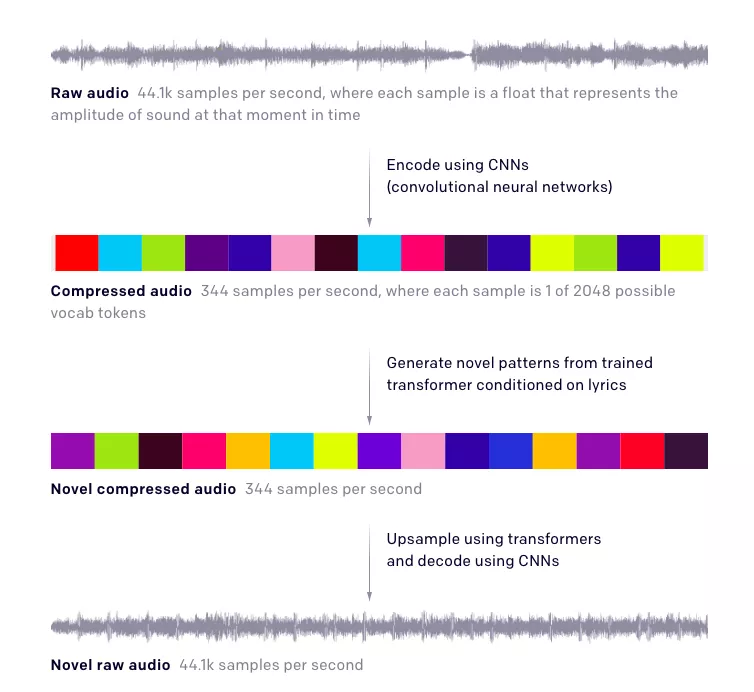

OpenAI’s researchers said they trained the Jukebox model on raw audio, and it produces raw audio in return. That sets it apart from models built on “symbolic music,” such as the sheet music or piano rolls a pianist might use, which can’t capture voices. The researchers first used convolutional neural networks to encode the training audio into a compressed form. They then used what’s called a “transformer” to generate new compressed audio, which is decoded back into newly generated raw audio.
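The encode, generate and decode loop described above can be sketched in miniature. This is a toy under loudly stated assumptions, not OpenAI’s code: the “encoder” simply averages fixed-size frames instead of running a convolutional network, each frame is snapped to the nearest entry of a tiny hypothetical codebook (a scalar version of the vector quantization used in Jukebox’s VQ-VAE), and the “prior” is a bigram sampler standing in for the transformer. All function names are hypothetical.

```python
import random

HOP = 4  # hypothetical compression factor: 4 audio samples -> 1 code


def encode(audio, hop=HOP):
    """Compress raw audio by averaging each frame of `hop` samples (toy encoder)."""
    return [sum(audio[i:i + hop]) / hop for i in range(0, len(audio) - hop + 1, hop)]


def quantize(latents, codebook):
    """Replace each latent value with the index of its nearest codebook entry."""
    return [min(range(len(codebook)), key=lambda k: abs(codebook[k] - z)) for z in latents]


def fit_bigram_prior(codes):
    """Record which code follows which; a crude stand-in for a transformer."""
    table = {}
    for a, b in zip(codes, codes[1:]):
        table.setdefault(a, []).append(b)
    return table


def sample_codes(prior, start, length, rng):
    """Generate new codes one step at a time from the learned transitions."""
    out = [start]
    while len(out) < length:
        nxt = prior.get(out[-1])
        out.append(rng.choice(nxt) if nxt else start)  # fall back if unseen
    return out


def decode(codes, codebook, hop=HOP):
    """Map codes back to values and repeat each `hop` times to restore length."""
    return [codebook[c] for c in codes for _ in range(hop)]


rng = random.Random(0)
training_audio = [rng.uniform(-1.0, 1.0) for _ in range(64)]
codebook = [-0.5, -0.1, 0.1, 0.5]  # hypothetical 4-entry codebook

codes = quantize(encode(training_audio), codebook)
prior = fit_bigram_prior(codes)
new_codes = sample_codes(prior, codes[0], length=len(codes), rng=rng)
new_audio = decode(new_codes, codebook)
print(len(training_audio), len(codes), len(new_audio))  # 64 16 64
```

The point of compressing first is that the generator works on 16 codes instead of 64 samples; at real song lengths, that reduction is what makes transformer-based generation tractable.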

Here’s a chart that tries to illustrate how it all works:

This isn’t OpenAI’s first stab at making music. Last year, it created an AI model called MuseNet that can generate new music featuring up to 10 instruments in 15 different styles, ranging from classical composers such as Mozart to contemporary artists such as Lady Gaga. But MuseNet worked with symbolic music and couldn’t produce vocals, whereas Jukebox can sing the lyrics it is given.

The Jukebox model was trained on a dataset of 1.2 million songs, 600,000 of them in English, paired with lyrics and metadata from LyricWiki so it could learn to recreate singers’ voices and condition its output on a given artist, genre and set of lyrics. Jukebox does have its limitations, though, as the researchers admitted.
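To make “conditioning” concrete: a song’s metadata and lyrics are turned into numeric inputs that the generator sees alongside the audio codes. The sketch below is hypothetical throughout. The tiny artist and genre vocabularies, the `Conditioning` record and character-level lyric encoding are illustrative assumptions, not Jukebox’s actual data format.

```python
from dataclasses import dataclass

# Hypothetical label vocabularies; a real model would have thousands of entries.
ARTISTS = {"<unknown>": 0, "artist_a": 1, "artist_b": 2}
GENRES = {"<unknown>": 0, "jazz": 1, "pop": 2}


@dataclass
class Conditioning:
    """Numeric inputs describing what the generated song should sound like."""
    artist_id: int
    genre_id: int
    lyric_tokens: list[int]


def make_conditioning(artist: str, genre: str, lyrics: str) -> Conditioning:
    # Unknown labels map to id 0; lyrics become one token per ASCII character.
    lyric_tokens = [ord(ch) for ch in lyrics.lower() if ch.isascii()]
    return Conditioning(ARTISTS.get(artist, 0), GENRES.get(genre, 0), lyric_tokens)


print(make_conditioning("artist_b", "polka", "La la"))
# Conditioning(artist_id=2, genre_id=0, lyric_tokens=[108, 97, 32, 108, 97])
```

Swapping the artist or genre while keeping the lyrics fixed is, in spirit, how a conditioned model can be asked to sing the same words in a different style.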

“While Jukebox represents a step forward in musical quality, coherence, length of audio sample, and ability to condition on artist, genre, and lyrics, there is a significant gap between these generations and human-created music,” the OpenAI team wrote. “For example, while the generated songs show local musical coherence, follow traditional chord patterns, and can even feature impressive solos, we do not hear familiar larger musical structures such as choruses that repeat.”

The experiment could have some legal implications for OpenAI, as several Twitter users pointed out.

Did Kanye West, Katy Perry, Lupe Fiasco and the estates of Aretha Franklin, Frank Sinatra and Elvis Presley give OpenAI permission to use their audio recordings as training material for a voice-synthesis/musical-composition/lyric-writing algorithm? My guess is no.

— Cherie Hu (@cheriehu42) April 30, 2020

That said, Jukebox is an impressive achievement that once again pushes the boundaries of what AI can do. Indeed, the project is further proof that AI is no longer taking baby steps but very big leaps, Constellation Research Inc. analyst Holger Mueller told SiliconANGLE.

“Researchers are not shying away from the big frontiers that are left in AI research, around creativity,” Mueller said. “An AI model that can compose music with lyrics is a big step forward into a realm that was thought to be off-limits. Creativity remains a big frontier for AI, but we have come a lot closer with OpenAI’s Jukebox.”
