INFRA

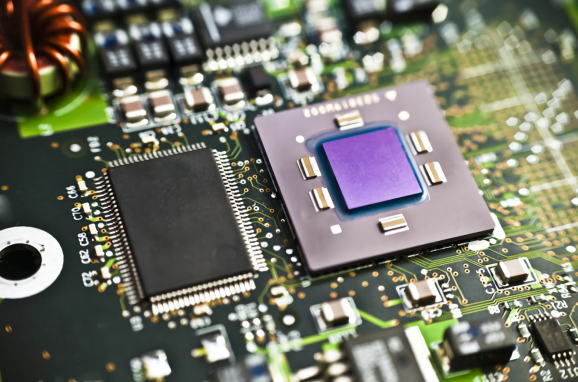

Arm Ltd., the influential chip design firm that Nvidia Corp. has proposed to acquire for $40 billion, on Tuesday detailed two chip designs for the server market that promise a big jump in performance.

U.K.-based Arm’s semiconductor blueprints form the basis of the processors inside most of the world’s smartphones and countless other devices. Over recent years, the firm has built up a respectable presence in the data center as well.

The Neoverse V1 and N2 chip designs that debuted this week can be used by semiconductor makers as the basis for server-grade central processing units. They’re the follow-up to the Neoverse N1, Arm’s current-generation entry into this market, which Amazon Web Services Inc. has adopted in its cloud platform. Counting AWS, Arm claims that the Neoverse N1 is used by four of the seven largest operators of so-called hyperscale data centers.

According to Arm, the V1 and N2 offer up to 50% and 40% better performance than the current-generation N1, respectively. The V1 is designed for building CPUs with high per-thread performance, while the N2 is for CPUs with high core counts. Which of the two chip types is preferable depends mainly on the applications a company runs: Software products that are billed per CPU core, for example, are best run on CPUs with modest core counts and high per-thread performance, because fewer cores are needed to do the same work.
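The per-core billing point comes down to simple arithmetic, sketched below in Python. Every number here is a hypothetical placeholder chosen for illustration (the throughput target, the per-core speeds and the license fee are assumptions, not figures from Arm or any software vendor); the only thing borrowed from the article is the idea of a core that is 50% faster.

```python
import math


def cores_needed(target_throughput: float, per_core_throughput: float) -> int:
    """Smallest whole number of cores that meets the target throughput."""
    return math.ceil(target_throughput / per_core_throughput)


def license_cost(target_throughput: float,
                 per_core_throughput: float,
                 fee_per_core: float) -> float:
    """Total license bill when software is charged per CPU core."""
    return cores_needed(target_throughput, per_core_throughput) * fee_per_core


# Hypothetical inputs, for illustration only.
TARGET = 1000.0    # work units per second the application must sustain
FEE = 2000.0       # license fee per core, arbitrary currency
BASELINE = 10.0    # work units per second from one baseline core
FASTER = 15.0      # a core with 50% higher per-thread performance

cost_baseline = license_cost(TARGET, BASELINE, FEE)  # 100 cores licensed
cost_faster = license_cost(TARGET, FASTER, FEE)      # 67 cores licensed
```

Under these assumed numbers, the faster cores cut the licensed core count from 100 to 67, and the license bill falls with it. A workload that is not billed per core would not see this effect, which is why the choice depends on the application.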

Arm says both the V1 and the N2 are compatible with the latest five-nanometer manufacturing process being adopted in the chip industry. They also support a number of other technologies that should give a boost to certain niche use cases.

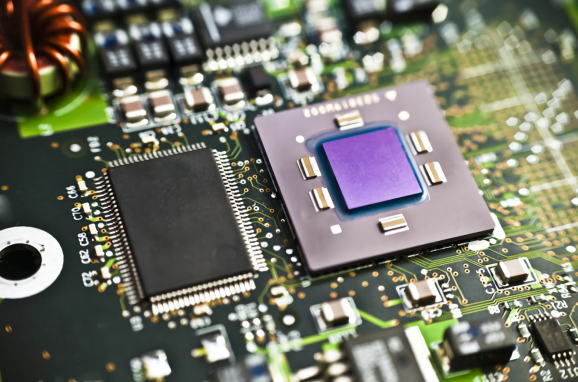

One addition is the Scalable Vector Extension, or SVE, a technology that will benefit scientific simulations and other workloads deployed on supercomputers. Such applications often store the data they process in vectors, arrays of values that a CPU can operate on with a single instruction. SVE allows chip makers to customize the maximum size of the vectors their CPUs can handle based on their customers’ needs, flexibility that Arm says will make it possible to create more efficient and fine-tuned processors.
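For readers curious how software can cope with vector sizes that differ from chip to chip, here is a minimal sketch of a loop that does not hard-code the vector width. It is plain Python that simulates the idea and is not real SVE code; the function name `vla_add` and the `lanes` parameter are made up for this illustration, with `lanes` standing in for however many values the hardware can process at once.

```python
from typing import List


def vla_add(a: List[float], b: List[float], lanes: int) -> List[float]:
    """Add two arrays chunk by chunk, the way a vector unit would.

    `lanes` is a stand-in for the hardware vector width. The loop never
    assumes a particular value, so the same code works for any width.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if lanes < 1:
        raise ValueError("lanes must be at least 1")

    out = [0.0] * len(a)
    i = 0
    while i < len(a):
        # Only the lanes that still map to real elements are active,
        # which handles a final chunk shorter than the vector width.
        active = min(lanes, len(a) - i)
        for lane in range(active):
            out[i + lane] = a[i + lane] + b[i + lane]
        i += lanes
    return out


data_a = [1.0, 2.0, 3.0, 4.0, 5.0]
data_b = [10.0, 20.0, 30.0, 40.0, 50.0]

# The result is identical whether the "hardware" handles 2, 8 or 32 values at a time.
narrow = vla_add(data_a, data_b, lanes=2)
wide = vla_add(data_a, data_b, lanes=8)
widest = vla_add(data_a, data_b, lanes=32)
```

The point of the sketch is that a wider vector unit finishes the same work in fewer passes, while the program itself stays unchanged. That is the property that lets chip makers pick different vector sizes for different customers.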

Also new is support for the Compute Express Link interconnect, which is commonly used to attach machine learning accelerators and other specialized chips to servers. The addition matters because the use of such accelerators is rising in tandem with growing enterprise adoption of artificial intelligence, a trend that Arm should now be better positioned to address.

The N2, the new chip design for building high-core-count CPUs, targets more than just servers. Arm says that the N2 can serve as the foundation for the processors of other data center devices as well, including routers and switches.

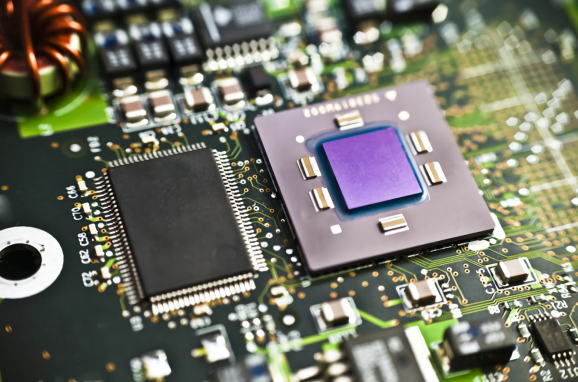

The N2 is currently in testing with early customers and is expected to become fully available next year, while the V1 has already been released.
