Nvidia Corp. is targeting more demanding artificial intelligence workloads with the launch of its first-ever Arm-based central processing unit for the data center.

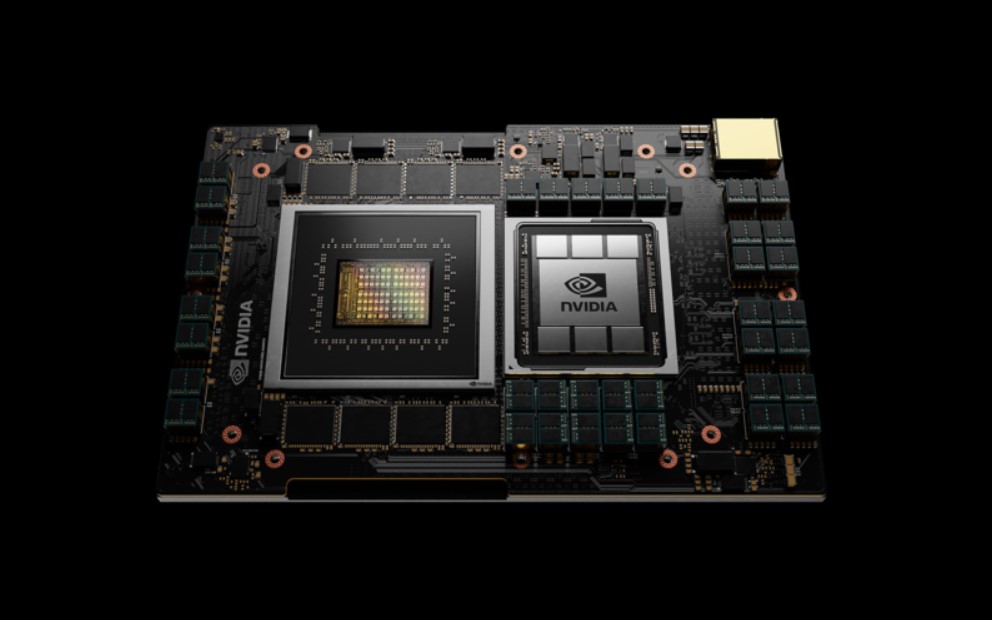

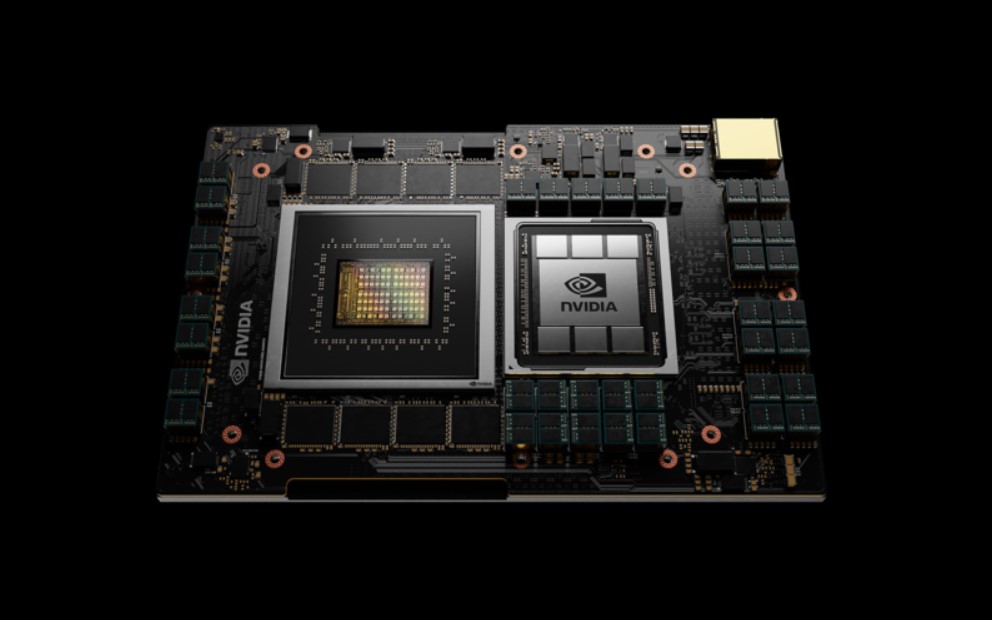

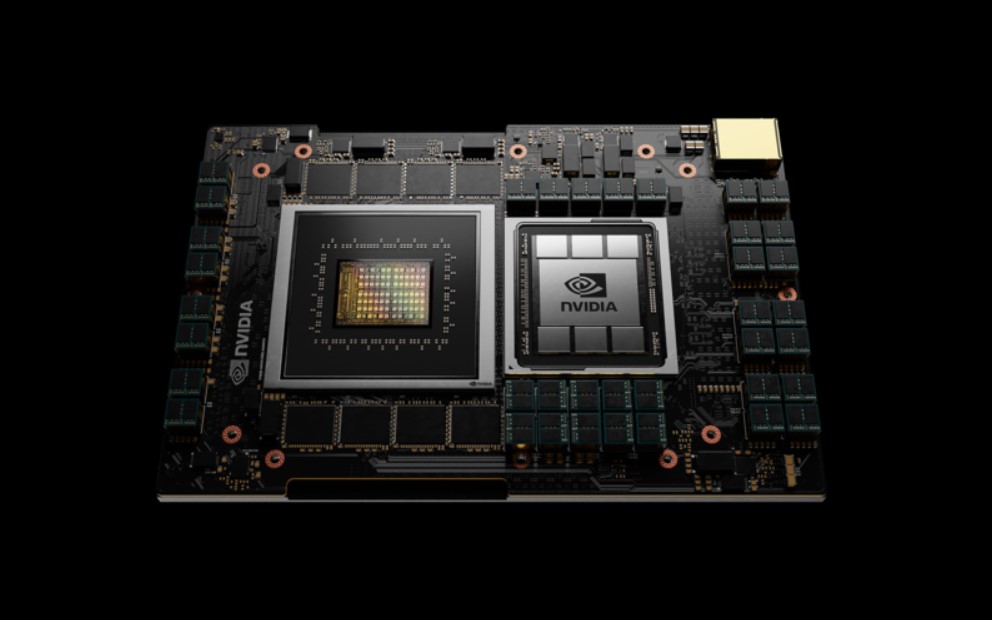

Called “Grace,” the new CPU (pictured) was launched today at Nvidia’s GPU Technology Conference virtual event and is said to be the result of a combined 10,000 years of engineering work. It’s designed to meet the enormous compute requirements of the most powerful AI applications, including natural language processing, recommendation systems and AI supercomputer-powered drug discovery.

Nvidia said Grace is designed to work best in systems that pair it with the company’s better-known graphics processing units, such as the Nvidia A100 GPU. When tightly coupled with Nvidia GPUs, executives said, a Grace-based system will deliver 10 times the performance of the company’s current DGX-based systems, which run on x86 CPUs.

The Nvidia Grace CPU is named after the American computer programming pioneer Grace Hopper and is designed to power a new breed of supercomputers, the company said. One of the first examples will be the new Alps supercomputer at the Swiss National Supercomputing Centre, or CSCS.

Alps is currently being built by Hewlett Packard Enterprise Co. and will be based on that company’s Cray EX supercomputer product line and powered by the Nvidia HGX supercomputing platform, which includes the A100 GPUs, the Nvidia High-Performance Computing software development kit and the new Grace CPUs. Once it’s up and running, Alps will be able to train GPT-3, the world’s biggest natural language processing model, in just two days. That’s seven times faster than Nvidia’s 2.8 AI-exaflops Selene supercomputer, which MLPerf currently recognizes as the world’s fastest supercomputer for AI.

In a press briefing, Paresh Kharya, senior director of Accelerated Computing at Nvidia, said Grace is the first CPU designed to address the exploding size of today’s most powerful AI models. He noted that GPT-3, for example, has more than 100 billion parameters, and said existing CPU architectures simply cannot cope with models of that size anymore.

“Giant models are pushing the limits of existing architecture,” Kharya said. The problem is that such models can’t fit into GPU memory and must instead be held in system memory, which is slower.
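A rough calculation illustrates the problem. The sketch below is illustrative only and does not come from Nvidia: it assumes GPT-3’s published size of 175 billion parameters, 2 bytes per parameter (16-bit weights) and the 40 GB and 80 GB memory configurations of the A100 GPU. It counts the weights alone and ignores gradients, optimizer state and activations, all of which add considerably more memory during training.

```python
import math

# Rough memory footprint of a large language model's weights.
# Illustrative assumptions, not Nvidia figures: GPT-3's published size of
# 175 billion parameters, 2 bytes per parameter (16-bit weights), and
# weights only (gradients, optimizer state and activations are ignored).

GB = 10**9  # decimal gigabytes, as used in GPU memory specifications


def weights_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to store the model weights, in GB."""
    return num_params * bytes_per_param / GB


def gpus_needed(model_gb: float, gpu_memory_gb: float) -> int:
    """Minimum number of GPUs whose combined memory can hold the weights."""
    return math.ceil(model_gb / gpu_memory_gb)


gpt3_gb = weights_gb(175e9)  # 350 GB
for gpu_gb in (40, 80):  # the two A100 memory configurations
    print(f"{gpt3_gb:.0f} GB of weights needs at least "
          f"{gpus_needed(gpt3_gb, gpu_gb)} GPUs with {gpu_gb} GB each")
```

Under these assumptions the weights alone come to 350 GB, more than four times the memory of even an 80 GB A100, so they must be split across several GPUs or kept in slower system memory.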

Kharya explained that the Grace CPUs were built to advance computing architectures to handle AI and HPC better. “We have innovated from the ground up to create a CPU to deliver on that promise,” he said. “It’s one that’s tightly coupled to GPU to provide a balanced architecture that eliminates bottlenecks.”

With the Alps supercomputer, CSCS researchers aim to pursue a wide range of scientific research that can benefit from natural language understanding, such as analyzing thousands of scientific papers and generating new molecules to aid drug discovery.

“Nvidia’s novel Grace CPU allows us to converge AI technologies and classic supercomputing for solving some of the hardest problems in computational science,” said CSCS Director Thomas Schulthess.

Nvidia said the Grace CPUs in its new systems will be linked to its GPUs through its NVLink interconnect technology, which provides a blazing-fast 900-gigabyte-per-second connection. That will enable 30 times higher aggregate bandwidth than today’s leading servers, the company added.

The Grace CPUs are further supported by an LPDDR5x memory subsystem that delivers double the bandwidth and 10 times better energy efficiency compared with DDR4 memory, Nvidia said. Of course, Grace will also support the Nvidia HPC SDK and the company’s full suite of CUDA and CUDA-X libraries for GPU-accelerated applications.

Analyst Holger Mueller of Constellation Research Inc. told SiliconANGLE that Nvidia is opening a new chapter in its long corporate history with the launch of Grace. He said the company is announcing the first complete AI platform based on Arm technology to address one of the biggest challenges of AI.

“It enables Nvidia to move data fast and efficiently to an array of GPUs and data processing units,” Mueller said. “It’s a natural extension of Nvidia’s AI portfolio and will be one that gives other, cloud-based AI and ML workloads a run for their money, on-premises. That’s great for companies that want more choice on where to run those workloads.”

Analyst Patrick Moorhead of Moor Insights & Strategy said Grace is the biggest announcement by far at GTC 2021.

“Grace is a tightly integrated CPU for over 1 trillion parameter AI models,” Moorhead said. “It’s hard to address those with classic x86 CPUs and GPUs connected over PCIe. Grace is focused on IO and memory bandwidth, shares main memory with the GPU and shouldn’t be confused with general purpose datacenter CPUs from AMD or Intel.”

Nvidia Chief Executive Jensen Huang said in a keynote that “unthinkable” amounts of data are being used in AI workloads today. The launch of Grace means his company now has a third foundational technology for AI alongside its GPUs and data processing units, giving it the ability to re-architect the data center completely for those workloads. “Nvidia is now a three-chip company,” he said.

Nvidia said the Grace CPUs are slated for general availability sometime in 2023.

In the meantime, companies will still be able to get their hands on a very capable AI supercomputing platform in the form of the next-generation, cloud-native Nvidia DGX SuperPOD AI hardware.

The new Nvidia DGX SuperPOD (pictured, below) features the company’s BlueField-2 DPUs for the first time. Those are data processing units that offload, accelerate and isolate users’ data, connecting users securely to the AI infrastructure. The BlueField-2 DPUs are combined with a new Nvidia Base Command service that lets multiple users and teams access, share and operate the DGX SuperPOD infrastructure securely, Nvidia said. Base Command can be used to coordinate AI training and operations for teams of data scientists and developers located around the world.

The basic building block of the new SuperPODs is Nvidia’s DGX A100 appliance. It pairs eight of the chipmaker’s top-of-the-line A100 data center GPUs with two central processing units and a terabyte of memory.

“AI is the most powerful technology the world has ever known, and Nvidia DGX systems are the most effective tool for harnessing it,” said Charlie Boyle, vice president and general manager of DGX systems at Nvidia. “The new DGX SuperPOD, which combines multiple DGX systems, provides a turnkey AI data center that can be securely shared across entire teams of researchers and developers.”

Nvidia said the cloud-native DGX SuperPODs and Base Command will be available in the second quarter through its global partners.

Nvidia also announced a new DPU, the BlueField-3, which it says delivers 10 times the accelerated computing power of the previous generation. It also provides real-time network visibility, detection and response for cybersecurity threats. The company added that the chip acts as the monitoring, or telemetry, agent for Nvidia Morpheus, an AI-enabled, cloud-native cybersecurity platform that was also announced today.

Nvidia isn’t letting up on the software side either. As part of its efforts to encourage greater adoption of its new AI infrastructure, the company today announced availability of the Nvidia Jarvis framework. It provides a raft of pre-trained deep learning models and software tools for developers. They can use them to create interactive conversational AI services that can be adapted for use in a variety of industries.

Available on the Nvidia GPU cloud service, the deep learning models in the Jarvis framework are trained on billions of hours of phone calls, web meetings and streaming broadcast video content. They can be used for what the company says is “highly accurate” automatic speech recognition and “superhuman” language understanding. They can also be applied to real-time translation in multiple languages and to new text-to-speech capabilities that can power conversational chatbots, Nvidia said.

The Jarvis models are exceptionally fast as well. Nvidia said that, thanks to GPU acceleration, Jarvis can run the end-to-end speech pipeline in under 100 milliseconds, listening, understanding and creating a response faster than you can blink. Developers will be able to use the Nvidia Tao framework to train, adapt and optimize the Jarvis models with their own data for almost any task in any industry and on any system they use, the company said.

Possible applications of the Jarvis deep learning models include new digital nurse services that can help monitor patients, easing the burden on overloaded human medical staff, or online assistants for e-commerce that can understand what consumers are looking for and provide useful recommendations. Real-time translation can also enable better cross-border work collaboration.

“Conversational AI is in many ways the ultimate AI,” said Huang. “Deep learning breakthroughs in speech recognition, language understanding and speech synthesis have enabled engaging cloud services. Nvidia Jarvis brings this state-of-the-art conversational AI out of the cloud for customers to host AI services anywhere.”

Nvidia said the Jarvis deep learning models will be released in the second quarter as part of the ongoing open beta program.

With reporting from Robert Hof
