Artificial intelligence researchers from Facebook Inc., Princeton University and the Massachusetts Institute of Technology have teamed up to publish a new manuscript that they say offers a theoretical framework describing for the first time how deep neural networks actually work.

In a blog post, Facebook AI research scientist Sho Yaida noted that deep neural networks, or DNNs, are one of the key ingredients of modern AI research. But many people, including most AI researchers, also consider them too complicated to understand from first principles, he said.

That’s a problem. Although much of the progress in AI has come through experimentation and trial and error, researchers remain unaware of many of the key features that make DNNs so useful. A better understanding of those features would likely lead to dramatic advances and much more capable AI models, Yaida said.

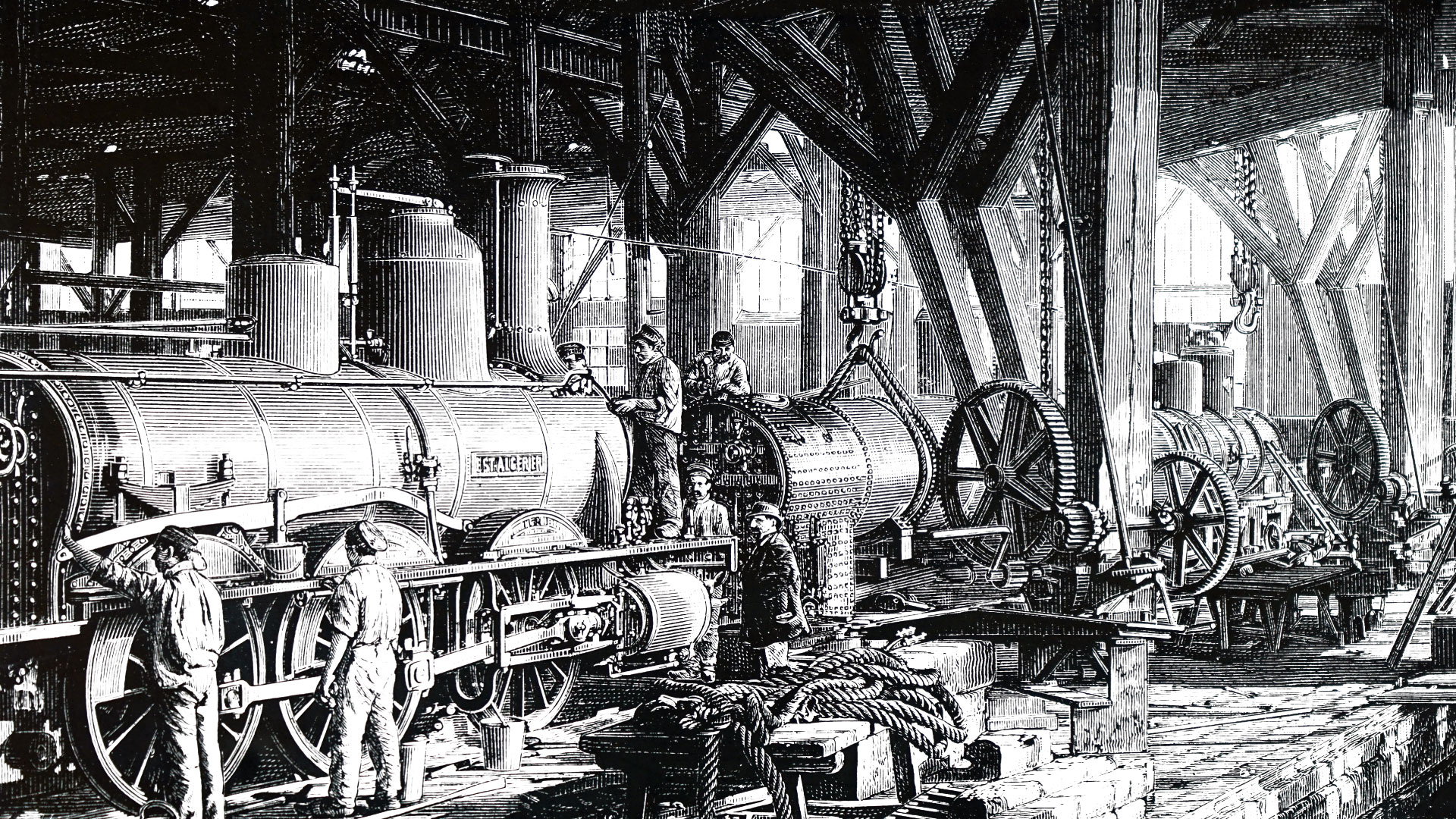

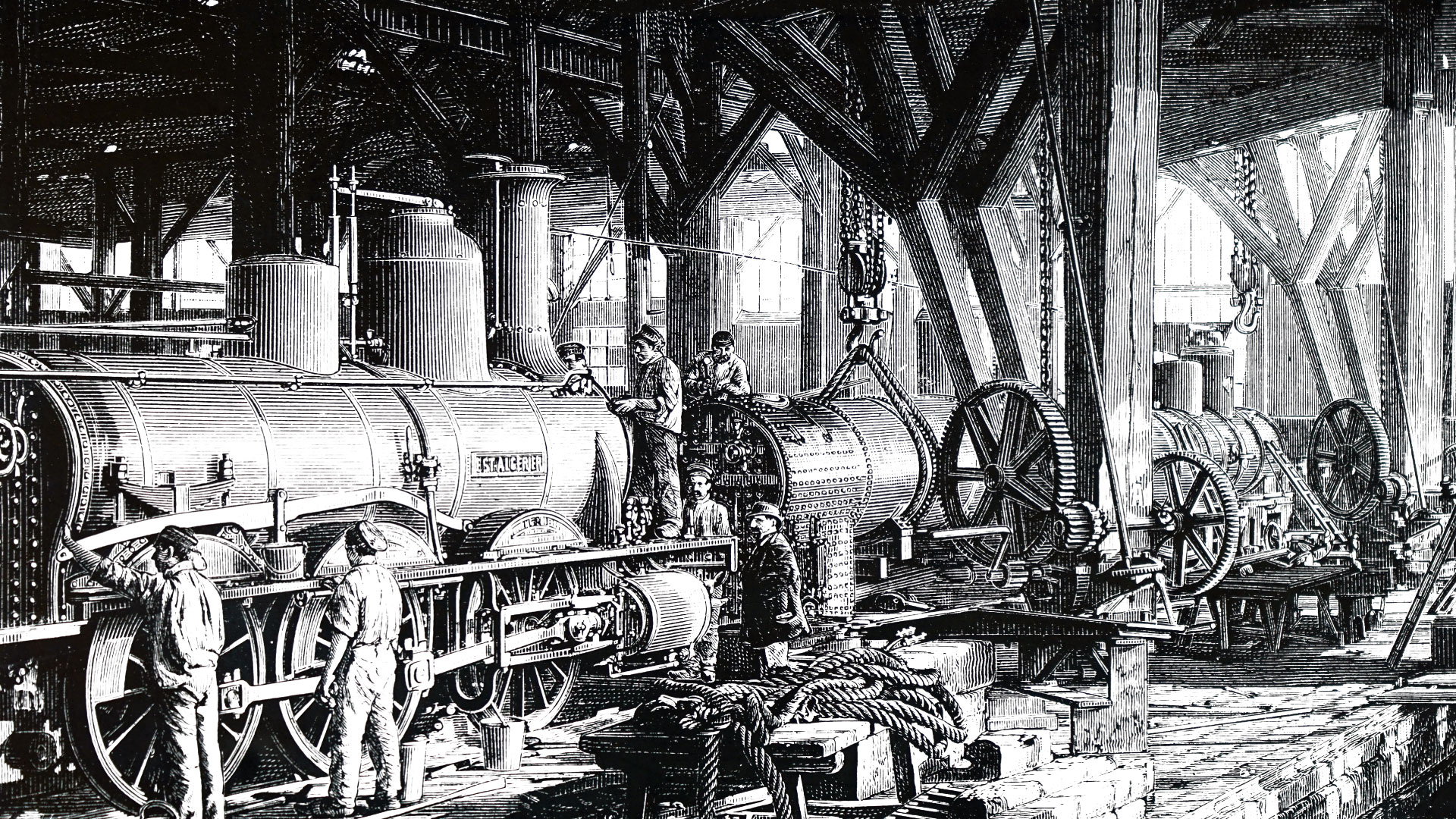

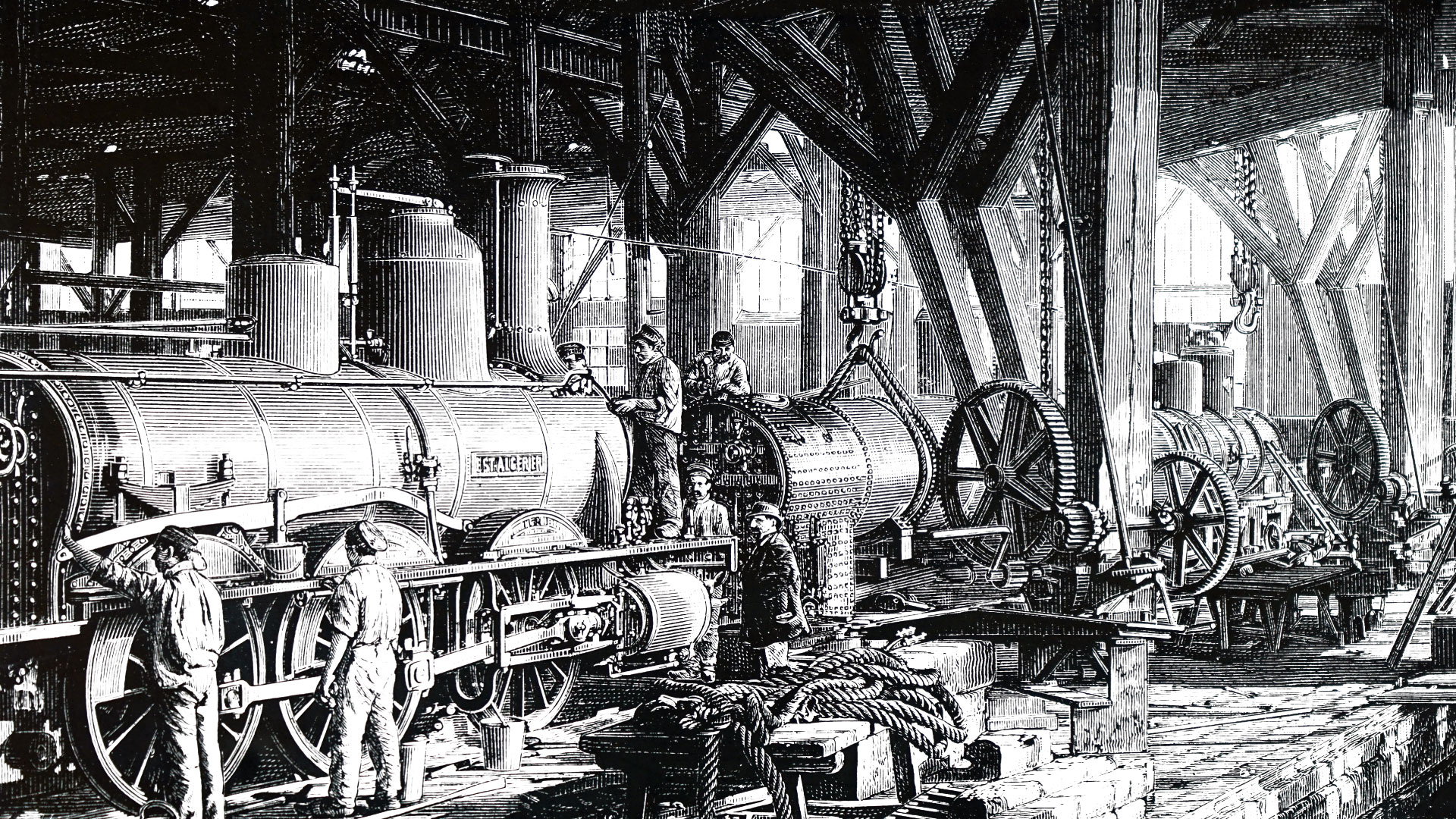

Yaida compared AI today with the steam engine at the beginning of the Industrial Revolution. Although the steam engine changed manufacturing forever, he said, it wasn’t until the laws of thermodynamics and the principles of statistical mechanics were developed over the following century that scientists could fully explain, at the theoretical level, how and why it worked.

That lack of understanding didn’t prevent the steam engine from being improved, he said, but many of the improvements came from trial and error. Once scientists discovered the principles of the heat engine, the pace of improvement accelerated sharply.

“When scientists finally grasped statistical mechanics, the ramifications went far beyond building better and more efficient engines,” Yaida wrote. “Statistical mechanics led to an understanding that matter is made of atoms, foreshadowed the development of quantum mechanics, and (if you take a holistic view) even led to the transistor that powers the computer you’re using today.”

Yaida said AI is currently at a similar juncture, with DNNs treated as a black box that’s too complicated to understand from first principles. As a result, AI models are fine-tuned through trial and error, similar to how people improved the steam engine.

Trial and error isn’t necessarily a bad thing, of course, Yaida said, and it can be done intelligently, informed by years of experience. But it is only a stand-in for what the field lacks: a unified theoretical language that describes DNNs and how they actually function.

The manuscript, called “The Principles of Deep Learning Theory: An Effective Theory Approach to Understanding Neural Networks,” is an attempt to fill that knowledge gap. A collaboration among Yaida, Dan Roberts of MIT and Salesforce, and Boris Hanin of Princeton, it is, according to its authors, the first real attempt at a theoretical framework for understanding DNNs from first principles.

“For AI practitioners, this understanding could significantly reduce the amount of trial and error needed to train these DNNs,” Yaida said. “It could, for example, reveal the optimal hyperparameters for any given model without going through the time- and compute-intensive experimentation required today.”

The theory itself is not for the faint of heart: it requires a fairly sophisticated understanding of physics. For most readers, what matters will be its ramifications, which Yaida said will enable AI theorists to push for a deeper and more complete understanding of neural networks.

“There is much left to compute, but this work potentially brings the field closer to understanding what particular properties of these models enable them to perform intelligently,” he said.

The Principles of Deep Learning Theory is available to download now on arXiv and will be published by Cambridge University Press in early 2022.
