
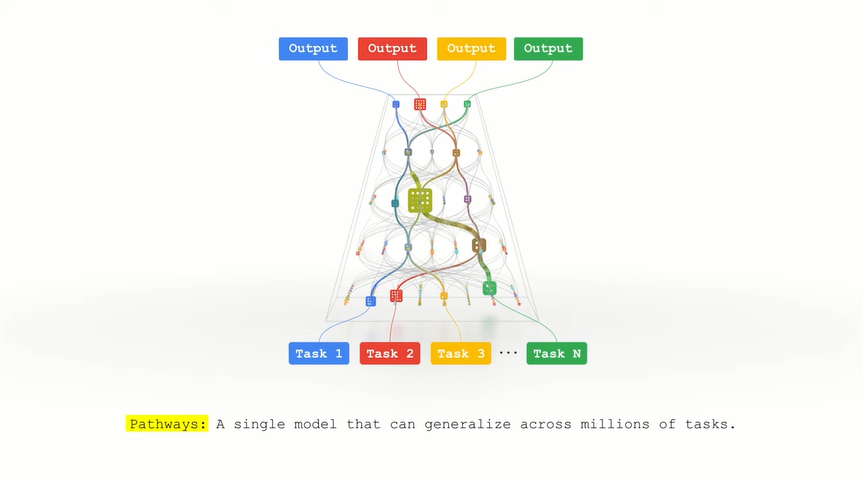

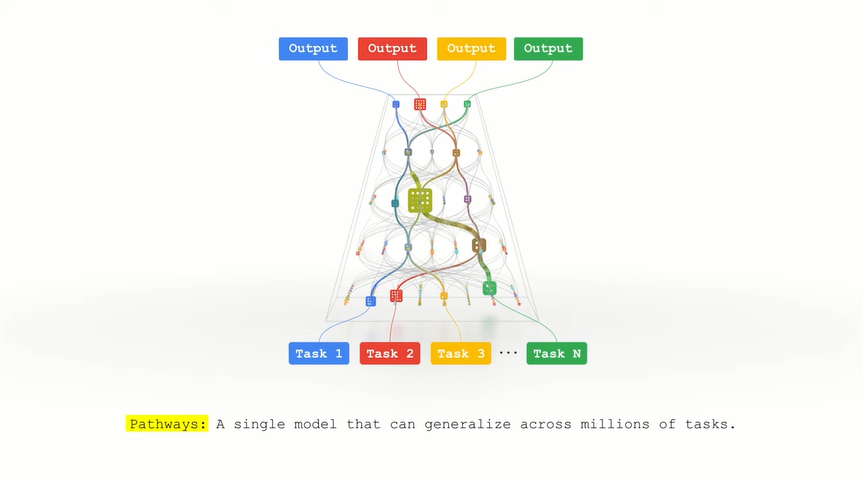

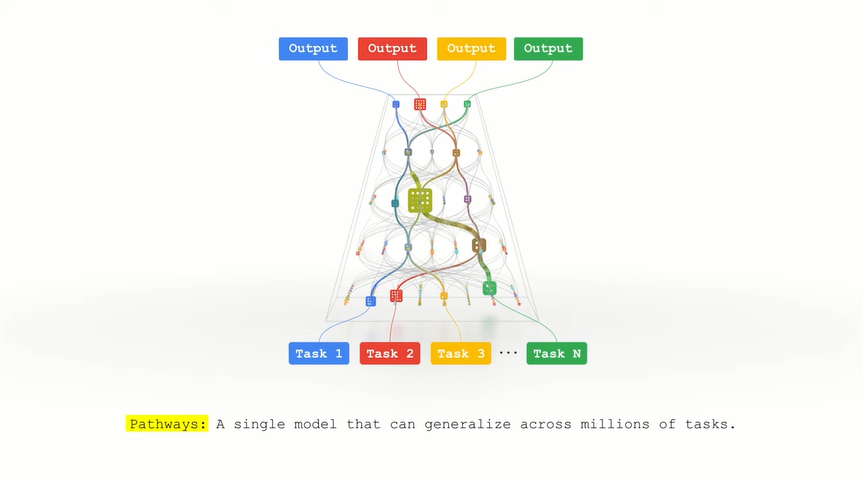

Google LLC has detailed Pathways, an internal artificial intelligence project aimed at facilitating the development of neural networks that can learn thousands and potentially even millions of tasks.

Jeff Dean, the Senior Vice President of Google Research, provided an overview of the company’s work on Pathways in a Thursday blog post. The executive described the technology as a “next-generation AI architecture” being developed by several different teams.

Today’s AI models, for the most part, can be trained to perform only a single task. For example, if a neural network that was originally trained to correct spelling errors is trained again to detect grammar mistakes, it’s likely to “forget” how to correct misspellings. Google is designing Pathways to be more versatile. The technology, Dean wrote, will make it possible to create neural networks that can learn as many as millions of different tasks.
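The forgetting problem, usually called catastrophic forgetting, can be reproduced in miniature. The sketch below is a deliberately extreme toy, assuming a one-parameter linear model and two hypothetical regression tasks (`task_a`, `task_b`) that need conflicting values of that single shared weight. Training on the second task overwrites the weight the first task relied on, so the first task's error climbs back up.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (input, target) pairs

def mse(w: float, data: Dataset) -> float:
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w: float, data: Dataset, lr: float = 0.05, steps: int = 200) -> float:
    """Plain gradient descent on mean squared error for y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

# Hypothetical tasks that need different values of the same weight.
task_a = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]   # best fit: w = 2
task_b = [(x, -1.0 * x) for x in (1.0, 2.0, 3.0)]  # best fit: w = -1

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)   # near zero: task A has been learned
w = train(w, task_b)             # sequential training on task B only
loss_a_after = mse(w, task_a)    # large again: task A has been "forgotten"
```

Real networks have far more parameters than tasks, so forgetting is rarely this total, but the mechanism (sequential updates overwriting shared weights) is the same.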

Google’s plans for developing multipurpose AI software involve equipping neural networks with multiple “skills,” the executive wrote. The search giant believes that combining these skills in different ways would make it possible to tackle a variety of tasks. “That way what a model learns by training on one task – say, learning how aerial images can predict the elevation of a landscape – could help it learn another task – say, predicting how flood waters will flow through that terrain,” Dean explained.
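A common way to let one task's learning help another is a shared representation that feeds several task-specific output layers. The sketch below is a minimal illustration in plain Python, assuming a single shared hidden layer and two hypothetical task heads named `elevation` and `flood_flow` with made-up weights. It is not how Pathways is implemented; the blog post does not describe the system at code level.

```python
from typing import Dict, List

Vector = List[float]

def matvec(weights: List[Vector], x: Vector) -> Vector:
    """Multiply a weight matrix (a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]

# Hypothetical shared "skill": one hidden layer reused by every task.
SHARED_WEIGHTS: List[Vector] = [
    [0.5, -0.2, 0.1],
    [0.3, 0.8, -0.5],
]

def shared_encoder(x: Vector) -> Vector:
    """Feature extractor whose parameters are shared across all tasks."""
    return relu(matvec(SHARED_WEIGHTS, x))

# Hypothetical task-specific heads: a small linear read-out per task.
TASK_HEADS: Dict[str, Vector] = {
    "elevation": [1.0, 0.5],
    "flood_flow": [-0.3, 2.0],
}

def predict(task: str, x: Vector) -> float:
    features = shared_encoder(x)  # identical computation for every task
    head = TASK_HEADS[task]       # only this part differs between tasks
    return sum(h * f for h, f in zip(head, features))
```

Because both heads read the same features, training either task would update `SHARED_WEIGHTS`, which is how improvements on one task can carry over to the other.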

Also in the interest of making AI software more versatile, Google is developing Pathways to draw on multiple types of data. Today, a typical neural network can process either text, audio or video but not all three. Google’s goal with Pathways is to create neural networks that can draw on multiple types of information to make more accurate decisions. “The result is a model that’s more insightful and less prone to mistakes and biases,” Dean wrote.

In the process, Google also hopes to make AI models more efficient.

A neural network is made up of artificial neurons, small mathematical functions that each perform a small part of the task the network is responsible for carrying out. For a given calculation, an AI often needs only a limited subset of its artificial neurons. Yet current AI models typically activate all of them anyway, including the ones that contribute nothing to the result, which unnecessarily increases infrastructure requirements.

With Pathways, Google hopes to implement an approach that would make it possible to activate only the parts of a neural network that are strictly needed for a given task. “A big benefit to this kind of architecture is that it not only has a larger capacity to learn a variety of tasks, but it’s also faster and much more energy efficient, because we don’t activate the entire network for every task,” Dean explained.
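The savings from activating only part of a network can be estimated with simple arithmetic. The sketch below assumes a hypothetical layer split into `num_experts` equal two-layer feed-forward blocks, uses multiply-accumulate (MAC) counts as a rough proxy for compute and energy, and picks illustrative sizes. It does not reproduce Google's own measurements.

```python
def ffn_macs(d_model: int, d_ff: int) -> int:
    """MACs for one token through a feed-forward block
    (d_model -> d_ff -> d_model), ignoring biases and activations."""
    return 2 * d_model * d_ff

def dense_macs(d_model: int, d_ff: int, num_experts: int) -> int:
    """Dense baseline: every block runs on every token."""
    return num_experts * ffn_macs(d_model, d_ff)

def sparse_macs(d_model: int, d_ff: int, num_experts: int, k: int) -> int:
    """Sparse activation: a router scores all blocks (d_model x num_experts
    MACs), then only the k selected blocks actually run."""
    router = d_model * num_experts
    return k * ffn_macs(d_model, d_ff) + router

# Illustrative sizes only.
dense = dense_macs(512, 2048, 8)       # 16,777,216 MACs per token
sparse = sparse_macs(512, 2048, 8, 1)  # 2,101,248 MACs per token
```

With one of eight blocks active, per-token compute drops to roughly an eighth, while the layer keeps all eight blocks' worth of parameters, which is the "larger capacity" Dean refers to.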

Google has already applied the concept in a number of AI projects. Switch Transformer, a natural-language processing model detailed by the company earlier this year, uses a technique called sparse activation to limit the number of artificial neurons used for calculations. According to Dean, Switch Transformer and another AI model called GShard that uses similar technology consume one-tenth of the energy they would require without the technique.
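Switch Transformer's form of sparse activation is top-1 routing: a small router scores every "expert" sub-network for an input, only the highest-scoring expert runs, and its output is scaled by the router's probability for that expert. The sketch below is a simplified single-token version in plain Python, assuming experts are arbitrary functions and that the router is one linear layer followed by a softmax; it omits batching, expert capacity limits, and the load-balancing loss used in practice.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(logits: Vector) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def switch_route(x: Vector, router_weights: List[Vector],
                 experts: List[Expert]) -> Tuple[int, Vector]:
    """Top-1 routing: score every expert, evaluate only the best one,
    and scale its output by the router probability."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    probs = softmax(logits)
    chosen = max(range(len(experts)), key=lambda i: probs[i])
    out = experts[chosen](x)  # the only expert actually evaluated
    return chosen, [probs[chosen] * y for y in out]
```

Scaling by the router probability keeps the routing decision differentiable, so the router can be trained along with the experts even though only one expert runs per input.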

Google has been working for years to build more versatile neural networks. In 2016, the company’s researchers upgraded the AI powering Google Translate with the ability to translate text between a pair of languages even if it hadn’t been specifically trained on that pair. DeepMind, the AI research arm of Google parent Alphabet Inc., has developed an AI system that can learn several different games, including chess and Go.

Others in the machine learning ecosystem are also working to build multipurpose neural networks. Last year, OpenAI detailed an AI system called GPT-3 that is capable of performing tasks ranging from writing code to generating essays on business topics. Scientists at the University of Chicago, in turn, have developed an approach that makes it possible to split a neural network into multiple segments, each specialized in a different task.

For Google, developing a multipurpose AI that can perform thousands or millions of tasks could unlock major business benefits.

Autonomous vehicles like the kind Google sister company Waymo is building have to perform numerous computational tasks to turn data from their sensors into driving decisions. Often, each computational task is carried out by a separate neural network. This means that an autonomous vehicle must run a large number of AI models on its onboard computer. Replacing multiple AI models with a single, multipurpose neural network like the one Google hopes to build through its Pathways initiative could increase processing efficiency and help Waymo enhance its autonomous driving stack.

In the public cloud, delivering more versatile neural networks to customers could help Google make its managed AI services more competitive. And Pathways may in the future also allow the company to enhance its consumer services, notably Google Search. The company’s search engine already makes extensive use of AI to process user queries.

“Pathways will enable a single AI system to generalize across thousands or millions of tasks, to understand different types of data, and to do so with remarkable efficiency,” Dean wrote. The goal, the executive detailed, is “advancing us from the era of single-purpose models that merely recognize patterns to one in which more general-purpose intelligent systems reflect a deeper understanding of our world and can adapt to new needs.”


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.