AI

Facebook parent Meta Platforms Inc. is pushing the boundaries of artificial intelligence robots into the realm of touch sensitivity with two new sensors it has created.

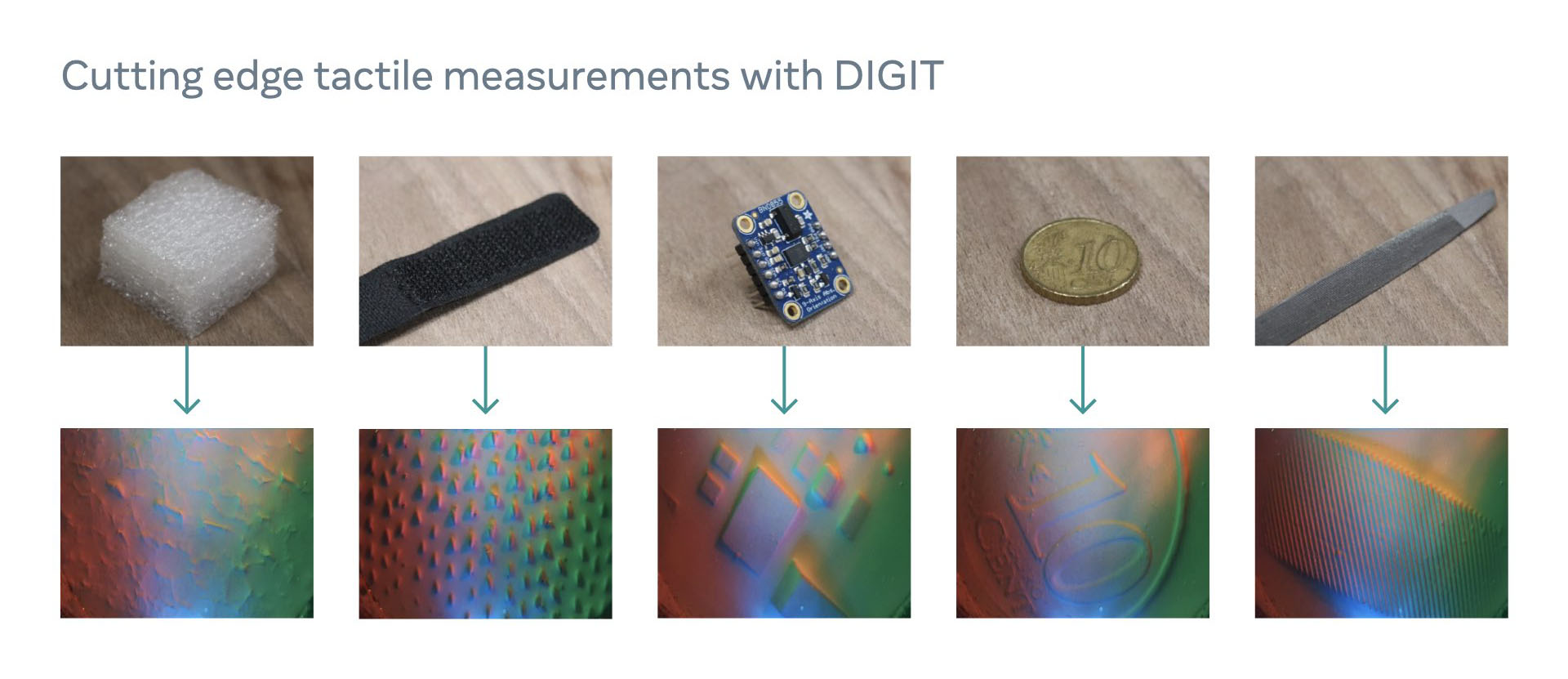

They include a high-resolution robot fingertip sensor called DIGIT (pictured) and a thin and replaceable robotic “skin,” known as ReSkin, that can help AI robots to discern information such as an object’s texture, weight, temperature and state.

The idea, according to Meta AI researchers Roberto Calandra and Mike Lambeta, is to use what’s known as “tactile sensing” to train robots to draw critical information from the things they touch, then integrate this know-how with other information to perform tasks of greater complexity.

Tactile sensing aims to replicate human-level touch in robots so that AI can “learn from and use touch on its own as well as in conjunction with other sensing modalities such as vision and audio.” Another expected benefit is that robots with tactile sensing capabilities will be gentler and safer when handling objects.

To enable AI robots to use and learn from tactile data, they first need to be equipped with sensors that can collect information from the things they touch.

“Ideally, touch-sensing hardware should model many of the attributes of the human finger,” Calandra and Lambeta said. “They should be able to withstand wear and tear from repeated contact with surfaces… [they] also need to be of high resolution with the ability to measure rich information about the object being touched, such as surface features, contact forces, and other object properties discernible through contact.”

Meta open-sourced the blueprints of such a sensor, DIGIT, in 2020, describing it at the time as easy to build, reliable, low-cost, compact and high-resolution. The design has already seen wide adoption by universities and research labs. Now Meta is hoping to take DIGIT to the next level by partnering with a startup called GelSight Inc., which will manufacture the sensor commercially in order to make it more widely available to researchers and speed up innovation.

As for ReSkin, it’s an entirely new sensor that acts like an artificial skin for robot hands, Meta explained.

“A generalized tactile sensing skin like ReSkin will provide a source of rich contact data that could be helpful in advancing AI in a wide range of touch-based tasks, including object classification, proprioception, and robotic grasping,” Meta AI researchers Abhinav Gupta and Tess Hellebrekers wrote in a blog post. “AI models trained with learned tactile sensing skills will be capable of many types of tasks, including those that require higher sensitivity, such as working in health care settings, or greater dexterity, such as maneuvering small, soft, or sensitive objects.”

The advantage of ReSkin is that it’s extremely inexpensive to produce, with Meta claiming that each unit costs less than $6 in batches of 100, and even less at larger quantities. ReSkin is 2 to 3 millimeters thick and has a lifespan of 50,000 interactions, with a high temporal resolution of up to 400Hz and millimeter-scale spatial resolution with 90% accuracy.

Such specifications make it ideal for a range of form factors, such as robot hands, tactile gloves, arm sleeves or even dog shoes. As a result, it should enable researchers to collect types of tactile data that were previously impossible, or at least very difficult and expensive, to gather. ReSkin also provides high-frequency, three-axis tactile signals that can enable fast manipulation tasks such as throwing, slipping, catching and clapping.
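To put the 400Hz figure in perspective, here is a rough back-of-the-envelope sketch of how many readings a sensor would capture during a brief contact event. The 50-millisecond contact window and the 30Hz comparison rate are illustrative assumptions, not figures from Meta.

```python
# Back-of-the-envelope timing for a high-rate tactile sensor.
# Only the 400 Hz figure comes from the article; the contact window
# and the 30 Hz comparison rate are hypothetical values.

RESKIN_RATE_HZ = 400      # upper bound quoted for ReSkin
COMPARISON_RATE_HZ = 30   # assumed rate of a slower sensor, for contrast
CONTACT_WINDOW_MS = 50    # assumed duration of a brief contact, e.g. a catch


def sample_interval_ms(rate_hz: int) -> float:
    """Time between two consecutive readings, in milliseconds."""
    return 1000.0 / rate_hz


def samples_in_window(rate_hz: int, window_ms: int) -> int:
    """Number of complete sampling intervals that fit inside the window."""
    return (rate_hz * window_ms) // 1000


if __name__ == "__main__":
    for rate in (RESKIN_RATE_HZ, COMPARISON_RATE_HZ):
        print(
            f"{rate} Hz: one reading every {sample_interval_ms(rate):.1f} ms, "
            f"{samples_in_window(rate, CONTACT_WINDOW_MS)} readings "
            f"in {CONTACT_WINDOW_MS} ms"
        )
```

Under these assumptions, the faster sensor sees the contact unfold over many readings, while the slower one would barely register it, which is why high sampling rates matter for quick motions.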

To aid the tactile sensing research community further, Meta has created and open-sourced a simulator called TACTO that will enable experimentation in the absence of hardware. Simulators are key to advancing AI research in most fields as they enable researchers to test and validate hypotheses without performing time-consuming experiments in the real world.

The idea with TACTO is to help researchers to simulate vision-based tactile sensors with different form factors mounted onto different types of robots.

“TACTO can render realistic high-resolution touch readings at hundreds of frames per second, and can be easily configured to simulate different vision-based tactile sensors, including DIGIT,” Calandra and Lambeta said.

Having all of that tactile sensing data is one thing, but researchers also need a way to process it and gain insights. Meta is helping here too with a library of machine learning models called PyTouch, which can translate raw sensor readings into high-level properties, for example detecting slip or recognizing the material the sensor has come into contact with.

Researchers can use PyTouch to train and deploy various models across different sensors that provide basic functionality such as detecting touch, detecting slip and estimating object pose.
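To give a feel for what “detecting touch” from raw readings can mean, here is a minimal sketch of a naive baseline. It does not use PyTouch or its API, and it is not Meta's method: it simply assumes a vision-based sensor that outputs grayscale frames, and flags contact when the current frame differs enough from a no-contact reference frame. The function names and the threshold value are hypothetical.

```python
from typing import Sequence

# A grayscale frame as rows of pixel intensities (assumed range 0-255).
Frame = Sequence[Sequence[float]]


def mean_abs_diff(reference: Frame, current: Frame) -> float:
    """Average per-pixel absolute difference between two same-sized frames."""
    total = 0.0
    count = 0
    for ref_row, cur_row in zip(reference, current):
        for ref_px, cur_px in zip(ref_row, cur_row):
            total += abs(cur_px - ref_px)
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    return total / count


def is_touching(reference: Frame, current: Frame, threshold: float = 5.0) -> bool:
    """Flag contact when the frame deviates from the no-contact reference.

    The threshold is an arbitrary illustrative value; a real system would
    calibrate it per sensor or replace this rule with a learned model.
    """
    return mean_abs_diff(reference, current) > threshold


# Synthetic 4x4 frames: a flat reference and one with a pressed 2x2 patch.
reference = [[10.0] * 4 for _ in range(4)]
pressed = [row[:] for row in reference]
for r in (1, 2):
    for c in (1, 2):
        pressed[r][c] = 90.0

if __name__ == "__main__":
    print(is_touching(reference, reference))  # no deformation
    print(is_touching(reference, pressed))    # patch deformed by contact
```

A learned model of the kind PyTouch is described as providing would be expected to handle harder cases, such as slip or material recognition, that a fixed threshold like this cannot.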

“Ultimately, PyTouch will be integrated with both real-world sensors and our tactile-sensing simulator to enable fast validation of models as well as Sim2Real capabilities, which is the ability to transfer concepts trained in simulation to real-world applications,” Calandra and Lambeta said.

Eventually, it’s hoped, PyTouch will help researchers use advanced machine learning models dedicated to touch sensing “as-a-service.” The idea is that researchers will be able to connect a DIGIT sensor, download a pre-trained model and then use this as the basic building block for their robotic application.

Meta AI said that for all the progress it has made so far in tactile sensing, there is much work still to be done. Calandra and Lambeta explained that in order to develop robots with true human-like touch sensitivity, more hardware is needed, for example sensors that can detect the temperature of objects. Researchers also need to develop a better understanding of which touch features are most important for specific tasks and a deeper knowledge of the right machine learning computational structures for processing touch information.

“Improvements in touch sensing can help us advance AI and will enable researchers to build robots with enhanced functionalities and capabilities,” the researchers wrote. “It can also unlock possibilities in AR/VR, as well as lead to innovations in industrial, medical, and agricultural robotics. We’re working toward a future where every single robot may come equipped with touch-sensing capabilities.”
