INFRA

Nvidia Corp. announced the next generation of its powerful graphics processing units at its 2022 GPU Technology Conference today.

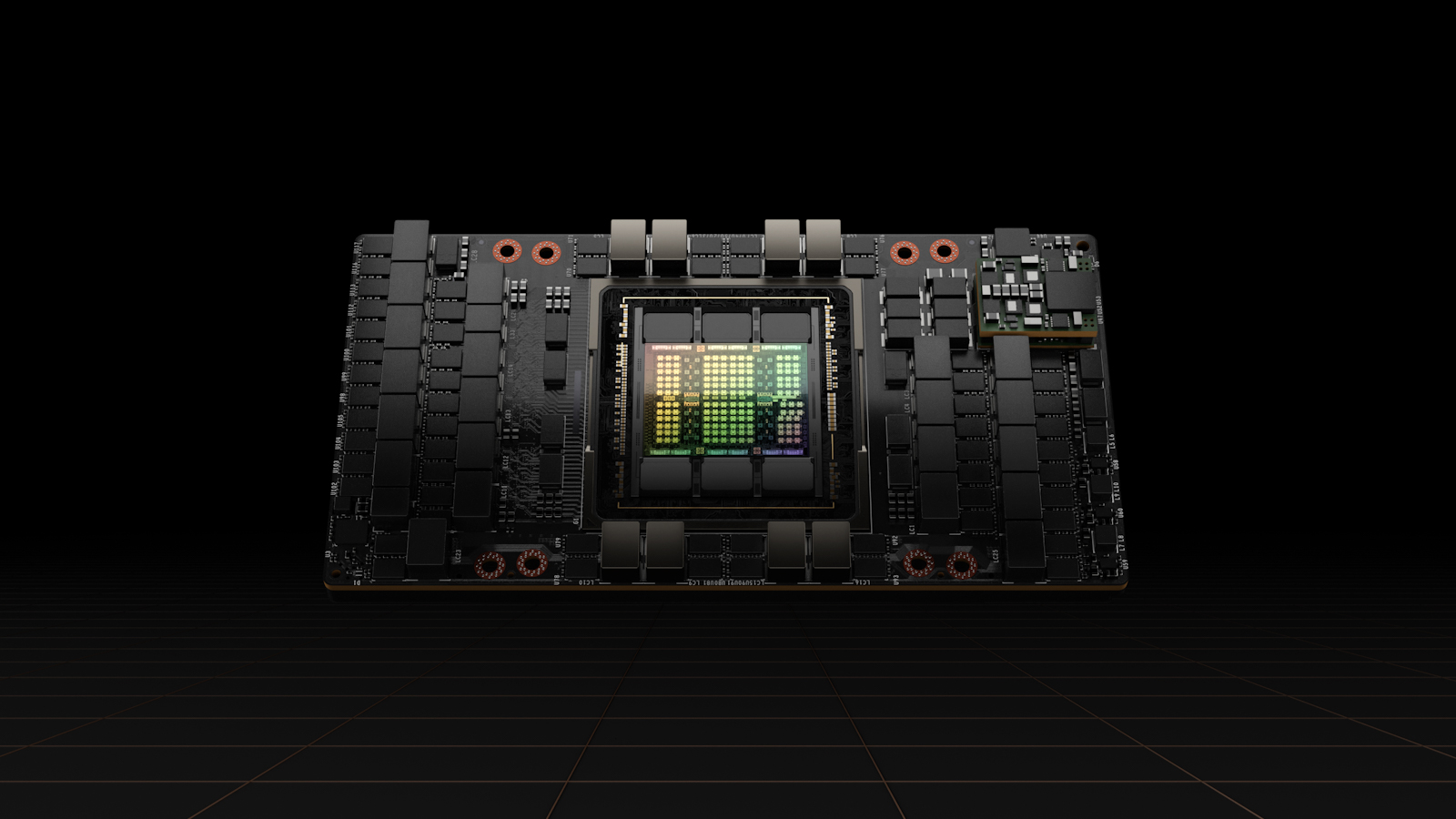

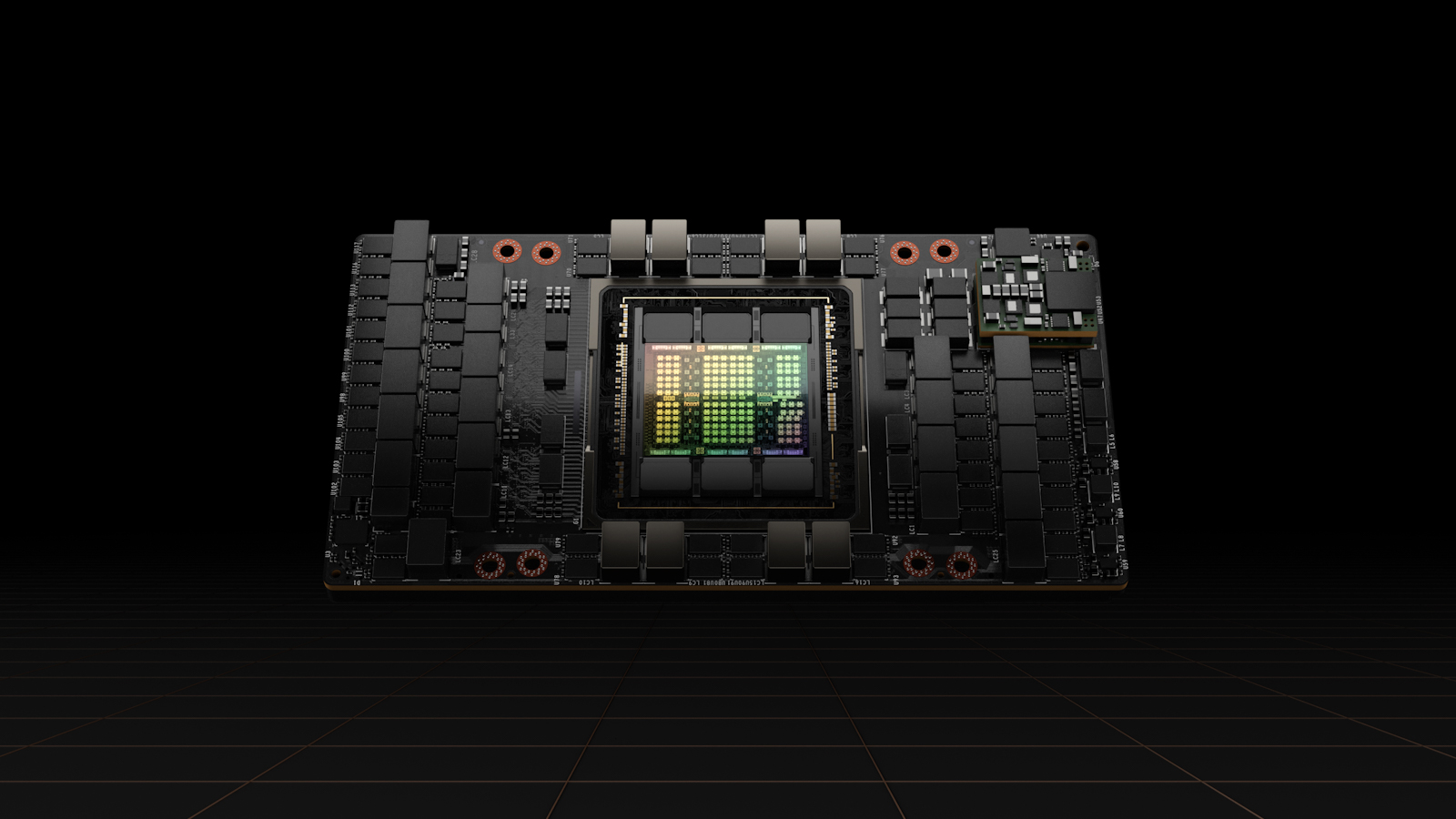

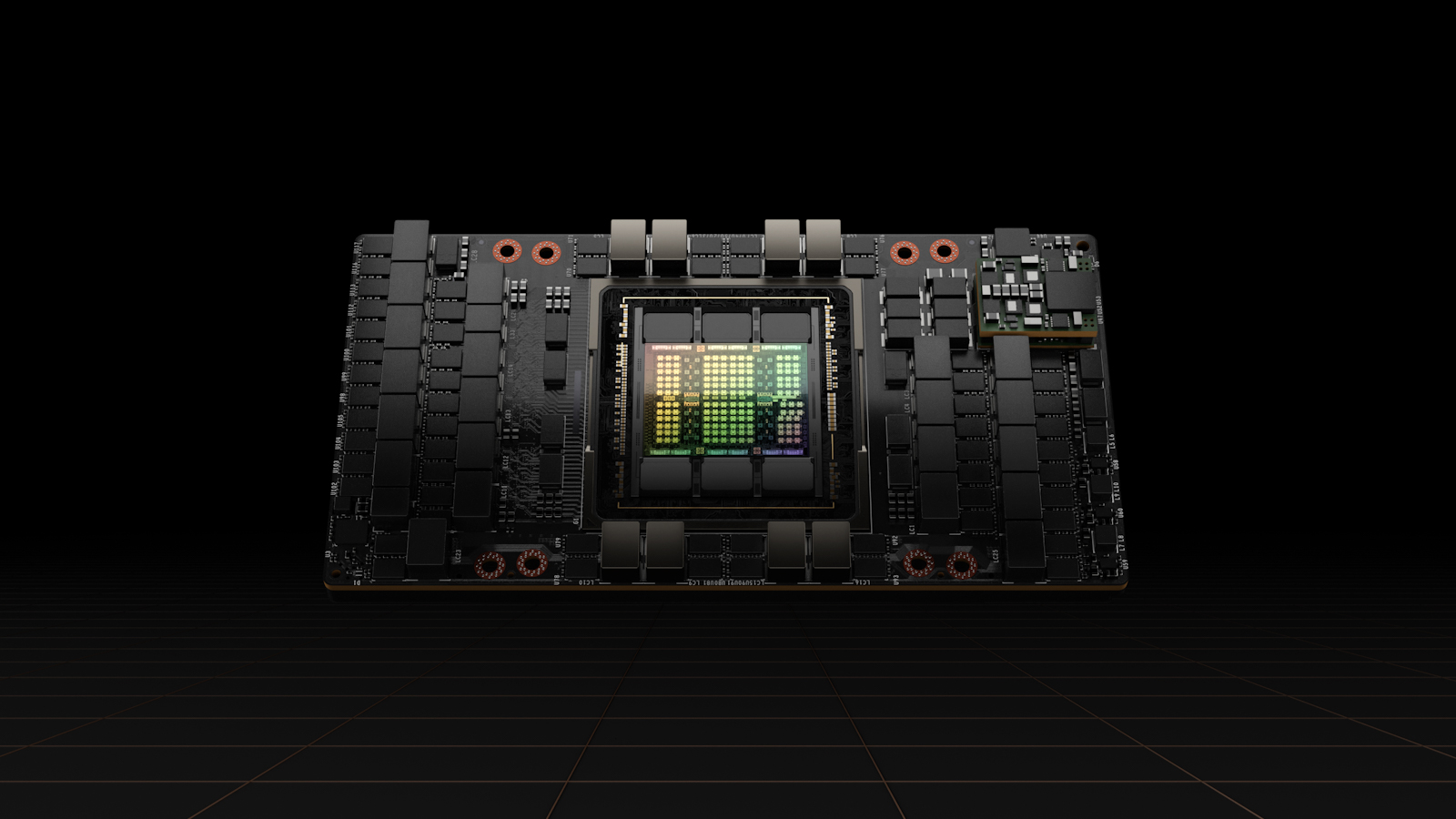

The new GPUs are based on the Nvidia Hopper architecture (pictured) and the company is promising an order of magnitude performance leap over its previous-generation Ampere chips, which debuted two years ago.

The first GPU built on the Hopper architecture is the Nvidia H100, a powerful accelerator that’s packed with 80 billion transistors and new features including a Transformer Engine and a more scalable Nvidia NVLink interconnect for advancing large artificial intelligence language models, deep recommender systems and more besides.

Nvidia said the H100 GPUs are so powerful that just 20 of them could sustain the equivalent of the world’s entire internet traffic, making them ideal for the most advanced AI workloads, including real-time inference. They’re also the first GPUs to support PCIe Gen5 and the first to use HBM3 memory, giving them more than 3 terabytes per second of memory bandwidth.

Critically, the Nvidia H100 GPU features a new Transformer Engine that Nvidia says can speed up Transformer-based natural language processing models by up to six times compared with the previous-generation A100 GPU. Other improvements include second-generation secure Multi-Instance GPU technology, which allows a single H100 chip to be partitioned into seven smaller, fully isolated instances so it can handle multiple workloads at once.

The H100 is also the first GPU with confidential computing capabilities that protect AI models and their data while they’re running. Moreover, the chips boast new DPX instructions that accelerate dynamic programming, a technique commonly used in many optimization, data processing and omics algorithms, by up to 40 times compared with the most advanced central processing units, Nvidia said. That means the chips will be able to accelerate algorithms such as Floyd-Warshall, which is used to find optimal routes for autonomous robot fleets in warehouses, and Smith-Waterman, which is used in sequence alignment for DNA and protein classification.
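To make the dynamic-programming claim more concrete, here is a minimal, CPU-only Python sketch of the two algorithms named above. It is illustrative only: it does not use DPX or any Nvidia API, and the function names, the adjacency-matrix input format and the scoring values (match=2, mismatch=-1, gap=-1) are our own assumptions. Both algorithms fill a table cell by cell using an add-then-min step (Floyd-Warshall) or an add-then-max step (Smith-Waterman); that inner-loop pattern is, as we understand it, the kind of work DPX instructions are meant to speed up.

```python
import math

# Hypothetical helper names; plain CPU code, not Nvidia's DPX API.


def floyd_warshall(dist):
    """All-pairs shortest paths.

    dist is an n x n matrix: dist[i][j] is the cost of edge i -> j,
    math.inf if there is no edge, and 0 on the diagonal.
    Returns a new matrix of shortest-path costs.
    """
    n = len(dist)
    d = [row[:] for row in dist]  # copy so the caller's matrix is untouched
    for k in range(n):  # allow vertex k as an intermediate stop
        for i in range(n):
            for j in range(n):
                # DP recurrence: add two path costs, keep the minimum
                via_k = d[i][k] + d[k][j]
                if via_k < d[i][j]:
                    d[i][j] = via_k
    return d


def smith_waterman(a, b, match=2, mismatch=-1, gap=-1):
    """Best local-alignment score of sequences a and b (linear gap penalty)."""
    rows, cols = len(a) + 1, len(b) + 1
    h = [[0] * cols for _ in range(rows)]  # DP table, first row/column stay 0
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = h[i - 1][j] + gap     # gap in b
            left = h[i][j - 1] + gap   # gap in a
            # Flooring at 0 is what makes the alignment local
            h[i][j] = max(0, diag, up, left)
            best = max(best, h[i][j])
    return best


if __name__ == "__main__":
    inf = math.inf
    print(floyd_warshall([[0, 4, inf], [inf, 0, 1], [2, inf, 0]]))
    print(smith_waterman("TACGG", "ACG"))
```

For instance, with `inf = math.inf`, `floyd_warshall([[0, 4, inf], [inf, 0, 1], [2, inf, 0]])` finds that the cheapest route from node 1 to node 0 costs 3 by passing through node 2, and `smith_waterman("TACGG", "ACG")` scores 6 for the three matching bases. The first runs in O(n³) time and the second in O(len(a) * len(b)), costs that grow quickly at warehouse- or genome-scale inputs.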

Nvidia said the H100 GPUs are designed to power immersive, real-time applications built on giant-scale AI models. For example, they will enable more advanced chatbots to run Megatron 530B, which Nvidia describes as the most powerful monolithic transformer language model ever created, with up to 30 times greater throughput than A100 GPUs. Researchers will also be able to train other large AI models much faster than before, the company promised.

Besides powering more advanced AI models, Nvidia said the H100 GPUs will have a big impact in areas such as robotics, healthcare, quantum computing and data science.

Customers will have a range of options when the H100 ships in the third quarter. For instance, Nvidia said its fourth-generation DGX system, the DGX H100, will pack eight H100 GPUs, allowing it to deliver up to 32 petaflops of AI performance. The H100 chips will also be offered by cloud service providers including Amazon Web Services Inc., Google Cloud, Microsoft Azure, Oracle Cloud, Alibaba Cloud, Baidu AI Cloud and Tencent Cloud, all of which plan to offer H100-based instances.

There will also be a range of server options available from the likes of Dell Technologies Inc., Hewlett Packard Enterprise Co., Cisco Systems Inc., Atos SE, Lenovo Group Ltd. and others.

The new H100 GPUs were launched alongside Nvidia’s first Arm Neoverse-based discrete data center CPUs, which are designed for artificial intelligence infrastructure and high-performance computing.

The Nvidia Grace CPU Superchip, as it’s called, comprises two CPUs connected over NVLink-C2C, a new high-speed, low-latency chip-to-chip interconnect, and is designed to complement the company’s first CPU-GPU integrated module, the Grace Hopper Superchip, which was unveiled last year. Each Grace CPU Superchip packs 144 Arm cores in a single socket and supports Arm Ltd.’s new generation of vector extensions as well as all of Nvidia’s computing software stacks, including Nvidia RTX, Nvidia HPC, Nvidia AI and Omniverse.

The Grace CPU Superchips are also due to launch in the third quarter and will deliver the performance required for the most demanding HPC, AI, data analytics, scientific computing and hyperscale computing applications, Nvidia said.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.