INFRA

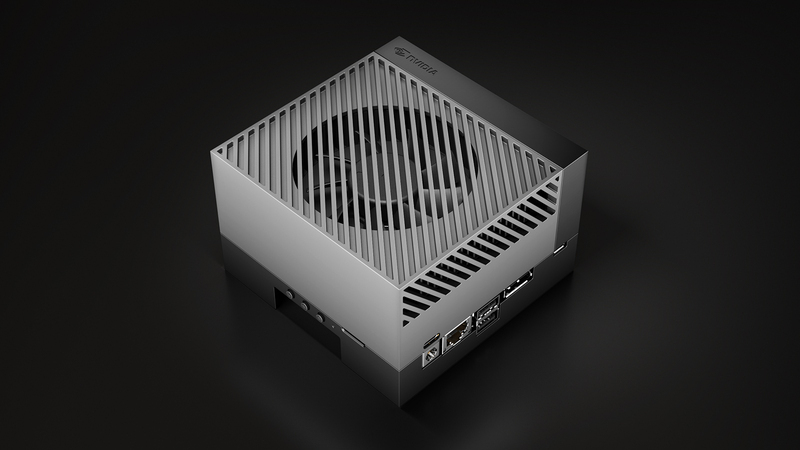

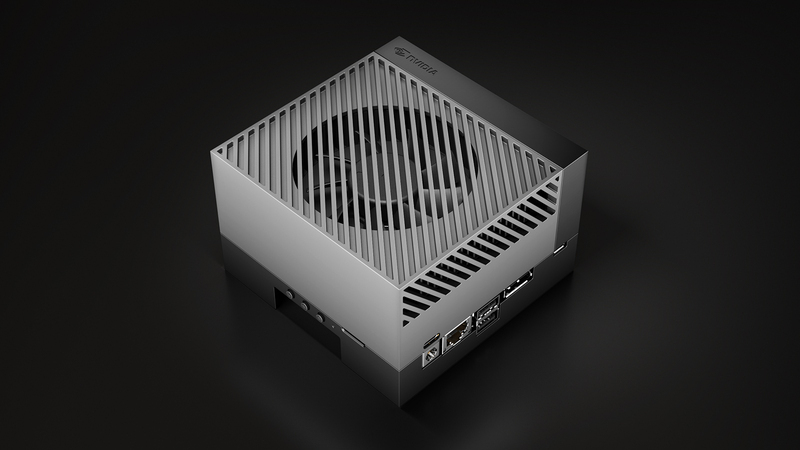

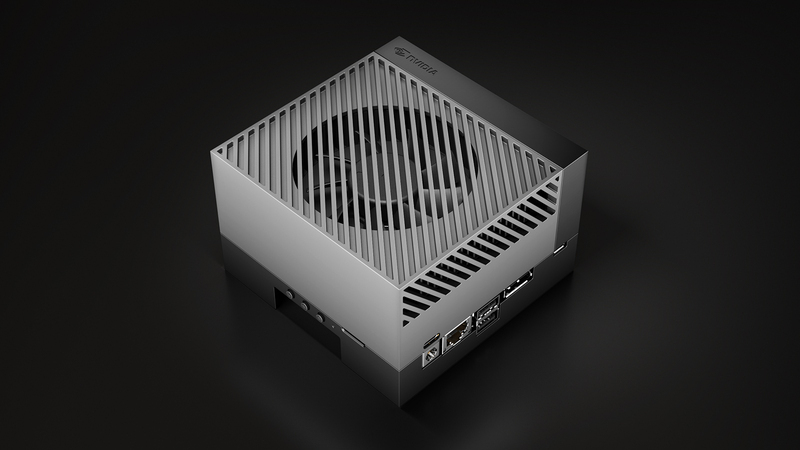

Nvidia Corp. is boosting its robotics capabilities with the launch of its new Nvidia Jetson AGX Orin developer kit, a powerful, compact and energy-efficient artificial intelligence supercomputer designed to power autonomous machines and robots.

Announced at the Nvidia GTC conference today, the Jetson AGX Orin is powered by an Nvidia Ampere architecture graphics processing unit and multiple Arm Cortex-A78AE central processing units. It also comes with Nvidia’s latest deep learning and vision accelerators and boasts high-speed interfaces, ultra-fast memory bandwidth and multimodal sensor support, Nvidia said.

All in all, it can deliver 275 trillion operations per second. That’s about eight times more powerful than its predecessor, the Nvidia Jetson AGX Xavier.

Nvidia said the AGX Orin platform is designed to power a new generation of autonomous mobile robots. It’s easy to understand why Nvidia is attacking this market. ABI Research says the AMR industry will generate $46 billion a year in revenue by 2030, up from just $8 billion in 2021.

Although AMRs already exist today, Nvidia said the old method of designing a compute and sensor stack from the ground up is proving too costly. Rather, it believes what’s needed is an existing platform for manufacturers to tap into, so they can focus on building the right software stack for each application.

To that end, Nvidia announced a new compute and sensor reference platform based on AGX Orin, called Isaac Nova Orin, which includes advanced sensor technologies and high-performance AI compute capabilities.

Nvidia said Nova Orin possesses all of the compute and sensor hardware that companies need to create AMRs with a high degree of autonomy. Nova Orin is powered by two Jetson AGX Orin units, which together provide 550 trillion operations per second of AI compute to enable perception, navigation and human-machine interaction, Nvidia said. The modules can process data in real time from the AMR’s central nervous system, which is a sensor suite of up to six cameras, three lidar sensors and eight ultrasonic sensors.

Nova Orin also comes with tools companies can use to simulate their AMR designs in Nvidia Omniverse, a highly realistic 3D design platform, Nvidia said.

Nvidia said Jetson AGX Orin and Nova Orin will be available later this year, along with new software simulation capabilities it says are designed to accelerate AMR deployments. These include some new hardware-accelerated modules, known as Isaac ROS GEMs, that will help robots to navigate, avoid obstacles and plan a pathway through their environment.

TIRIAS Research analyst Jim McGregor said that almost every industry stands to benefit from AI and robotics in the coming years. “Combined with Nvidia pretrained AI models, frameworks like TAO toolkit and Isaac on Omniverse, and supported by the Jetson developer community and its partner ecosystem, Jetson AGX Orin offers a scalable AI platform with unmatched resources that make it easy to adapt to almost any application,” McGregor said.

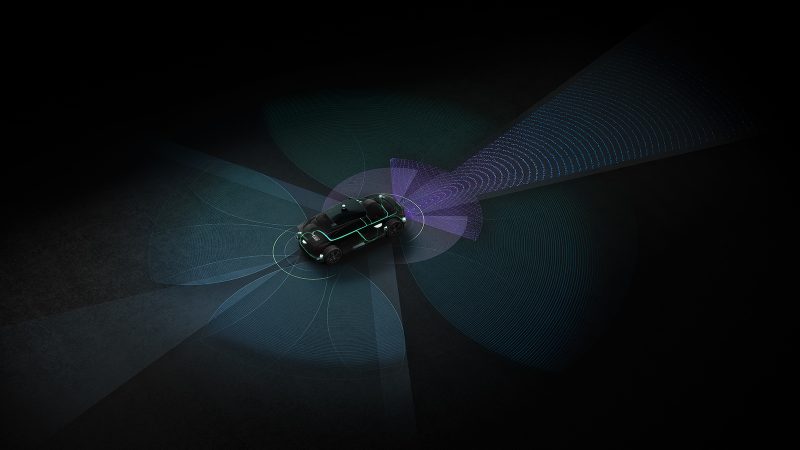

Alongside robotics, Nvidia is staking its claim to be the key player in the emerging autonomous driving market. At GTC, it announced the latest edition of its autonomous vehicle platform, Nvidia DRIVE Hyperion 9, built atop multiple DRIVE Atlan computers.

Nvidia said the platform will enable both intelligent driving and unprecedented in-cabin functionality for autonomous vehicles. It encompasses the required computer architecture and sensor set, plus Nvidia’s DRIVE Chauffeur and Concierge applications for automated and assisted driving. It’s based on an open and modular design too, so customers will be able to use only the components they need.

The key advance with DRIVE Hyperion 9 relates to its computing power. The latest-generation DRIVE Atlan system-on-a-chip offers more than twice as much computing power as the current DRIVE Orin platform, Nvidia said, meaning it will be capable of level 4 autonomous driving, which doesn’t require human interaction in driving.

Nvidia said the DRIVE Atlan SoC fuses Nvidia’s best capabilities in AI, automotive, robotics and safety, providing more than enough compute horsepower for the deep neural networks needed to take command of vehicles on the road. DRIVE Atlan can therefore be thought of as the brain of autonomous vehicles, while DRIVE Hyperion 9 will act as the central nervous system.

DRIVE Hyperion 9’s upgraded sensor suite is said to include surround imaging radar capabilities, as well as enhanced cameras with higher frame rates, two additional side lidar sensors and an improved undercarriage sensor, plus three internal cameras and an internal radar for occupant sensing.

Nvidia said DRIVE Hyperion 9 will begin production in 2026.
