AI

The artificial intelligence research firm OpenAI Inc. today released an updated version of its text-to-image generation model DALL-E, with higher resolution and lower latency than the original system.

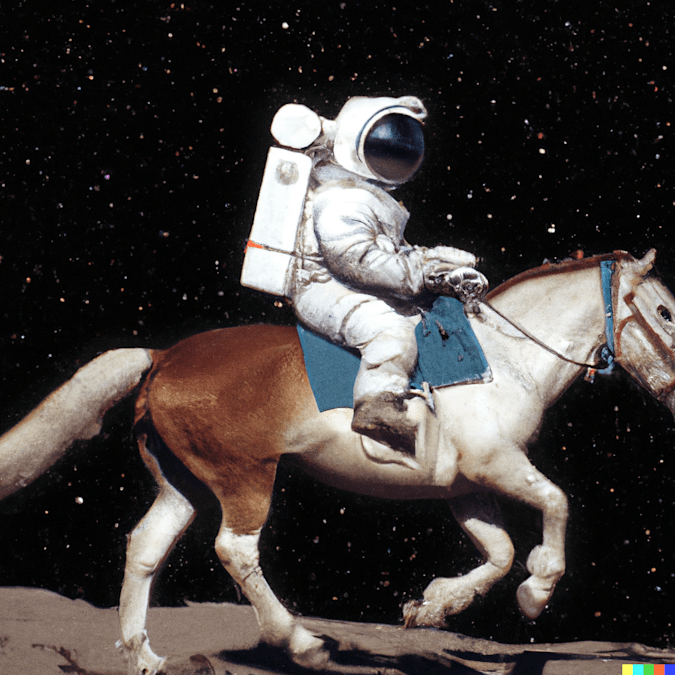

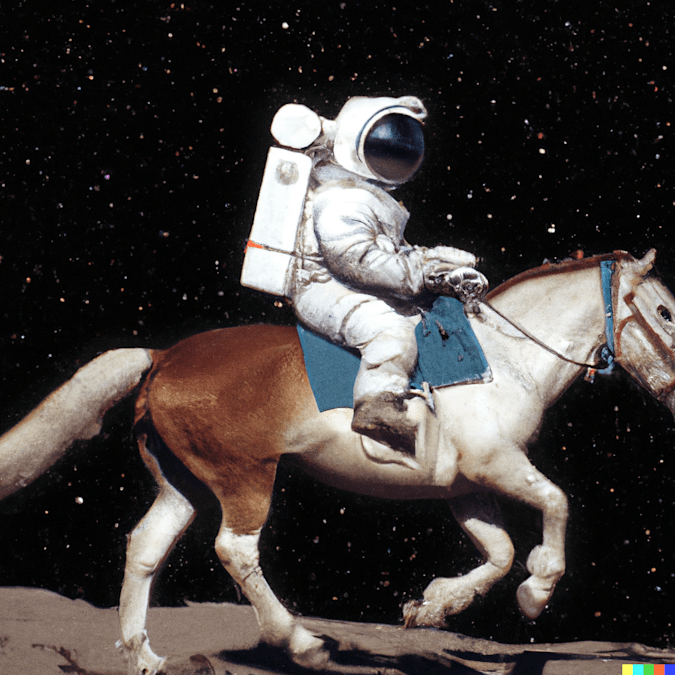

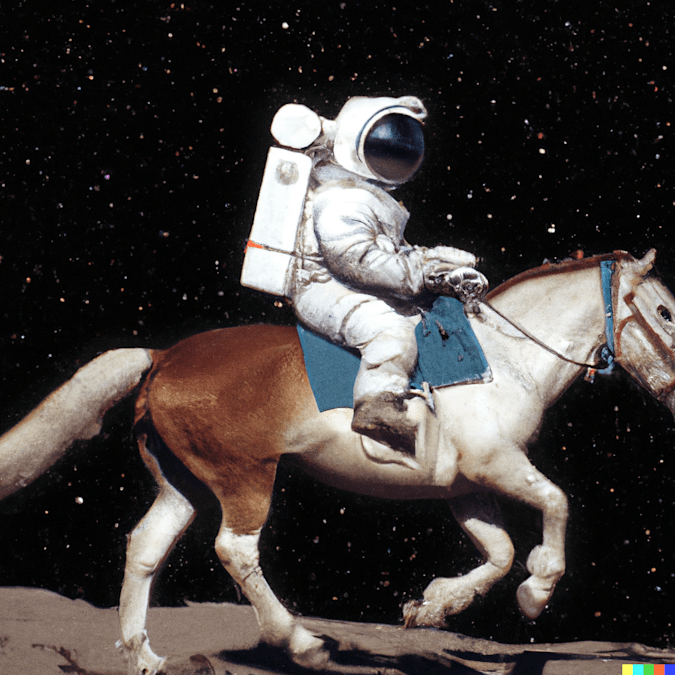

DALL-E 2, like the original DALL-E, can produce pictures, such as the image above, from written descriptions. It also comes with a few new capabilities, such as the ability to edit an existing picture. As usual with OpenAI’s work, DALL-E 2 isn’t being open-sourced, but researchers are encouraged to sign up to test the system, which may one day be made available for use in third-party applications.

“DALL-E” is a portmanteau of the names of the iconic artist Salvador Dalí and the robot WALL-E from the computer-animated science fiction film of the same name. Unveiled in January 2021, it provided a fascinating example of AI-based creativity.

The model was able to create depictions of anything from mundane mannequins to flannel shirts and even a “giraffe made of turtle.” OpenAI said at the time that it was continuing to build on the system, while being careful to examine dangers such as bias.

The result of that ongoing work is DALL-E 2, which includes a new “inpainting” feature that applies the original model’s text-to-image prowess at a more granular level, OpenAI explained. With the updated model, it’s possible to start with an existing picture, select a part of it and tell the model to edit it.

Thus someone could ask DALL-E 2 to block out a picture hanging on a wall and replace it with a new one, or add a glass of water to a table. The precise nature of the model means it can remove an object from an image while taking into account how that would affect details such as shadows. In the example below, the pink flamingo was added to an existing image:

A second new feature in DALL-E 2 is variations, which allows users to upload an image and then have the model create variations of it. It’s also possible to blend two existing images, generating a third picture that contains elements of both.

DALL-E 2 is built on CLIP, a computer vision system created by OpenAI. The original DALL-E, by contrast, was based on Generative Pre-trained Transformer 3, a so-called autoregressive language model that uses deep learning to produce human-like text, adapted to generate images instead.

CLIP was originally designed to look at images and summarize the content of what it saw in human language, similar to how humans do. Later, OpenAI iterated on this to create an inverted version of that model called unCLIP, which begins with the summary and works backward to recreate the image.

DALL-E 2 has some built-in safeguards. OpenAI explained that it was trained on data where potentially objectionable material was first weeded out, so as to reduce the chance of it creating an image that some might find offensive.

Its images also contain a watermark indicating that DALL-E 2 created them. OpenAI has also ensured it can’t generate any recognizable human faces based on a name, so apparently it wouldn’t be able to draw a portrait of, say, Donald Trump, if it were asked. Even so, the possibilities are virtually limitless, as this example of “a bowl of soup that looks like a monster, knitted out of wool,” demonstrates.

Andy Thurai of Constellation Research Inc. said DALL-E 2 is an exciting but somewhat risky initiative that represents one of the first times that AI has gone cross-boundary. “Reading text and understanding the meaning is one thing, but to enable critical thinking and provide results based on that is something else,” he said. “It’s getting into potentially dangerous territory.”

Society is already struggling with fake news and things such as “deepfakes” that are generated by AI, Thurai said, and if DALL-E 2’s technology becomes more widely available it could complicate things in that area.

“An AI program, written in plain text, can be instructed to edit a picture in a malicious way and post it online, which could damage the reputation of both individuals and companies,” he said. “So care should be exercised along with governance or some way of showing this is an AI-generated image. Though OpenAI claims the DALL-E 2 model was built on an unbiased, clean data set, proof needs to be provided to the market about how this was done. The claim that only non G-rated images can be manipulated needs to be proven as well.”

Thurai’s colleague Holger Mueller was more forgiving, saying DALL-E 2 shows AI is rapidly maturing as a technology. “It is no longer up to AI to make sense of the real world, but rather create the real world based on directions we give it,” he said. “DALL-E 2 is a major step forward that can generate incredibly complex pictures from text and will likely have real implications for developers in terms of value creation and next-generation user interface use cases.”

OpenAI said that unlike the original DALL-E, DALL-E 2 will be made available for testing only by vetted partners, and there will be some restrictions in place. Users will not be allowed to upload or generate images that aren’t “G-rated” or could cause harm or upset. That means no nudity, hate symbols or obscene pictures will be allowed.

OpenAI said it hopes to add DALL-E 2 to its application programming interface toolset once it has undergone extensive testing, meaning it could one day be made available to third-party apps.
