Meta AI, the artificial intelligence research division of Meta Platforms Inc., is embarking on a long-term research project to study how the human brain processes language, with the aim of building better language models.

The project was announced today and will be carried out in collaboration with the neuroimaging center NeuroSpin and Inria, the French National Institute for Research in Digital Science and Technology. With these two partners, Meta AI will compare how AI language models and human brains respond to the same spoken or written sentences.

“Understanding the origins of human intelligence is the grand challenge for science in the 21st century,” said Stanislas Dehaene, director of NeuroSpin. “Artificial neural networks for language are getting closer and closer to mimicking the activity of the human brain, and thereby shedding new light on how thought might be implemented in neural tissue.”

Currently, the AI models that most closely approximate human language are trained to predict the next word in a sentence from its context. Although these systems can give users a superficial sense of “humanness,” each prediction rests on statistical patterns learned from how many other sentences in large training datasets continued.

The human brain, by contrast, anticipates words and ideas far ahead, including everything a sentence or thought might represent.

For example, given “Once upon a,” an AI model makes a one-off prediction of the next word: “time.” A person raised on fairy tales, on the other hand, would hear “Once upon a” and do more than predict “time” as the next word. Their brain would also bring to mind the magical notions that come with the phrase, such as wicked witches, dragons, castles, heroes and other culturally significant ideas.
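The one-off next-word prediction described above can be illustrated with a toy n-gram model. This is a minimal sketch under loud assumptions: the three-sentence corpus and the helper names `train_trigrams` and `predict_next` are hypothetical, and modern language models use neural networks trained on vast datasets rather than raw word counts. It only shows the core idea of predicting the next word from how other sentences continued.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real language models learn from billions of words.
CORPUS = [
    "once upon a time there lived a dragon",
    "once upon a time a witch cursed a castle",
    "the hero rode upon a horse",
]


def train_trigrams(sentences):
    """Count which word followed each pair of consecutive words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for w1, w2, nxt in zip(words, words[1:], words[2:]):
            counts[(w1, w2)][nxt] += 1
    return counts


def predict_next(counts, context):
    """Return the most frequent word seen after the last two words of `context`."""
    key = tuple(context.lower().split()[-2:])
    followers = counts.get(key)
    if not followers:
        return None  # this two-word context never appeared in training
    return followers.most_common(1)[0][0]


model = train_trigrams(CORPUS)
print(predict_next(model, "Once upon a"))  # → time
```

Given the context “once upon a,” the sketch returns “time” only because that continuation was the most frequent one in its tiny corpus; nothing about witches, dragons or castles is represented, which is the contrast the fairy-tale example draws with the human brain.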

When brains make these predictions, they generate specific “brain states” that can be observed with brain imaging. Meta AI is using functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG) scanners to take snapshots of brain activity while volunteers read or listen to a story.

When the researchers applied machine learning to brain scans from public datasets, combined with new fMRI and MEG scans, they found that language processing in the human brain is organized into hierarchies that resemble how AI language models work.
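One common way to make such comparisons is to fit a linear mapping from a model's internal activations to recorded brain responses, then measure how well that mapping predicts held-out recordings. The sketch below is a simplified illustration, not Meta AI's actual pipeline: it assumes closed-form ridge regression for the mapping, Pearson correlation as the score, and synthetic data in place of fMRI or MEG recordings. The function names `ridge_fit` and `brain_score` are hypothetical.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def brain_score(activations, brain, alpha=1.0, train_frac=0.8):
    """Mean Pearson r between predicted and held-out responses across voxels/sensors."""
    n_train = int(train_frac * len(activations))
    W = ridge_fit(activations[:n_train], brain[:n_train], alpha)
    pred = activations[n_train:] @ W
    true = brain[n_train:]
    # Pearson correlation computed separately for each column (voxel or sensor).
    pred_c = pred - pred.mean(axis=0)
    true_c = true - true.mean(axis=0)
    r = (pred_c * true_c).sum(axis=0) / (
        np.linalg.norm(pred_c, axis=0) * np.linalg.norm(true_c, axis=0)
    )
    return float(r.mean())


rng = np.random.default_rng(0)
n_words, n_units, n_voxels = 500, 20, 10
acts = rng.standard_normal((n_words, n_units))  # stand-in model activations, one row per word
mixing = rng.standard_normal((n_units, n_voxels))
# Synthetic "brain" data: a noisy linear function of the activations.
brain = acts @ mixing + 0.5 * rng.standard_normal((n_words, n_voxels))
unrelated = rng.standard_normal((n_words, n_units))  # activations with no link to the brain data

print(round(brain_score(acts, brain), 2))
print(round(brain_score(unrelated, brain), 2))
```

Activations that actually drive the synthetic recordings should score close to 1, while unrelated activations should score near 0. Real recordings are far noisier, and a real analysis would also cross-validate the regularization strength `alpha`.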

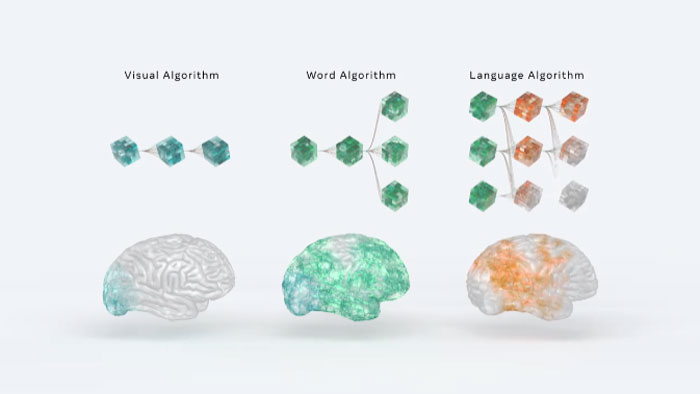

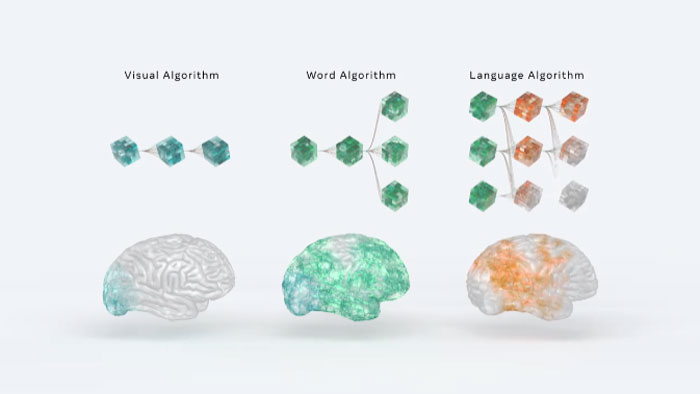

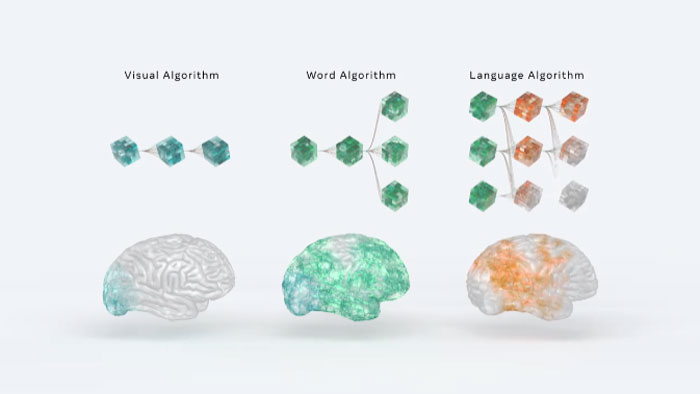

For example, some brain areas behave like visual-processing algorithms and activate when words trigger visual stimuli, other areas resemble algorithms that represent word meaning, and entire networks appear to behave similarly to transformer-based language models.

Brain studies have already established that particular brain regions take part in visual and language processing, and that their interactions form networks for constructing narratives and the representations that support understanding. Meta AI’s results showed that certain regions, such as the prefrontal and parietal cortices, located in the front and middle of the brain, were best represented by language models enhanced with predictions of words further in the future.
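Enhancing a language model with far-off predictions can be pictured as a feature-construction step: alongside each word's activations, include the representation of a word some distance ahead. This is a hypothetical illustration under stated assumptions (the function `add_forecast_window`, its `distance` parameter and the toy activations are invented here), not Meta AI's code.

```python
import numpy as np


def add_forecast_window(activations, distance):
    """Concatenate each word's activations with those of the word `distance` steps ahead.

    The last `distance` words have no future word to pair with, so they are dropped.
    """
    if distance < 1:
        raise ValueError("distance must be at least 1")
    current = activations[:-distance]
    future = activations[distance:]
    return np.concatenate([current, future], axis=1)


acts = np.arange(12, dtype=float).reshape(6, 2)  # 6 words, 2 hypothetical units each
enhanced = add_forecast_window(acts, distance=2)
print(enhanced.shape)  # → (4, 4)
print(enhanced[0])     # → [0. 1. 4. 5.]
```

In a study of this kind, the enhanced features would be mapped onto brain recordings and compared with the plain activations; regions whose activity is better explained by the enhanced version are the ones that appear to anticipate further ahead.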

“We found that the better the algorithm is at predicting the next word, the more it resembles brain activity, and this is quite important because it suggests that the internal representations that are shared between brains and algorithms are useful for the algorithm to process language,” said Charlotte Caucheteux, a Ph.D. student working at Meta AI.

The Meta AI researchers and their collaborators believe they are on a promising path, because the finding was verified quickly by analyzing the brain activations of 200 volunteers in a simple reading test. About a week later, a team at the Massachusetts Institute of Technology independently completed a similar study and reached very similar conclusions.

With these studies, Meta AI hopes to quantify the similarities between human brains and AI models and to use them to generate new insights into how the brain works. AI that acts and reacts more in tune with how humans use language should, in turn, be able to interact with people more naturally.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.