AI

Amazon.com Inc.’s Alexa speakers already offer a wide range of voice features backed by artificial intelligence, and at the company’s re:MARS event in Las Vegas on Wednesday, Amazon revealed an upcoming one: the ability to mimic voices.

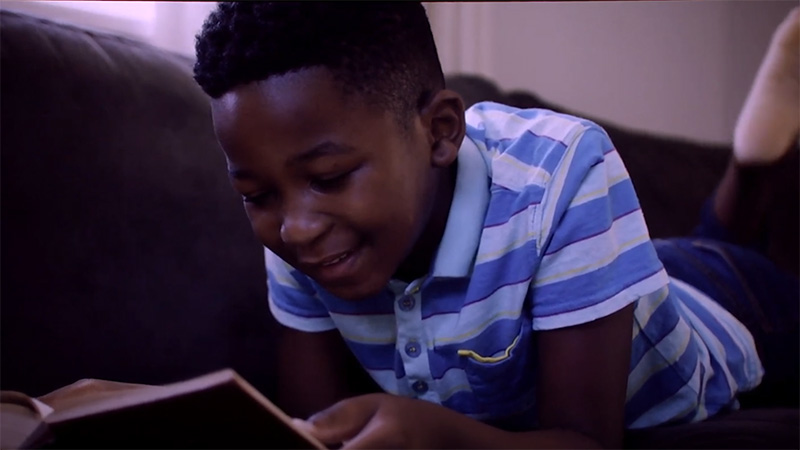

To demonstrate the feature, Rohit Prasad, Alexa’s senior vice president and head scientist, played a clip of Alexa mimicking the voice of a child’s recently deceased grandmother reading “The Wizard of Oz.”

Prasad explained that the company has been seeking ways to make AI more empathetic and compassionate toward human needs, in light of the “companionship relationship” that people have with Alexa. He pointed specifically to people who have lost loved ones.

“While AI can’t eliminate that pain of loss, it can definitely make the memories last,” he said.

Prasad explained that in the demonstration, Alexa had learned to speak in the grandmother’s voice from less than a minute of high-quality recording of her voice, versus the hours of studio recordings required for other AI models.

During the presentation, he explained that the feature does pose some technical challenges, because it required framing the problem as a voice conversion task rather than a speech generation task. Voice conversion is a method for generating synthetic speech from recorded speech through filtering, as described in a white paper published by Amazon’s research team.

“We are unquestionably living in the golden era of AI, where our dreams and science fiction are becoming a reality,” Prasad added.

Amazon has given no details on how long it will take to develop and deploy this particular feature.

Security and AI ethics experts have long questioned the emergence and role of AI audio “deepfakes.” Although their use in scam calls is still somewhat rare, the technology could raise wide-ranging concerns if it were released as part of consumer-grade technology.

“The phone attacking implications of this tool are not good at all — this will likely be used for impersonation,” tweeted Rachel Tobac, chief executive of SocialProof Security. “I know this technology already exists, I’ve talked about this risk with other orgs tools. But the easier it is to use, the more it will be abused. And this sounds like it may be pretty user friendly.”

For example, most hacking doesn’t operate the way it’s portrayed in cinema, with fingers tapping rapidly on keyboards and scripts flying across screens. Often, the information needed to break into networks is extracted through social engineering: attackers pretend to be someone trusted in order to deceive people inside an organization who have special access. If you can sound like someone’s boss asking for a password or access, it’s that much easier.

In one recent example, fraudsters used an audio deepfake to steal $35 million from a United Arab Emirates company, the second known use of the technology to pull off this sort of heist.
