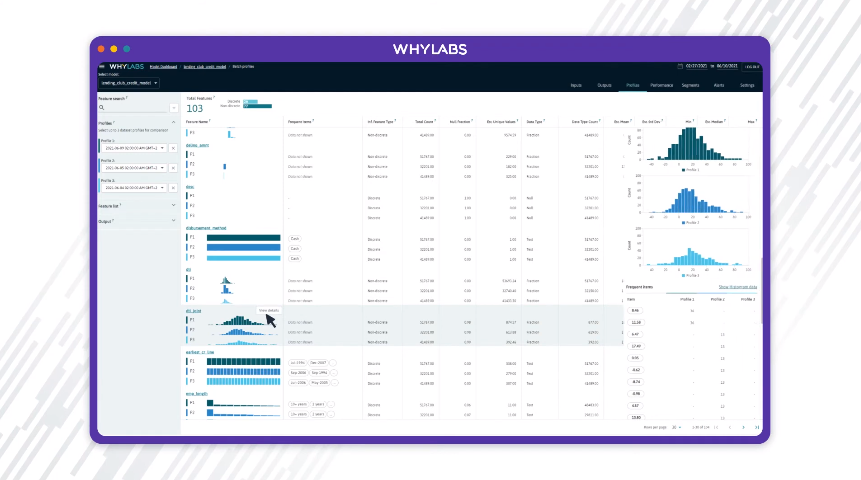

Startup WhyLabs Inc. today released LangKit, an open-source toolkit designed to help companies monitor their large language models for safety issues and other risks.

Seattle-based WhyLabs is backed by $14 million in funding. Its investors include Madrona Venture Group, Bezos Expeditions and AI Fund, a venture capital firm led by artificial intelligence pioneer Andrew Ng. The company provides a platform that helps companies monitor their AI models and training datasets for technical issues.

WhyLabs’ new LangKit toolkit is designed to perform the same task, but with a particular focus on large language models. The company says the software can spot a wide range of common issues in such models.

“We have been working with the industry’s most advanced AI/ML teams for the past year to build an approach for evaluating and monitoring generative models; these efforts culminated in the creation of LangKit,” said co-founder and Chief Executive Officer Alessya Visnjic.

A core selling point of LangKit is its ability to detect so-called AI hallucinations. That’s the term for the phenomenon where a language model makes up the information it includes in a response. LangKit can also detect toxic AI output, as well as spot cases when a model might accidentally leak sensitive business information from its training dataset.

Another set of monitoring features in LangKit focuses on helping companies track a model’s usability. According to the company, the toolkit can monitor the relevance of AI responses to users’ questions. Furthermore, LangKit evaluates those responses’ readability.

WhyLabs says the toolkit can help companies monitor not only model output but also user input. In particular, the software is designed to spot malicious prompts sent to a language model as part of AI jailbreaking attempts. AI jailbreaking is the practice of tricking a neural network into generating output its built-in guardrails usually block.

Because LangKit is open-source, users with advanced requirements can extend it by adding custom monitoring metrics. That customizability allows companies to monitor aspects of an AI model the toolkit doesn’t track out of the box.

Users can configure LangKit to generate alerts when certain types of technical issues emerge. The software also visualizes the error information it collects in graphs. Administrators can consult the graphs to determine whether a language model’s accuracy might be decreasing over time, a phenomenon known as AI drift.
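To make the idea of a drift alert concrete, here is a minimal sketch in plain Python. It does not use LangKit's API. The function name `detect_drift`, the window size, the threshold and the sample scores are all hypothetical, chosen only to illustrate comparing recent quality scores against an earlier baseline.

```python
from statistics import mean
from typing import List


def detect_drift(scores: List[float], window: int = 3, max_drop: float = 0.1) -> bool:
    """Flag drift when the mean of the latest `window` scores falls more than
    `max_drop` below the mean of the first `window` scores.

    Hypothetical helper for illustration; not part of LangKit.
    """
    if len(scores) < 2 * window:
        # Not enough data for separate baseline and recent windows.
        return False
    baseline = mean(scores[:window])
    recent = mean(scores[-window:])
    return (baseline - recent) > max_drop


# Made-up daily quality scores, oldest first.
declining = [0.91, 0.90, 0.92, 0.88, 0.80, 0.76, 0.74]
stable = [0.90, 0.91, 0.89, 0.90, 0.91, 0.90]

if detect_drift(declining):
    print("ALERT: response quality has dropped against the baseline")
if not detect_drift(stable):
    print("OK: no drift detected in the stable series")
```

A production monitor would likely use a statistical test or a rolling baseline rather than a fixed threshold; the fixed comparison here keeps the example short.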

Another task LangKit promises to simplify is the process of testing AI code updates. Using the toolkit, a software team may input a set of test prompts into a model both before and immediately after a code change. By comparing the responses the AI generates, developers can determine whether the update increased or inadvertently decreased response quality.
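The before-and-after comparison described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not LangKit's API: `model_before` and `model_after` are hypothetical stand-ins for two versions of a model, and `relevance_score` is a toy word-overlap metric standing in for whatever quality metric a team actually tracks.

```python
from statistics import mean
from typing import Callable, List


def relevance_score(prompt: str, response: str) -> float:
    """Toy metric: fraction of distinct prompt words that reappear in the response."""
    prompt_words = set(prompt.lower().split())
    response_words = set(response.lower().split())
    if not prompt_words:
        return 0.0
    return len(prompt_words & response_words) / len(prompt_words)


def evaluate(model: Callable[[str], str], prompts: List[str]) -> float:
    """Average the toy metric over a fixed set of test prompts."""
    return mean(relevance_score(p, model(p)) for p in prompts)


# Hypothetical stand-ins for the model before and after a code change.
def model_before(prompt: str) -> str:
    return "No answer available."


def model_after(prompt: str) -> str:
    return f"Here is an answer about: {prompt}"


TEST_PROMPTS = ["what is model drift", "how do I reset my password"]

score_before = evaluate(model_before, TEST_PROMPTS)
score_after = evaluate(model_after, TEST_PROMPTS)
delta = score_after - score_before

verdict = "improved" if delta > 0 else "regressed or unchanged"
print(f"before={score_before:.2f} after={score_after:.2f} -> {verdict}")
```

Running the same fixed prompt set through both versions is what makes the two scores comparable; changing the prompts between runs would confound the result.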
