AI

Smartphone chip maker Qualcomm Inc. took to the stage at the annual IEEE/CVF Conference on Computer Vision and Pattern Recognition today to announce its latest advancements in running generative artificial intelligence at the edge.

The company showcased various new generative AI applications, including a new image generation model, a large language model-based fitness coach and a 3D reconstruction tool for extended reality, that run entirely on a mobile device.

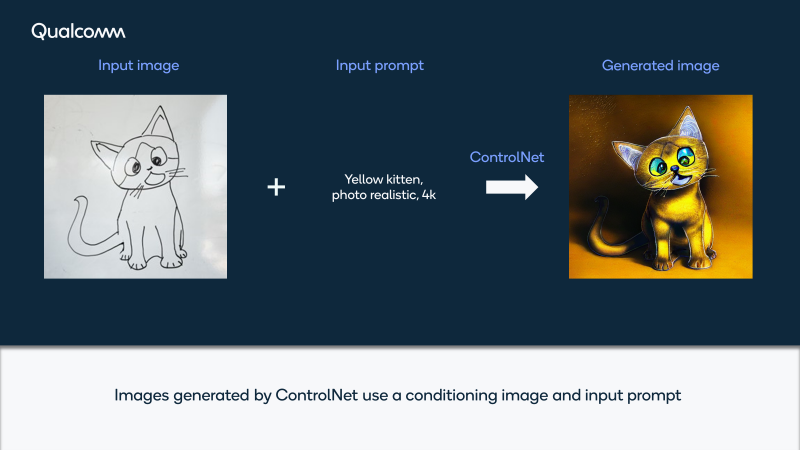

Qualcomm’s pièce de résistance was ControlNet, a 1.5-billion-parameter image-to-image model that runs on a typical midrange smartphone. The company explained that ControlNet belongs to a class of generative AI algorithms known as language-vision models, which enable precise control over image generation by conditioning on an input image together with an input text description.

In an onstage demo, Qualcomm showed how ControlNet could generate new images in less than 12 seconds from an uploaded photo and a plain-English text description of how it should be edited. In one example, the company uploaded a basic sketch of a kitten, along with the description “yellow kitten, photorealistic, 4k.” Within seconds, the mobile device running ControlNet transformed that sketch into something far more impressive.

Qualcomm explained that ControlNet is powered by a full stack of AI optimizations across its model architecture, along with specialized AI software such as the Qualcomm AI Stack and AI Engine, and neural hardware accelerators on the actual device.

In another example, Qualcomm showed how it used an LLM similar to OpenAI LP’s ChatGPT to create a digital fitness coach capable of natural, contextual interactions in real time. Users simply film themselves as they exercise, and the video is processed on the device by an action recognition model, Qualcomm explained.

Then a stateful orchestrator translates the recognized actions into prompts that are fed into the LLM, enabling the digital fitness coach to provide feedback to the user as their workout progresses. Qualcomm explained that three innovations made this possible: a new vision model trained to detect fitness activities, a language model trained to generate language grounded in visual concepts, and the orchestrator, which coordinates the interaction between those two modalities to facilitate live feedback.
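The flow from recognized actions to LLM prompts can be illustrated with a minimal Python sketch. This is not Qualcomm's implementation: the class name `CoachOrchestrator`, its fields and the prompt wording are all hypothetical, and the action labels stand in for whatever the on-device vision model would emit. The sketch only shows the general idea of keeping state between detections so that a prompt is produced when the activity changes, rather than on every frame.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CoachOrchestrator:
    """Hypothetical stateful orchestrator: tracks workout state and builds LLM prompts."""

    current_action: Optional[str] = None
    action_counts: dict = field(default_factory=dict)

    def observe(self, action: str) -> Optional[str]:
        """Record one recognized action; return a prompt only when the activity changes."""
        self.action_counts[action] = self.action_counts.get(action, 0) + 1
        if action == self.current_action:
            return None  # same activity as before, so no new prompt
        previous = self.current_action
        self.current_action = action
        return self._build_prompt(previous, action)

    def _build_prompt(self, previous: Optional[str], action: str) -> str:
        # Summarize everything seen so far so the LLM has workout context.
        history = ", ".join(f"{name} x{count}" for name, count in self.action_counts.items())
        if previous is None:
            return f"The user just started {action}. Workout so far: {history}. Give brief encouragement."
        return f"The user switched from {previous} to {action}. Workout so far: {history}. Give brief feedback."


# Simulated output of an action recognition model (assumed labels).
orchestrator = CoachOrchestrator()
prompts = []
for label in ["squat", "squat", "push-up"]:
    prompt = orchestrator.observe(label)
    if prompt is not None:
        prompts.append(prompt)  # in a real system this would be sent to the LLM
```

Here three detections yield two prompts, because the repeated "squat" detection does not change the tracked state.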

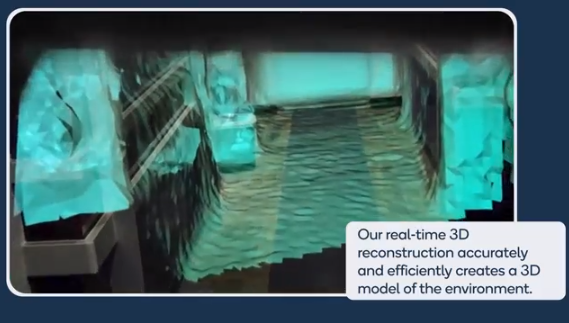

The 3D reconstruction tool for XR, a catch-all term that refers to augmented reality, virtual reality and mixed reality, runs entirely on a mobile device and enables developers to create highly detailed 3D models of almost any environment. Qualcomm said it works by generating depth maps from individual images (pictured) and combining them into 3D scene representations.
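To make the idea of combining depth maps into a 3D scene concrete, here is a small Python sketch using the standard pinhole camera model. It is an illustration under stated assumptions, not Qualcomm's method: the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`) and the translation-only camera offset are all hypothetical simplifications. A real pipeline would estimate depth with a neural network and use a full rotation-plus-translation pose for each view.

```python
def backproject_depth(depth, fx, fy, cx, cy, camera_offset=(0.0, 0.0, 0.0)):
    """Turn a depth map (rows of depths in metres) into 3D points via a pinhole model."""
    ox, oy, oz = camera_offset
    points = []
    for v, row in enumerate(depth):        # v is the pixel row
        for u, z in enumerate(row):        # u is the pixel column
            if z <= 0:
                continue                   # skip pixels with no valid depth
            x = (u - cx) * z / fx          # horizontal position in camera space
            y = (v - cy) * z / fy          # vertical position in camera space
            # Assumption: the camera pose is a pure translation, for simplicity.
            points.append((x + ox, y + oy, z + oz))
    return points


def merge_views(views):
    """Combine points from several (depth, intrinsics, offset) views into one cloud."""
    cloud = []
    for depth, intrinsics, offset in views:
        cloud.extend(backproject_depth(depth, *intrinsics, camera_offset=offset))
    return cloud


# Two tiny 2x2 depth maps taken from cameras one metre apart along x.
intrinsics = (2.0, 2.0, 0.0, 0.0)
view_a = ([[2.0, 2.0], [0.0, 4.0]], intrinsics, (0.0, 0.0, 0.0))
view_b = ([[2.0, 0.0], [0.0, 0.0]], intrinsics, (1.0, 0.0, 0.0))
cloud = merge_views([view_a, view_b])
```

The merged list is a simple point cloud; denser scene representations such as meshes or voxel grids would be built on top of points like these.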

The accurate 3D maps it creates can be used in a range of AR and VR applications, Qualcomm said. In an example of such an application, Qualcomm created an AR scenario that allows users to shoot virtual balls against real objects, such as walls and furniture, and see them bouncing off those objects in a realistic way, based on accurate physics calculations.
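The bouncing behavior described above comes down to reflecting the ball's velocity about the normal of the surface it hits. The following Python sketch shows that standard reflection formula, v' = v - (1 + e)(v · n)n, where e is a restitution coefficient. The function name `bounce` and the default restitution value are assumptions for illustration, and nothing here is taken from Qualcomm's demo code; in an AR app the surface normal would come from the reconstructed 3D map.

```python
def bounce(velocity, normal, restitution=0.8):
    """Reflect a velocity off a surface whose unit normal is `normal`.

    restitution=1.0 is a perfectly elastic bounce; lower values lose energy.
    """
    dot = sum(v * n for v, n in zip(velocity, normal))
    if dot >= 0:
        return tuple(velocity)  # already moving away from the surface
    # Remove the component going into the surface and add back a scaled rebound.
    return tuple(v - (1 + restitution) * dot * n for v, n in zip(velocity, normal))


# A ball moving right and downward hits a floor whose normal points up.
floor_normal = (0.0, 1.0, 0.0)
elastic = bounce((1.0, -2.0, 0.0), floor_normal, restitution=1.0)
damped = bounce((1.0, -2.0, 0.0), floor_normal, restitution=0.5)
```

With full restitution the vertical speed is simply flipped, while the damped case rebounds at half the incoming vertical speed; the motion parallel to the floor is unchanged in both.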

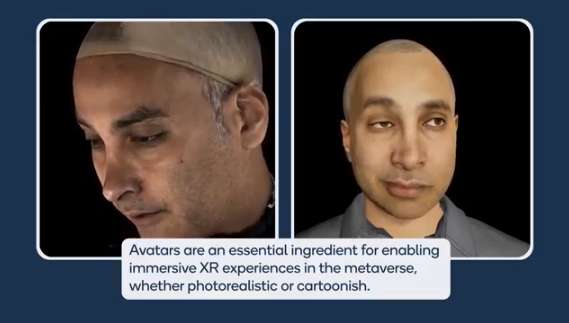

Qualcomm further applied generative AI to facial avatar creation for XR environments. It showed off a model that can take one or more 2D photos of someone’s face, apply a personalized mesh and texture to that image, and transform it into a 3D face avatar.

The avatars can even render users’ actions in real time, using headset cameras that track the movements of their eyes and mouths and recreate them in the avatar itself. Qualcomm explained that its goal with this model is to enable users to create a digital human avatar on its Snapdragon XR platform for use in the metaverse and human-machine interfaces.

Finally, Qualcomm showed how it’s using AI to improve its driver monitoring technology. In this case, it created a computer vision model that can detect dangerous driving conditions, and combined this with active infrared cameras that monitor the driver’s status in real time, including signs of distraction or drowsiness. The system, which runs on the Snapdragon Ride Flex system-on-chip, can then warn the driver whenever it detects dangerous driving, Qualcomm said.
