SECURITY

Just two months after OpenAI LLC released its ChatGPT large language model into an open beta test, researchers at WithSecure Corp. published a report demonstrating how the chatbot could be used to write convincing email messages that encouraged recipients to share corporate secrets, create tweets that hyped once-in-a-lifetime investment opportunities and even attack individuals through social media.

At about the same time, researchers at CyberArk Software Ltd. demonstrated how ChatGPT could be used to produce polymorphic, or mutating, malware that is almost impossible for security tools to detect. “The possibilities for malware development are vast [and]… can present significant challenges for security professionals,” they wrote.

With the arrival of ChatGPT and a host of other new generative artificial intelligence models, cybersecurity today stands at a crossroads. Never before have such powerful tools existed to create reams of code in the blink of an eye. On the plus side, chatbots and LLMs can help defenders identify threats quickly and neutralize them with minimal human effort and intervention. But they can also quickly spin fictional narratives, create code that hides malware and devise highly targeted “spear phishing” emails at large scale.

That is the dark side of the riches these gifts have bestowed. And that is the challenge at hand.

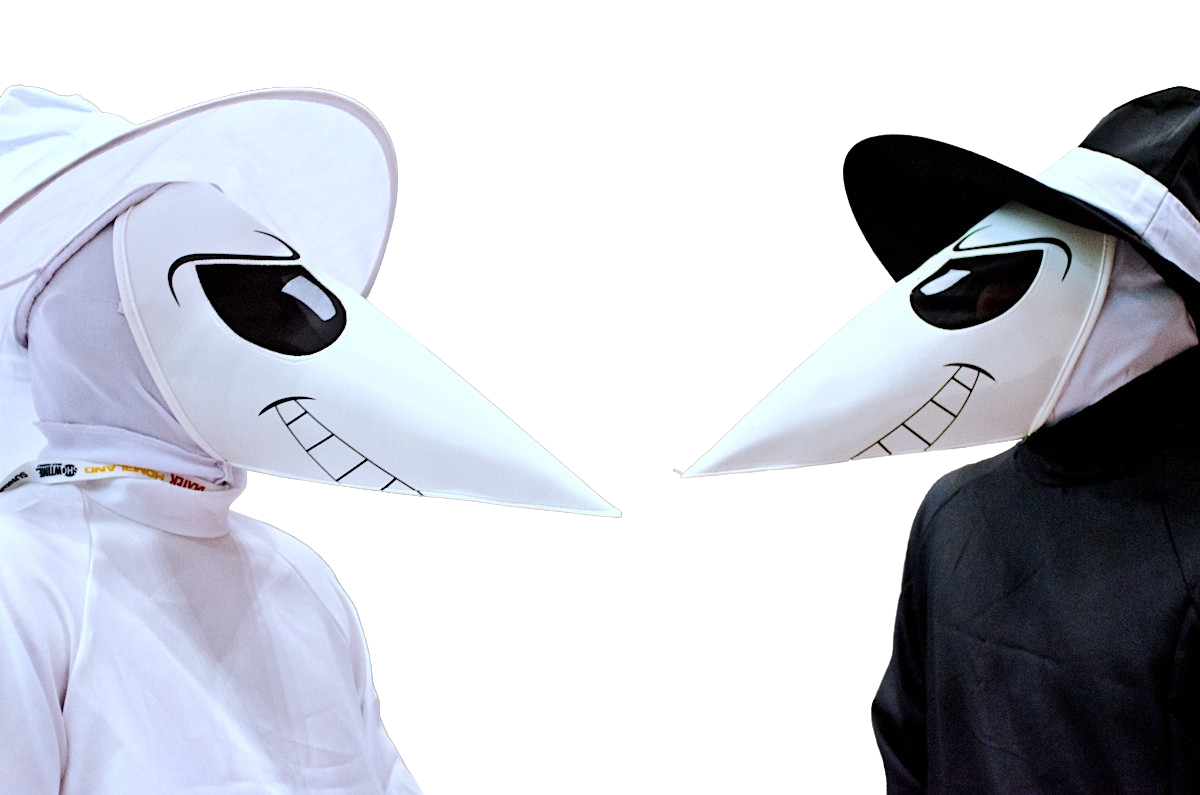

Trusting our tools has always been the bane of cybersecurity defenders. Network packet analyzers can reveal intrusions but also be used to find vulnerable servers. Firewalls can segregate and isolate traffic but can also be compromised to allow hackers access. Backdoors and virtual private networks can serve both good and evil purposes. But never before have the tools been so superlatively good and bad at the same time — a sort of real-life cybersecurity version of Mad magazine’s classic cartoon “Spy vs. Spy.” In the rush to develop new extensions to the chatbots, we have also created the potential for future misery.

Gartner’s Litan: “Bad guys have been using AI for a long time.” Photo: Gartner

SiliconANGLE posed a simple question to numerous security experts: Will artificial intelligence ultimately be of greater benefit to cyber criminals or those whose mission is to foil them? Their responses ran the gamut from neutral to cautiously optimistic. Although most said AI will simply elevate the cat-and-mouse game that has characterized cybersecurity for years, there is some reason to hope that generative models can be of greater value to the defenders than the attackers.

They noted, in particular, that AI can help relieve the chronic shortage of security professionals that makes cybersecurity a constant firefight and automate at-scale processes that are now frustratingly manual.

However, they’re also painfully aware that cybercriminals have been experimenting with large language models since the day they were introduced, that there is already a bustling market on the dark web for tools that turn LLMs into attack machines and that generative AI models could easily be used to spread malware through software supply chain attacks, which have grown more than 740% annually since 2019 with open-source packages being favorite targets.

“Bad guys have been using AI for a long time,” said Avivah Litan, a distinguished analyst at Gartner Inc. “Generative AI is just upping the ante. Now they can use generative AI to do that and construct their attacks much more quickly and effectively.”

There is plenty of evidence that the black hats are already hard at work. Palo Alto Networks Inc.’s Unit 42 research group recently reported “a boom in traditional malware techniques taking advantage of interest in AI/ChatGPT.” The group also said it has seen a 910% increase this year in monthly registrations for domain names with variations on OpenAI or ChatGPT that are intended to lure people looking for the chatbot to a malicious destination.

SIDEBAR: How organizations can combat AI-equipped attackers

A survey of 1,500 information technology executives by BlackBerry Ltd. early this year found that half predict that a successful attack credited to ChatGPT will occur within the next year and 74% are worried about potential threats the technology poses.

The biggest fear is that AI could expand the population of adversaries by empowering aspiring attackers with little coding skill to up their game. “By asking the right questions, attackers can receive a full attack flow without needing to be an expert in multiple hacking methods,” said Yotam Segev, chief executive of data protection firm Cyera Ltd. “This lowers the barrier to entry for launching sophisticated cyberattacks.”

But there is also reason to hope that AI can relieve the plight of beleaguered cybersecurity professionals who spend much of their time responding to false alerts and attempting to keep up with a never-ending stream of advisories and patches.

Cyera’s Segev: AI “lowers the barrier to entry for launching sophisticated cyberattacks.” Photo: LinkedIn

“Generative AI is a supercharger for cyber defenders, whether that’s analyzing malicious code, assisting in playbook creation for incident response, or providing the commands and queries necessary to assess a broad swath of digital infrastructure,” said Robert Huber, chief security officer and head of research at exposure management firm Tenable Network Security Inc.

The analytic capabilities of AI models can tackle one of the most labor-intensive security tasks, which is combing through data, said Tenable Chief Technology Officer Glen Pendley. “Security professionals are typically not data analysts and don’t have the expertise to do these sorts of things efficiently,” he said. “Generative AI gives them the power to better understand data.”

Dan Mayer, a threat researcher at Stairwell Inc., maker of a continuous intelligence, detection and response platform, notes that “the ability to extract millions of features and train a neural network using tagged examples of good and bad activity means that soon AI analysts may be able to replicate the more nuanced analysis human security professionals perform.”

At the same time, said Anudeep Parhar, chief operating officer at identity management firm Entrust Corp., “AI can identify vulnerabilities and apply patches automatically and consistently even across different cloud platforms and departments.” In addition, he said, “tools like ChatGPT have the potential to create more intuitive interfaces that will enable cybersecurity teams to engage with the applications using natural language commands.” That could help put a dent in the shortage of cybersecurity professionals, which is estimated at between 1 million and 3 million unfilled jobs globally.

Entrust’s Parhar sees AI as making it easier for cybersecurity professionals to engage with the applications they rely upon. Photo: Entrust

Although cybersecurity firms have been using deep learning and machine learning techniques for years, ChatGPT has elevated public awareness of the capabilities of intelligent machines to emulate human conversation and introduced a powerful new element with its ability to write and analyze code, experts said.

Stairwell’s Mayer used ChatGPT to identify an encryption algorithm in disassembled and decompiled code. “It actually did an all right job as just a regular large language model,” he said. “If someone trained a model specifically for this kind of work I think that it could be quite powerful for accelerating malware analysis and simplifying obfuscated code.”

That can aid developers in building more secure software. “Tools like ChatGPT and Microsoft’s Copilot [AI-powered coding assistant] can pop up and figure out what that developer is trying to do, sort of like autocomplete on steroids,” Parhar said. “If trained correctly, these tools can help ensure that applications are designed more securely.”

They can also improve software testing by generating more effective test scripts and identifying security vulnerabilities using a technique called “fuzzing,” which uses intentionally malformed, corrupted or otherwise invalid test data to root out hidden vulnerabilities, said Jaime Blasco, co-founder and chief technology officer at Nudge Security Inc., the developer of a platform for securing software-as-a-service applications.
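To make the fuzzing idea concrete, here is a minimal mutation-based fuzzer sketched in Python. It involves no AI; it only illustrates the mechanism that AI tools would help generate and steer. The target `parse_record`, its one-byte-length record format and the `mutate` and `fuzz` helpers are all hypothetical, invented for illustration. The fuzzer corrupts a valid seed input one byte at a time and records any exception other than a clean `ValueError` rejection as a crash.

```python
import random

def parse_record(data: bytes) -> bytes:
    """Hypothetical parser. Format: one length byte, that many payload bytes,
    then one checksum byte equal to sum(payload) % 256."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:1 + length]
    # Deliberate bug: no bounds check, so truncated input raises IndexError
    checksum = data[1 + length]
    if checksum != sum(payload) % 256:
        raise ValueError("bad checksum")  # clean rejection of malformed input
    return payload

def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Return a corrupted copy of seed: overwrite, insert or delete one byte."""
    data = bytearray(seed)
    op = rng.randrange(3)
    if op == 0 and data:
        data[rng.randrange(len(data))] = rng.randrange(256)
    elif op == 1:
        data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
    elif data:
        del data[rng.randrange(len(data))]
    return bytes(data)

def fuzz(target, seed: bytes, iterations: int = 1000, rng_seed: int = 0):
    """Feed mutated inputs to target and collect those that raise anything
    other than ValueError (the parser's intended rejection path)."""
    rng = random.Random(rng_seed)  # fixed seed makes runs reproducible
    crashes = []
    for _ in range(iterations):
        case = mutate(seed, rng)
        try:
            target(case)
        except ValueError:
            pass  # malformed input rejected cleanly: expected behavior
        except Exception as exc:  # anything else is a bug worth reporting
            crashes.append((case, type(exc).__name__))
    return crashes

SEED = bytes([3, 1, 2, 3, 6])  # valid record: payload 1, 2, 3 with checksum 6

if __name__ == "__main__":
    found = fuzz(parse_record, SEED)
    print(f"{len(found)} crashing inputs, e.g. {found[0] if found else None}")
```

Running the fuzzer against this parser should surface `IndexError` crashes from truncated inputs and inflated length bytes, exposing the missing bounds check. Production fuzzers such as AFL++ or libFuzzer add coverage feedback to guide their mutations; this sketch mutates blindly.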

Ultimately, the ability of machine learning models to sort through large volumes of information and spot patterns will likely have the greatest impact. “People in security operations centers are getting thousands and thousands of notifications,” said John Hernandez, president of Quest Software Inc.’s Microsoft product management business. “AI allows them to better identify the needles in the haystack.”

Stairwell’s Mayer: AI “soon may be able to replicate the more nuanced analysis human security professionals perform.” Photo: Stairwell

“The problem with our security defenders is that they are using 10 fingers to plug 12 holes,” said Sridhar Muppidi, chief technology officer at IBM Corp.’s security division. “We can use AI to focus on the high-priority threats so we can find the 10 holes that matter.”

AO Kaspersky Lab uses machine learning to process telemetry data for managed detection and response “to filter out mundane events and send more interesting ones to professional human analysts for further analysis,” said Vladislav Tushkanov, lead data scientist at the security software firm. “Machine learning shines where you have to deal with millions of events, and it is just impossible for humans to process the amount of information,” he said.

Artificial intelligence has already proved to be highly effective in fraud detection and prevention where machine learning’s pattern recognition capabilities can identify suspicious activities, detect fraudulent patterns and help mitigate financial risks.

“AI has been proven to catch sequences or transactions of behavior — such as buying a high volume of items that cost 99 cents each — more effectively than a human can,” said Waleed Kadous, head of engineering at Anyscale Inc., developer of a framework for scaling machine learning and Python workloads.
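The 99-cent example describes a pattern that only shows up across many transactions. The Python sketch below catches that kind of sequence with a hand-written sliding-window rule rather than a trained model, so it shows what is being detected, not how Anyscale or any other vendor detects it. The function name, the 60-minute window and the threshold of 20 purchases are hypothetical choices for illustration. For each account it keeps the timestamps of recent low-value purchases and flags the account once too many fall inside the window.

```python
from collections import deque
from datetime import datetime, timedelta

def flag_microtransaction_bursts(transactions, max_amount=0.99,
                                 window=timedelta(minutes=60), threshold=20):
    """Return account IDs with `threshold` or more purchases of at most
    `max_amount` inside any `window`-long span.

    transactions: iterable of (account_id, timestamp, amount), sorted by timestamp.
    """
    recent = {}      # account_id -> deque of timestamps of small purchases
    flagged = set()
    for account, ts, amount in transactions:
        if amount > max_amount:
            continue  # only low-value purchases count toward a burst
        q = recent.setdefault(account, deque())
        q.append(ts)
        # Drop purchases that have slid out of the window (ts itself always stays)
        while ts - q[0] > window:
            q.popleft()
        if len(q) >= threshold:
            flagged.add(account)
    return flagged

# Hypothetical data: acct-A buys 25 items at 99 cents, one per minute;
# acct-B makes a few scattered purchases.
start = datetime(2023, 6, 1, 12, 0)
txns = [("acct-A", start + timedelta(minutes=i), 0.99) for i in range(25)]
txns += [("acct-B", start + timedelta(hours=h), 0.99) for h in range(3)]
txns.append(("acct-B", start + timedelta(minutes=30), 45.00))
txns.sort(key=lambda t: t[1])
print(flag_microtransaction_bursts(txns))  # {'acct-A'}
```

A fixed rule like this is easy for an attacker to learn and stay under, for example by spacing purchases five minutes apart. Learned models aim to pick up such patterns without anyone hand-tuning the window and threshold. Real payment systems would also store amounts as `Decimal` rather than floats.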

SIDEBAR: How AI and large language models can help cybersecurity firms improve their services

Those same capabilities can apply to mapping an organization’s existing computing footprint for use in patching and updates. “Internet of things security is about getting visibility into endpoints, and that’s very hard to do with databases of [media access control] addresses, so we use machine learning to detect 95% of devices within a day,” said Anand Oswal, senior vice president of security products at Palo Alto Networks.

The technology can also be used to automate and improve error-prone processes such as configuring equipment, Oswal said. “Ninety-nine percent of firewall breaches are due to misconfiguration,” he said. “We can take data from 80,000 customers and give you a score for the quality of your firewall configuration.”

Quest’s Hernandez: AI will enable overwhelmed security pros to “better identify the needles in the haystack.” Photo: Quest Software

Experts say that LLMs such as GPT-4 won’t give cybercriminals a big upper hand right away, but their inclusion in attackers’ toolbelts will increase efficiency and output, especially for those with less technical knowledge. Researchers have already seen ChatGPT used to create new malware and even improve the attack flows of older malware.

“ChatGPT is a force multiplier more than it is some sort of knowledge substitute,” said Mayer. “At this time you cannot merely ask ChatGPT to ‘make me malware.’ You need to understand the component parts of malware, what sort of objectives you wish to accomplish and the like.”

Earlier this year, Check Point Software Technologies Ltd. researchers discovered cybercriminals bypassing ChatGPT’s safeguards to improve the code of a basic “Infostealer” malware strain from 2019. Although the code isn’t complicated, it’s an example of how generative AI can be used to strengthen malware’s destructive potential.

IBM’s Muppidi: “We can use AI to focus on the high-priority threats.” Photo: IBM

OpenAI includes guardrails in its recently released GPT-4 to prevent threat actors from creating malware, but Check Point researchers quickly discovered that they were easily circumvented. In another test, they used Codex, which is OpenAI’s model for creating code from natural language prompts, to write malicious software.

Generative AI makes building simple malware easier by doing the heavy lifting for less technical users, but bespoke malware is still superior, at least for now. Machine-generated code “is not at the level of maturity to cause large-scale devastation,” said IBM’s Muppidi.

“In the immediate term, bad actors that don’t have any development knowledge are trying to use AI to build basic malicious tools,” said Sergey Shykevich, threat intelligence group manager at Check Point. “Also, it allows more sophisticated cybercriminals to make their daily operations more efficient and cost-effective.”

The real power behind ChatGPT and other generative AI models is their ability to pretend to be another person quite convincingly. That makes them a perfect vehicle for malicious spam and social engineering attacks, especially phishing campaigns, in which an attacker casts a broad net or sends targeted emails or messages to trick users into giving up sensitive information or downloading malware.

Palo Alto Networks uses machine learning to detect 95% of the devices on a network within a day, says Oswal. Photo: SiliconANGLE

“Many of the spam emails and other phishing attacks we see today are created by people for whom English is not a first language,” said Parhar. “These often contain spelling and grammar mistakes as well as clumsy language. AI algorithms can be used to generate more convincing and engaging fake messages, emails and social media posts.”

Phishing is one of the most common types of cybercrime, with an estimated 3.4 billion phishing emails sent every day, according to AAG IT Services Ltd. IBM’s 2022 Cost of a Data Breach report noted that phishing was the second most common cause of breaches and the most costly, at $4.91 million per incident.

Although OpenAI guardrails prohibit ChatGPT from explicitly writing a phishing message, “if you give it a more generic prompt to write an email including a link to a survey or asking recipients to verify personal details, it will happily create an email for you that could be used in any phishing campaign,” said Robert Blumofe, executive vice president and chief technology officer at Akamai Technologies Inc.

One area where AI models are expected to be particularly effective is in creating spear-phishing emails, which are personalized attacks targeted at individuals. Current generative AI models can engage in conversations with humanlike personalities that a threat actor could use to pass messages back and forth or even automate attacks. Employees are more likely to give up sensitive information if they believe they’re engaging with another person.

Phishing campaigns could take an even more sinister turn with AI models that can create deepfakes of images, videos and even human voices, said Ev Kontsevoy, founder and chief executive of the secure infrastructure access company Gravitational Inc., which does business as Teleport. For example, Microsoft Corp.’s VALL-E, which is the most recent iteration of this technology, can produce a convincing duplicate of a person’s speaking voice from just a few seconds of sampled audio.

Kaspersky’s Tushkanov: “Machine learning shines where you have to deal with millions of events.” Photo: Kaspersky Labs

The use of deepfakes by threat actors has been limited so far because deception can be automated more easily through text, but there have been a few prominent examples. In 2021, fraudsters stole $35 million from a United Arab Emirates company by using deepfake audio to convince an employee to release funds to a director for the purpose of acquiring another organization.

A more common tactic is to scam people out of money with faked calls from loved ones. In one case in Arizona, a scammer tried to ransom a woman’s daughter for $1 million using an AI-mimicked voice. A reporter also broke into his own bank account using a voice-cloning AI, demonstrating that even automated protection systems could be at risk.

These were one-off incidents orchestrated by individuals, but the increasing cost-effectiveness of voice synthesis combined with generative AI can plausibly be expected to create bots that could call people and hold lifelike conversations at large scale.

Akamai’s Blumofe: Generative AI can be made to “happily create an email for you that could be used in any phishing campaign.” Photo: Akamai

“[The bots] can call you and engage in a voice conversation with you pretending to be your boss or your co-worker, and they will have the full context of what is happening in the world and what is happening with your company,” said Kontsevoy.

In the past, it has been costly to pull off these sorts of attacks because of the amount of research needed to understand targets and the human effort involved in mimicking personas. With AI taking on much of the research, automating the conversation and building an army of bots, “it will be extremely cost-efficient to trick people into believing that they are talking to a human being,” Kontsevoy said.

Threat intelligence firm Cybersixgill Ltd. reported that threat actors were already using generative AI to write malicious code for ransomware attacks last year. Those early efforts are only a glimpse of what’s to come.

Regardless of whether you believe generative AI is a problem or a solution for the cybersecurity field, one thing is for sure: It will be with us for the long term. Most experts who were interviewed said the technology is unlikely to favor either the white hats or the black hats in the short term, but defenders can gain an upper hand by putting it to work as quickly as possible.

“It becomes a cat-and-mouse game that moves much faster than it does now,” said Gartner’s Litan. “Whoever has the most effective generative AI cybersecurity offense or defensive capability wins in the short run. Over time the sides equalize.”

“It’s going to aid both sides and that will create new challenges,” said Allie Mellen, a senior analyst at Forrester Research Inc.

Leading experts in the AI field, including executives from the largest companies building commercial tools, have recently issued calls for government licensing and regulation of the technology. Although legislation may help head off some of the unintended negative consequences of AI as it’s put into operation, it won’t make life any easier for cybersecurity professionals. By their nature, cybercriminals don’t play by the rules.

With reporting by David Strom