POLICY

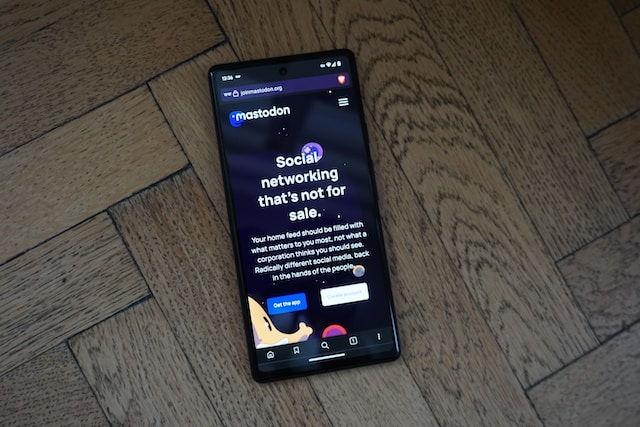

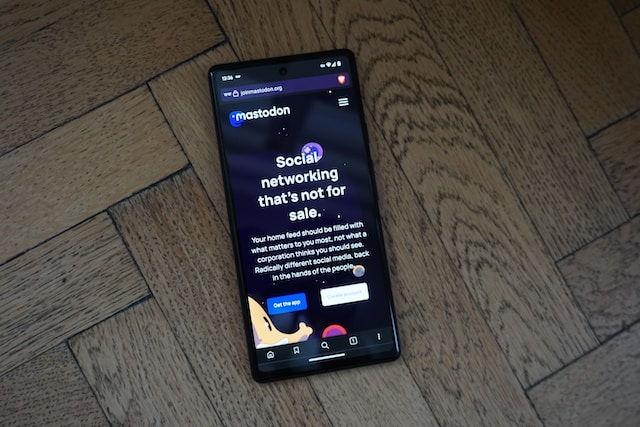

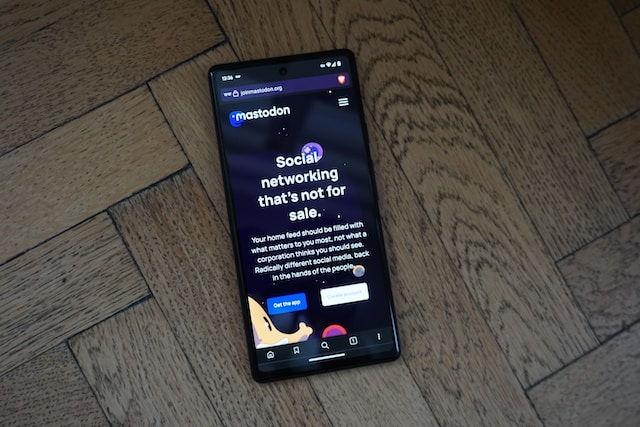

Researchers at Stanford’s Internet Observatory released a paper today with worrying findings about the social media platform Mastodon, which they say is rife with child sexual abuse material (CSAM).

The researchers said in the study that CSAM has become a bigger problem on decentralized social networks than on centralized networks such as Twitter, Instagram or YouTube. They found that moderation tools on decentralized networks were limited, adding that there is no “built-in mechanism to report CSAM to the relevant child safety organizations.”

In general, they said, the fediverse (decentralized, autonomous networks that run on thousands of servers around the world) lacks safety infrastructure. Although social media that isn’t run from the top down has its appeal, decentralization brings a certain amount of chaos, including the proliferation of such images.

The researchers found 112 instances of CSAM across 325,000 posts on Mastodon in just two days. They said they found the first image within five minutes of searching, and that the images were easy to find. They also discovered that 554 pieces of CSAM content matched hashtags or keywords that can be used by people selling such images. Some of these involved children charging a few dollars for photos or videos.

This problem is not unique to decentralized platforms, of course, but the researchers said that having fewer moderation tools than centralized platforms has created a cesspit of pedophilic activity. It is particularly bad in Japan, where CSAM is sold in both Japanese and English. Across Mastodon as a whole, they said, CSAM is currently “disturbingly prevalent.”

“Federated and decentralized social media may help foster a more democratic environment where people’s online social interactions are not subject to an individual company’s market pressures or the whims of individual billionaires,” the researchers concluded. “For this environment to prosper, however, it will need to solve safety issues at scale, with more efficient tooling than simply reporting, manual moderation and defederation. The majority of current trust and safety practices were developed in an environment where a small number of companies shared context and technology, and this technology was designed to be efficient and effective in a largely centralized context.”

They believe some decentralized networks in the fediverse could address the problem by adopting some of the same components used by centralized social networks. As the fediverse grows, they said, it will need investment to deal with the issue.