AI

Graphics chip maker and artificial intelligence provider Nvidia Corp. today announced a slew of new generative AI products designed to accelerate the development of large language models and other advanced AI applications.

Today at SIGGRAPH 2023, Nvidia announced a new partnership with Hugging Face Inc., a company that develops tools for machine learning and AI, that will allow developers to deploy and use their generative AI models on the Nvidia DGX Cloud supercomputing infrastructure to scale their workloads. The company also introduced Nvidia AI Workbench, which lets developers package up their work and use it anywhere, whether on a PC, a workstation or in the cloud, and announced the release of Nvidia AI Enterprise 4.0.

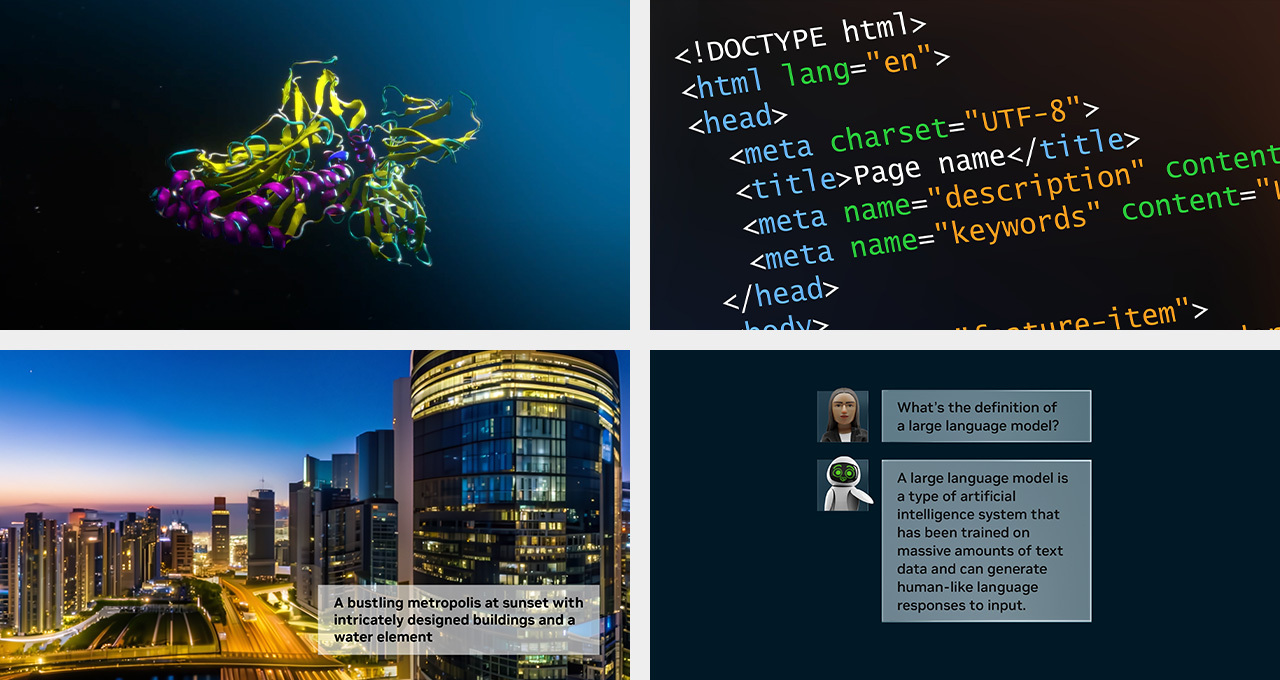

The combination of Hugging Face and DGX Cloud will enable developers to quickly train large language models that are custom-tailored using their own business data for industry-specific knowledge and applications, such as intelligent chatbots, search and summarization. Training and fine-tuning of those LLMs run on DGX Cloud’s supercomputing infrastructure.

“Researchers and developers are at the heart of generative AI that is transforming every industry,” said Jensen Huang, founder and chief executive of Nvidia. “Hugging Face and Nvidia are connecting the world’s largest AI community with Nvidia’s AI computing platform in the world’s leading clouds.”

The Hugging Face platform supports over 15,000 organizations and its community has shared over 250,000 AI models and over 50,000 datasets that its users have built, fine-tuned and deployed.

As part of the collaboration, Hugging Face will offer a new service called Training Cluster as a Service. It is intended to let enterprise customers create and tailor custom generative AI models with a single click, using the Hugging Face platform and Nvidia DGX Cloud for infrastructure.

Nvidia DGX Cloud is powered by instances that feature eight of its H100 or A100 80-gigabyte Tensor Core GPUs, for a total of 640 gigabytes of GPU memory per node. That gives each node the capacity needed to train and fine-tune massive AI workloads.
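The per-node memory figure follows directly from the GPU count and the per-GPU capacity quoted above. A minimal sketch of that arithmetic, where the constant and function names are illustrative and not part of any Nvidia API:

```python
# Figures quoted in the article for a single DGX Cloud instance (node).
GPUS_PER_NODE = 8        # eight H100 or A100 GPUs per instance
MEMORY_PER_GPU_GB = 80   # 80 gigabytes of memory per GPU


def node_gpu_memory_gb(gpus: int = GPUS_PER_NODE,
                       per_gpu_gb: int = MEMORY_PER_GPU_GB) -> int:
    """Return the total GPU memory of one node, in gigabytes."""
    return gpus * per_gpu_gb


if __name__ == "__main__":
    print(f"GPU memory per node: {node_gpu_memory_gb()} GB")
```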

Nvidia AI Workbench is a unified workspace for developers that’s designed to allow them to create, test and customize their own pretrained generative AI models quickly wherever they need to work on them. That can be a personal computer, a workstation, or a virtual machine in a data center, a public cloud or Nvidia DGX Cloud.

Using AI Workbench, developers can load a model from any popular repository, such as Hugging Face, GitHub and Nvidia NGC, and customize it using their own data.

“Enterprises around the world are racing to find the right infrastructure and build generative AI models and applications,” said Manuvir Das, Nvidia’s vice president of enterprise computing. “Nvidia AI Workbench provides a simplified path for cross-organizational teams to create the AI-based applications that are increasingly becoming essential in modern business.”

The same interface allows developers to package up a project and move it between instances. A developer working on a project on a PC who needs to move it into the cloud can use Workbench to package it up, move it, unpack it there and continue development.

AI Workbench also comes with a full complement of generative AI tools for developers, including enterprise-grade models, software development kits and libraries from open-source repositories and the Nvidia AI platform, all within a unified developer experience.

Nvidia said that a number of AI infrastructure providers are already embracing AI Workbench, including Dell Technologies Inc., Hewlett Packard Enterprise Co., Lambda Inc., Lenovo Ltd. and Supermicro.

With Nvidia AI Enterprise 4.0, businesses can access the tools needed to adopt generative AI, along with the security and application programming interfaces needed to connect those tools to applications at scale in production.

The newly released version of AI Enterprise includes Nvidia NeMo, a cloud-native framework for building, training and deploying LLMs in a fully managed enterprise system for creating generative AI applications. Enterprise customers looking to scale and optimize AI deployment can automate it using Triton Management Service, which deploys multiple inference servers in Kubernetes with model orchestration at large scale.

Cluster management is available through the Base Command Manager Essentials software to maximize performance for AI servers across different data centers. It can also manage AI model usage across different cloud systems, including multicloud and hybrid cloud.

Customers can find Nvidia AI Enterprise 4.0 in partner marketplaces, including Google Cloud, upon release.
