INFRA

Intel Corp. has revealed a host of new details about the architecture of next year’s Xeon central processing units, geared for data center servers and workstation applications.

Intel has yet to launch even the fifth generation of its Xeon CPUs, but today's presentation at the Hot Chips conference at Stanford University offered a useful look at what the sixth-generation chips will bring to the table. Intel hopes they will meet customers' requirements across a wide range of applications.

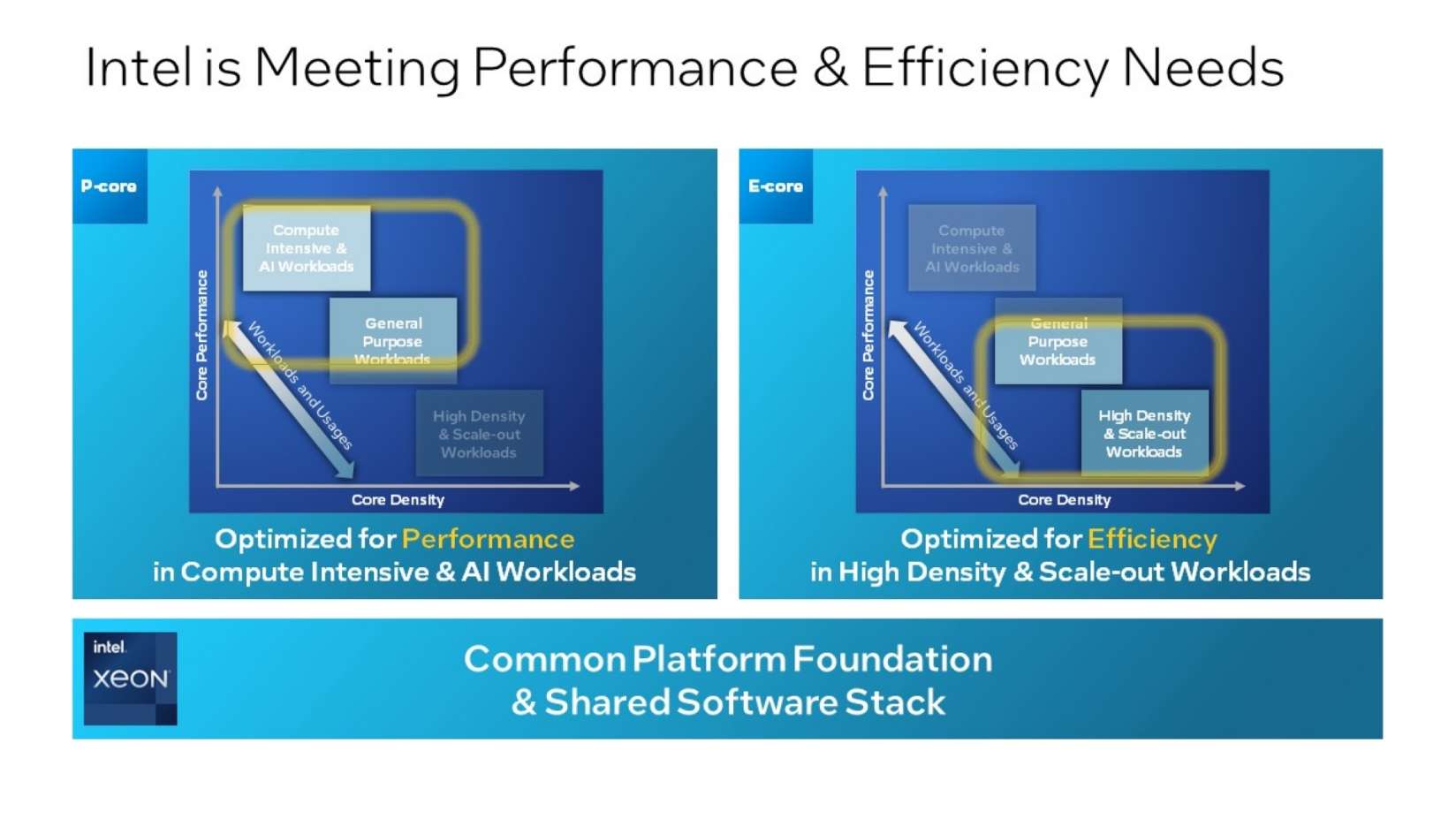

This time around, Intel's Xeon chips will come in two distinct flavors: one aimed at brute-force performance and a second tailored to maximize efficiency and density, Intel said. Customers will be able to choose between the long-established Xeon Performance-core (P-core) architecture and the newly designed Xeon Efficient-core (E-core) CPUs.

Intel explained that two variants are necessary, because customers increasingly have different requirements regarding their CPUs. It said the heaviest high-performance computing workloads call for strong individual cores that pack as much punch as possible, while other workloads are less intensive and can instead benefit from a more efficient architecture.

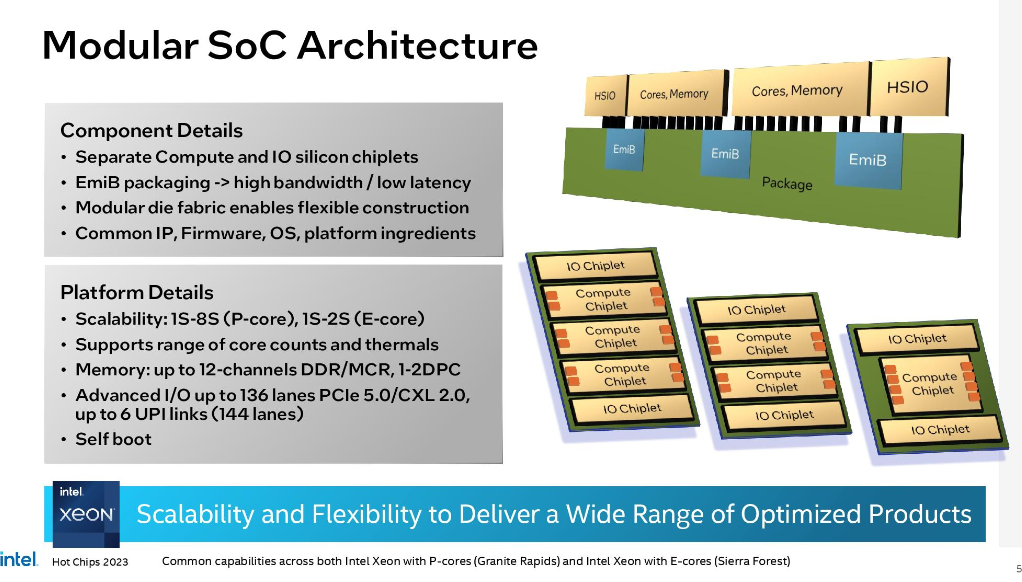

The latest Xeon processors will be built from multiple chiplets, making them essentially modular. For example, the input/output silicon will be completely separate from the compute cores.

According to Intel, both types of Xeon CPU will be made on the Intel 3 manufacturing process, a refinement of the node the company originally called seven nanometers. The E-core chips, codenamed Sierra Forest, will launch in the first half of 2024, while the P-core chips, codenamed Granite Rapids, will become available "shortly thereafter," the company said. As has become common with Intel, that puts them behind the company's previously stated roadmap, which originally called for a launch in the third quarter of 2023.

Intel seems to think, however, that the chips will be worth the wait. It promises a big improvement in performance per watt, especially for the Sierra Forest chips. Both types will run the same firmware and support the same operating systems, so the choice between them will come down to the specific application users have in mind, Intel said.

Customers seeking high performance will want Granite Rapids, while those who value efficiency and the highest possible core count should look at Sierra Forest. Either way, the chipmaker promises up to 2.5 times the number of cores per server rack of its fourth-generation Xeon CPUs. There has been talk of up to 144 cores per CPU, but Intel has yet to specify an exact number.

Constellation Research Inc. analyst Holger Mueller said Intel's latest chips look extremely promising. They need to, since Intel is long overdue for a product that can compete with Advanced Micro Devices Inc.'s rival silicon. "The task for Intel now is to deliver on its product and ensure both its quality and its reliability," Mueller said. "It is a dual-pronged attack, with big improvements both in lower energy and performance."

Of course, the question on everyone's lips is where these new chips fit into the AI hype. Intel has praised the AI performance of its fourth-generation Xeon CPUs in the past and expects even better things from the coming sixth generation. According to Intel, the sixth-generation Xeons can process the benchmark AI model BERT up to 5.6 times faster than AMD's latest EPYC server chips, and Intel separately claims they will be 4.7 times more efficient.
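A speedup does not by itself determine an efficiency gain, because efficiency also depends on how much power each system draws. The short Python sketch that follows relates Intel's two figures, assuming (our assumption, not a statement from Intel) that "efficiency" means performance per watt and that both numbers describe the same BERT comparison against the same EPYC system.

```python
# Hypothetical consistency check on Intel's two BERT claims.
# Assumes "efficiency" = performance per watt and that both figures
# come from the same Intel-vs-AMD test setup.


def implied_power_ratio(speedup: float, perf_per_watt_gain: float) -> float:
    """Return P_intel / P_amd implied by a speedup and a perf-per-watt gain.

    perf/watt = throughput / power, so
    gain = (T_i / P_i) / (T_a / P_a) = speedup * (P_a / P_i),
    which rearranges to P_i / P_a = speedup / gain.
    """
    if perf_per_watt_gain <= 0:
        raise ValueError("perf_per_watt_gain must be positive")
    return speedup / perf_per_watt_gain


ratio = implied_power_ratio(speedup=5.6, perf_per_watt_gain=4.7)
print(f"Implied power draw: {ratio:.2f}x the AMD system")
```

Under those assumptions, the Intel system would draw roughly 1.19 times as much power as the AMD system. If the two claims came from different test configurations, the ratio says nothing; it is only a sanity check.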

The main improvement in the 2024 Xeon CPUs is greater memory bandwidth, which is key for AI workloads. Intel explained that customers can choose between standard Double Data Rate 5 (DDR5) memory and Multiplexer Combined Ranks (MCR) memory modules, with maximum speeds of 6,400 megatransfers per second and 8,000 MT/s, respectively.

They will support a maximum of 12 memory channels, for a theoretical peak bandwidth of 614.4 to 768 gigabytes per second, two to 2.5 times the 307.2 GB/s of fourth-generation Xeons with eight channels of DDR5-4800. In addition, they will support up to 136 PCIe 5.0 lanes for connecting graphics processing units, NVMe storage and other devices.
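The bandwidth figures follow from simple arithmetic: channels × transfer rate × 8 bytes per transfer on a 64-bit DDR5 channel. The Python sketch that follows reproduces them. The fourth-generation baseline (eight channels of DDR5-4800) and the PCIe 5.0 parameters (32 GT/s per lane, 128b/130b encoding, protocol overhead ignored) are our inputs for illustration, not numbers from Intel's presentation.

```python
# Back-of-envelope peak bandwidth figures. These are theoretical maxima;
# real-world throughput is lower.

BYTES_PER_TRANSFER = 8  # one DDR5 channel carries 64 data bits (ECC excluded)


def peak_memory_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Peak DRAM bandwidth in GB/s (decimal: 1 GB = 10**9 bytes)."""
    bytes_per_s = channels * mega_transfers_per_s * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_s / 1e9


def pcie5_gbs_per_direction(lanes: int) -> float:
    """Raw PCIe 5.0 bandwidth per direction in GB/s.

    Assumes 32 GT/s per lane and 128b/130b line encoding; packet and
    protocol overhead are ignored, so usable throughput is lower.
    """
    bits_per_s = lanes * 32e9 * 128 / 130
    return bits_per_s / 8 / 1e9


memory_configs = {
    "12 channels DDR5-6400": (12, 6400),
    "12 channels MCR-8000": (12, 8000),
    # Assumed baseline: fourth-generation Xeon with 8 channels of DDR5-4800.
    "8 channels DDR5-4800": (8, 4800),
}

for name, (channels, mts) in memory_configs.items():
    print(f"{name}: {peak_memory_gbs(channels, mts):.1f} GB/s")

print(f"136 PCIe 5.0 lanes: {pcie5_gbs_per_direction(136):.1f} GB/s per direction")
```

The three memory lines come out to 614.4, 768.0 and 307.2 GB/s, so the generational gain is 2x with DDR5-6400 and 2.5x with 8,000 MT/s MCR modules. The PCIe figure works out to roughly 535.6 GB/s per direction before protocol overhead.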

The newest Xeon chips should help strengthen Intel's role in AI. Server systems need both GPUs and CPUs to run AI workloads effectively. While the most demanding generative AI applications are best suited to GPU systems, some tasks don't parallelize well and are therefore a poor match for GPUs. For those kinds of AI workloads, CPUs can be more effective.
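Why poorly parallelizing work favors fast individual cores can be illustrated with Amdahl's law, a standard textbook model that we use here for illustration; Intel did not cite it. The parallel fractions and worker counts in the sketch are hypothetical. The law treats every worker as equally fast, so it captures only part of the picture: the serial portion also runs faster on one strong CPU core than on a single, slower GPU lane.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: overall speedup when only part of a task runs in parallel.

    S = 1 / ((1 - p) + p / n), where p is the parallelizable fraction
    of the work and n is the number of equally fast parallel workers.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


# Hypothetical comparison: a very wide accelerator vs. a many-core CPU.
for p in (0.99, 0.5):
    wide = amdahl_speedup(p, 10_000)
    narrow = amdahl_speedup(p, 100)
    print(f"p={p}: 10,000 workers -> {wide:.1f}x, 100 workers -> {narrow:.1f}x")
```

With 99% of the work parallel, going from 100 to 10,000 workers lifts the speedup from about 50x to about 99x. With only half the work parallel, neither configuration gets past 2x, and the speed of a single core dominates the result.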

Mueller said enterprises and cloud providers will likely be sold on the promised cost efficiency of Intel’s newest CPUs. “This is what they need to lower the cost of running AI workloads,” he explained. “There are a lot of folks who are hopeful the Sierra Forest and Granite Rapids chips will be a success.”

Beyond AI, Intel's newest CPUs should also handle many other compute workloads more effectively, such as running virtual machines and managing large amounts of storage.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.