Artificial intelligence networking infrastructure startup Enfabrica Corp. said today it has raised a bumper $125 million in new funding to build out its highly efficient server fabric chip technology.

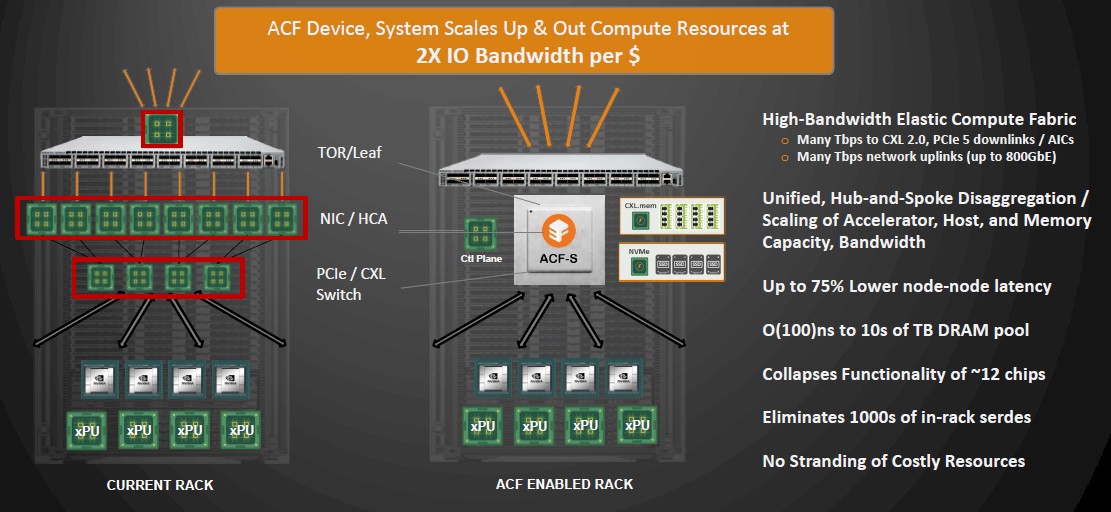

The startup reckons it can solve critical network, input/output and memory scaling problems that are becoming more acute as AI models grow more powerful and demand ever more data. Enfabrica said it will use the money to expand its research and development and accelerate production of its new ACF-S device, which is designed to scale distributed AI and accelerate compute clusters in data centers.

Today’s Series B round was led by Atreides Management and saw participation from existing investor Sutter Hill Ventures, plus new backers including IAG Capital Partners, Liberty Global Ventures, Nvidia Corp., Valor Equity Partners and Alumni Ventures.

Enfabrica was founded in 2020 by Chief Executive Officer Rochan Sankar, Chief Development Officer Shrijeet Mukherjee and other founding engineers. The company has spent most of the time since then developing its technology away from prying eyes.

The company finally emerged from stealth mode earlier this year, at a time when excitement around the capabilities of AI had reached fever pitch. With the rise of ChatGPT fueling massive demand for new generative AI applications and capabilities, Enfabrica chose an opportune moment to announce what it says is a revolutionary new class of interconnect chips for AI networking infrastructure.

The company is the creator of an Accelerated Compute Fabric device, which is a server fabric chip that it says will provide unmatched scalability, performance and total cost of ownership for distributed AI, extended reality, high-performance computing and in-memory database infrastructure.

Enfabrica believes there will be high demand for its technology. It says the rising adoption of generative AI, large language models and deep learning-based recommendation systems is driving a huge infrastructure push in cloud computing.

It’s widely expected that the aggregate AI training and user serving capacity of the cloud will grow exponentially, putting enormous pressure on enterprises to sustain workload growth on the fewest possible graphics processing units and other processors. This, Enfabrica says, is exactly where its high-performance converged memory and network fabric chips can come in handy.

Specifically, the startup is addressing GPU networking pain points and memory and storage scaling, all of which are becoming pressing challenges as AI workloads grow more commonplace and powerful. Enfabrica says its ACF-S chips are much more efficient, combining advanced features in a terabit Ethernet network fabric that directly bridges and interconnects GPUs, central processing units and memory resources at scale over native 800-gigabit Ethernet. The company says this eliminates the need for specialized network interconnects and traditional top-of-rack communication hardware, with the chip acting as a universal data mover that overcomes the I/O limitations of existing data centers.

Sankar said the startup is tackling the most fundamental challenge of today’s AI boom, namely scaling up the infrastructure that supports it. “There is a critical need to bridge the exploding demand to the overall cost, efficiency and ease of scaling AI compute, across all customers seeking to take control of their AI infrastructure and services,” the CEO said. “Much of the scaling problem lies in the I/O subsystems, memory movement and networking attached to GPU compute, where Enfabrica’s ACF solution shines.”

Enfabrica is planning to showcase its 8-terabit-per-second ACF switching system at the AI Hardware and Edge Summit 2023, which kicks off today in Santa Clara, California. It said the chips are available now for pre-order, with delivery to come soon.

The ACF chips enable direct attachment of any combination of GPUs, CPUs, CXL-attached DDR5 memory and solid-state drive storage via multiport 800-gigabit Ethernet networking. They run atop the standard Linux kernel and userspace interfaces, and will enable companies to cut their AI compute costs by up to 50% for LLM inference and 75% for deep learning recommendation system inference, the company said.

All told, the company says customers can expect twice the performance per dollar, 10 times higher throughput than standard network interface cards and four times higher performance per watt, with fewer chips and in-rack connections required. Enfabrica also claims the system can scale up to thousands of server nodes.
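The cost and efficiency figures are linked by simple arithmetic: if a workload's cost falls by a fraction r while the same amount of work is delivered, performance per dollar rises by a factor of 1 / (1 - r). On that assumption, the "up to 50%" saving for LLM inference lines up with the quoted two-times performance per dollar, and by the same arithmetic a 75% saving would correspond to four times. The short Python sketch below illustrates only that conversion; the function names are hypothetical and are not part of any Enfabrica software.

```python
def perf_per_dollar_multiplier(cost_reduction: float) -> float:
    """Performance-per-dollar multiplier implied by a fractional cost cut.

    Assumes (hypothetically) that the same amount of work is delivered
    before and after the cut, so only the cost denominator changes.
    """
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in [0, 1)")
    return 1.0 / (1.0 - cost_reduction)


def cost_reduction_from_multiplier(multiplier: float) -> float:
    """Inverse conversion: fractional cost cut implied by a multiplier >= 1."""
    if multiplier < 1.0:
        raise ValueError("multiplier must be >= 1")
    return 1.0 - 1.0 / multiplier


# The two cost-reduction figures quoted by the company.
for label, cut in [("LLM inference", 0.50), ("DLRM inference", 0.75)]:
    print(f"{label}: {cut:.0%} lower cost -> "
          f"{perf_per_dollar_multiplier(cut):.1f}x performance per dollar")
```

Note that this says nothing about whether the vendor's figures hold in practice; it only shows that a halved cost and a doubled performance per dollar are two ways of stating the same claim.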

Enfabrica could provide an interesting alternative in the area of AI networking, which is currently dominated by Nvidia with its Mellanox solutions, said Constellation Research Inc. Vice President and Principal Analyst Andy Thurai. He explained that one notable differentiator of Enfabrica is its ability to move data between GPUs and CPUs at high speed.

“This could allow more companies to explore using CPUs instead of GPUs for AI, as the latter are in scarce supply now,” Thurai said. “Enfabrica’s unique advantage is that it uses existing interfaces, protocols and software stacks, so there’s no need to rewire the infrastructure.”

What’s promising for Enfabrica is that the AI push, particularly generative AI, remains strong, meaning there’s going to be big demand for compute and network capabilities for some time to come, the analyst added. “Current hyperscalers are built for normal enterprise workloads rather than AI or LLM workloads, and this puts a lot of pressure on their infrastructure,” he said. “Enfabrica could potentially allow companies to create more distributed AI workloads, which is the exact opposite of the HPC concept in trying to aggregate things together.”

Thurai also expressed surprise that Nvidia is backing Enfabrica, given its potential as a rival, and speculated that the GPU giant may one day look to acquire the startup.

For now, though, Enfabrica said it remains fully focused on building out and growing its AI networking solutions.

“Our Series B funding and investors are an endorsement of our team and product thesis and enable us to produce high-performance ACF silicon and software that drives the efficient utilization and scaling of AI compute resources,” Sankar said. “As an organization, we are hyper-focused to work with the larger ecosystem of partners and customers to solve the AI infrastructure scaling problem.”

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.