AI

SambaNova Systems Inc. today debuted a 1,040-core processor, the SN40L, that’s designed to power large artificial intelligence models with trillions of parameters.

Palo Alto, California-based SambaNova is backed by more than $1 billion in venture funding. It develops AI chips that lend themselves to both model training and inference. Customers can access the chips via a cloud service or deploy them on-premises as part of appliances supplied by SambaNova.

According to the company, its new SN40L processor is optimized to run AI models with a large number of parameters. Those are the configuration settings that determine how a neural network turns input data into decisions. The most advanced AI models have hundreds of billions of parameters.

A server rack that includes eight SN40L chips can power a model with five trillion parameters, SambaNova claims. The company says that running such a model would otherwise require 192 “cutting-edge” graphics processing units. Because the fastest GPUs on the market reportedly sell for upwards of $30,000, decreasing the number of chips necessary for an AI project can significantly reduce costs.
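To put that comparison in rough numbers, the sketch below multiplies the 192-GPU figure by the reported $30,000 price. The price is treated as a floor, the names are illustrative, and no SN40L pricing is assumed because none is given here.

```python
# Back-of-the-envelope GPU hardware cost for the comparison above.
# Both inputs come from the article; the per-GPU price is a reported lower bound.
GPU_COUNT = 192
GPU_PRICE_USD = 30_000  # "upwards of $30,000", so treat this as a floor


def gpu_hardware_cost(gpu_count: int, price_per_gpu: int) -> int:
    """Return the purchase price of the GPUs alone, in US dollars."""
    return gpu_count * price_per_gpu


total = gpu_hardware_cost(GPU_COUNT, GPU_PRICE_USD)
print(f"At least ${total:,} in GPUs")  # 192 * 30,000 = 5,760,000
```

The figure covers only the chips themselves and leaves out servers, networking, power and cooling.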

The SN40L is based on what SambaNova describes as a reconfigurable dataflow architecture. According to the company, the technology allows its processor to analyze an AI model and automatically map out its internal structure. Using this information, the SN40L configures itself in a manner that maximizes the model’s performance.

A neural network is made of numerous interconnected software modules called layers. Those layers regularly exchange data with one another. The faster data can move between them, the faster the neural network will run.

If two layers are placed on opposite corners of a processor, information will take a relatively long time to travel between them. In contrast, two layers that are adjacent to each other can communicate almost instantaneously. The SN40L can analyze an AI model, determine which layers need to exchange data and place them next to each other to speed up the flow of information.
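The effect of placement can be illustrated with a toy model. The sketch below is not SambaNova's mapping algorithm, which the company has not detailed here. It assumes a hypothetical 3-by-3 grid of compute tiles, four made-up layer names connected in a chain, and grid distance as a stand-in for communication delay.

```python
# Toy illustration: layers that exchange data are cheaper to connect when
# they sit on neighboring tiles. Grid size, layer names and the distance
# metric are all assumptions made for this example.
Tile = tuple[int, int]  # (row, column) of a compute tile


def manhattan(a: Tile, b: Tile) -> int:
    """Number of grid steps between two tiles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def total_distance(placement: dict[str, Tile], edges: list[tuple[str, str]]) -> int:
    """Sum of grid steps over every pair of layers that exchange data."""
    return sum(manhattan(placement[u], placement[v]) for u, v in edges)


def snake_placement(layers: list[str], rows: int, cols: int) -> dict[str, Tile]:
    """Lay out a chain of layers along a serpentine path across the grid,
    so each layer lands on a tile next to the one before it."""
    path: list[Tile] = []
    for r in range(rows):
        columns = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        path.extend((r, c) for c in columns)
    if len(layers) > len(path):
        raise ValueError("more layers than tiles")
    return dict(zip(layers, path))


layers = ["embed", "attention", "mlp", "output"]
edges = [("embed", "attention"), ("attention", "mlp"), ("mlp", "output")]

# Layers scattered to the corners of the grid.
scattered = {"embed": (0, 0), "attention": (2, 2), "mlp": (0, 2), "output": (2, 0)}
# Layers placed so that communicating pairs are neighbors.
adjacent = snake_placement(layers, rows=3, cols=3)

print(total_distance(scattered, edges))  # 10 grid steps
print(total_distance(adjacent, edges))   # 3 grid steps
```

In this toy case, moving the layers next to each other cuts the total distance data has to cover from 10 grid steps to 3.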

SambaNova’s previous flagship chip was made using a seven-nanometer manufacturing process. With the SN40L, the company has upgraded to a five-nanometer process. The enhanced manufacturing technology makes the chip faster than its predecessor.

Another contributor to the SN40L’s performance is its increased core count. The chip has 1,040 AI-optimized cores spread across two chiplets, or semiconductor modules. The cores are supported by three separate sets of memory circuits that can together hold more than 1.5 terabytes of data.

The first memory pool comprises 520 megabytes’ worth of SRAM that is embedded directly into the processor. AI models with a footprint of less than 520 megabytes can be stored entirely in that SRAM. This removes the need to use off-chip memory, which speeds up processing.

On-chip memory like the SN40L’s SRAM is faster than the off-chip variety because it’s closer to the compute cores. The shorter the distance between the memory and compute circuits, the less time data takes to travel between them. The result is increased performance.

According to SambaNova, the SRAM is paired with a 1.5-terabyte pool of DDR5 memory that is attached to the SN40L rather than embedded into it. The latter memory pool is useful for supporting large AI models with a high parameter count. For good measure, SambaNova has included 64 gigabytes of HBM3, a type of DRAM that is faster and more expensive than DDR5.
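As a rough way to see how the three memory pools relate to model size, the sketch below picks the smallest pool that could hold a model's weights. It is a simplification that assumes decimal units, two bytes per parameter (16-bit weights) and weights only. The function names are illustrative, and the real chip may well use the pools together.

```python
from typing import Optional

# Capacities as reported above, in bytes (decimal units assumed).
MB, GB, TB = 10**6, 10**9, 10**12
TIERS = [
    ("SRAM", 520 * MB),    # on-chip, fastest
    ("HBM3", 64 * GB),     # faster and more expensive than DDR5
    ("DDR5", 1_500 * GB),  # attached to the chip, largest
]


def weights_bytes(parameters: int, bytes_per_parameter: int = 2) -> int:
    """Approximate weight footprint; 2 bytes per parameter is an assumption."""
    return parameters * bytes_per_parameter


def smallest_fitting_tier(footprint_bytes: int) -> Optional[str]:
    """Return the fastest single pool that can hold the footprint, or None
    if it exceeds what one chip's largest pool can hold."""
    for name, capacity in TIERS:
        if footprint_bytes <= capacity:
            return name
    return None


print(smallest_fitting_tier(400 * MB))                    # SRAM
print(smallest_fitting_tier(weights_bytes(7 * 10**9)))    # HBM3 (14 GB)
print(smallest_fitting_tier(weights_bytes(5 * 10**12)))   # None (10 TB)
```

Under the same assumptions, a five-trillion-parameter model needs about 10 terabytes for its weights, more than any single chip's pool, while eight chips with 1.5 terabytes of DDR5 each provide 12 terabytes in total.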

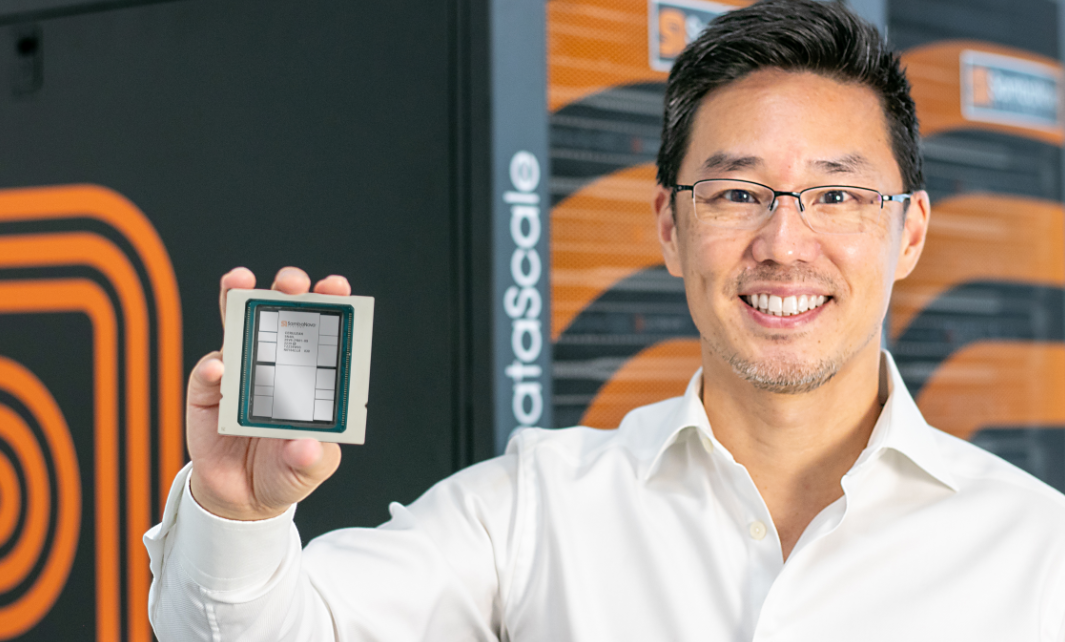

“We’re now able to offer these two capabilities within one chip – the ability to address more memory, with the smartest compute core – enabling organizations to capitalize on the promise of pervasive AI, with their own LLMs to rival GPT4 and beyond,” said SambaNova co-founder and Chief Executive Officer Rodrigo Liang (pictured).

The company will initially make the SN40L available through its SambaNova Suite. It’s a cloud platform that offers access to not only chips but also prepackaged AI models. In conjunction with the SN40L’s debut today, SambaNova added several new prepackaged neural networks including Meta Platforms Inc.’s Llama-2 large language model.

In November, SambaNova will make the chip available with its on-premises DataScale appliances. Each appliance combines several SN40L chips with networking gear, data storage devices and other supporting components. Companies with demanding AI applications can deploy multiple DataScale systems in a cluster.
