AI

Ever since artificial intelligence and large language models became popular earlier this year, organizations have struggled to prevent the accidental or deliberate exposure of data used as model inputs. They aren’t always succeeding.

Two notable cases have splashed into the news this year that illustrate each of those types of exposure: A huge cache of 38 terabytes’ worth of customer data was accidentally made public by Microsoft via an open GitHub repository, and several Samsung engineers purposely put proprietary code into their ChatGPT queries.

“You don’t have to be a sophisticated hacker anymore,” Bill Wong, a principal research director at Info-Tech Research Group, told SiliconANGLE. “All you have to do now is ask ChatGPT to develop an exploit or to write some code to extract your data or find a hole in your infrastructure and play the role of an ethical hacker.”

Sensitive data disclosure ranks sixth on the list of top 10 threats for LLMs compiled by the Open Worldwide Application Security Project. The authors posit scenarios in which unsuspecting users are legitimately interacting with AI tools, or in which someone crafts a set of prompts to bypass input filters and other data checks, and the model ends up revealing sensitive data. “To mitigate this risk, LLM applications should perform adequate data sanitization to prevent user data from entering the training model data,” the authors of the list recently wrote.
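As a rough illustration of the sanitization step the OWASP authors describe, here is a minimal Python sketch that redacts two kinds of identifiers before text is added to a training corpus. The patterns, placeholder tokens and function names are assumptions made for this example, not part of the OWASP guidance, and a real filter would need far broader coverage.

```python
import re

# Illustrative patterns only (assumptions for this sketch): U.S. Social
# Security numbers in NNN-NN-NNNN form and simple email addresses.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def sanitize(text: str) -> str:
    """Replace SSN-like and email-like substrings with placeholder tokens."""
    text = SSN_RE.sub("[REDACTED_SSN]", text)
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return text


def sanitize_corpus(records):
    """Sanitize every record before it is handed to a training pipeline."""
    return [sanitize(record) for record in records]
```

Pattern matching of this kind catches only well-formed identifiers, which is one reason it is best treated as a first pass rather than a complete control.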

What’s interesting is that the attackers and the defenders are about evenly matched, Shailesh Rao, president of Cortex at Palo Alto Networks Inc., said in an interview. “There has never been this level of symmetry between the weapons we have now in cybersecurity,” he said. “The bad guys have the same level and types of tools that the defenders have, plus the advantage of not needing any budget or performance reviews.”

To make matters worse, the increasing number of “hallucinations,” or inaccurate responses by AI models, even prompted the Federal Trade Commission to investigate ChatGPT’s corporate owner, OpenAI LP, earlier this summer. “You can’t hallucinate when it comes to evaluating malware, so you need a great deal of precision in your tools,” said Rao. “You aren’t just looking for a picture of a cat, but whether the cat will bite you or not.”

Enterprise technology managers are mentioning these problems more frequently. An August survey by Gartner Inc. of 249 senior executives found that AI was the second most often mentioned emerging risk, nearly tied with risks of third-party vendor viability.

Two additional groups of worrywarts are the U.S. National Security Agency and the EU, both of which announced new efforts earlier this month. The NSA will create a new AI Security Center that will supervise various AI software development and integration projects to stay ahead of other nations’ efforts. The EU has ranked AI tech No. 2 among the critical technologies essential for the EU’s economic security.

Yet governments have been slow to respond to these threats. At the beginning of the year, the National Institute of Standards and Technology assembled a general AI risk management framework and playbook, which was most recently revised in August. Another advisory workshop is planned this month to consider additional revisions. Though a good first effort, it doesn’t specifically address data leak concerns.

This and other trends in generative AI will be explored Tuesday and Wednesday, Oct. 24-25, at SiliconANGLE and theCUBE’s free live virtual editorial event, Supercloud 4, featuring a big lineup of prominent executives, analysts and other experts.

AI comes with its own special risk profiles, threat models and modes, largely because of the way these models operate and the amount of data they can quickly ingest and process to answer users’ queries. For the past year, many organizations ignored its influence, largely because much of the publicly available training data was several years old. Those are the before times: Now, for better or worse, OpenAI-based models can incorporate the latest online data in their responses.

Added to this is another confounding element: There are numerous AI models already in use, and enterprises need to vet their toolsets carefully to ensure they understand which version is being used by each of their AI security vendors, and what specific measures have been taken to protect their data flows. The models differ subtly in how they’re trained, how they learn from user interaction, and whether their protective features are effective.

Then there is the issue of having data leak protection that is specific to AI circumstances. This is much more involved than scanning for mentions of Social Security numbers and invoices, which traditional data leak protection products did by examining network packet flows moving across particular protocols. Data can be combined from a variety of internal, private cloud and public cloud sources and packaged in new ways that make it harder to track, source and therefore protect. Also, unlike in traditional DLP scenarios, private data is first exported to create the LLM, rather than exported for a particular purpose.

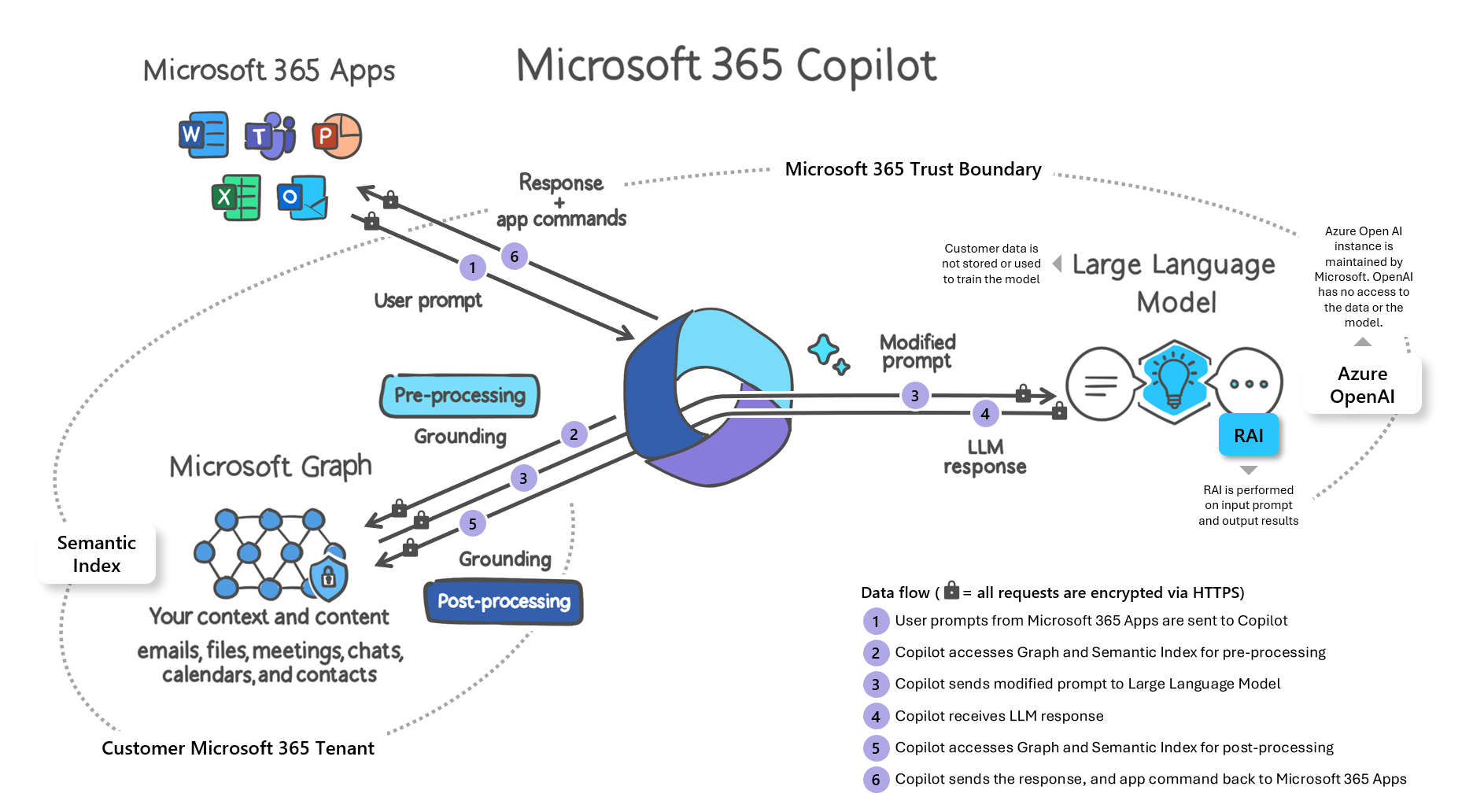

As an example, take the AI chatbot enhancement from Microsoft called Copilot. “What makes Copilot a different beast than ChatGPT and other AI tools is that it has access to everything you’ve ever worked on in 365,” Varonis Systems Inc. Chief Marketing Officer Rob Sobers wrote in an August blog post. “Copilot can instantly search and compile data from across your documents, presentations, email, calendar, notes, and contacts.”

One issue is that user permissions for 365 data are often sloppily configured, so that, according to Sobers, a tenth of all cloud data typically is available to any employee. This means that Copilot can generate new sensitive data, “pouring kerosene on the fire.” Making sense of its operations (pictured) will take some effort, given the myriad data flows and trust relationships.

Last month, Microsoft provided guidelines on how to keep private data private in its AI tooling, promising that Copilot “only surfaces organizational data to which individual users have at least view permissions.” But it’s a big assumption that all users are provisioned correctly when it comes to access rights. That wasn’t true even before cloud computing was introduced into businesses. Microsoft does make it clear that it uses its own version of OpenAI that runs on Azure for serving up Copilot responses, rather than using any public AI service.
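To see why that promise is only as good as the underlying permissions, consider a minimal Python sketch of a permission-filtered retrieval step. It is not Microsoft’s implementation: the `Document` class, the group names and the `retrievable` function are all hypothetical, invented here to illustrate the principle that an assistant surfaces whatever a user is already allowed to view.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    viewers: frozenset  # users or groups holding at least view permission


def principals_for(user, groups):
    """Return the user plus every group that lists the user as a member."""
    return {user} | {name for name, members in groups.items() if user in members}


def retrievable(user, docs, groups):
    """Keep only the documents the user can already view."""
    who = principals_for(user, groups)
    return [doc for doc in docs if doc.viewers & who]


# Hypothetical data: the M&A memo was mistakenly shared with all staff.
groups = {"all-staff": {"alice", "bob"}, "finance": {"alice"}}
docs = [
    Document("Payroll", frozenset({"finance"})),
    Document("Lunch menu", frozenset({"all-staff"})),
    Document("M&A memo", frozenset({"all-staff"})),
]
print([doc.title for doc in retrievable("bob", docs, groups)])
```

In this sketch the filter works exactly as designed, yet the memo still reaches an employee outside finance because it was shared too broadly in the first place. That is the gap Sobers describes.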

Another threat has been catching on: As adversaries using AI get better at impersonating IT security teams, they are using better-crafted phishing lures to persuade employees to leak data, convincing them that the requests are legitimate. Rachel Tobac, chief executive of SocialProof Security, gave a demonstration of this on the “60 Minutes” program, where she used various AI tools to clone her interviewer’s voice and trick the interviewer’s assistant into giving up the interviewer’s passport number.

“Generative AI and particularly deepfakes have the potential to disrupt this pattern in favor of attackers,” said Jack Stockdale, chief technology officer at Darktrace Holdings Inc. “Factors like increasing linguistic sophistication and highly realistic voice deepfakes could more easily be deployed to deceive employees.”

In addition, AI can generate a lot of false-positive security alerts because of the way it parses log data, according to Varonis field CTO Brian Vecci. “AI security tooling is still very early in all three cloud platforms and being used more for convenience and productivity gains than better security,” he told SiliconANGLE.

But there’s a lot that organizations can do to better protect their data from being spread across the AI data universe. New security tools are being developed by both startups and established providers to stop data leaks.

Dozens of security startups have received significant funding over the past few months, each of which claims to offer various protective features to prevent these data leaks, intentional or not, from happening. They include Calypso AI, Nexusflow.ai Inc., Aporia Technologies Ltd., Baffle Inc., Protect AI Inc. and HiddenLayer Inc. Taken together, these firms have raised more than $150 million in funding.

Some of these companies automatically encrypt or anonymize private data before it enters an AI pipeline, while others can detect and block potential AI-based breaches. For example, there’s the ModelScan tool from Protect AI Inc., recently released as open source. It can scan model code for vulnerabilities and potential privilege escalations, to prevent poisoned code or data theft.

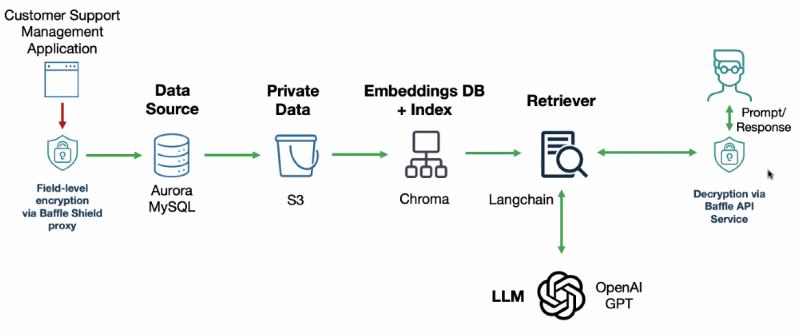

Baffle, for its part, has various data protection products, including one designed for AI situations. It encrypts data as it’s being ingested into an LLM, so that the data can still be manipulated and processed, using the multiple-step procedure shown in the accompanying diagram.

“This is very much an educational effort,” Baffle CEO Ameesh Divatia said in an interview with SiliconANGLE. “But it means you have to understand your data infrastructure and build in the right controls into your business logic to secure things properly.” He mentioned one example where new customer service representatives are being trained, but because they are outsourced from an external company, they can’t be exposed to real customer data. Baffle is working with IBM both as an original equipment manufacturer and as a reseller, and also has a partnership with Amazon Web Services Inc.

The trouble with these tools, like so many older security products, is that no single product can close down all avenues of a potential breach. And as more data is added to AI models, more ways to exploit that data are bound to crop up that don’t fit any of the startups’ existing solutions.

But while startups are getting plenty of attention, most of the established security providers are quickly adding AI to their own product lines, particularly with their existing offensive AI measures. Microsoft Corp.’s Azure, Google LLC’s Cloud Platform and Amazon Web Services Inc. each have beefed up their own threat detection and data leak prevention services to handle AI-sourced attacks or potential data compromises.

For example, Google this past summer rolled out its AI Workbench, consolidating several of its security products, such as VirusTotal, Mandiant threat intelligence and Chronicle analytics, into a coherent offering for its cloud customers with a single portal overview. AWS also beefed up its security services this past summer, with services such as Cedar, CodeWhisperer, GuardDuty and Bedrock.

But both of these announcements show that AI protection will be a lot more complex than delivering ordinary network protection and will require numerous services and careful study to implement properly.

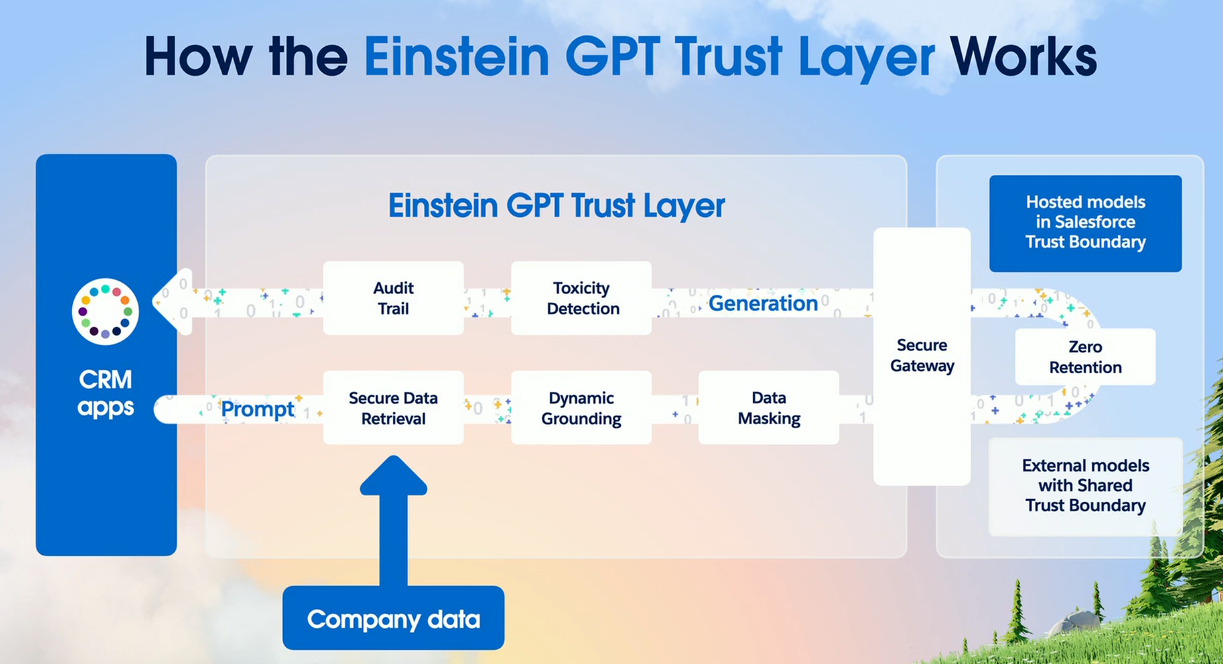

One alternative can be seen with Salesforce Inc.’s recent announcement. It’s vying to become the AI middleware, as it might be called, between the AI models and enterprises’ data. It has announced its AI Cloud and its Einstein Trust Layer to separate the two.

But its protection doesn’t come cheap: The perhaps misnamed starter pack will run $360,000 annually, and it comes with some other technologies Salesforce has acquired, such as Slack, Tableau and MuleSoft, to make it all work. The interlocking pieces and flows between the core customer relationship management of Salesforce and all of these technologies are shown in the accompanying diagram.

Salesforce’s director of strategic content, Stephan Chandler-Garcia, goes into detail about how this trust layer will work in a recent blog post. The trust relationships appear to be well-thought-out, covering how prompts can be constructed safely, how private data is protected and masked, and how the zero-retention step happens as shown in the diagram.

“The Einstein Trust Layer is a safety net, ensuring that our interactions with AI are both meaningful and trusted,” he wrote. This complexity is appealing, but it also means that Salesforce retains control over all private data transactions, which could have the potential to produce failure points that have yet to be discovered.

Finally, there are the well-known security and networking providers, which either have rushed to add AI to their products or now tell a story that they have been using AI for years, just never bothering to make it public. For example, Check Point Software Technologies Ltd. has added AI to its ThreatCloud threat intelligence and detection service, to the extent that half of its 80 different scanning engines are now AI-based.

AI helps to classify potential malware, identify phishing attacks, and dive into malware campaigns to find how they entered a target’s network. The company claims AI has enabled its software to find and block new threats within a few seconds of detection. This means that data leaks can be stopped in near-real time.

Palo Alto Networks Inc. has also added AI to its Cortex managed detection and response product, including automated incident response and better integration across its defensive products to coordinate analytics and collect all security data in a single place.

Cloudflare Inc. rolled out two new AI services: Workers AI, which runs custom workflows on a serverless GPU cloud network using Meta Platforms Inc.’s Llama AI model, and AI Gateway, which is a proxy-server-like service. Both make it easier to scale up applications by providing key AI services, including security, for developers.

And IBM Corp. recently launched its Threat Detection and Response Services, featuring new AI technologies that can help quickly escalate alert response across hybrid cloud environments. Two other tools that have been purpose-built for AI include Nvidia Corp.’s NeMo Guardrails and MITRE’s Adversarial Threat Landscape for Artificial-Intelligence Systems.

The long list of tools and services is just the tip of the AI security iceberg. Crafting an effective security policy will take some careful analysis of potential threats, an understanding of the interlocking pieces that are offered by both new and old providers, and a decision about whether age-old worst practices, such as neglecting least-privilege management and leaving default administrative passwords in place, can finally be laid to rest. AI will continue to be both the boon and the bane of security administrators.

One oft-mentioned suggestion is to create an organization-wide AI policy that “covers the basic guardrails about how to use AI, and work our way toward crafting the right policies.” That’s from one trade association information technology director who asked not to be identified because of security concerns. He told SiliconANGLE of chatbot experiments to help improve his own productivity. “I have had trouble putting an effective policy in place that both regulates our use and doesn’t step on anyone’s toes.”

Another tactic is to promote the positive aspects of AI and then “get employees on your AI team to help the company gain new competitive advantages. Put them on the wall of fame, not the wall of shame,” suggests Pete Nicoletti, global chief information security officer at Check Point Software Technologies Ltd. This means promoting understanding that “ChatGPT is an open book, so employees shouldn’t put up a spreadsheet containing Social Security numbers into it,” says the trade association director.

Yet another is to be careful to keep things in-house. “Companies should be building, hosting and managing their own LLMs and leverage their proprietary data and dramatically reduce the risks from data leaks,” says Nicoletti.

John Cronin, an enterprise technology consultant, told SiliconANGLE that it’s best to “start with the assumption that any system will be breached. Given the fact AI and LLMs will be working with a lot of data and that data could be in a lot of places, these will be very inviting targets for the bad guys. This means that obfuscating and anonymizing data really has to be a core operating practice.”
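One common way to put that advice into practice is to replace direct identifiers with keyed hashes before records reach an AI system. The Python sketch below uses HMAC-SHA256 from the standard library. The field names, the `tok_` prefix and the truncation to 16 hex characters are assumptions made for this example. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the tokens.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Map a value to a stable token that cannot be reversed without the key."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]  # truncated for readability in this sketch


def pseudonymize_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    """Return a copy of the record with the sensitive fields replaced by tokens."""
    return {
        name: pseudonymize(str(value), key) if name in sensitive_fields else value
        for name, value in record.items()
    }
```

Because the same input and key always yield the same token, records can still be joined and counted after masking, while the secret key stays outside the AI pipeline.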

“The important thing is to educate and have the right policies in place,” said Info-Tech Research’s Wong.
