Microsoft Corp. has unveiled a new artificial intelligence model called Phi-2, which it says can match or even exceed the performance of much larger, more established models up to 25 times its size.

In a blog post today, Microsoft said Phi-2 is a 2.7-billion-parameter language model that demonstrates “state-of-the-art performance” compared with other base models on complex benchmarks measuring reasoning, language understanding, math, coding and common sense. Phi-2 is available now through the model catalog in Microsoft's Azure AI Studio, so researchers and developers can begin integrating it into third-party applications.

Phi-2, which Microsoft Chief Executive Satya Nadella first unveiled at Ignite in November, owes its strength to training on what the company calls “textbook-quality” data focused specifically on knowledge, as well as to techniques that transfer learned insights from other models.

Phi-2 is notable because the capabilities of large language models have traditionally been closely tied to their size, measured in parameters. Models with more parameters typically show more abilities, but Phi-2 challenges that pattern.

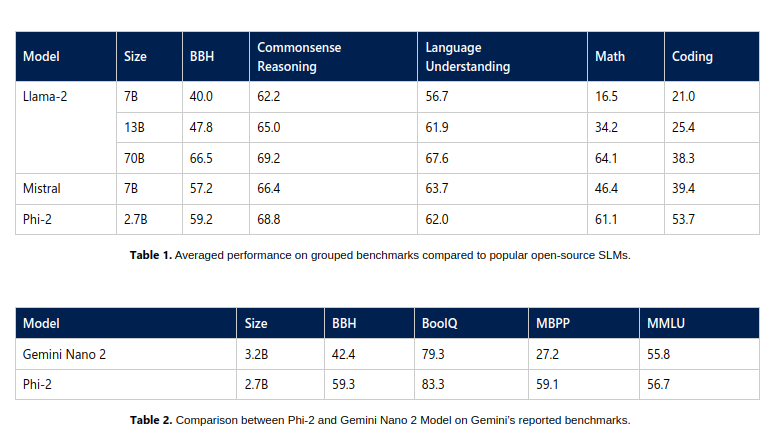

According to Microsoft, Phi-2 can match or exceed much larger foundation models, including Mistral AI's 7-billion-parameter Mistral, Meta Platforms Inc.'s 13-billion-parameter Llama 2 and, on certain benchmarks, even the 70-billion-parameter Llama 2.

Perhaps the most surprising claim is that Phi-2 can outperform Google LLC's Gemini Nano, the most efficient model in the Gemini family of LLMs, which Google announced last week. Gemini Nano is built for on-device tasks and can run on smartphones to power features such as text summarization, advanced proofreading and grammar correction, and contextual smart replies.

Microsoft’s researchers said the tests involving Phi-2 were extensive, spanning language comprehension, reasoning, mathematics, coding challenges and more.

Microsoft attributes Phi-2's performance to carefully curated, textbook-quality training data geared toward teaching reasoning, knowledge and common sense, which allows the model to learn more from less information. The researchers also used techniques that carry over knowledge embedded in smaller models.

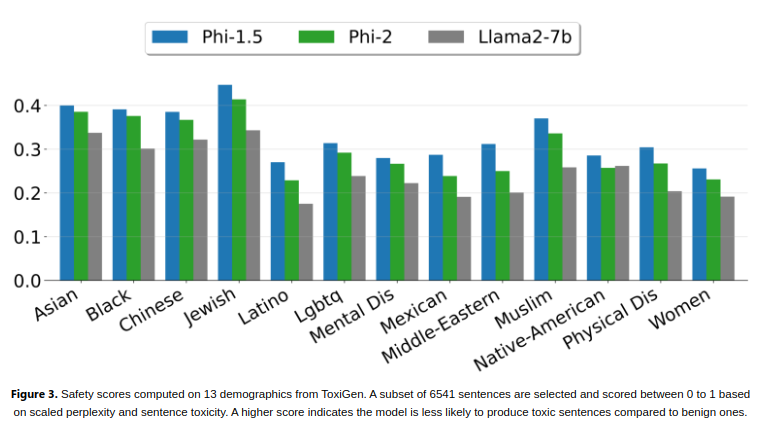

The researchers said it's notable that Phi-2 achieves its strong performance without reinforcement learning from human feedback or instruction fine-tuning, techniques often used to improve the behavior of AI models. Even so, Phi-2 showed less toxicity and bias than other open-source models that do use them. The company reckons this is the result of its tailored data curation.

Phi-2 is the latest in a series of what Microsoft's researchers call “small language models,” or SLMs. The first, Phi-1, debuted earlier this year with 1.3 billion parameters and was fine-tuned for basic Python coding tasks. In September, the company launched Phi-1.5, also with 1.3 billion parameters, trained on new data sources that included various synthetic natural language processing texts.

Microsoft said the efficiency of Phi-2 makes it an ideal platform for researchers who want to explore areas such as enhancing AI safety, interpretability and the ethical development of language models.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.