AI

Low-code artificial intelligence development platform Predibase Inc. said today it’s introducing a collection of no fewer than 25 open-source, fine-tuned large language models that it claims can rival, or even outperform, OpenAI’s GPT-4.

The LLM collection, called LoRA Land, is designed to cater to use cases such as text summarization, code generation and more. Predibase says it offers a more cost-effective way for enterprises to train highly accurate, specialized generative AI applications.

The company, which raised $12.2 million in an expanded Series A funding round in May of last year, is the creator of a low-code machine learning development platform that makes it easier for developers to build, iterate and deploy powerful AI models and applications at lower costs. The startup says its mission is to help smaller companies compete with the biggest AI firms, such as OpenAI and Google LLC, by removing the need to use complex machine learning tools and replacing them with an easy-to-use framework.

Using Predibase’s platform, teams can simply define what they want their AI models to predict using a selection of prebuilt LLMs, and the platform will do the rest. Novice users can choose from a variety of recommended model architectures to get started, while more experienced practitioners can use its tools to fine-tune any AI model’s parameters. Using its tools, Predibase claims, it’s possible to get an AI application up and running from scratch in just a few days.

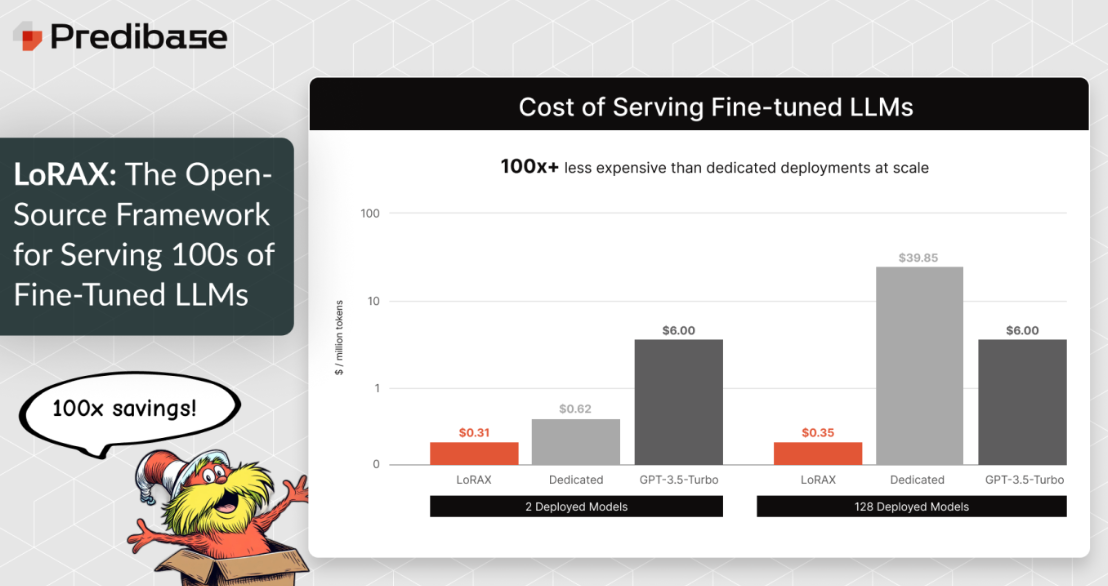

With the launch of LoRA Land, Predibase says, it’s giving companies the ability to serve multiple fine-tuned LLMs cost-effectively on a single graphics processing unit. The LoRA Land LLMs were built atop the open-source LoRAX framework and Predibase’s serverless Fine-tuned Endpoints, with each one specialized for a very specific use case.
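
To illustrate how several fine-tuned models might share one GPU, here is a minimal toy sketch in plain Python. It assumes each fine-tuned model is a single frozen base weight matrix plus a small low-rank adapter (a pair of matrices `B` and `A`), so that only the adapter differs per use case. The class `SharedBaseServer`, the adapter names and the tiny matrices are all hypothetical; this is not the LoRAX API or Predibase’s implementation.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class SharedBaseServer:
    """Toy server: one base weight kept in memory, many small adapters."""

    def __init__(self, base_weight):
        self.base_weight = base_weight  # shared by every adapter
        self.adapters = {}              # name -> (B, A) low-rank pair

    def register(self, name, B, A):
        # Registering an adapter stores only its two small matrices.
        self.adapters[name] = (B, A)

    def forward(self, name, x):
        # Output = base_weight @ x + B @ (A @ x), using the adapter picked per request.
        B, A = self.adapters[name]
        base_out = matvec(self.base_weight, x)
        delta = matvec(B, matvec(A, x))
        return [b + d for b, d in zip(base_out, delta)]


# Hypothetical 2x2 base weight (identity) and two rank-1 adapters.
server = SharedBaseServer([[1, 0], [0, 1]])
server.register("summarize", B=[[1], [0]], A=[[0, 1]])
server.register("codegen", B=[[0], [1]], A=[[1, 0]])

x = [2, 3]
print(server.forward("summarize", x))  # [5, 3]
print(server.forward("codegen", x))    # [2, 5]
```

In this sketch, the same input produces different outputs depending on which adapter the request names, while the base weight is stored only once.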

Predibase argues that the cost of building GPT models from scratch, or even fine-tuning an existing LLM with billions of parameters, is prohibitive. As a result, smaller, more specialized LLMs are becoming a popular alternative, with developers using approaches such as parameter-efficient fine-tuning and low-rank adaptation to create high-performing AI applications at a small fraction of the cost.
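
As a rough illustration of why low-rank adaptation is cheaper, the sketch below compares the number of trainable parameters for fully fine-tuning one weight matrix against training a low-rank pair in its place. The layer size of 4,096 and the rank of 8 are assumed values chosen for illustration, not figures from Predibase.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when every entry of a d_out x d_in weight is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in);
    the original weight stays frozen.
    """
    return rank * (d_out + d_in)


# Assumed, illustrative sizes: a 4096 x 4096 layer adapted at rank 8.
d = 4096
r = 8

full = full_finetune_params(d, d)
lora = lora_params(d, d, r)

print(f"full fine-tuning: {full:,} trainable parameters")  # 16,777,216
print(f"low-rank update:  {lora:,} trainable parameters")  # 65,536
print(f"reduction factor: {full // lora}x")                # 256x
```

Under these assumptions, the low-rank update trains 256 times fewer parameters for that layer, which is the kind of saving that makes fine-tuning many specialized models affordable.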

Predibase says it has incorporated these techniques into its fine-tuning platform, so customers can simply choose the most appropriate LLM for their use case and fine-tune it affordably.

To prove its point, Predibase says the 25 LLMs in LoRA Land were fine-tuned at an average GPU cost of less than $8. Customers will therefore be able to use LoRA Land to fine-tune potentially hundreds of LLMs using a single GPU, the startup said. Beyond the lower cost, companies can also test and iterate much faster than before, because they no longer have to wait for a cold GPU to spin up before fine-tuning each model.

The company has created a very compelling offering, given that AI is often incredibly expensive for companies no matter how they go about it, said Constellation Research Inc. Vice President and Principal Analyst Andy Thurai. He explained that although the initial experimentation costs with LLMs accessed via an application programming interface are fairly inexpensive, they quickly rise when a full-scale AI implementation is deployed.

He added that “the alternative, which involves fine-tuning open-source LLMs, can also be fairly expensive from a resources perspective, and also challenging in terms of skills, creating problems for companies that don’t have any qualified AI engineers.” The analyst said Predibase is now offering a third option with a set of 25 fine-tuned LLMs that can be further refined and deployed on a single GPU.

It’s an interesting idea that could have some big implications for smaller companies, Thurai said, as many smaller, purpose-built models have shown they can outperform mega-sized LLMs in some very specific use cases. “The desire to use open-source LLMs and the limited availability of AI skills could make this a big deal for enterprises looking at this angle,” Thurai said. “If enterprises decided to use different fine-tuned models for each use case they have in mind, Predibase’s offering could be a big hit.”

The company’s serverless fine-tuned endpoints deployment option lets customers even create AI models that run without GPU resources, which makes running costs much cheaper too, the analyst added. “While Predibase’s claim that its models outperform GPT-4 needs to be proven, it sounds like a very compelling alternative for many AI applications,” Thurai said.

Predibase co-founder and Chief Executive Dev Rishi said a number of the company’s customers have already recognized the advantage of using smaller, fine-tuned LLMs for different applications. One such customer is the AI startup Enric.ai Inc., which offers a platform for coaches and educators to create AI chatbots that incorporate text, images and voice.

“This requires using LLMs for many use cases, like translation, intent classification and generation,” said Enric.ai CEO Andres Restrepo. “By switching from OpenAI to Predibase, we’ve been able to fine-tune and serve many specialized open-source models in real-time, saving over $1 million annually while creating engaging experiences for our audiences. And best of all, we own the models.”

Developers can get started fine-tuning LoRA Land LLMs today through Predibase’s free trial offering. It also offers a free developer tier with capped resources, plus paid options for companies with bigger projects in mind.
