APPS

Nvidia Corp. today announced that it’s expanding Omniverse Cloud with application programming interfaces, which will open up the platform for industrial digital twin applications and software developers.

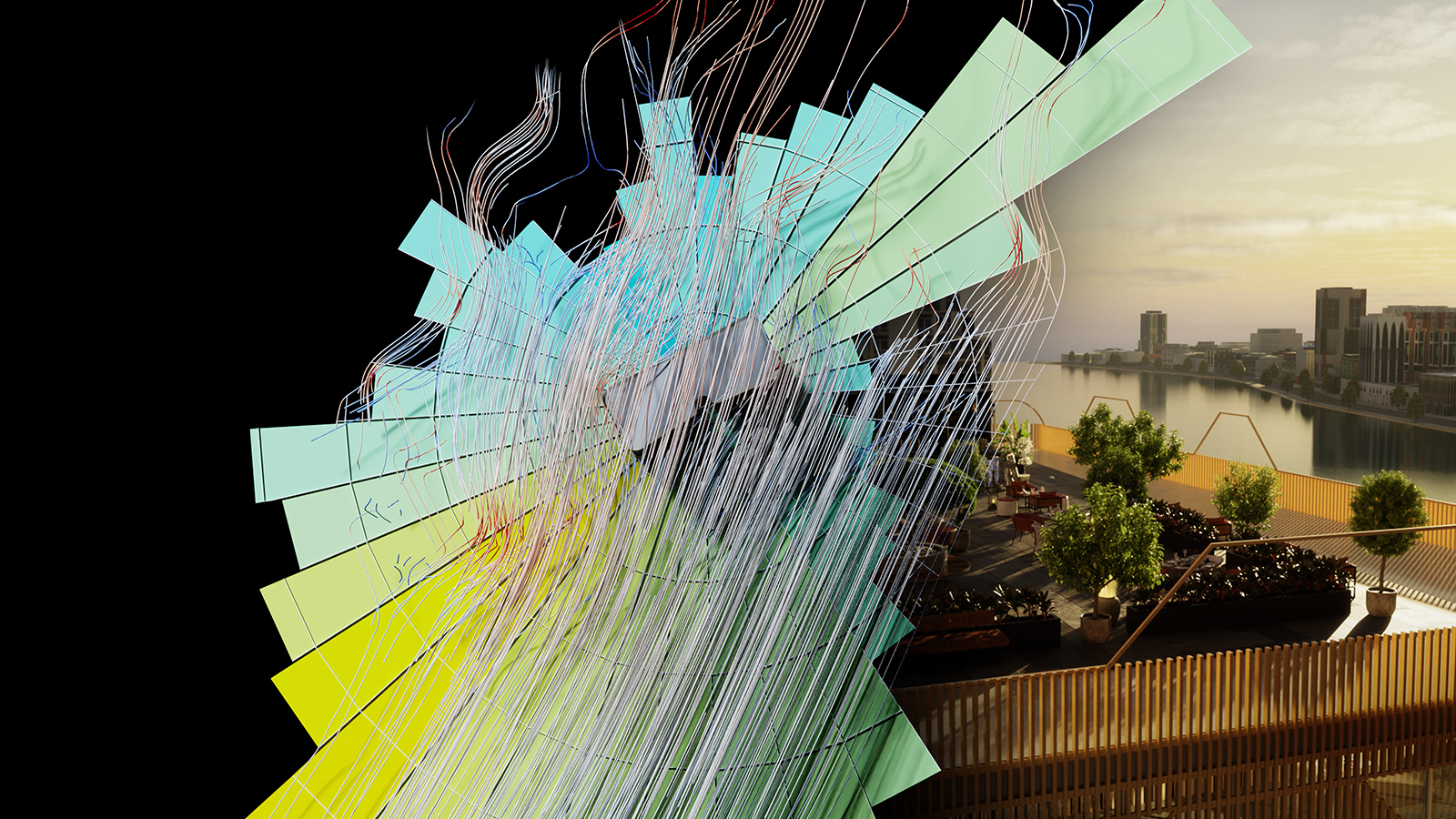

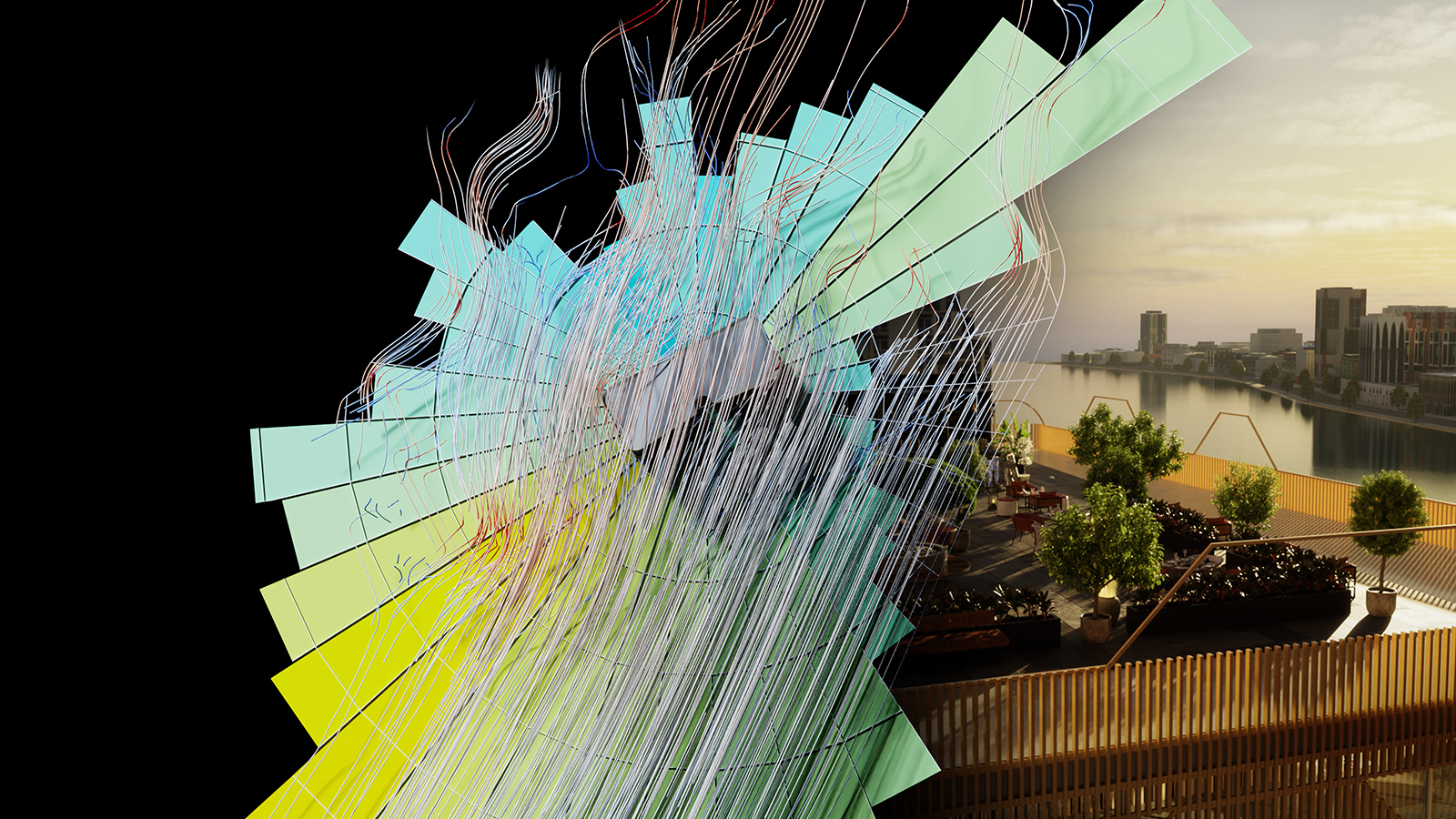

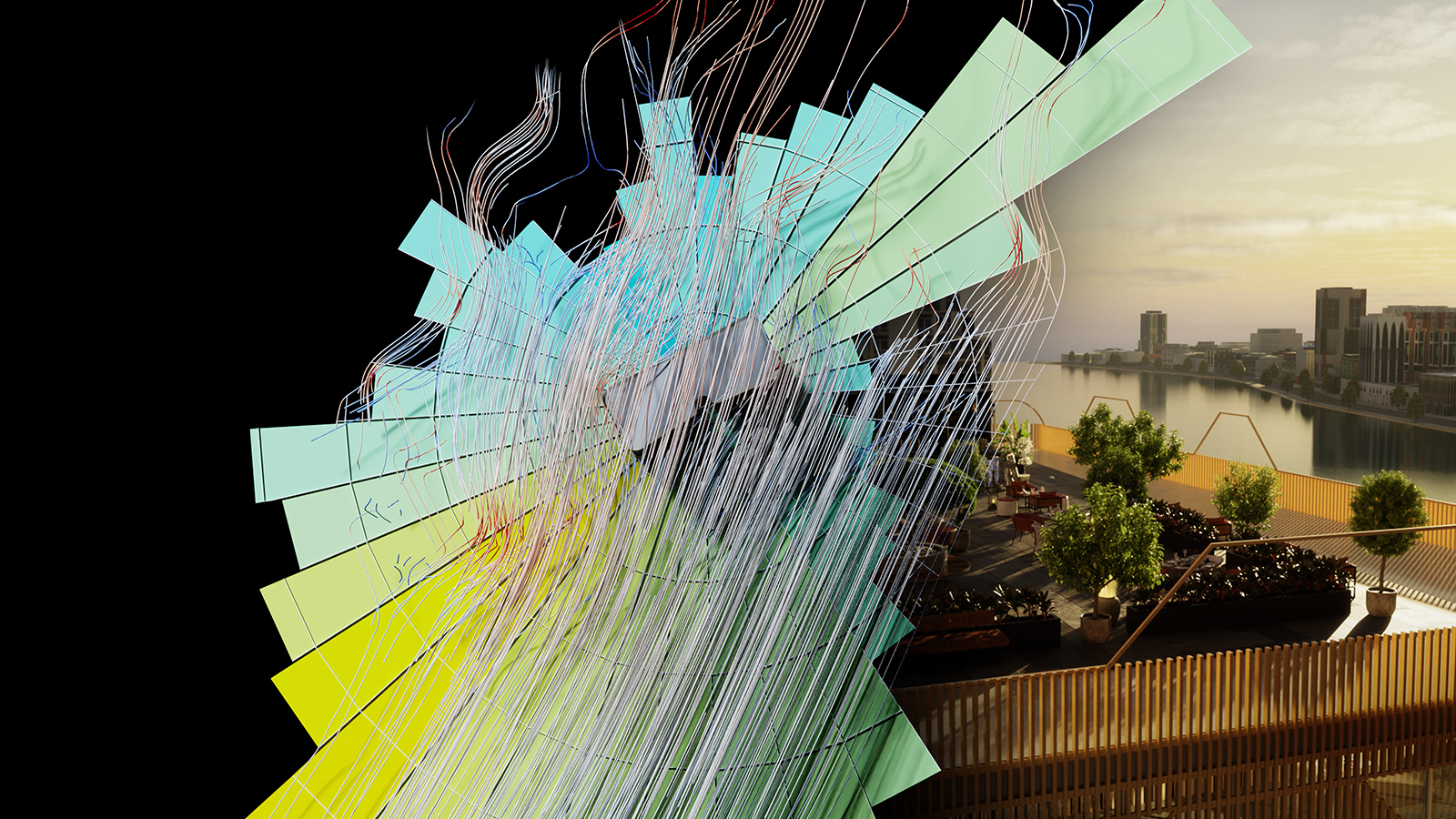

Omniverse is a collaborative “metaverse” platform that developers, artists and engineers use to build and visualize 3D models of projects. Objects simulated within Omniverse, known as “digital twins,” behave like their real-world counterparts thanks to hyperrealistic physics simulation, and the platform lets individual users and enterprise teams work on them together.

Omniverse Cloud is a cloud-based software-as-a-service offering that lets artists, developers and teams use the platform to design, publish and operate metaverse applications from anywhere in the world.

“Everything manufactured will have digital twins,” Nvidia founder and Chief Executive Jensen Huang said ahead of the company’s annual GTC conference in San Jose, California. “Omniverse is the operating system for building and operating physically realistic digital twins. Omniverse and generative AI are the foundational technologies to digitalize the $50 trillion heavy industries market.”

At the GTC 2024 developer conference, Nvidia announced five new Omniverse Cloud APIs, which can be used individually or in combination to connect applications or workflows to the platform. Interoperability comes from OpenUSD, a high-performance, extensible format for describing animated 3D scenes that was originally developed for large-scale film and visual effects production.

The new APIs are USD Render, USD Write, USD Query, USD Notify and Omniverse Channel. Respectively, they generate ray-traced RTX renders of OpenUSD data, modify and interact with that data, query scene data, track USD changes, and connect users, tools and worlds to enable collaboration. With these capabilities, developers could create “windows” from their applications into Omniverse Cloud, allowing “metaverse” scenes and digital twins to be pulled directly into their software.

“Through the Nvidia Omniverse API, Siemens empowers customers with generative AI to make their physics-based digital twins even more immersive,” said Roland Busch, president and CEO of Siemens AG. “This will help everybody to design, build and test next-generation products, manufacturing processes and factories virtually before they are built in the physical world.”

The same Omniverse Cloud APIs also make it possible to stream Omniverse Cloud content through Nvidia’s Graphics Delivery Network, a global network of data centers for streaming 3D experiences, to Apple’s Vision Pro mixed reality headset.

Nvidia demonstrated how Omniverse-based workflows could be combined with Vision Pro’s high-resolution displays and the company’s RTX cloud rendering capabilities to produce visually stunning experiences with just the device and an internet connection. The cloud-based approach allows real-time, physically based renderings of digital twins to be streamed directly to the Vision Pro in high fidelity, keeping the visuals true to reality, the company said.

The Vision Pro headset is capable of “mixed reality,” where the headset uses cameras and sensors on the front to pass through a view of what the user sees and then overlays 3D renders of objects onto the real world. The headset can also generate immersive virtual reality experiences, where the view itself is completely computer-generated. Apple Inc. CEO Tim Cook referred to the device as a “spatial computer” when it was unveiled, touting its ability to connect the virtual and the physical.

“The breakthrough ultra-high-resolution displays of Apple Vision Pro, combined with photorealistic rendering of OpenUSD content streamed from Nvidia accelerated computing, unlocks an incredible opportunity for the advancement of immersive experiences,” said Mike Rockwell, vice president of the Vision Products Group at Apple. “Spatial computing will redefine how designers and developers build captivating digital content, driving a new era of creativity and engagement.”

With the introduction of Apple Vision Pro, developers can combine remote cloud rendering with local on-device rendering. That means users can render fully interactive digital twins and experiences using Apple’s native SwiftUI and RealityKit frameworks alongside the Omniverse RTX Renderer streaming through GDN.
