AI

With the AI boom at full throttle, I cannot recall a trade show in recent memory more eagerly anticipated than Nvidia Corp.’s GTC 2024.

After five years of being virtual, the event returned to an in-person format, adding to the excitement. While the event was held at the San Jose Convention Center, the keynote was up the street at the SAP Center, home of the San Jose Sharks and a popular venue for many rock concerts. The latter is the appropriate comparison, as the keynote had that kind of feel. The venue was packed with people eager to see if Nvidia could meet the hype around AI today.

The keynote didn’t disappoint. It started with a refreshed “I am AI” video, which got the crowd in the AI mood. There were many product announcements – from the new graphics processing unit, Blackwell, to imaging and supercomputers – but several key themes ran through the event. Here are my key takeaways from Nvidia GTC 2024:

Cue up Michael Buffer – “In the green corner, the undisputed and undefeated AI Champion of the world…. Nvidia.” In all my years covering tech, I do not recall another company with this much momentum built on a technology trend. Nvidia is not only building products for AI but also setting the vision and pace of innovation.

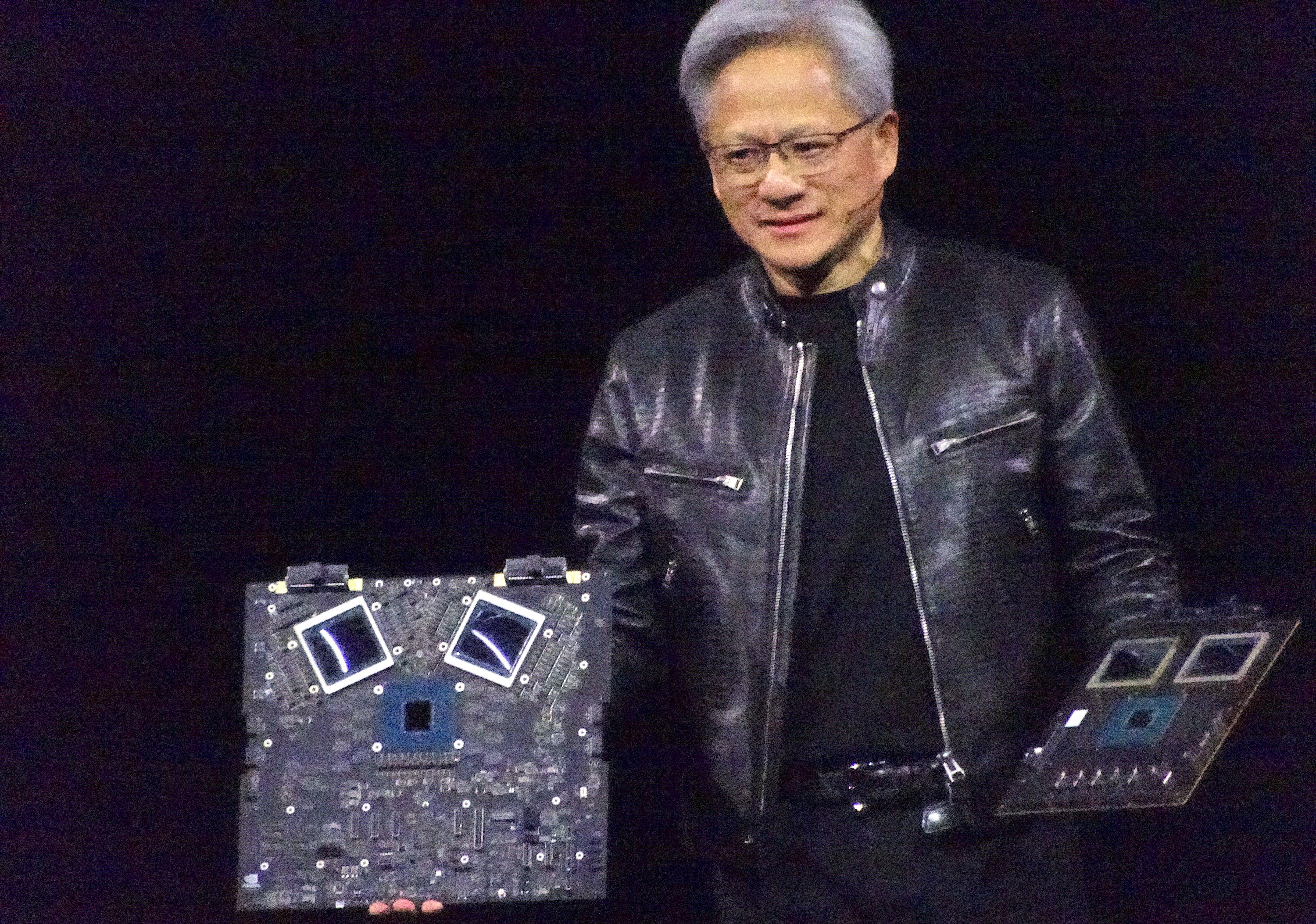

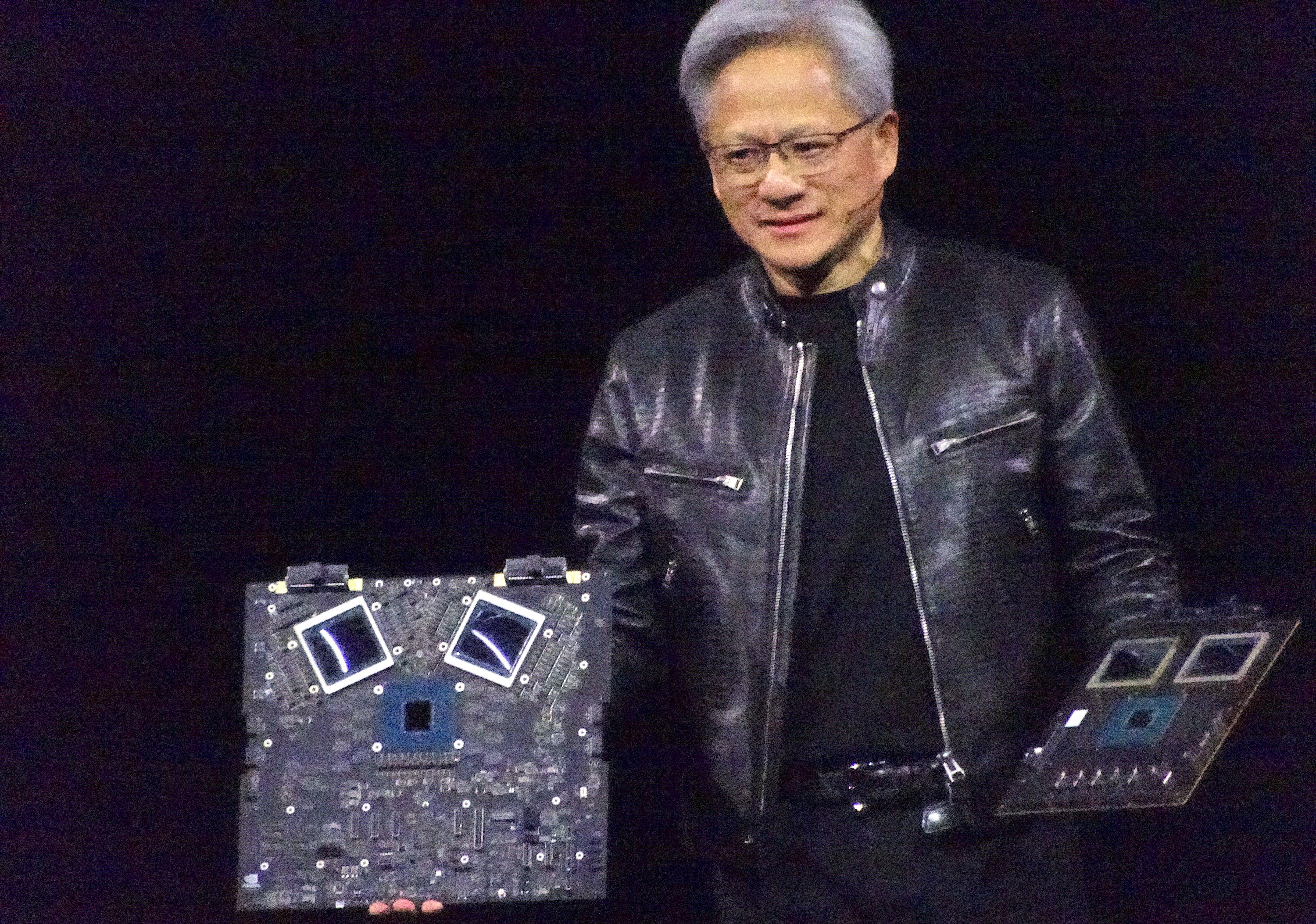

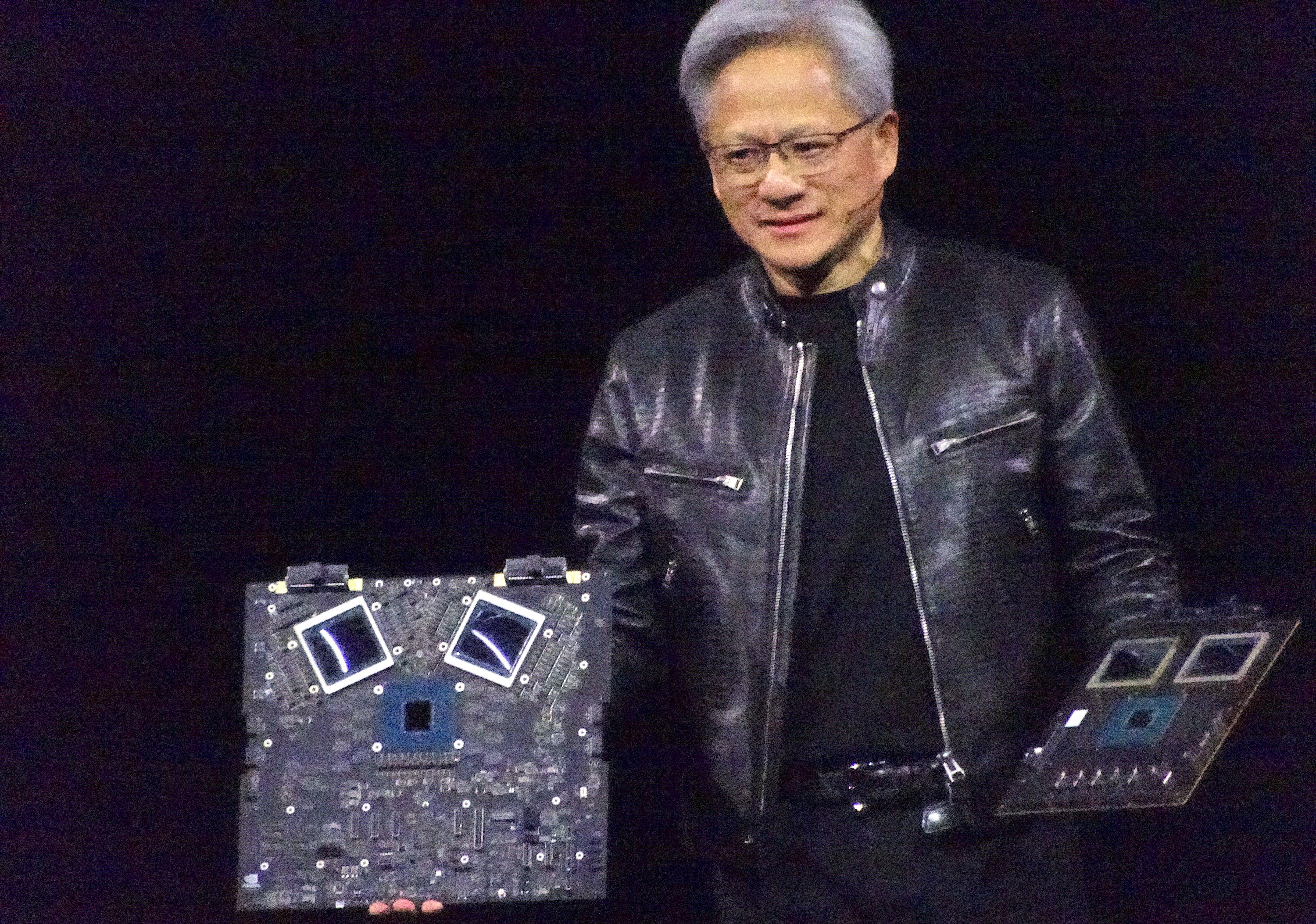

CEO Jensen Huang (pictured) is to AI what John Chambers was to the internet, but the economic impact of AI dwarfs the internet era. During the analyst Q&A, Huang talked about how the vision of what AI could do for us probably sounded crazy years ago, but now it’s real. The Chambers analogy holds here too: Chambers made predictions such as “voice would be free,” which sounded equally crazy but eventually became a reality.

This puts Nvidia in an interesting bellwether position, as its actions, comments and financial performance will now affect not only Nvidia itself but also its ecosystem partners and many competitors. As Uncle Ben told Peter Parker, “With great power comes great responsibility,” and for Nvidia, being the king of AI changes expectations. Huang and Nvidia seem up to the task, but now the real work begins.

For all the hoopla and mayhem surrounding AI today, we are very early in the AI cycle. While the hyperscalers and a handful of big companies might have their AI strategy in place, most companies need help with where to use it, how to implement it, how to retrain staff, and what kind of ROI can be gained.

Last week, I talked with Bill Schlough, chief information officer of the San Francisco Giants, and asked him how he thinks of using AI; he told me, “The bigger question is, how are we not thinking about AI as it now permeates every aspect of our business? It’s in every conversation we have.”

This is a sentiment echoed by many CIOs today. There is an awareness that an AI strategy is needed, but how it manifests itself has yet to be written. Again, going back to the internet analogy, I was in corporate information technology in the early years, and everyone, from the board to corporate executives to employees, was banging the “We need an internet strategy” drum.

Today, it’s standard operating procedure, and one day, AI will be as well. Using the baseball metaphor, it seems we aren’t quite in the first inning, but when AI starts to take off, it will accelerate quickly.

The AI and Nvidia critics will point to the fact that GPUs use significantly more power than CPUs, and that’s true, but one can’t look at these things in isolation. Instead, it’s essential to look at power consumption through the lens of workloads. It’s like comparing vehicles. If I asked someone whether a Prius or a Ford F-150 was more fuel-efficient, they would immediately say the Prius.

But what if I added the caveat that the driver needed to haul 5,000 pounds of gravel across the country? Then the truck wins, as it can do it in one drive, whereas the Prius must make several trips.

AI not only enables new workloads but also allows for tasks to be done differently. In the analyst Q&A, Huang took this question head-on and talked about how AI can save much of the wasted energy in the grid. A simple example is that AI can figure out how to charge batteries more efficiently and extend battery life.

He was also very bullish about AI being used to help adapt to situations. “The thing AI could have the most significant impact on is adaptation,” he said. “If harm will come our way, AI can tell us when and where it will show up and help us take corrective action.”

GPUs draw more power and continue to grow more power-hungry, but they also enable us to do things so much more efficiently that they create a net positive impact on the planet.

While Nvidia is best known as a GPU company, what made it the company it is today is the software stack that makes working with the GPUs easy. That started with CUDA and then evolved to add software libraries, SDKs and other programming tools. The next phase of its software strategy is the NIM, for Nvidia Inference Microservices, which brings together optimized inference engines, application programming interfaces and AI models in a container for simple and rapid deployment. Nvidia offers pre-packaged NIMs, but it also allows companies to use their own data for custom NIMs.

The use of NIMs lets companies be a lot more agile as they can experiment more with their software and run “what-if scenarios,” which is important in the GenAI era, particularly with retrieval-augmented generation or RAG. With generative AI, things are rarely perfect out of the box, and queries require more and more refinement.

By packaging everything into a microservice, Nvidia is cutting down the time it takes developers to bring the necessary pieces together. This will only add to the simplicity developers have in working with Nvidia and keep the company as the platform of choice for that audience as well as for systems integrators.

Nvidia is often criticized for its vertically integrated solutions. Whether someone is looking at the Drive Kit, Clara, Omniverse, DGX or other Nvidia platforms, there is one commonality: The infrastructure stack is all Nvidia. This includes not only the GPU but also the network and the software that sits on top of it.

As mentioned earlier, we are early in the AI cycle, and vertical integration is the fastest path to a successful deployment. Without an engineered solution, the customer may be able to cobble together a solution composed of “best of breed” components but then will spend months tweaking and tuning it for optimal performance.

As the industry matures and deployments become more standardized, the ecosystem can grow, and vertical integration becomes less necessary. It’s worth noting that Nvidia has been good about building reference architectures with many ecosystem partners such as Pure Storage, Cisco Systems and Supermicro. These are still vertically integrated solutions, since Nvidia and its partners have put together the blueprint, but they do offer customers choice.

Overall, GTC 2024 was a phenomenal show. A tremendous amount of innovation helped make much of the conference’s vision a reality. Nvidia is currently the darling of the AI industry and will reap the benefits of this for at least half a decade, maybe longer. One wonders if the company can continue to innovate at the torrid pace it has, but right now, there is no reason to assume it can’t.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.
