Hewlett Packard Enterprise Co. is upgrading Aruba Networking Central, its cloud-based network management platform, with large language models designed to make administrators more productive.

The company announced the update today. The models complement the platform’s existing artificial intelligence features, which ease tasks such as fixing malfunctioning switches.

Aruba Networking Central enables administrators to monitor their companies' network infrastructure through a centralized interface. The platform collects data on office Wi-Fi access points, switches and other equipment. Administrators can also use it to configure networking equipment and fix malfunctions.

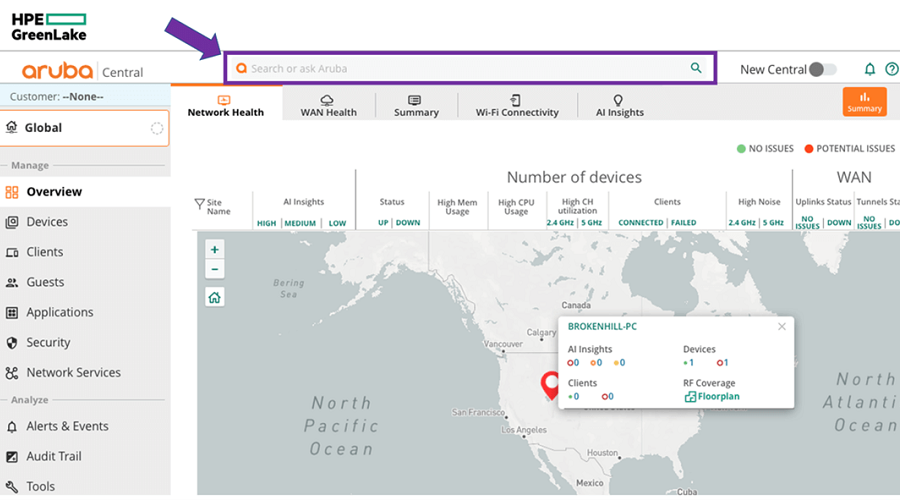

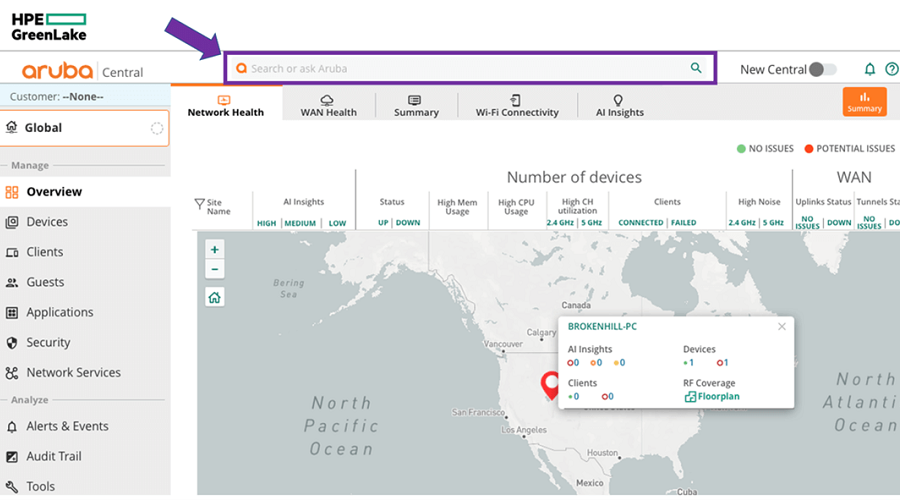

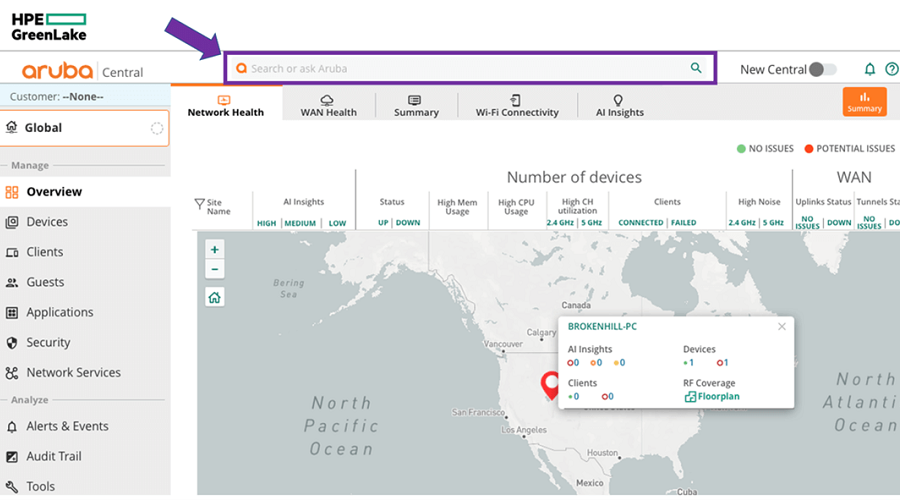

The new LLMs that HPE debuted today are rolling out to Aruba Networking Central's search bar. The tool, located at the top of the interface, already uses AI to help administrators find troubleshooting guidance and other technical information. HPE says its new language models significantly expand the search bar's capabilities.

Many of the improvements the LLMs bring to the tool focus on ease of use. According to the company, the search bar can now better understand queries that contain networking-specific technical terms. A new autocomplete feature suggests several possible queries based on the text the user has started typing. HPE also says the upgraded search bar returns results significantly faster than many commercial chatbots.

Another new feature allows the tool to summarize documentation about HPE’s Aruba networking products. When an administrator asks for information about tasks such as switch installation, the search bar can generate a natural language response based on the relevant technical guides. Each response includes links to the documents from which the information was sourced.

According to HPE, one of the LLMs in today's update was trained to detect personal data as well as so-called corporate-identifiable information. The model is used for two tasks. First, it enables the search bar to better understand queries that reference company-specific information such as the name of an office building. Second, it allows HPE to detect queries that contain personal information and exclude them from its LLMs' training datasets.

The company says it trained the new LLMs on a data repository ten times larger than those used by rivals. That repository includes more than 3 million queries that users have entered into Aruba Networking Central's search bar. HPE also added tens of thousands of publicly available technical documents it has published about its Aruba product portfolio.

“We have implemented multiple locally trained and hosted LLMs to take advantage of the human understanding and generative qualities of GenAI without the risk of data leaks via external API queries to and from our data lake,” HPE executives Karthik Ramaswamy and Alan Ni detailed in a blog post.

The LLMs started becoming available to Aruba Networking Central customers earlier this month. HPE expects to complete the rollout by April.