Databricks Inc. today launched DBRX, a general-purpose large language model that it says outperforms all existing open-source models — and some proprietary ones — on standard benchmarks.

The company said it’s open-sourcing the model to encourage customers to migrate away from commercial alternatives. It cited a recent Andreessen Horowitz LLC survey that found nearly 60% of artificial intelligence leaders are interested in increasing their open-source usage, or switching to open source outright, once fine-tuned open-source models roughly match the performance of proprietary ones.

“The most valuable data, I believe, is sitting inside enterprises,” Databricks Chief Executive Ali Ghodsi (pictured) said in a briefing with journalists. “AI is kind of precluded from those spheres, so we’re trying to enable that with open-source models.”

DBRX uses a “mixture-of-experts” architecture, a type of neural network that divides the learning process among multiple specialized subnetworks known as “experts.” Each expert is proficient in a specific aspect of the designated task. A “gating network” decides how to allocate the input data among the experts optimally.
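To make the routing idea concrete, here is a minimal pure-Python sketch of top-k gating, a common way MoE layers split work among experts. It is an illustration under stated assumptions, not DBRX's code: the expert count, the `top_k` value, the vector size and the random linear "experts" are all hypothetical choices, and the helper names (`make_expert`, `moe_forward`) are invented for this example.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, seed):
    """Hypothetical expert: a fixed random dim x dim linear map."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w[i][j] * x[j] for j in range(dim)) for i in range(dim)]

    return expert


def moe_forward(x, gate_weights, experts, top_k):
    """Route one input vector through the top_k highest-scoring experts."""
    # Gating network: a linear layer producing one score per expert.
    scores = [sum(g * xi for g, xi in zip(row, x)) for row in gate_weights]
    # Pick the top_k experts by score; all others are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the chosen scores gives the mixing weights.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts do any work
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Illustrative sizes only; not DBRX's real configuration.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
experts = [make_expert(DIM, seed=i) for i in range(NUM_EXPERTS)]
gate = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token, gate, experts, TOP_K)
print("experts used:", used)
```

The design point is that the gate scores every expert cheaply, but only the `top_k` winners actually compute on the input, so most of the network sits idle for any given token.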

The MoE architecture is built on the MegaBlocks open-source project, which its developer said can more than double training speed compared with other MoE architectures and is up to twice as compute-efficient. DBRX handles only text; unlike some so-called multimodal models from OpenAI, Google LLC and others, it does not process images or video.

Databricks Generative AI Vice President Naveen Rao, who joined the company after it acquired the startup he co-founded, MosaicML Inc., last year, said he was initially skeptical about MoE when his firm started using it in 2021. However, he now thinks it works more like the human brain than monolithic models. “We’re the ones who made it work,” he said.

Ghodsi said the most relevant use cases for DBRX are those involving critical governance and security, such as financial services and healthcare, or where the tone of responses matters, such as customer self-service.

The model does require serious horsepower: a PC or server with at least four Nvidia Corp. H100 graphics processing units, which are in high demand and cost tens of thousands of dollars apiece.
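A quick back-of-envelope calculation suggests why four GPUs is the floor. This sketch assumes the weights are held in 16-bit precision (2 bytes per parameter) and that the 80 GB H100 variant is used; neither figure comes from the article, and the estimate ignores activations and cache memory, which only push the requirement higher.

```python
import math

# Assumptions (not stated in the article): weights held in 16-bit precision
# (2 bytes per parameter) and the 80 GB H100 variant.
TOTAL_PARAMS = 132e9   # DBRX parameter count, from the article
BYTES_PER_PARAM = 2    # assumption: bf16/fp16 weights
H100_MEMORY_GB = 80    # assumption: 80 GB H100

weights_gb = TOTAL_PARAMS * BYTES_PER_PARAM / 1e9
# Every expert must be resident in memory, even though only some run per token.
gpus_needed = math.ceil(weights_gb / H100_MEMORY_GB)
print(f"weights: {weights_gb:.0f} GB -> at least {gpus_needed} GPUs just to hold them")
# prints: weights: 264 GB -> at least 4 GPUs just to hold them
```

Note that a mixture-of-experts design reduces compute per token, not the memory needed to store the full set of weights.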

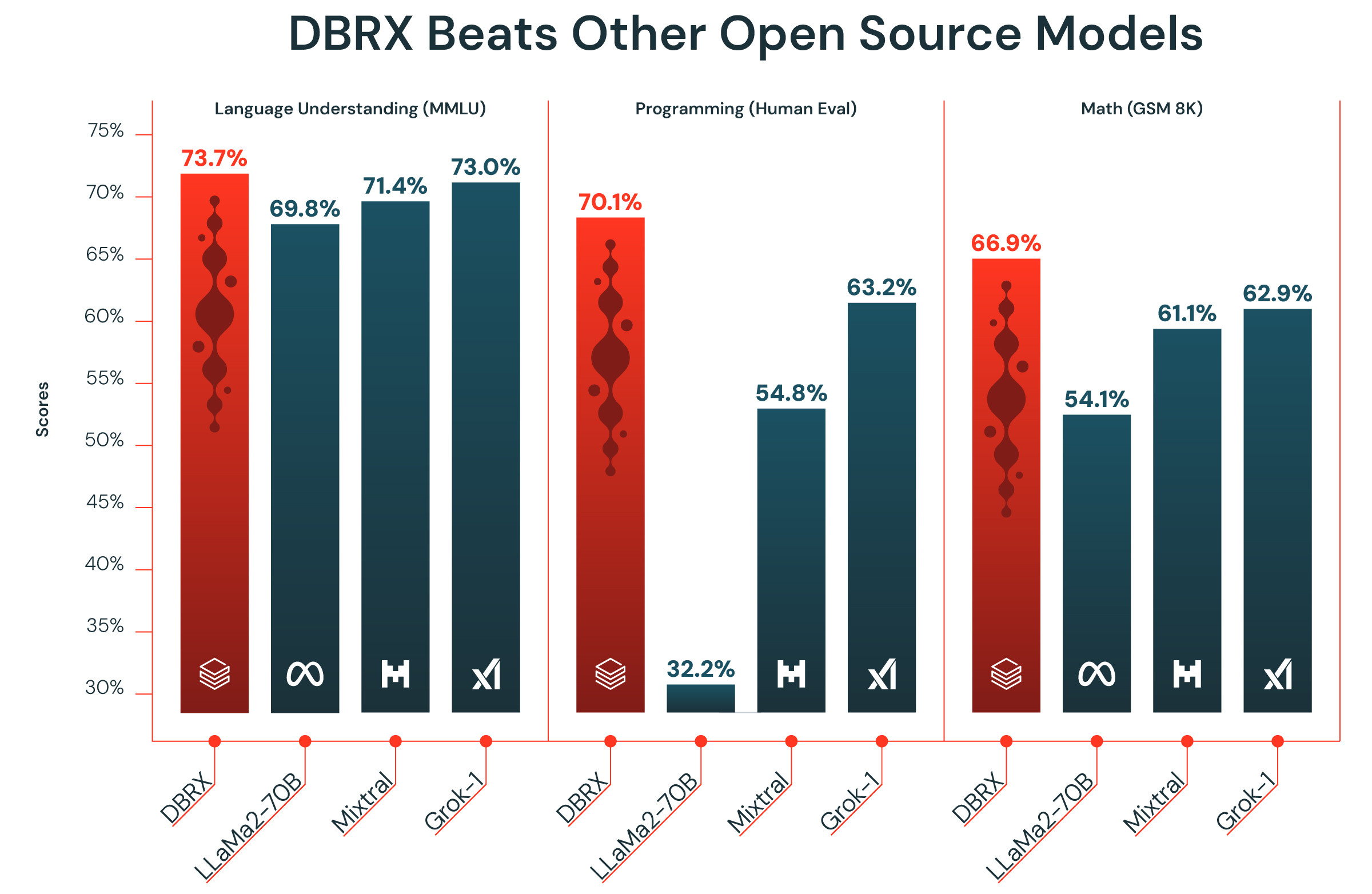

Although DBRX, at 132 billion parameters, is nearly twice as big as Llama 2 70B, it’s twice as fast, Databricks said. It claimed DBRX outperformed the existing open-source LLMs Llama 2 70B and Mixtral-8x7B (another model that takes the MoE approach), as well as the proprietary GPT-3.5, though not GPT-4, in benchmarks for language understanding, programming, math and logic. The model cost $10 million to train on public and licensed data sources over a two-month period using 3,000 H100 GPUs.
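The training figures can be sanity-checked with simple arithmetic. This sketch assumes "two months" means 60 days and that all 3,000 GPUs ran around the clock; the result is only an implied blended rate, since the $10 million may cover more than GPU time.

```python
# Implied cost per GPU-hour from the article's figures.
# Assumptions: "two months" taken as 60 days, all GPUs busy 24 hours a day.
TRAINING_COST_USD = 10_000_000
NUM_GPUS = 3_000
DAYS = 60

gpu_hours = NUM_GPUS * DAYS * 24
cost_per_gpu_hour = TRAINING_COST_USD / gpu_hours
print(f"{gpu_hours:,} GPU-hours, about ${cost_per_gpu_hour:.2f} per GPU-hour")
# prints: 4,320,000 GPU-hours, about $2.31 per GPU-hour
```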

The key to DBRX is that, while the model is large, with 132 billion parameters, it is cleverly designed to operate as a smaller model, explained Tony Baer, a principal at dbInsight LLC. “At run time, a logical switching layer chooses the right expert or mix of experts, and in so doing, literally cuts inference workloads down to more sustainable size,” he said. “Compared to LLMs, these mixtures of experts should run much faster and cheaper. Databricks is one of the first major commercial language models to take advantage of this emerging approach.”
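Baer's point about operating "as a smaller model" comes down to how many parameters each token actually touches. The sketch below assumes equal-size experts, 16 experts with 4 selected per token, and a hypothetical 4 billion / 128 billion split between shared and expert weights that sums to the article's 132 billion; treat all of these as illustrative assumptions, not Databricks' published layout. The helper `active_params` is invented for this example.

```python
def active_params(shared, expert_total, num_experts, top_k):
    """Parameters used per token when top_k of num_experts equal-size experts run.

    shared: weights every token passes through (e.g. attention, embeddings)
    expert_total: weights split evenly across all experts
    """
    if not 0 < top_k <= num_experts:
        raise ValueError("top_k must be between 1 and num_experts")
    return shared + expert_total * top_k / num_experts


# Hypothetical split of a 132B-parameter model; illustrative only.
SHARED, EXPERT_TOTAL = 4e9, 128e9
NUM_EXPERTS, TOP_K = 16, 4  # assumption: 16 experts, 4 selected per token

total = SHARED + EXPERT_TOTAL
active = active_params(SHARED, EXPERT_TOTAL, NUM_EXPERTS, TOP_K)
print(f"{active / 1e9:.0f}B of {total / 1e9:.0f}B parameters active per token ({active / total:.0%})")
# prints: 36B of 132B parameters active per token (27%)
```

Because per-token compute scales roughly with the active parameter count rather than the total, a sparse model like this can respond faster than a dense model of similar overall size, even though all of its weights still have to be loaded.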

Databricks said customers can pair DBRX with Databricks Mosaic AI, a set of unified tools for building, deploying and monitoring AI models, to build and deploy safe, accurate generative AI applications without giving up control of their data.

DBRX is freely available for research and commercial use on GitHub and Hugging Face. Developers can use its long context abilities in retrieval-augmented generation systems and build custom DBRX models on their own data today on the Databricks platform.

“Given the track that Databricks has been on since the MosaicML acquisition, not to mention their depth of funding, it seemed like it would only be a matter of time before they launched their own general-purpose language model, going against the OpenAIs, Anthropics and Coheres of the world,” said Baer. “This is a space where, counter to the narrative of the past 20 years where the cloud lowered barriers to entry for startups, gargantuan training requirements are having just the opposite effect with LLMs. For now, only the well-capitalized are ready to play.”

That’s what Databricks is aiming to change.

With reporting by Robert Hof

