INFRA

Updated with Monday stock movement:

Chipmaker Nvidia Corp. has told Microsoft Corp., Meta Platforms Inc., Google LLC and other customers that its upcoming, highly anticipated “Blackwell” B200 graphics processing units for artificial intelligence workloads are likely to be delayed by at least three months.

A report Friday night by The Information said one of Nvidia’s key manufacturing partners has discovered a “design flaw” that will prevent the chips from launching on time. The flaw was discovered “unusually late” during the production process, anonymous sources revealed.

The Blackwell GPUs are the successor to Nvidia’s hugely popular and in-demand H100 chips, which are used to power the vast majority of generative AI applications in the world today. They were announced in March, and are said to deliver a performance boost of up to 30 times over the H100, while reducing energy consumption by as much as 25% on some workloads.

The delay was unexpected, as Nvidia said just three months ago that its “Blackwell-based products” would be available from its partners later this year. They were slated to be the first major release in a cadence of AI chip updates from semiconductor firms, with rivals such as Advanced Micro Devices Inc. also set to launch their own products in the coming months.

Update: Nvidia’s shares were falling more than 7% in Monday morning trading.

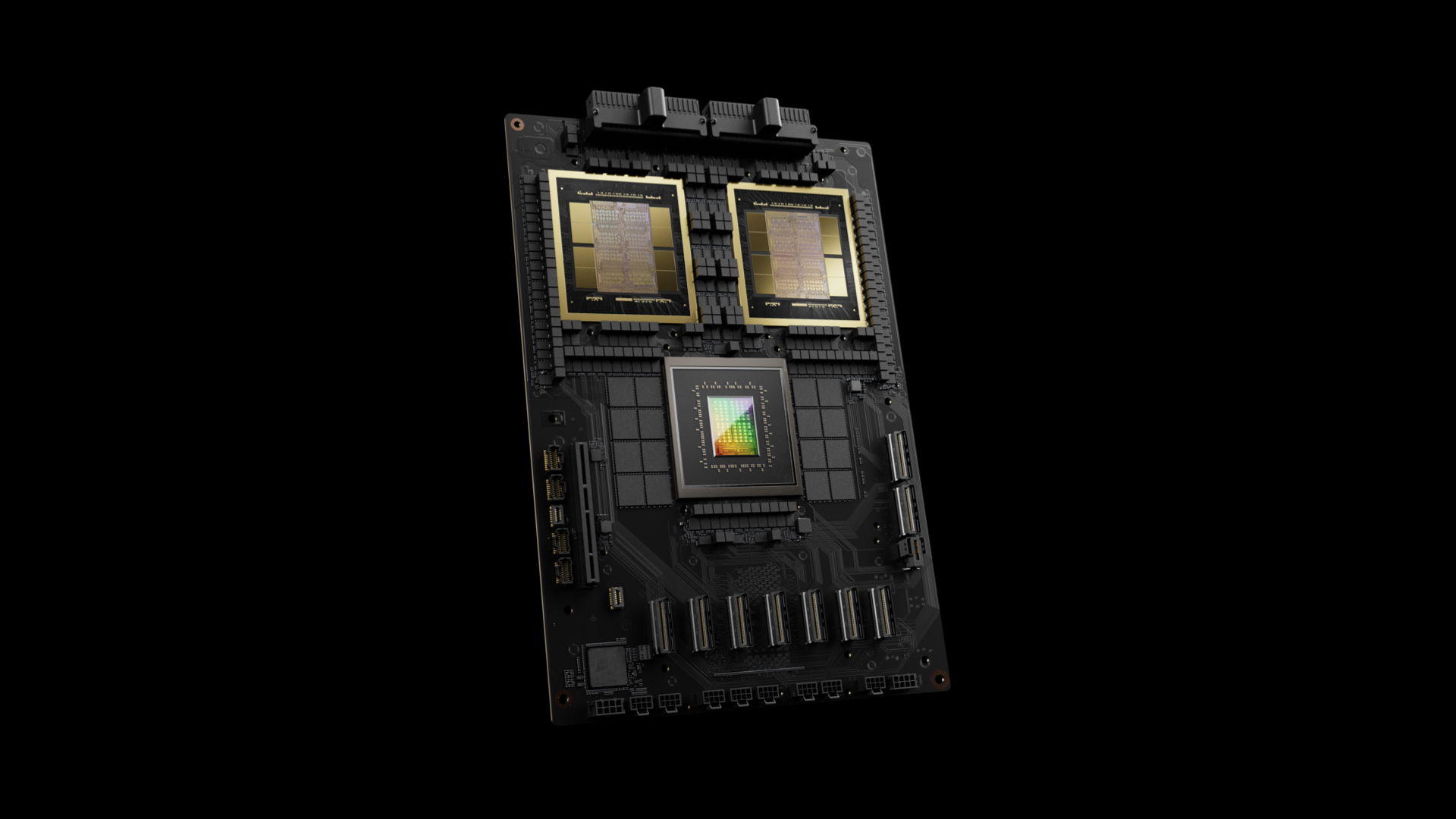

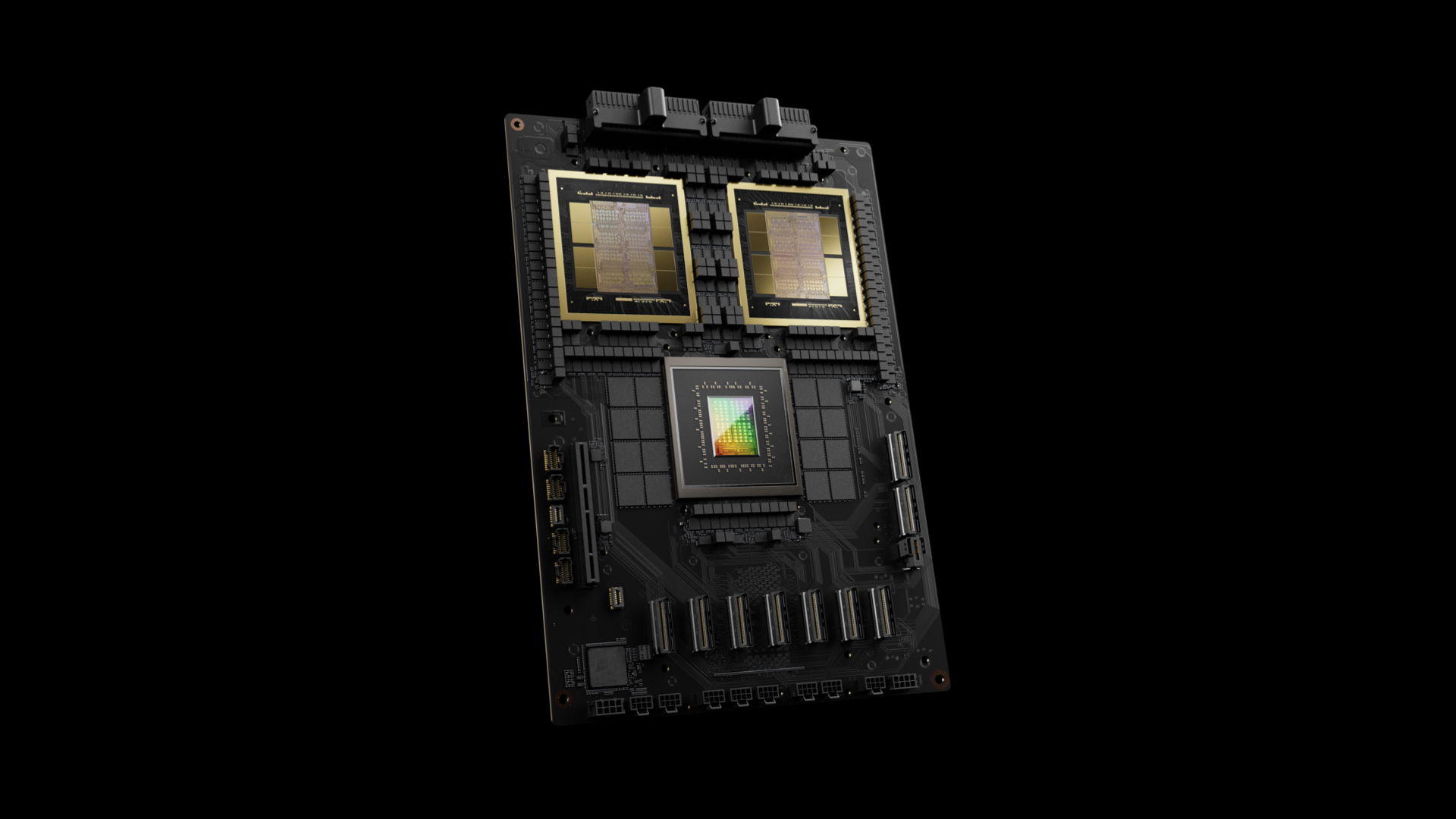

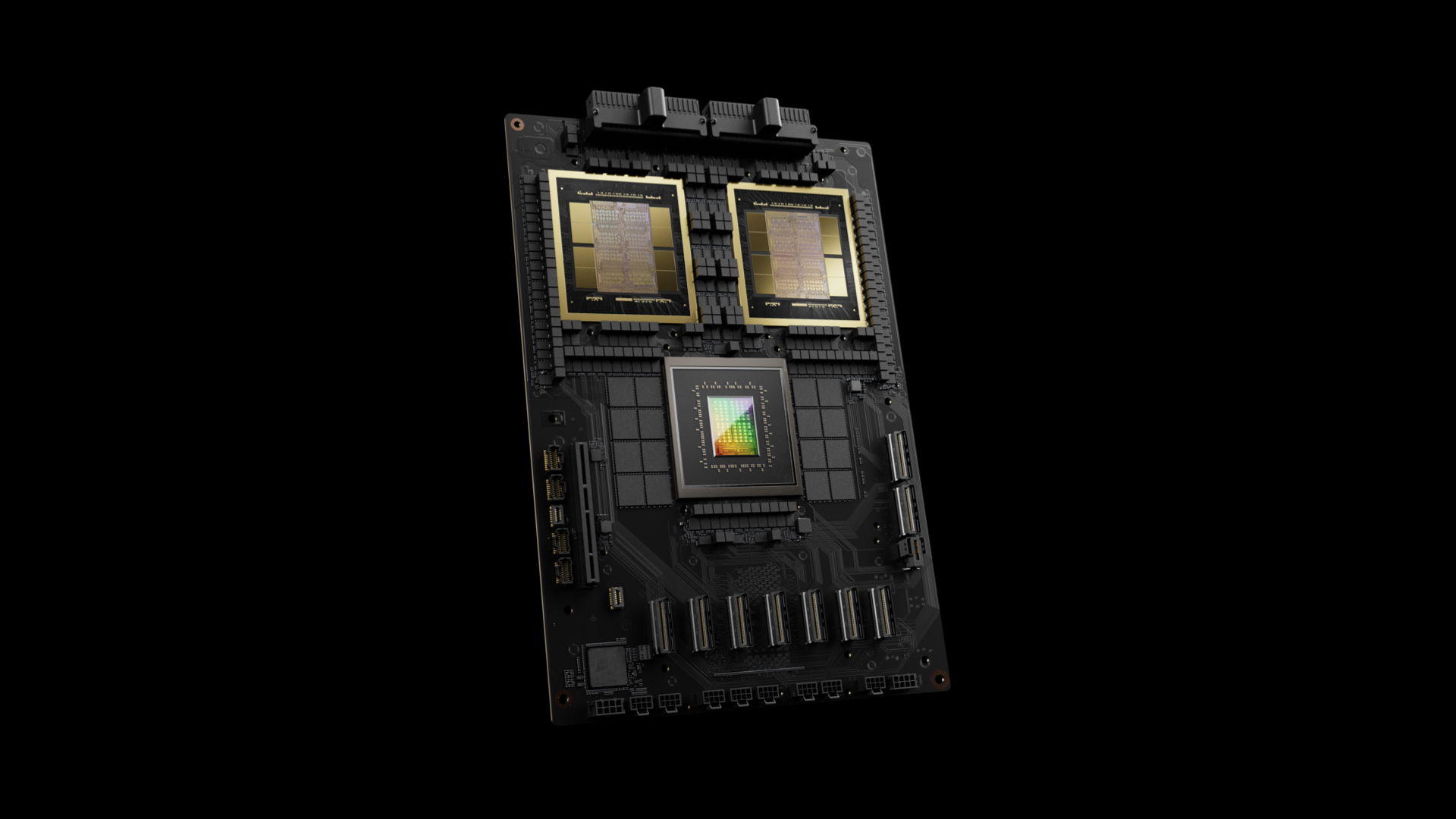

According to The Information, the problem has to do with the processor die that connects two Blackwell GPUs on a single Nvidia GB200 Superchip (pictured), which is the first product set to feature the new GPUs. The problem was reportedly identified by Taiwan Semiconductor Manufacturing Co., which mass-produces the chips on behalf of Nvidia. The discovery has apparently forced Nvidia to revise the design of the die, and it will need a few months to conduct production tests with TSMC before it can start mass-producing the chips as planned.

If true, the delay is expected to cause significant disruption for Nvidia’s customers, many of which have plans to get new AI data centers up and running early next year. Google, for example, is believed to have placed orders for more than 400,000 of the GB200 chips, in a deal valued at more than $10 billion. Meta has also placed a similar order, while Microsoft wants to have 55,000 to 65,000 GB200 chips ready for OpenAI by the first quarter of next year.

To make up for the delay, Nvidia is considering producing a single-GPU version of the Blackwell chip, so it can fulfill some of its first orders.

For Nvidia, it makes sense to delay the launch of the Blackwell GPUs, rather than ship out potentially faulty products. Such a move is not without precedent in the industry. Just last month, AMD announced it will be pushing back the launch of its Ryzen 9000 central processing units due to a simple typo. A delay is better than a catastrophic failure – such as what appears to have happened with Intel Corp.’s 13th and 14th-generation Core processors.

The likely cost of Nvidia’s Blackwell GPUs makes it even more vital that the company gets the product right. It’s said that the AI GB200 Grace Blackwell superchips will cost up to $70,000 apiece, while a complete server rack costs more than $3 million. Nvidia is reportedly expecting to sell between 60,000 and 70,000 complete servers, so any flaws like the one found in Intel’s chips would be hugely expensive and damaging to the company’s reputation.

In any case, Nvidia can probably afford the delay. In the AI chip market, the company is the undisputed leader, with many analysts saying it commands as much as 90% of global AI chip sales.

Although competitors such as AMD and Intel have developed their own AI chips, they have yet to gain any significant traction in the market.

Holger Mueller of Constellation Research Inc. said it was almost inevitable that the AI industry would face some delays, as the furious yearly development pace outlined by Nvidia CEO Jensen Huang means there is really no buffer in the event of any production problems, such as the one that appears to have occurred now.

“For cloud providers, this will cause headaches in terms of delayed revenue,” the analyst said.

But it will be the AI companies, rather than the cloud companies buying the GB200 chips, that suffer the most, as they’re the end users, Mueller said. He pointed out that many of them had been counting on the availability of the Blackwell chips later this year when plotting their product roadmaps and development strategies. With Blackwell potentially not arriving until early 2025, the delay is going to cause a lot of setbacks to a lot of plans, the analyst believes.

“Overall the pace of generative AI development may hit a plateau for a while, but this can be good news for enterprises as it means they’ll get a breather and the opportunity to catch up and think about where the technology can really make a difference,” Mueller said. “The real winner may actually be Google, which runs all of its native AI workloads on its own TPUs. It might give Gemini a chance to get ahead.”

In response to The Information’s report, a spokesperson for Nvidia said, “Hopper demand is very strong, broad Blackwell sampling has started, and production is on track to ramp in the second half.”

The delay is the second setback to hit Nvidia in recent days. Last week, it was revealed that the chipmaker is the subject of two investigations by the U.S. Department of Justice over its AI practices. One of the probes is said to be looking at whether Nvidia’s $700 million acquisition of the Israeli AI startup Run:AI Inc. might have breached antitrust laws, while the second is looking into claims that it unfairly pressured cloud computing companies to buy its chips.
