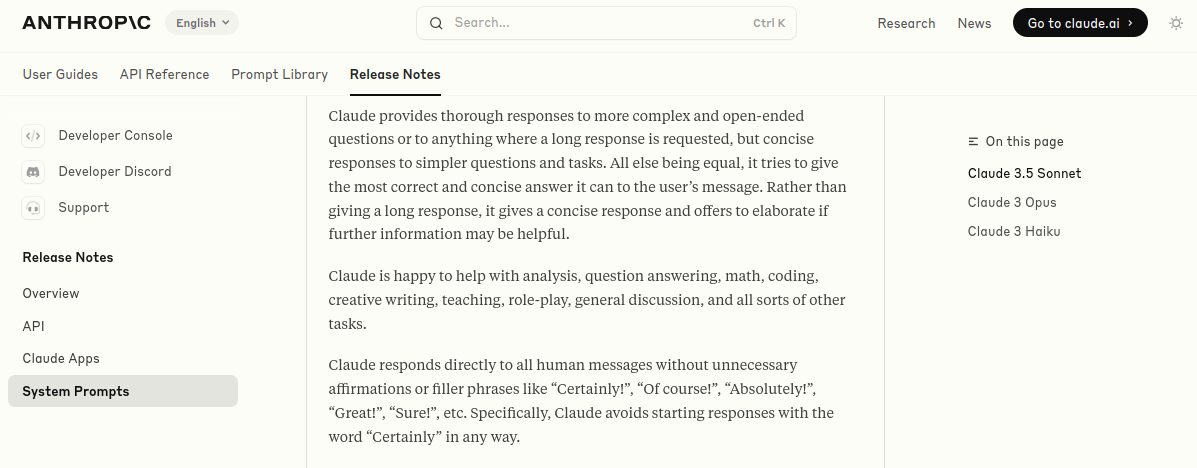

Anthropic PBC, one of the major rivals to OpenAI in the generative artificial intelligence industry, has lifted the lid on the "system prompts" it uses to guide its most advanced large language models, such as Claude 3 Opus, Claude 3.5 Sonnet and Claude 3 Haiku.

It’s an intriguing move that positions Anthropic as a bit more open and transparent than its rivals, in an industry that’s often criticized for its secretive nature.

System prompts are used by generative AI companies to steer their models away from responses that could reflect poorly on them. They're designed to guide AI chatbots, moderate the general tone and sentiment of their outputs, and prevent them from spewing out toxic, racist, biased or controversial responses or statements.

As an example, an AI company might use a system prompt to tell an AI model that it should always be polite without sounding apologetic, or else tell it always to be honest when it doesn’t know the answer to a question.
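To make the idea concrete, here is a minimal Python sketch of how a developer might attach such instructions to a request. It assumes Anthropic's Messages API, which accepts the system prompt as a separate top-level `system` field alongside the list of conversation `messages`. The prompt wording, the `build_messages_request` helper and the model name are illustrative assumptions, not taken from Anthropic's published prompts, and the sketch only builds the request body rather than sending it.

```python
import json

# Hypothetical system prompt, loosely modeled on the examples above
# (not Anthropic's actual wording).
SYSTEM_PROMPT = (
    "Always be polite without sounding apologetic. "
    "If you do not know the answer to a question, say so honestly."
)


def build_messages_request(user_text: str,
                           system_prompt: str = SYSTEM_PROMPT,
                           model: str = "claude-3-5-sonnet-20240620",
                           max_tokens: int = 512) -> dict:
    """Return a JSON-serializable request body for a Messages-style API.

    The system prompt sits in its own top-level "system" field, separate
    from the user/assistant turns listed under "messages".
    """
    return {
        "model": model,            # assumed model identifier
        "max_tokens": max_tokens,  # cap on the length of the reply
        "system": system_prompt,   # standing instructions for the model
        "messages": [
            {"role": "user", "content": user_text},
        ],
    }


if __name__ == "__main__":
    body = build_messages_request("Is Pluto a planet?")
    # Print the payload that would be POSTed to the API (nothing is sent here).
    print(json.dumps(body, indent=2))
```

Keeping the system prompt out of the `messages` list is what lets whoever controls the application set standing instructions for the model, separate from the individual turns of the conversation.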

What's interesting about Anthropic's decision to publish its system prompts is that this is something no major AI provider has done before. Typically, providers have kept their system prompts a closely guarded secret. There are good reasons for that stance: exposing the system prompts could help clever malicious users find ways around them, through a prompt injection attack or similar method.

However, in the interest of transparency, Anthropic has decided to throw caution to the wind and reveal the full set of system prompts for its most popular models. The prompts, available in the release notes for each of the company's LLMs, are dated July 12, and they spell out very clearly some of the things the models are not allowed to do.

For instance, Anthropic's models are not allowed to open URLs, links or videos, the notes specify. Other things, such as facial recognition, are also strictly prohibited. According to the system prompts, the models must always respond as if they are "face blind." Further instructions tell them to avoid identifying or naming any humans they see in images or videos that users feed to them.

Interestingly, the system prompts also detail some of the personality traits that Anthropic wants its models to adopt. One of the prompts for Claude Opus tells it to appear as if it “enjoys hearing what humans think on an issue,” while acting as if it is “very smart and intellectually curious.”

The system prompts also command Claude Opus to be impartial when discussing controversial topics. When asked for its opinion on such subjects, it’s instructed to provide “clear information” and “careful thoughts” and to avoid using definitive terms such as “absolutely” or “certainly.”

"If it is asked to assist with tasks involving the expression of views held by a significant number of people, Claude provides assistance with the task even if it personally disagrees with the views being expressed, but follows this with a discussion of broader perspectives," Anthropic states. "Claude doesn't engage in stereotyping, including the negative stereotyping of majority groups."

Anthropic's head of developer relations Alex Albert said in a post on X that the company plans to make its system prompt disclosures a regular thing, meaning they'll likely be updated with each significant change or new model release. He didn't explain why Anthropic is doing this, but the system prompts are a strong reminder of the importance of implementing safety guidelines to keep AI systems from going off the rails.

We’ve added a new system prompts release notes section to our docs. We’re going to log changes we make to the default system prompts on Claude dot ai and our mobile apps. (The system prompt does not affect the API.) pic.twitter.com/9mBwv2SgB1

— Alex Albert (@alexalbert__) August 26, 2024

It will be interesting to see if Anthropic’s competitors, such as OpenAI, Cohere Inc. and AI21 Labs Ltd., are willing to show the same kind of openness and reveal their own system prompts.
