AI

Nvidia Corp. today announced the release of new tools for developers working on artificial intelligence-enabled robots, including humanoids, that enable faster development cycles using simulation, blueprints and modeling.

The company announced the tools this week at the annual Conference on Robot Learning in Munich, Germany, a gathering focused on the intersection of robotics and machine learning.

The announcements included the general availability of Nvidia Isaac Lab, a robot learning framework; six new humanoid robot learning workflows for Project GR00T to enable AI robot brain development; and new developer tools for video processing.

Seeing and understanding the world is critical to robotics development. Video from cameras must be broken down into tokens so that AI models can process it. Nvidia announced the general availability of the open-source Cosmos tokenizer, which produces high-quality tokens at exceptionally high compression rates and runs 12x faster than current tokenizers. It pairs with NeMo Curator, Nvidia's data curation tool, to optimize and understand video inputs.
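To make "compression rate" more concrete, here is a minimal sketch of the arithmetic for a hypothetical video tokenizer that downsamples time and each spatial axis by fixed factors. The function names, clip size and downsampling factors are illustrative assumptions, not published Cosmos tokenizer settings or its API.

```python
import math


def token_grid(frames, height, width, t_factor, s_factor):
    """Return the (time, height, width) token grid for a video clip.

    Assumes a hypothetical tokenizer in which each token summarizes a
    t_factor x s_factor x s_factor block of pixels; partial blocks round up.
    """
    return (
        math.ceil(frames / t_factor),
        math.ceil(height / s_factor),
        math.ceil(width / s_factor),
    )


def compression_ratio(frames, height, width, t_factor, s_factor):
    """Ratio of input pixel positions to output token positions (channels ignored)."""
    t, h, w = token_grid(frames, height, width, t_factor, s_factor)
    return (frames * height * width) / (t * h * w)


if __name__ == "__main__":
    # A 32-frame, 512x512 clip with illustrative 8x temporal and 16x spatial factors
    print(token_grid(32, 512, 512, t_factor=8, s_factor=16))         # (4, 32, 32)
    print(compression_ratio(32, 512, 512, t_factor=8, s_factor=16))  # 2048.0
```

Roughly 8.4 million pixel positions shrink to 4,096 token positions here, which is why higher compression lets a world model see longer video horizons for the same compute.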

This also allows developers to build better “world models,” or AI representations of the world that can predict how objects and environments will respond when a robot performs actions.

For example, what will happen when a robot gripper closes on a banana? A ripe banana is soft, so the gripper cannot close too quickly or too hard; otherwise it will crush the fruit, deforming it and creating a mess. A piece of paper, by contrast, must be grasped differently. Predicting outcomes in situations like these depends on high-quality encoding and decoding of video data.
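The banana example is essentially a planning problem: a world model lets the robot "imagine" the result of each candidate action before committing to one. The toy sketch below shows that loop. `predict_deformation` is a hand-written, hypothetical stand-in for a learned world model, and the stiffness values, force candidates and damage threshold are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class GraspObject:
    name: str
    stiffness: float  # hypothetical units: force needed per mm of deformation


def predict_deformation(obj, force):
    # Toy stand-in for a learned world model: softer objects deform more
    return force / obj.stiffness


def choose_grip_force(obj, candidates, max_deformation_mm, min_force):
    """Pick the strongest candidate force whose predicted deformation stays safe.

    Returns None if no candidate both holds the object (>= min_force) and
    keeps the predicted deformation within max_deformation_mm.
    """
    safe = [
        f for f in candidates
        if f >= min_force and predict_deformation(obj, f) <= max_deformation_mm
    ]
    return max(safe) if safe else None


if __name__ == "__main__":
    forces = [1, 2, 4, 8, 16]
    banana = GraspObject("banana", stiffness=2.0)
    block = GraspObject("wooden block", stiffness=20.0)
    print(choose_grip_force(banana, forces, max_deformation_mm=3.0, min_force=2.0))  # 4
    print(choose_grip_force(block, forces, max_deformation_mm=3.0, min_force=2.0))   # 16
```

The same query-then-choose pattern applies whatever the world model is; a real system would predict future video frames rather than a single deformation number.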

Eric Jang, vice president of AI at humanoid robot startup 1X Technologies, explained that the Cosmos tokenizer helps his company achieve high data compression while still retaining extremely high visual quality. “This allows us to train world models with long horizon video generation in an even more compute-efficient manner,” he said.

Not all robot AI brains can be trained in the real world, so Nvidia released Isaac Lab, an open-source robot learning framework built on Omniverse, a digital twin simulation platform that allows developers to test and run robots in virtual worlds.

Omniverse is a real-time 3D graphics collaboration and simulation platform that allows artists, developers and enterprises to build realistic 3D models and scenes of factories, cities and other spaces with physically accurate simulation. That makes it a powerful tool for simulating virtual environments to train robots.

Developers can use Isaac Lab to train and refine robot policies at scale and to evaluate their performance and safety. Isaac Lab is designed to work with any learning framework and any robot embodiment, including arms, humanoids, quadrupeds and swarms.
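Training and evaluating policies "at scale" in simulation usually means running the same policy across many environment copies with different conditions and aggregating the results. The sketch below shows that pattern in plain Python with a made-up one-dimensional reaching task; it does not use Isaac Lab's API, and the task, dynamics, gains and speed limit are illustrative assumptions.

```python
import random
from statistics import mean


def run_episode(gain, target, damping, steps=100, dt=0.05, speed_limit=2.0):
    """Roll out a proportional-control policy in a toy 1D reaching task.

    Returns (final_error, violated_speed_limit), where the speed limit
    acts as a simple stand-in for a safety constraint.
    """
    pos, vel = 0.0, 0.0
    violated = False
    for _ in range(steps):
        action = gain * (target - pos)          # policy: push toward the target
        vel += (action - damping * vel) * dt    # simple damped dynamics
        pos += vel * dt
        if abs(vel) > speed_limit:
            violated = True
    return abs(target - pos), violated


def evaluate_policy(gain, num_envs=1000, seed=0):
    """Run one episode per randomized environment copy and aggregate the results."""
    rng = random.Random(seed)
    errors, violations = [], []
    for _ in range(num_envs):
        target = rng.uniform(0.5, 2.0)   # randomized goal position
        damping = rng.uniform(0.5, 1.5)  # randomized physics parameter
        err, violated = run_episode(gain, target, damping)
        errors.append(err)
        violations.append(1.0 if violated else 0.0)
    return {"mean_error": mean(errors), "violation_rate": mean(violations)}


if __name__ == "__main__":
    # Compare candidate policies on accuracy versus safety violations
    for gain in (0.5, 2.0, 8.0):
        print(gain, evaluate_policy(gain))
```

Seeding the random generator makes each evaluation reproducible, so two policies can be compared on exactly the same set of randomized environments.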

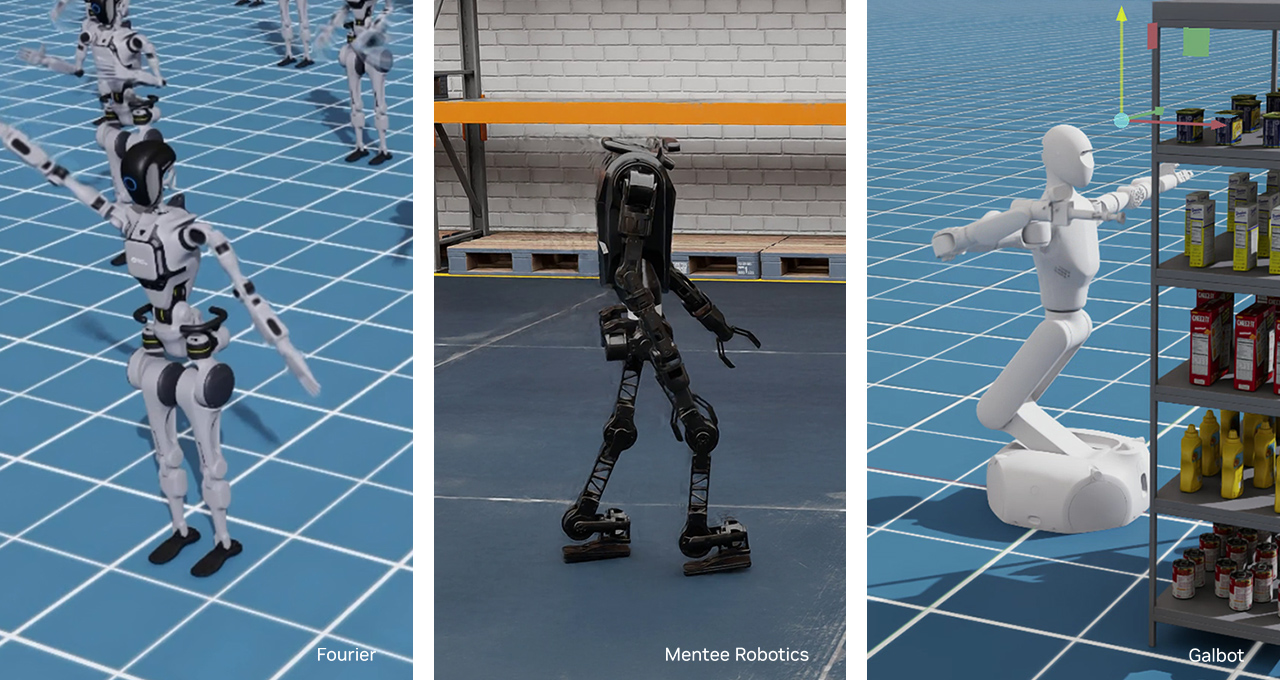

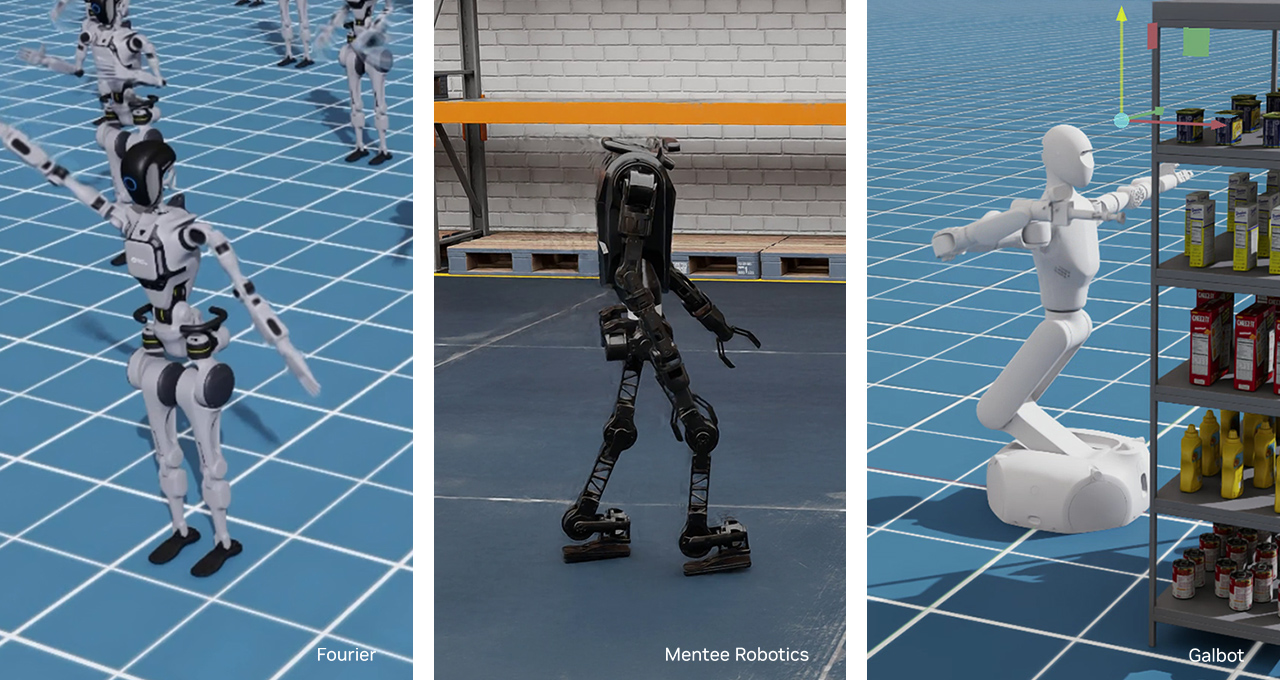

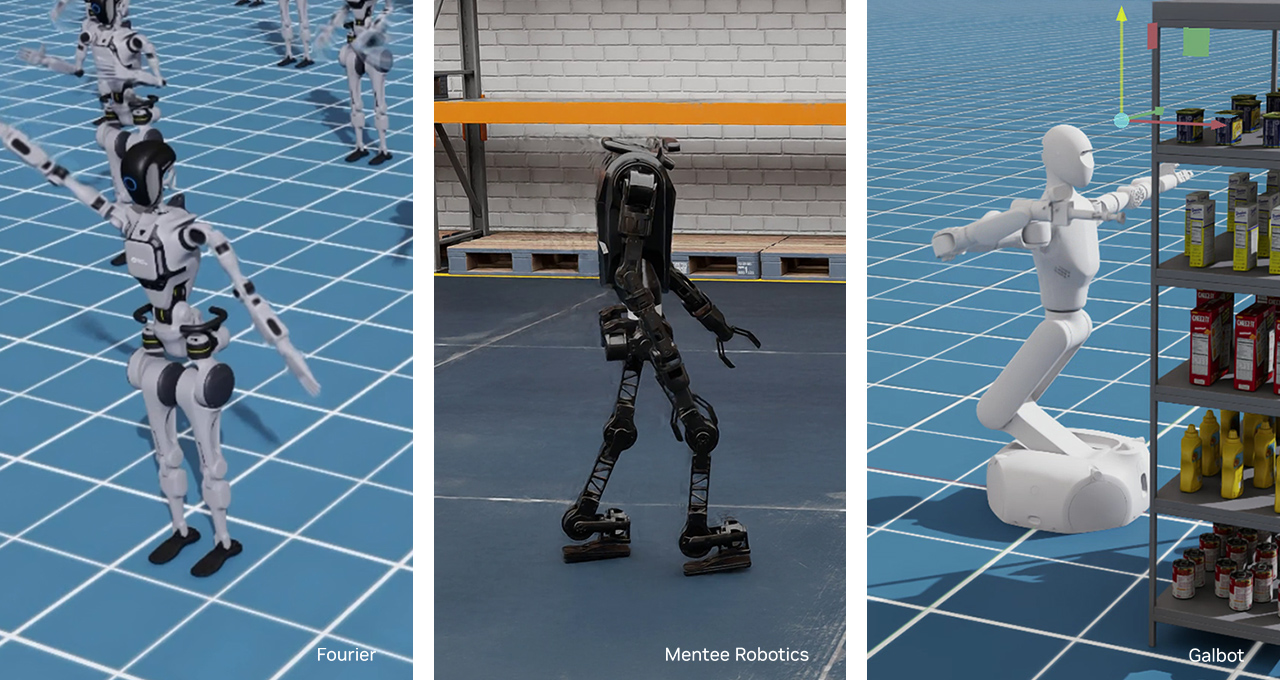

Nvidia said many commercial robot makers and research groups around the world have adopted Isaac Lab into their workflows, including Agility Robotics, Boston Dynamics, 1X, Galbot, Fourier, Mentee Robotics and Berkeley Humanoid.

Building advanced humanoid robots is a tough challenge because what comes easily to humans, such as walking, perceiving and taking action, requires tremendous amounts of hardware engineering, AI training and AI compute to come together before robots can perform even seemingly simple tasks.

Project GR00T is an Nvidia initiative that provides developers with AI foundation models for general-purpose humanoid robots, along with software libraries and data pipelines, to help them prototype rapidly and build faster.

To give developers a leg up in building advanced humanoids, Nvidia announced six new Project GR00T workflow blueprints for adding new capabilities to their robots.

GR00T-Gen allows developers to create realistic simulated environments for training robots to move around, manipulate objects and perform other tasks. It uses large language models and 3D generative AI models to produce visually diverse, randomized scenes that make training environments more robust.
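Generating "visually diverse, randomized scenes" is a form of domain randomization. The minimal sketch below covers only the randomization step, not the language or 3D generative models GR00T-Gen uses: it samples a fresh scene configuration per training episode from a seeded generator so runs are reproducible. All parameter names and ranges are hypothetical.

```python
import random
from dataclasses import dataclass

TEXTURES = ("concrete", "wood", "tile", "carpet")  # hypothetical texture set


@dataclass(frozen=True)
class SceneConfig:
    floor_texture: str
    light_intensity: float   # hypothetical units (lux)
    num_objects: int
    object_positions: tuple  # (x, y) positions in meters


def sample_scene(rng):
    """Draw one randomized scene description."""
    n = rng.randint(3, 8)  # inclusive on both ends
    positions = tuple(
        (round(rng.uniform(-2.0, 2.0), 2), round(rng.uniform(-2.0, 2.0), 2))
        for _ in range(n)
    )
    return SceneConfig(
        floor_texture=rng.choice(TEXTURES),
        light_intensity=rng.uniform(200.0, 1500.0),
        num_objects=n,
        object_positions=positions,
    )


def generate_scenes(count, seed=42):
    """Produce a reproducible batch of randomized scenes for training."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(count)]


if __name__ == "__main__":
    for scene in generate_scenes(3):
        print(scene)
```

Varying textures, lighting and object layout this way discourages a policy from overfitting to one particular look of the training world.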

GR00T-Mimic allows robots to learn from human teachers. Using this workflow, human demonstrators can teleoperate robots and perform actions the way people would, such as walking around a warehouse, pulling boxes from shelves and placing them on carts. The idea is for the robot to mimic the same actions in the same environment. Nvidia said the approach captures a limited number of human demonstrations in the physical world through extended reality devices, such as Apple Vision Pro, and then scales up that motion data to help the robots produce more organic motion on their own.
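One simple way to picture "scaling up" a few demonstrations is to re-express a recorded path relative to the manipulated object and replay it for new object positions. The sketch below is a deliberately simplified, translation-only illustration of that idea, not Nvidia's GR00T-Mimic implementation; the function names, 2D waypoints and perturbation range are assumptions.

```python
import random


def retarget_demo(demo, old_object_xy, new_object_xy):
    """Shift a recorded end-effector path so it follows a moved object.

    demo: list of (x, y) waypoints recorded during teleoperation.
    Translation-only retargeting is a simplifying assumption; real systems
    also handle rotation, segment demos by subtask and check feasibility.
    """
    dx = new_object_xy[0] - old_object_xy[0]
    dy = new_object_xy[1] - old_object_xy[1]
    return [(x + dx, y + dy) for x, y in demo]


def augment_demos(demos, num_new, seed=0, spread=0.3):
    """Generate num_new synthetic (trajectory, object_xy) pairs from a few real ones."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(num_new):
        demo, obj_xy = rng.choice(demos)
        new_xy = (
            obj_xy[0] + rng.uniform(-spread, spread),
            obj_xy[1] + rng.uniform(-spread, spread),
        )
        synthetic.append((retarget_demo(demo, obj_xy, new_xy), new_xy))
    return synthetic


if __name__ == "__main__":
    # One human demo: reach from the origin to a box at (1.0, 1.0)
    human_demos = [([(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)], (1.0, 1.0))]
    for path, box in augment_demos(human_demos, num_new=3):
        print(box, path)
```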

GR00T-Dexterity and GR00T-Control provide a suite of models and policies for fine-grained dexterous manipulation and broad body control for humanoid robots. Dexterity helps developers work with robots that have highly dexterous hands, with actuators and knuckles, and handle challenges such as missed grasps, grip force and other grasping motions. Control helps with whole-body motion planning for walking, moving limbs and performing tasks.

GR00T-Mobility provides developers with a set of models that help humanoid robots walk and navigate around obstacles. It takes a learning-based approach designed to generalize quickly to new environments.

Finally, GR00T-Perception adds advanced software libraries and foundation models for human-robot interaction that help robots “remember” long histories of events. To do this, Nvidia added the aptly named ReMEmbR to Perception. ReMEmbR gives the robot a memory that personalizes human interactions and provides contextual and spatial awareness for better perception, cognition and adaptability.
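To illustrate what a long-horizon robot memory might store and retrieve, here is a minimal sketch that logs timestamped, located text descriptions of what the robot saw and answers queries by word overlap. It is a simplified, hypothetical stand-in, not ReMEmbR's implementation; a production system would more likely use learned embeddings and a vector database rather than whitespace-split keyword matching.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryEvent:
    timestamp: float  # seconds since the robot started
    position: tuple   # (x, y) in meters where the observation was made
    caption: str      # text description of what the robot saw


@dataclass
class RobotMemory:
    events: list = field(default_factory=list)

    def add(self, timestamp, position, caption):
        self.events.append(MemoryEvent(timestamp, position, caption))

    def recall(self, query, top_k=3, since=None):
        """Return up to top_k events whose captions share the most words with the query.

        Events older than `since` (if given) are skipped; ties are broken
        in favor of the most recent event.
        """
        query_words = set(query.lower().split())
        scored = []
        for event in self.events:
            if since is not None and event.timestamp < since:
                continue
            overlap = len(query_words & set(event.caption.lower().split()))
            if overlap > 0:
                scored.append((overlap, event.timestamp, event))
        scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
        return [event for _, _, event in scored[:top_k]]


if __name__ == "__main__":
    memory = RobotMemory()
    memory.add(10.0, (1.0, 2.0), "a red mug on the kitchen table")
    memory.add(50.0, (4.0, 0.5), "a person left keys near the door")
    memory.add(90.0, (1.2, 2.1), "the red mug moved to the sink")
    for event in memory.recall("where is the red mug"):
        print(event.timestamp, event.position, event.caption)
```

Keeping position and time alongside each description is what lets a robot answer questions like "where did I last see the mug?" rather than only "have I seen a mug?"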
