INFRA

Microsoft Corp. wants to be the infrastructure foundation for the next generation of artificial intelligence, catering to everyone from agile startups to multinational corporations, and to do this it’s introducing some significant advancements and upgrades to the Azure Cloud platform.

The new infrastructure updates announced at Microsoft Ignite 2024 today are all about offering customers more choices in terms of power, performance and cost efficiency. They cover advancements in everything from AI accelerators, computer chips and liquid cooling to data integrations and flexible cloud deployments.

Perhaps the biggest news is the imminent arrival of Nvidia Corp.’s latest graphics processing units, the eagerly anticipated Blackwell GPUs. Although reports elsewhere claim Nvidia is still having issues with its newest accelerators, Microsoft Vice President of Azure Infrastructure Marketing Omar Khan revealed that the company has already started co-validating the new chips ahead of the launch of a new virtual machine series called the Azure ND GB200 v6.

The new series will combine the Nvidia GB200 NVL72 rack-scale design with advanced Quantum InfiniBand networking infrastructure, which makes it possible to link “tens of thousands” of Blackwell GPUs for AI supercomputing, Khan said. However, he declined to give a launch date for the new offering.

On the other hand, customers will be able to access various new silicon options right now, including new versions of the Azure Maia AI accelerators and the Azure Cobalt central processing units. Another new option is Azure Boost DPU (below), which is Microsoft’s first in-house data processing unit, designed to provide high performance with low energy consumption for data-centric workloads. According to Khan, it absorbs multiple components of a traditional server into a single, dedicated silicon chip, and makes it possible to run cloud storage workloads with three times less power and up to four times the performance.

The company is also introducing a custom-made security chip, called Azure Integrated HSM, a dedicated hardware security module that puts encryption and key management in the hands of customers. Khan said that from next year, Microsoft plans to install Azure Integrated HSM in every new server it deploys in its data centers globally, increasing security for both confidential and general-purpose compute workloads.

Coming soon, there’s a new VM series called the Azure HBv5, which will be powered by Advanced Micro Devices Inc.’s new EPYC 9V64H processors. It’s designed for high-performance computing workloads and is said to be eight times faster than the latest bare-metal and cloud alternatives, and as much as 35 times faster than on-premises servers. Khan said this is because the VMs feature seven terabytes per second of memory bandwidth, delivered by high-bandwidth memory. The series will launch sometime next year, but customers can sign up for preview access now.

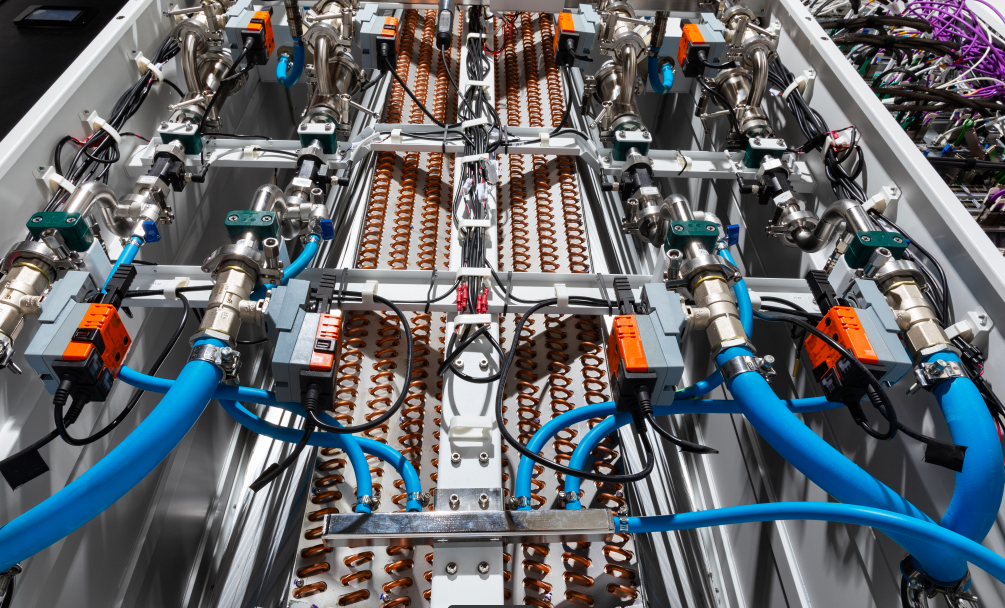

Besides chips, Microsoft has also been focused on improving the technology needed to keep those processors running cool. Its efforts there have paid off with the launch of a next-generation liquid cooling “sidekick rack,” or heat exchanger unit (pictured below) for AI systems. It’s designed to be retrofitted into the company’s existing data centers, and can help to cool off GPUs and other AI accelerators, including those from Nvidia and Microsoft’s in-house Azure Maia chips.

Cooling chips down helps save on energy, and so does more efficient power delivery, which has been another area of focus for the company. Khan explained that Microsoft has collaborated with Meta Platforms Inc. on a new, disaggregated power rack that can help support the addition of more AI accelerators into its existing data centers.

According to Khan, each of the new racks delivers 400 volts of DC power to support up to 35% more AI accelerators per server rack. The racks also enable dynamic power adjustments, so power delivery can scale up or down as necessary to support different kinds of AI workloads. The new racks are being open-sourced via the Open Compute Project, so everyone can benefit.

In other announcements, Khan said the company is improving its Oracle Database@Azure service, launched earlier this year, with support for Microsoft Purview, which is a new offering that can help customers to better manage and secure their Oracle data and enhance data governance and compliance.

In addition, Microsoft has been working with Oracle to support another new offering. The tongue-twisting Oracle Exadata Database Service On Exascale Infrastructure on Oracle Database@Azure is said to deliver hyper-elastic scaling with pay-per-use economics for customers that want more flexible Oracle database options. That service will become available in more regions too, as Microsoft is expanding availability of Oracle Database@Azure to a total of nine regions globally.

For customers with hybrid cloud deployment requirements, there’s a new cloud-connected infrastructure offering called Azure Local, which is said to bring the capabilities of Azure Stack into a single, unified platform. Azure Stack is the on-premises version of the Azure Cloud, and enables customers to access many of Azure’s cloud features from within their own data centers. With Azure Local, customers can run containers and Azure Virtual Desktop on validated servers from companies including Dell Technologies Inc., Hewlett Packard Enterprise Co. and Lenovo Group Ltd., among others, helping them to meet their compliance requirements.

Windows Server 2025, which became generally available earlier this year, is getting some new features too, including simpler upgrades, enhanced security and support for AI and machine learning. There’s also a new “hotpatching” subscription offering in preview that makes it possible to install new updates with fewer restarts, saving organizations time.

Finally, Khan said Microsoft is making SQL Server 2025 available in preview, giving customers access to an enhanced database offering that leverages Azure Arc to provide cloud agility in any location. The new release is all about supporting AI workloads, with integrated AI application development tools and support for retrieval-augmented generation patterns and vector search capabilities.
